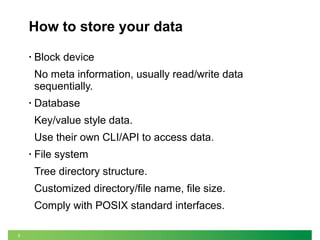

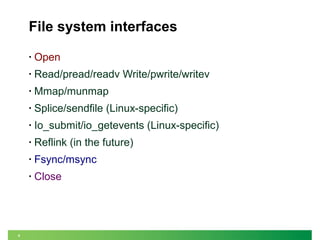

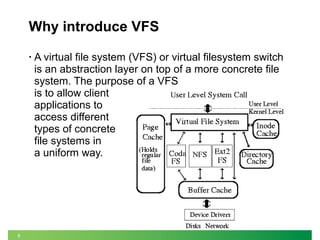

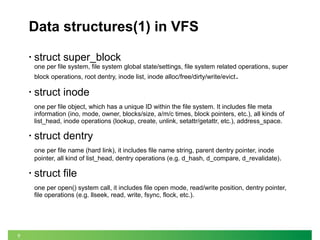

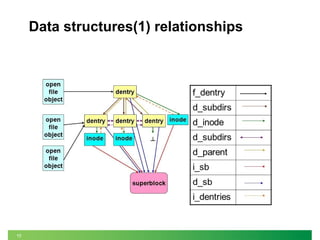

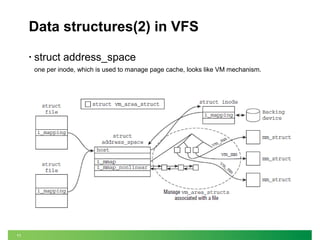

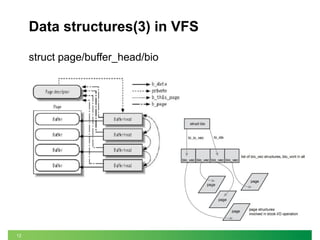

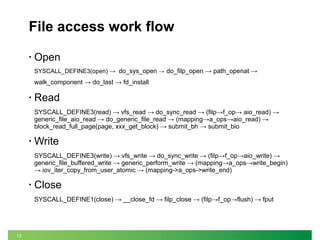

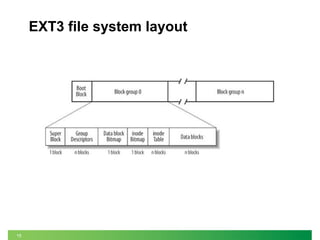

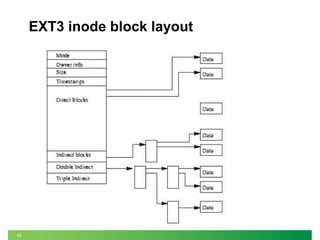

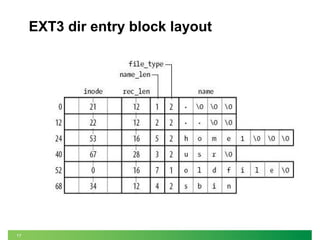

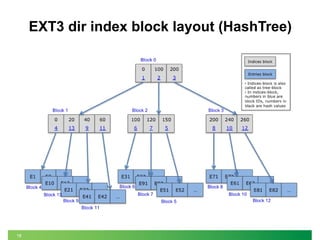

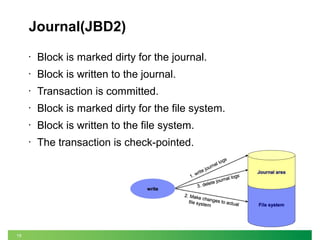

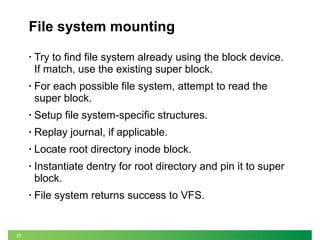

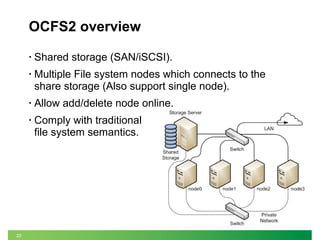

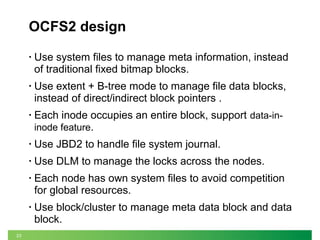

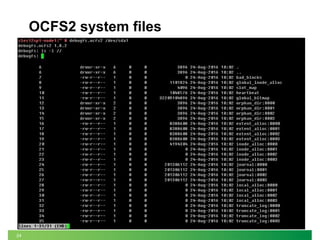

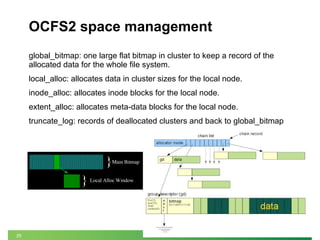

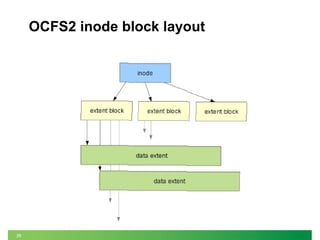

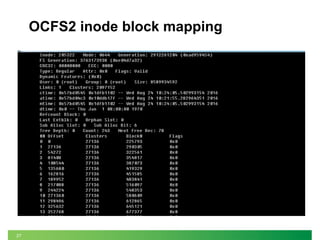

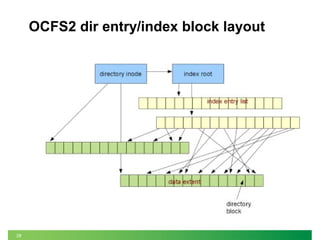

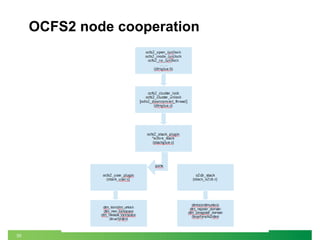

This document introduces file systems in general and the OCFS2 (Oracle Cluster File System 2) file system in particular. It begins with the basic ways data can be stored: on raw block devices, in databases, and in file systems. It then covers file system interfaces, I/O models, and the main ways file systems are classified. Next, it gives an overview of the virtual file system (VFS) layer and its key data structures. Finally, it describes the EXT3 and OCFS2 file systems in detail, covering their on-disk layouts, journaling, mounting, and space management.