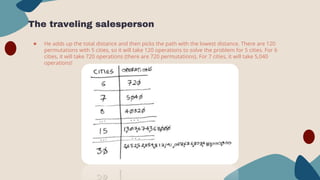

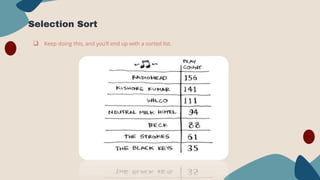

This document discusses algorithms and their analysis. It covers linear search, binary search, sorting algorithms, and time-complexity analysis using Big O, Big Theta, and Big Omega notation. Binary search, which requires sorted input, runs in logarithmic time, O(log n), while linear search takes linear time, O(n). Solving the traveling salesperson problem by brute force, that is, by checking every possible tour, takes factorial time, O(n!), which grows even faster than exponential time and makes the approach impractical for all but very small inputs.
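
To make the contrast between the two search strategies concrete, here is a minimal Python sketch. The function names `linear_search` and `binary_search` are illustrative choices, not taken from the document, and the binary version assumes its input is already sorted in ascending order.

```python
from typing import Optional, Sequence


def linear_search(items: Sequence[int], target: int) -> Optional[int]:
    """Scan left to right; up to n comparisons in the worst case, so O(n)."""
    for index, value in enumerate(items):
        if value == target:
            return index
    return None  # target is not present


def binary_search(sorted_items: Sequence[int], target: int) -> Optional[int]:
    """Halve the candidate range each step, so O(log n).

    Assumes sorted_items is sorted in ascending order.
    """
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return None  # target is not present
```

For a list of about a million sorted elements, the binary version needs roughly 20 comparisons in the worst case, whereas the linear version may need about a million.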