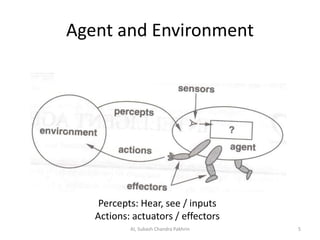

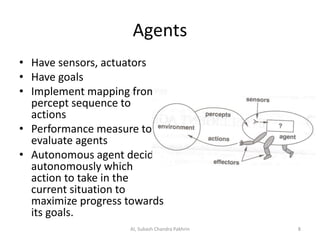

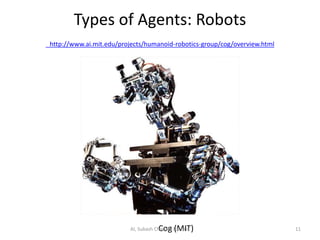

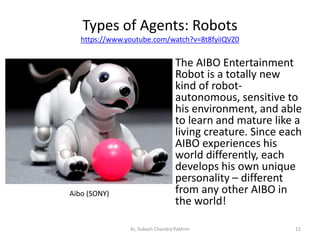

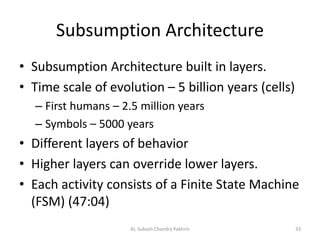

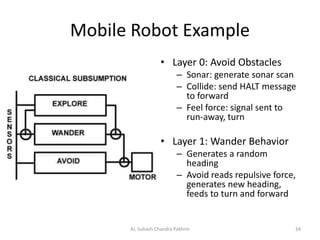

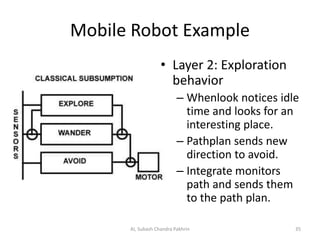

This document provides an overview of intelligent agents. It defines key terms such as agent, environment, percept, action, rational agent, and bounded rationality. It discusses the types of environment an agent can operate in and several agent architectures, including stimulus-response agents, state-based agents, and learning agents. It also gives examples of agents, such as robots, software agents, and expert systems, and describes how an agent pursues its goals by perceiving its environment through sensors and acting on it through effectors.
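The sensor/effector loop and the difference between a stimulus-response agent and a state-based agent can be illustrated with a minimal sketch. It assumes a hypothetical two-cell world ("A" and "B", each dirty or clean) with hypothetical actions `suck`, `left`, `right`, and `noop`. The class and function names are illustrative and do not come from the original slides. The stimulus-response agent maps each percept directly to an action. The state-based agent also remembers which cells it has seen clean, so it can stop once it believes the job is done.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical percept: (location, status), e.g. ("A", "dirty")
Percept = Tuple[str, str]


class Environment:
    """Hypothetical two-cell world used only for illustration."""

    def __init__(self, dirt: Dict[str, bool], location: str = "A"):
        self.dirt = dict(dirt)      # True means the cell is dirty
        self.location = location

    def percept(self) -> Percept:
        # Sensor: report the current cell and whether it is dirty
        status = "dirty" if self.dirt[self.location] else "clean"
        return (self.location, status)

    def execute(self, action: str) -> None:
        # Effector: change the world according to the chosen action
        if action == "suck":
            self.dirt[self.location] = False
        elif action == "right":
            self.location = "B"
        elif action == "left":
            self.location = "A"
        # "noop" leaves the world unchanged


def stimulus_response_agent(percept: Percept) -> str:
    # No memory: the action depends only on the current percept
    location, status = percept
    if status == "dirty":
        return "suck"
    return "right" if location == "A" else "left"


class StateBasedAgent:
    """Keeps internal state built from past percepts."""

    def __init__(self) -> None:
        # Cells observed clean so far (assumes dirt never reappears)
        self.known_clean = set()

    def __call__(self, percept: Percept) -> str:
        location, status = percept
        if status == "dirty":
            return "suck"
        self.known_clean.add(location)
        if self.known_clean >= {"A", "B"}:
            return "noop"           # believes every cell is clean
        return "right" if location == "A" else "left"


def run(agent: Callable[[Percept], str], env: Environment, steps: int) -> List[str]:
    # Percept -> action -> effect loop, repeated for a fixed number of steps
    actions = []
    for _ in range(steps):
        action = agent(env.percept())
        env.execute(action)
        actions.append(action)
    return actions
```

Starting with both cells dirty, the stimulus-response agent keeps moving between the cells indefinitely. Because of its internal state, the state-based agent switches to `noop` once it has seen both cells clean.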