This document summarizes several papers presented at CHI 2018 on the following topics:

1) Understanding the user experience of co-creating with AI, through a study of collaborative drawing.

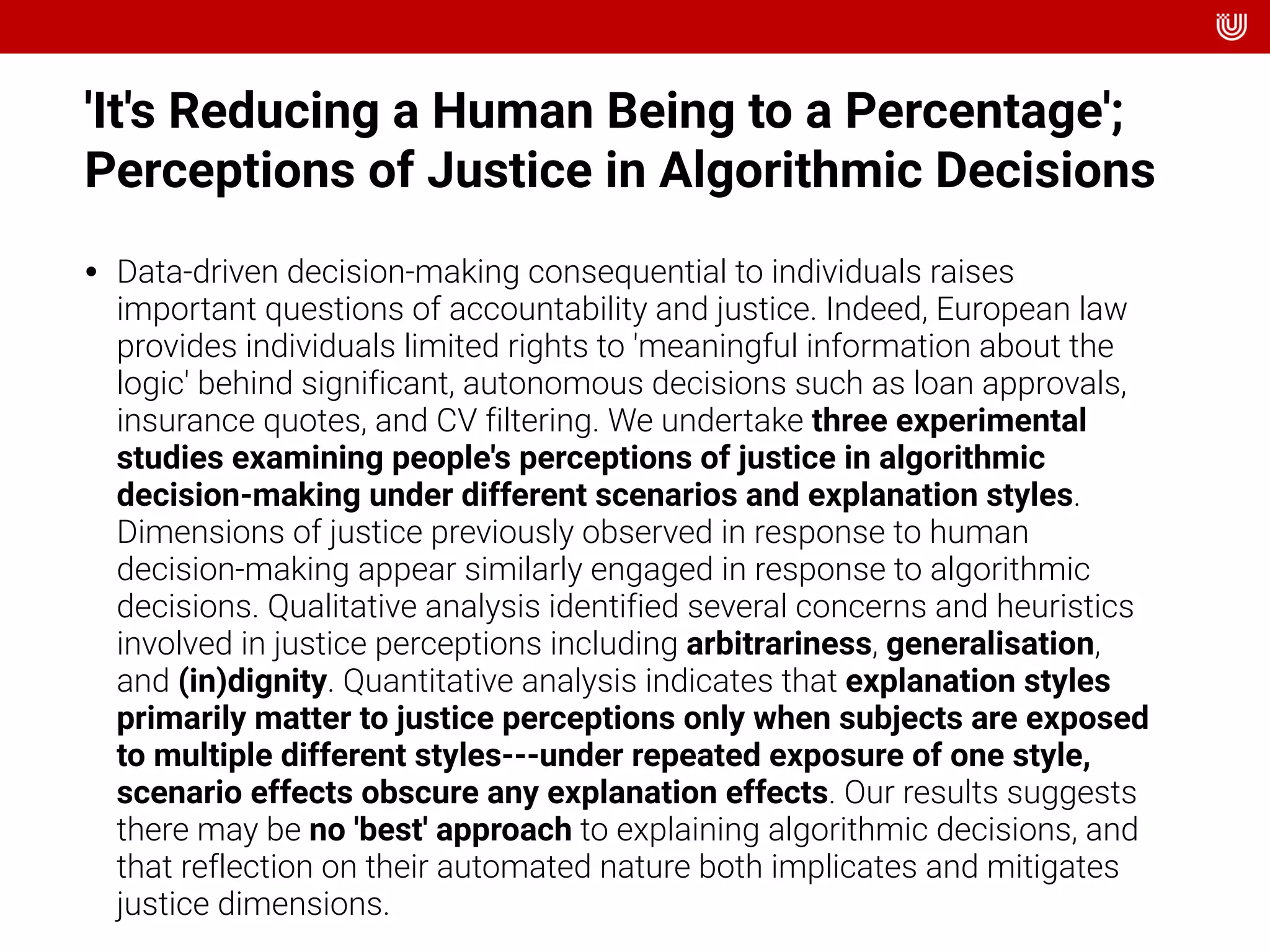

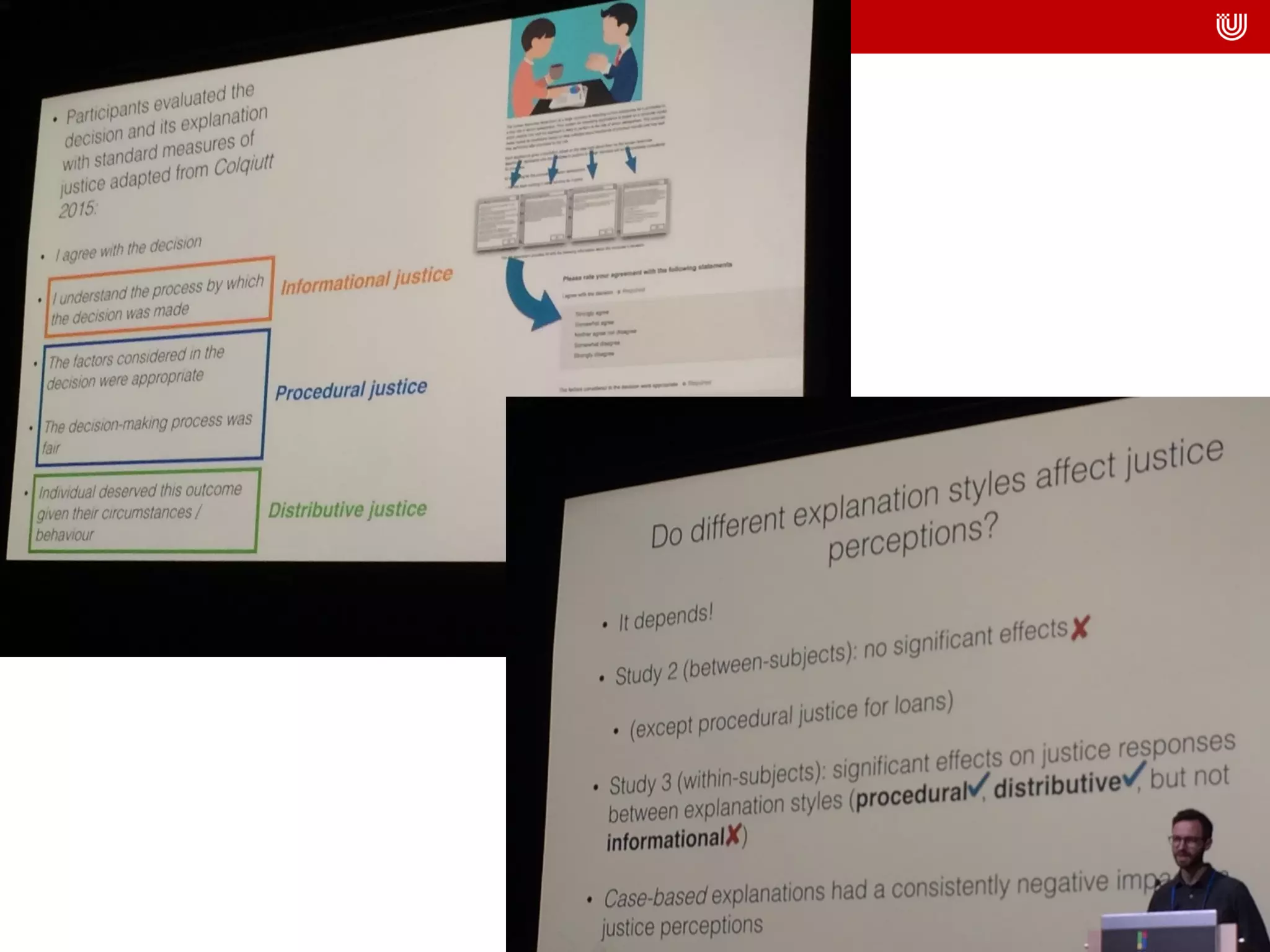

2) Perceptions of justice and fairness in algorithmic decision-making, examined through experimental studies.

3) A qualitative study of perceptions of algorithmic fairness among marginalized groups.

4) The effects of explaining how advertising algorithms work on user perceptions and trust.