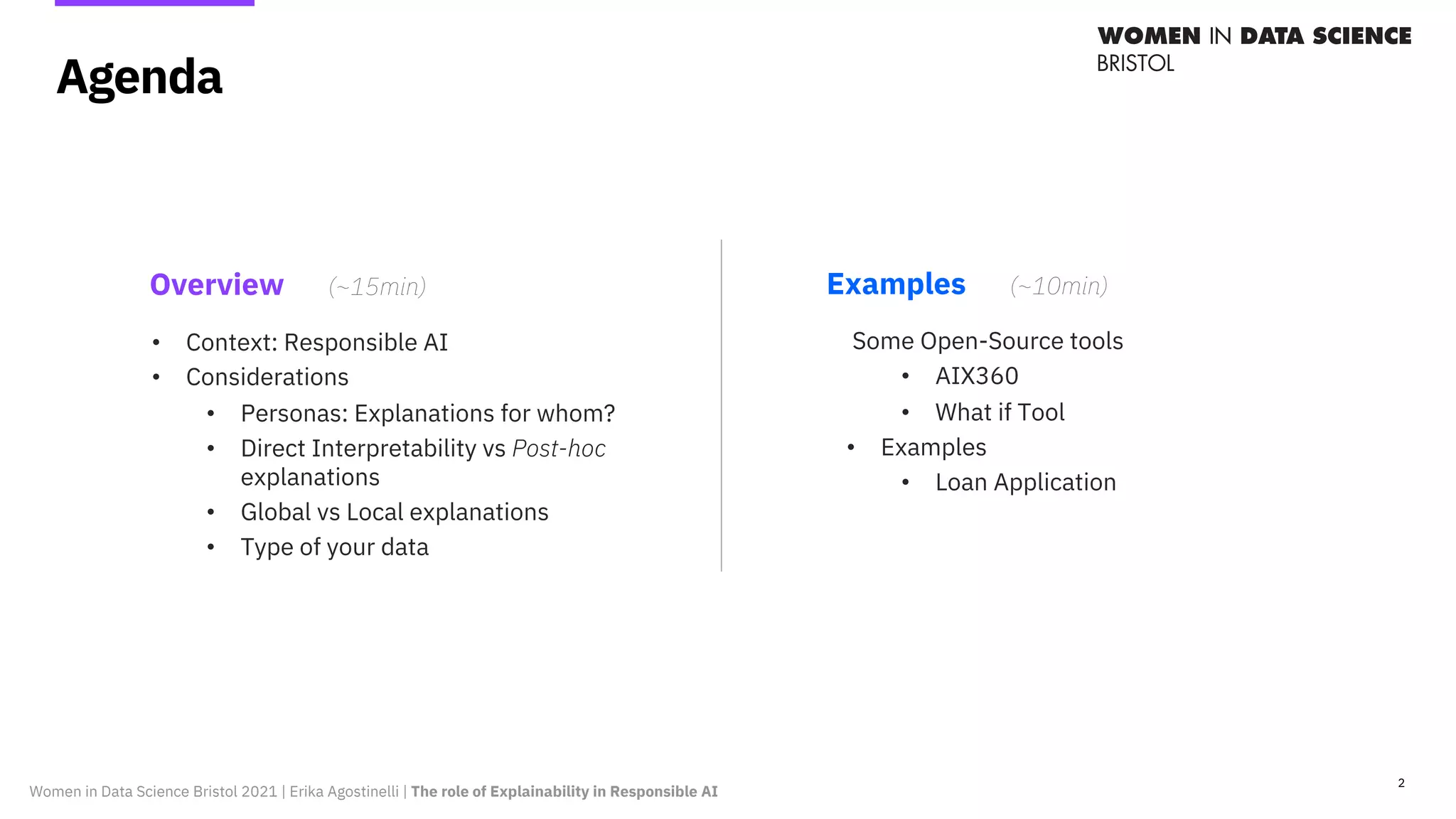

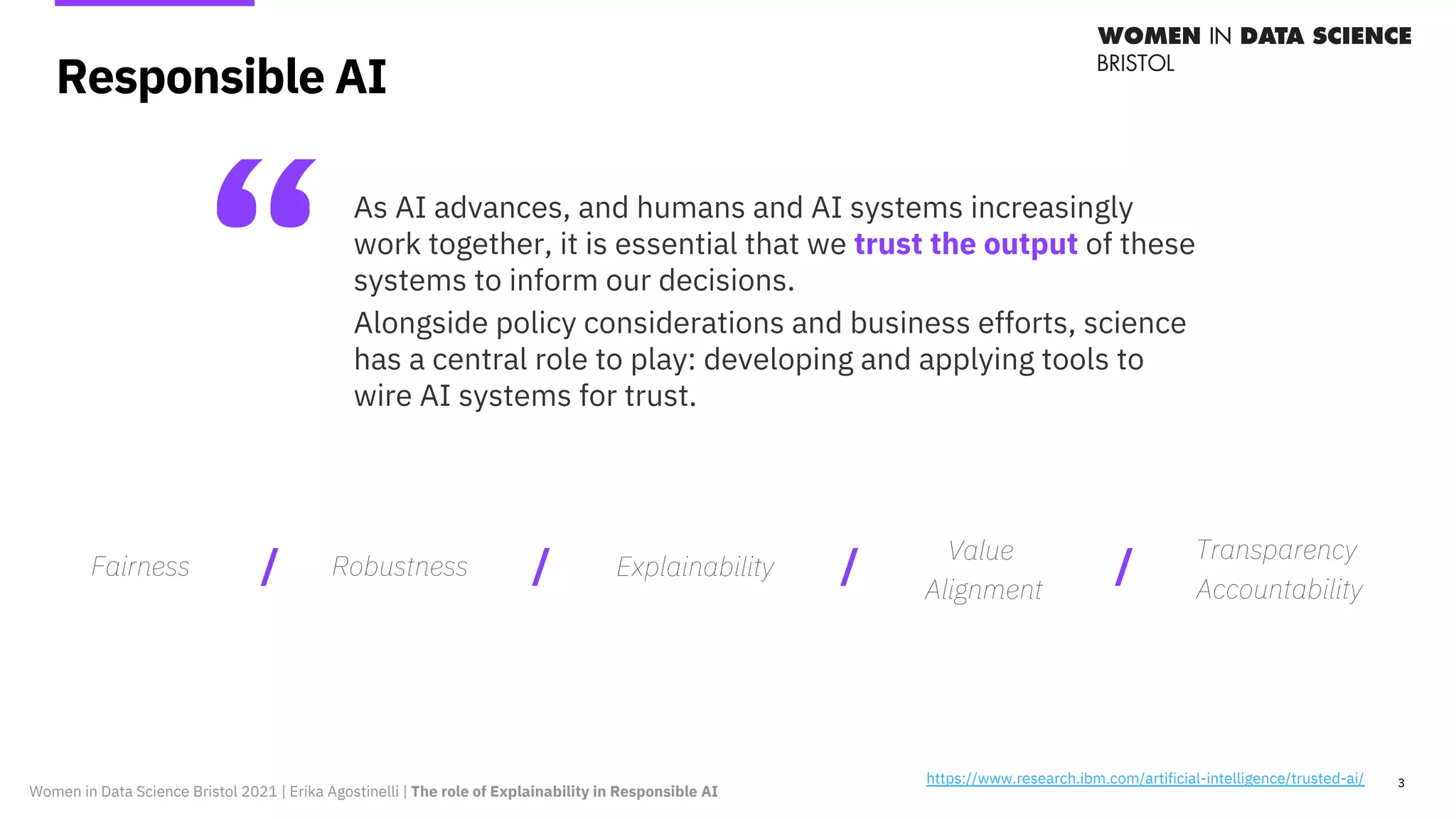

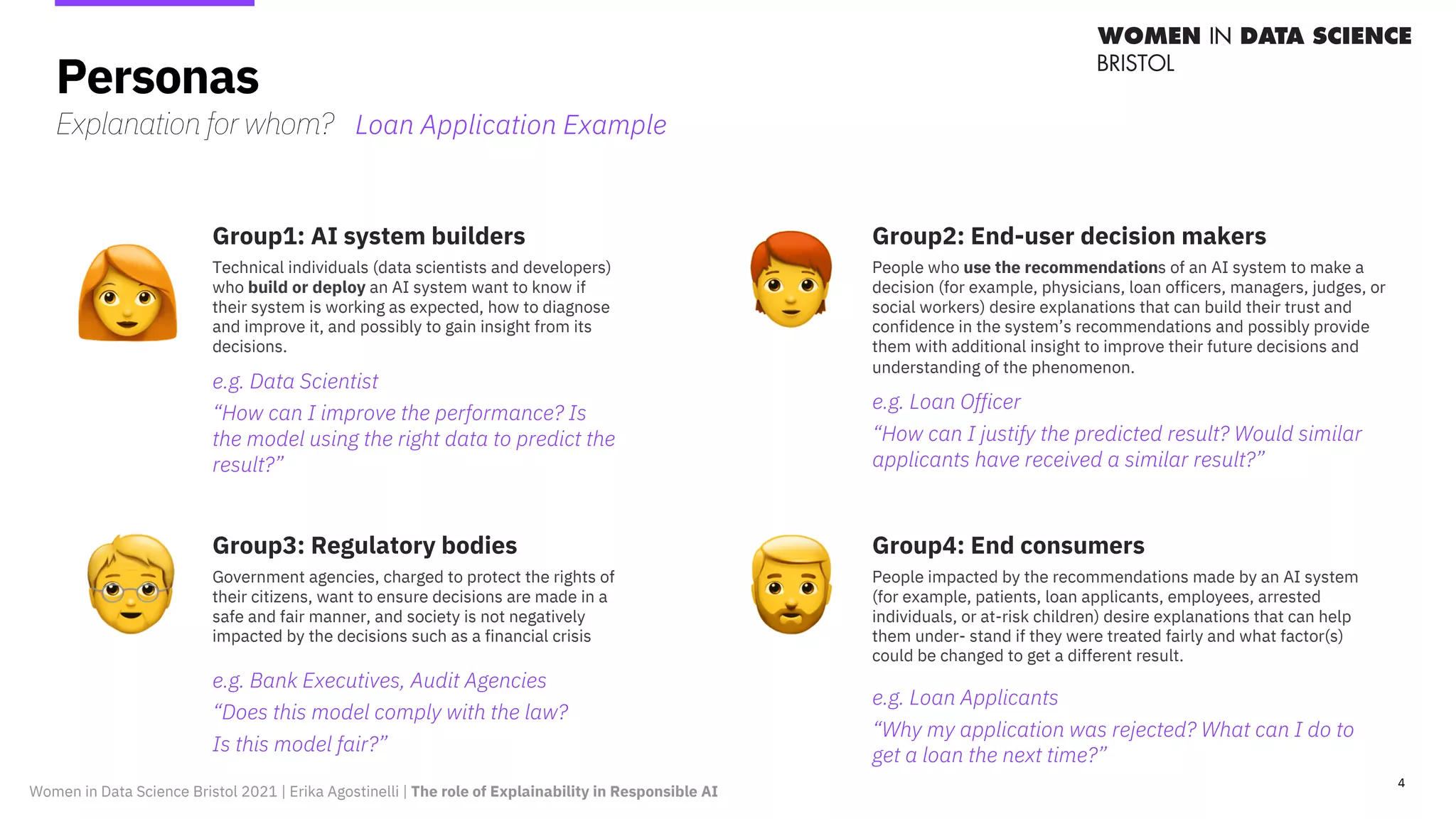

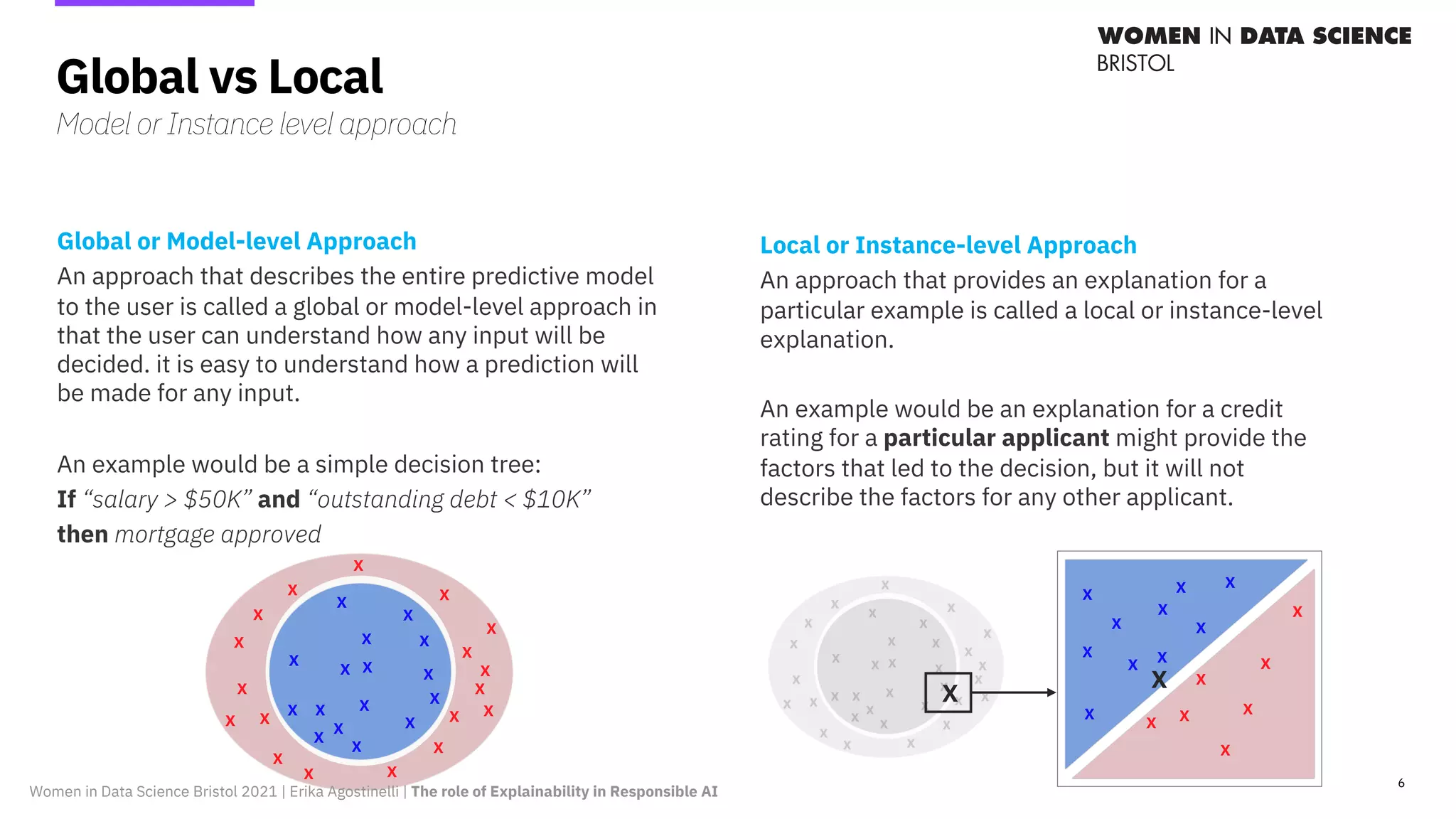

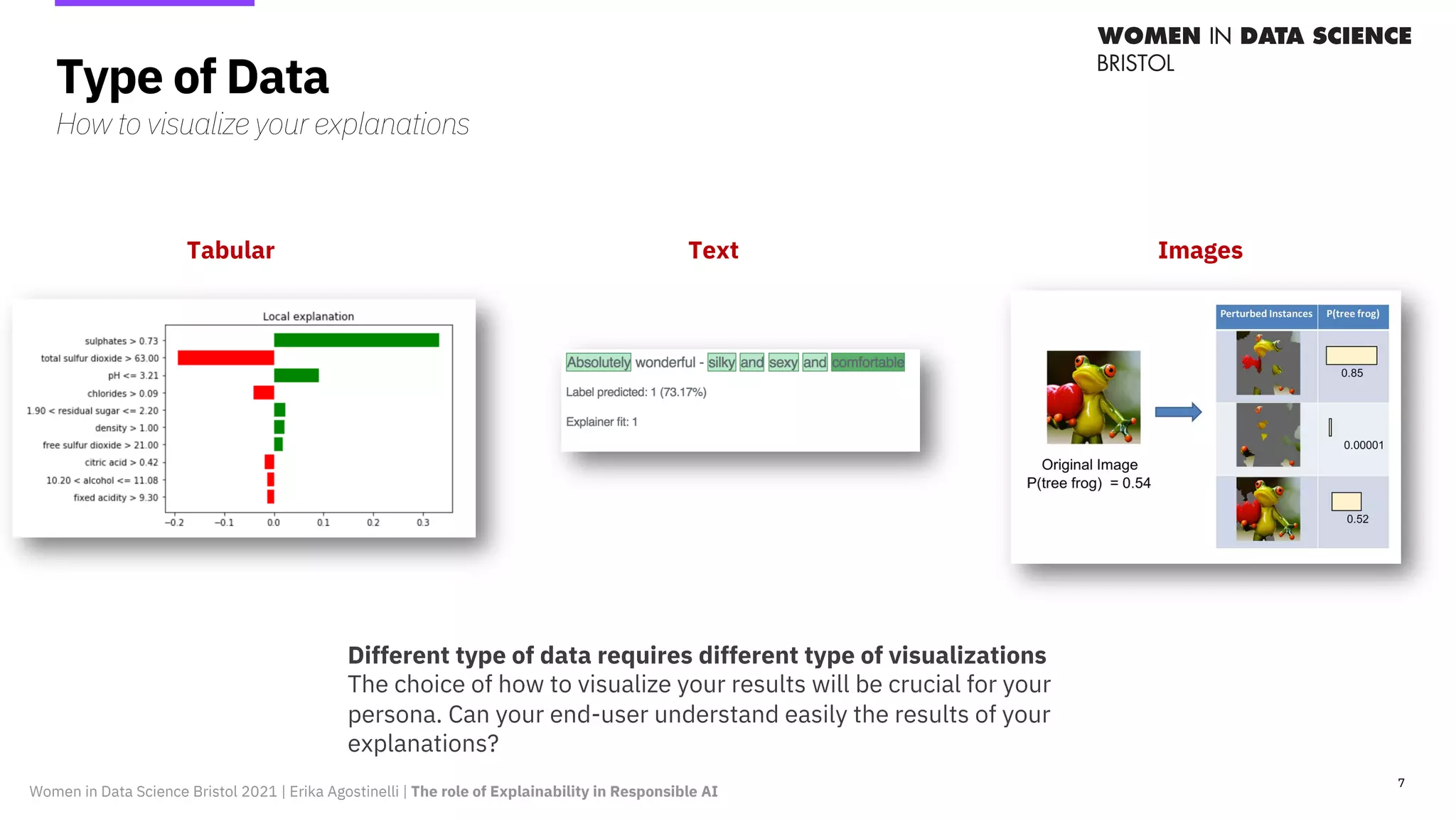

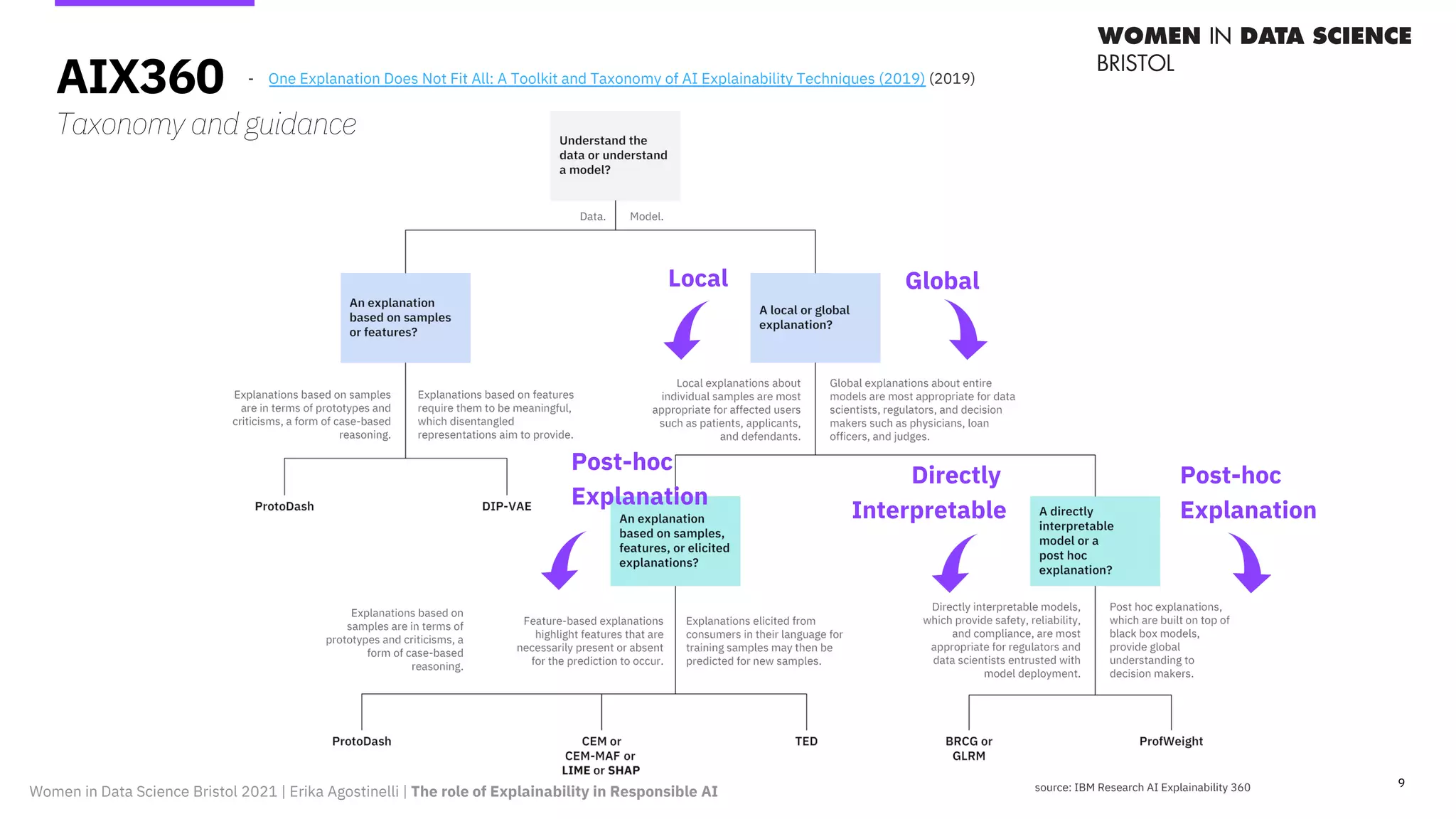

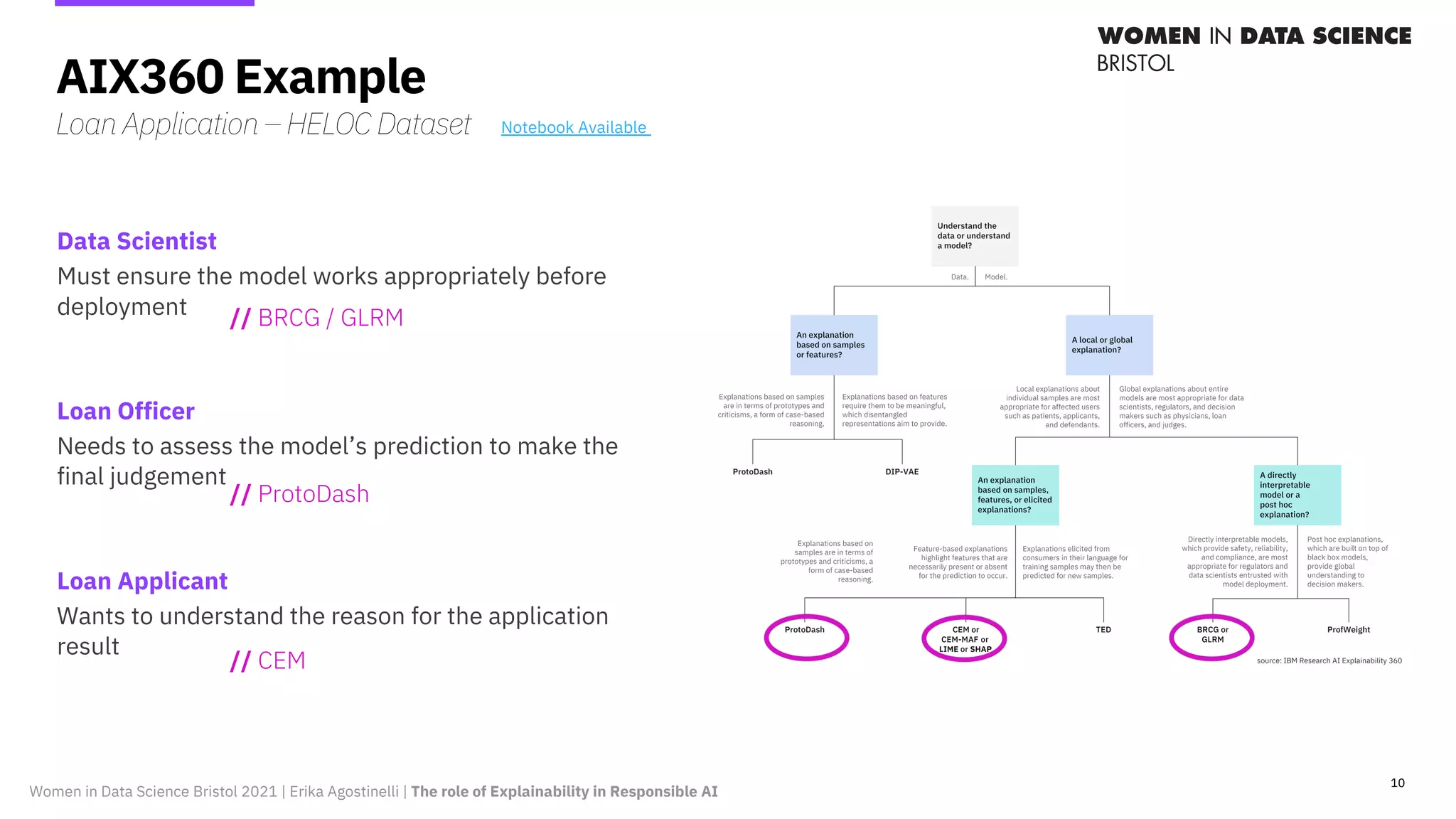

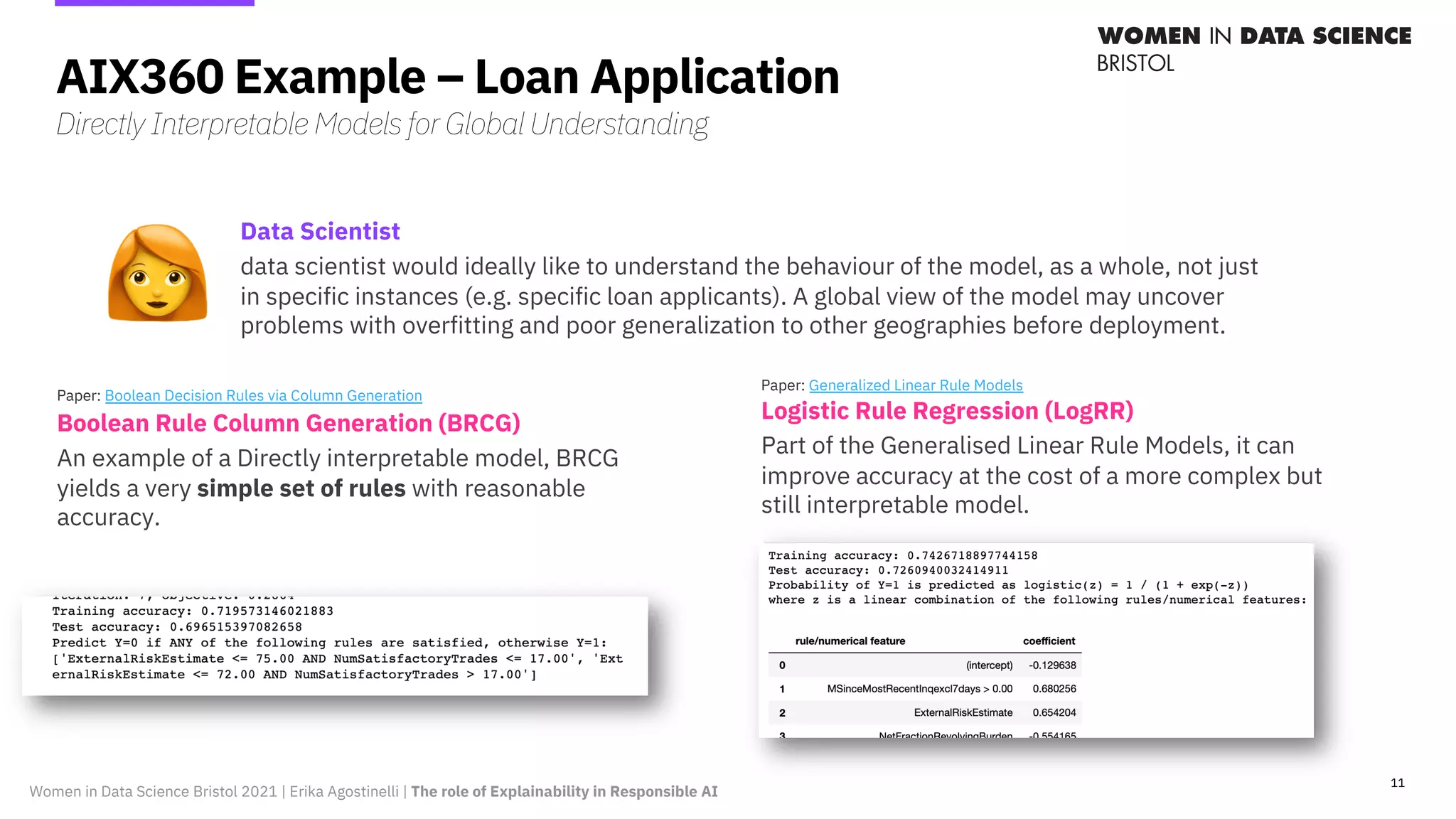

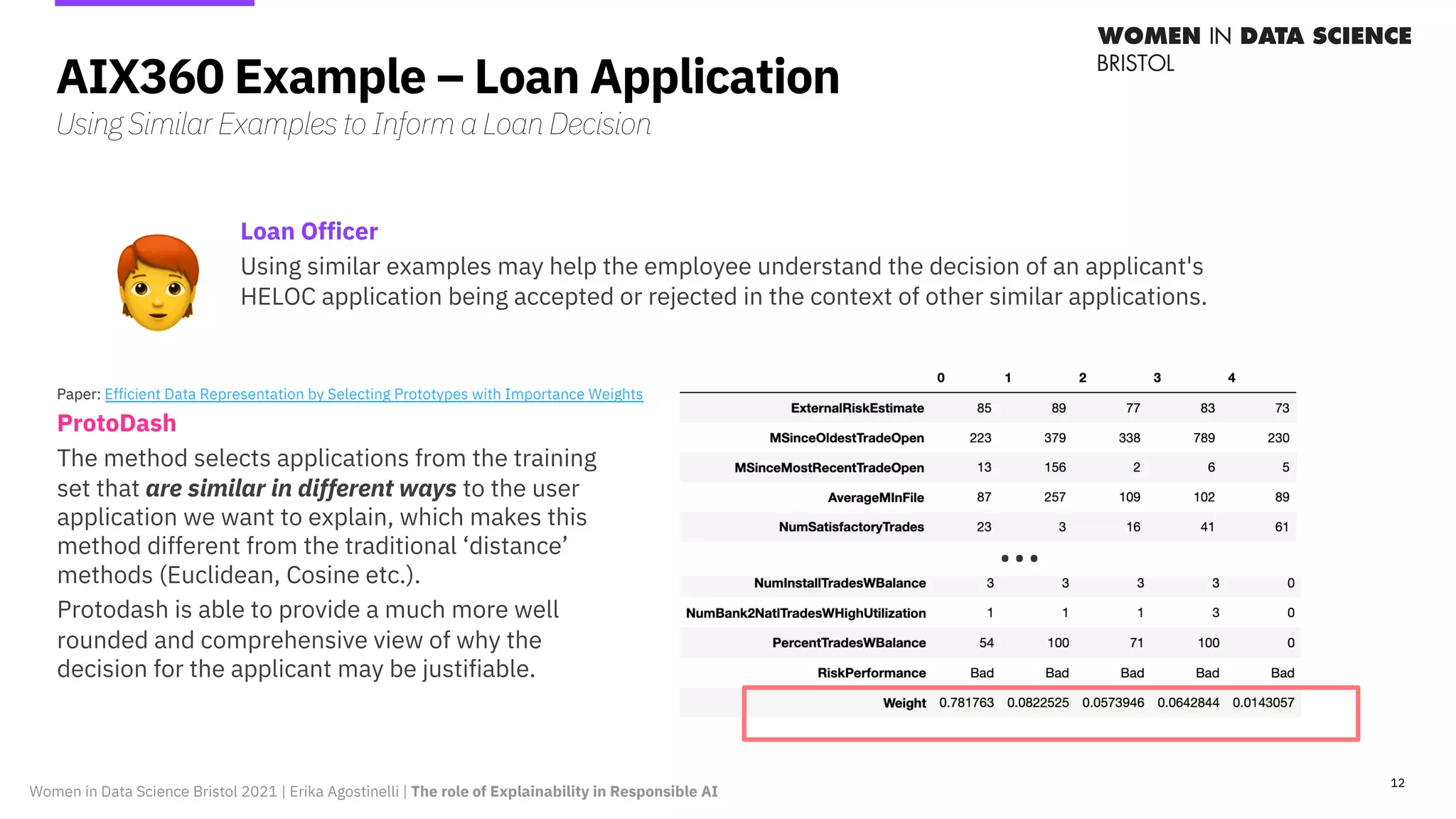

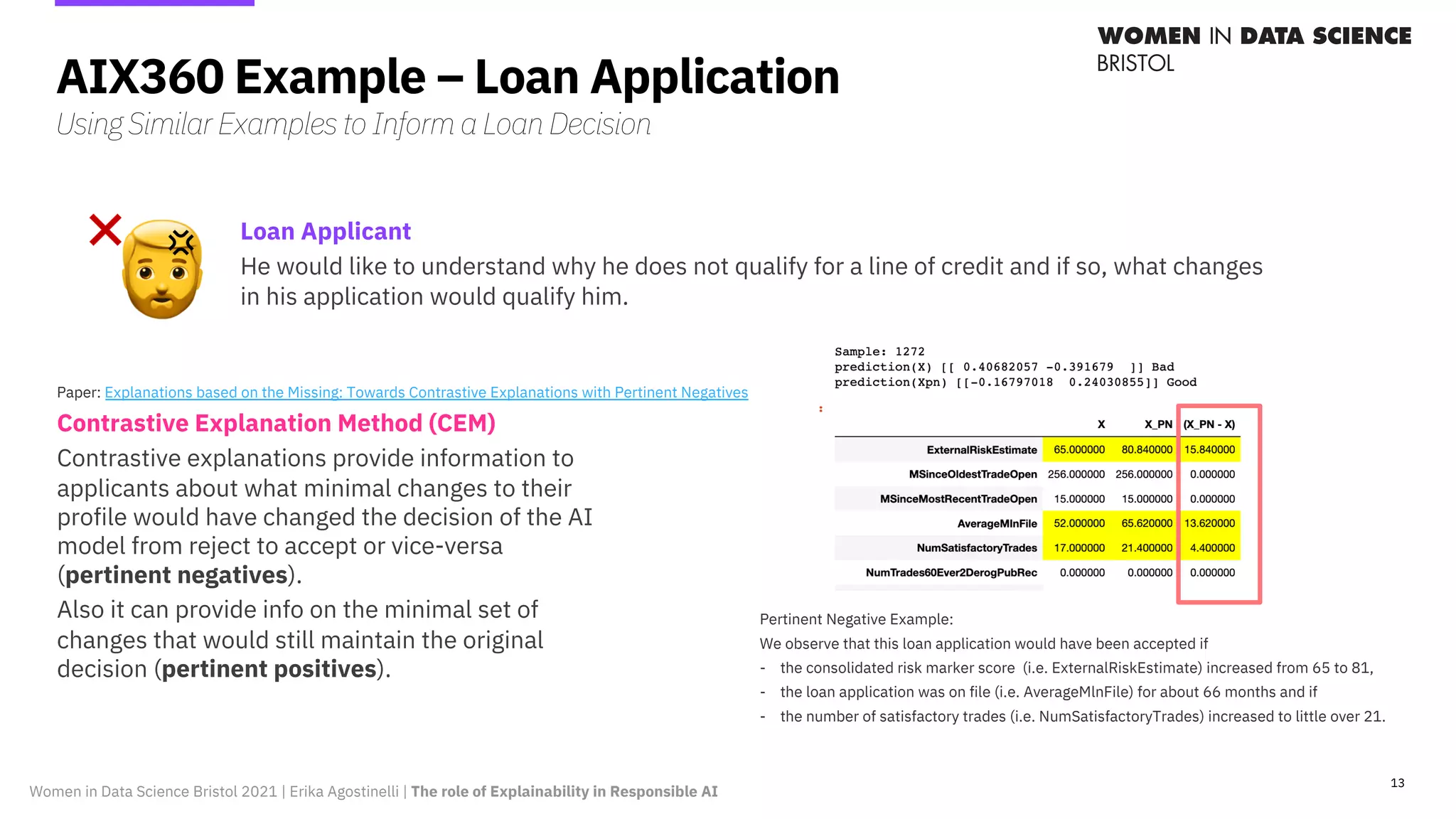

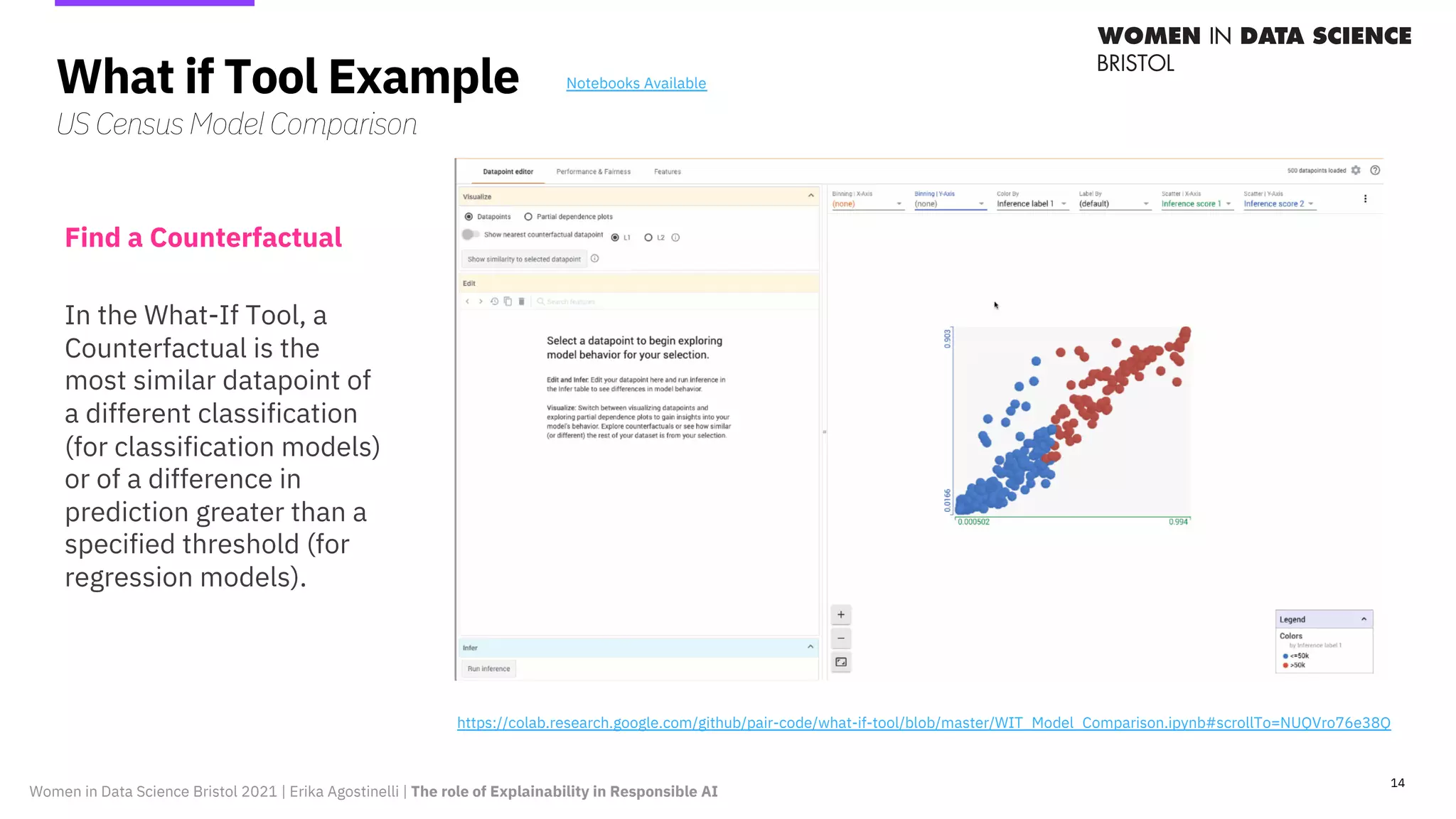

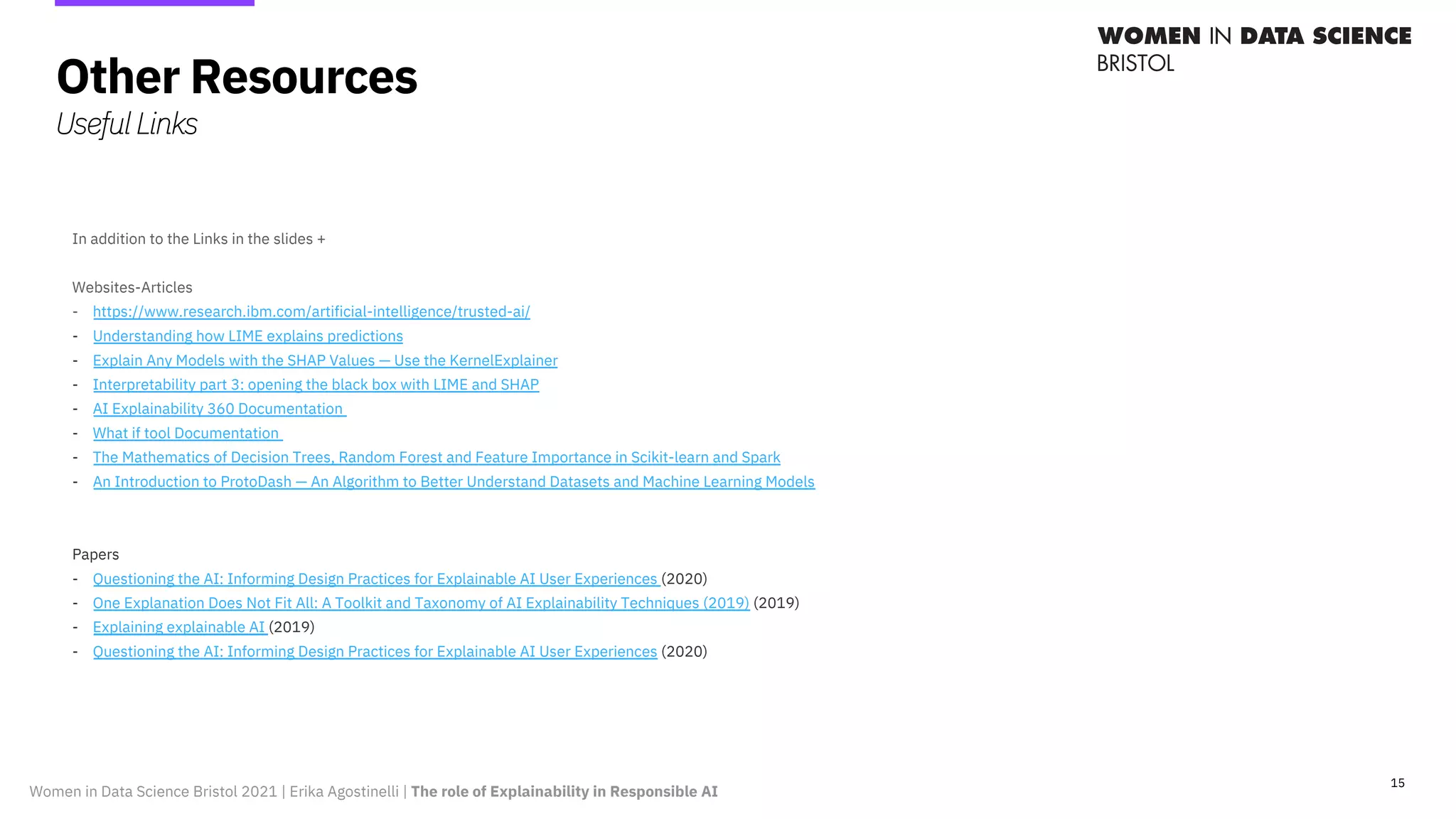

The document discusses the importance of explainability in responsible AI. It outlines different types of explanations, such as global explanations (how a model behaves overall) versus local explanations (why it made one particular prediction), and directly interpretable models versus post-hoc explanations of an already trained model. It also describes who needs explanations, including data scientists, end users, and regulators. It then presents open-source explanation tools, including AIX360 and the What-If Tool, and walks through a detailed example that applies several AIX360 techniques to explain a loan approval model.
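The global/local and direct/post-hoc distinctions can be made concrete with a toy loan model. The sketch below is a minimal illustration and does not use AIX360. It assumes a hypothetical logistic-regression scorer whose feature names, weights, and applicant values are invented. Because a linear model is directly interpretable, both kinds of explanation come straight from the model. The global explanation ranks features by the size of their weights. The local explanation splits one applicant's log-odds into per-feature contributions. A black-box model would instead need a post-hoc method to produce comparable explanations.

```python
"""Global vs. local explanations for a directly interpretable loan model.

Hypothetical sketch: the features, weights, and applicant below are invented
for illustration and are not taken from the AIX360 loan example.
"""
import math

# Hypothetical logistic-regression weights for a toy loan-approval model.
# Features are assumed to be standardized (mean 0, unit variance).
WEIGHTS = {"income": 1.2, "debt_ratio": -2.0, "credit_history_years": 0.8}
BIAS = -0.3


def log_odds(applicant: dict) -> float:
    """Linear score (log-odds of approval) for one applicant."""
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def approval_probability(applicant: dict) -> float:
    """Model's probability of approving the applicant (sigmoid of log-odds)."""
    return 1.0 / (1.0 + math.exp(-log_odds(applicant)))


def global_explanation() -> list:
    """Global view: features ranked by weight magnitude.

    This describes how the model treats every input, not one applicant.
    """
    return sorted(WEIGHTS, key=lambda f: abs(WEIGHTS[f]), reverse=True)


def local_explanation(applicant: dict) -> dict:
    """Local view: each feature's additive contribution to this applicant's log-odds."""
    return {f: WEIGHTS[f] * applicant[f] for f in WEIGHTS}


if __name__ == "__main__":
    applicant = {"income": 0.5, "debt_ratio": 1.0, "credit_history_years": -0.5}
    print("global ranking:", global_explanation())
    print("approval probability: %.3f" % approval_probability(applicant))
    for feature, contrib in local_explanation(applicant).items():
        print(f"{feature:>22}: {contrib:+.2f}")
```

For this invented applicant, the local explanation shows that the high debt ratio contributes most to the low approval score. The global ranking shows that debt ratio is also the most heavily weighted feature overall. The two views can disagree for other applicants, which is why both are useful.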