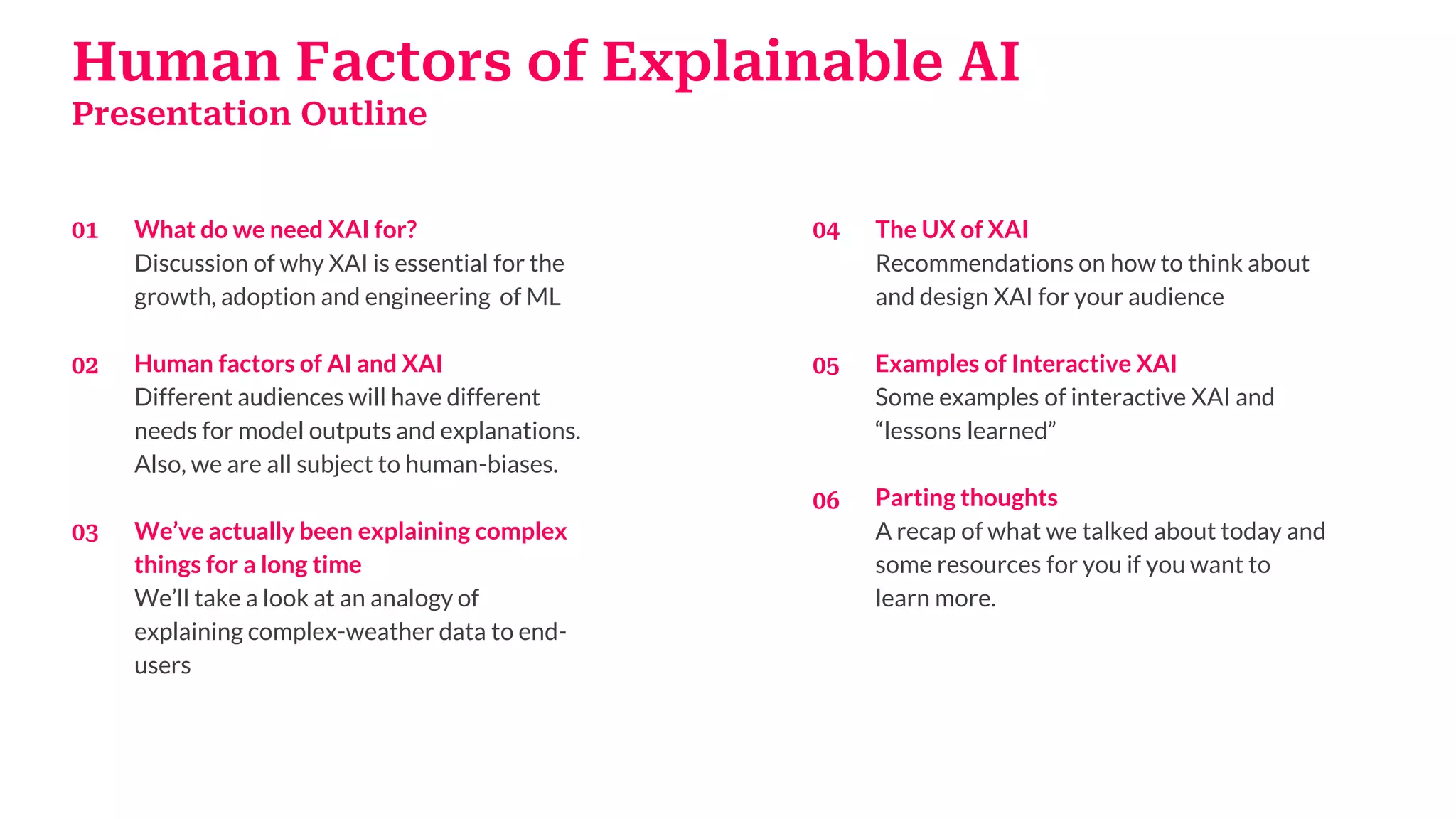

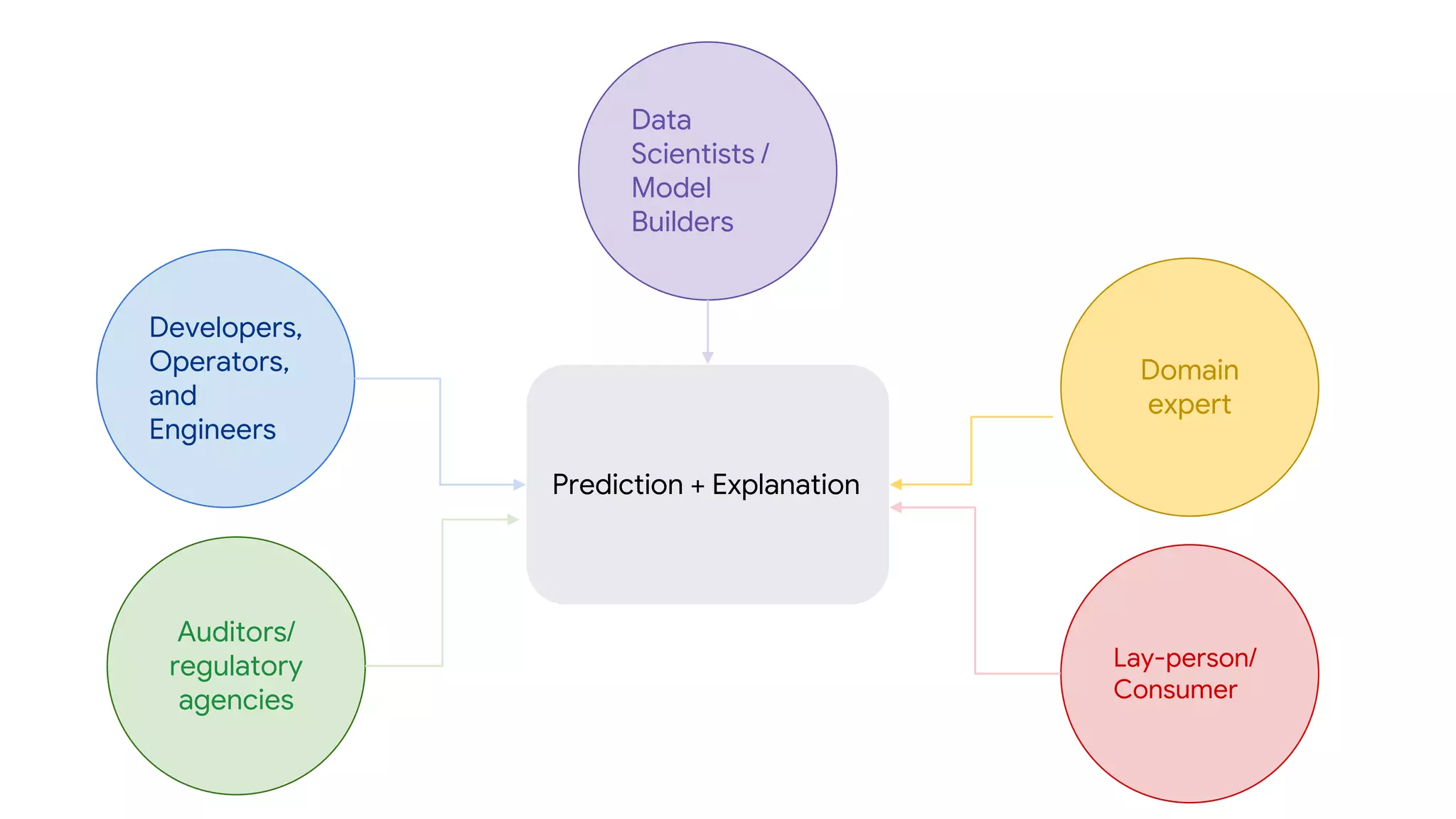

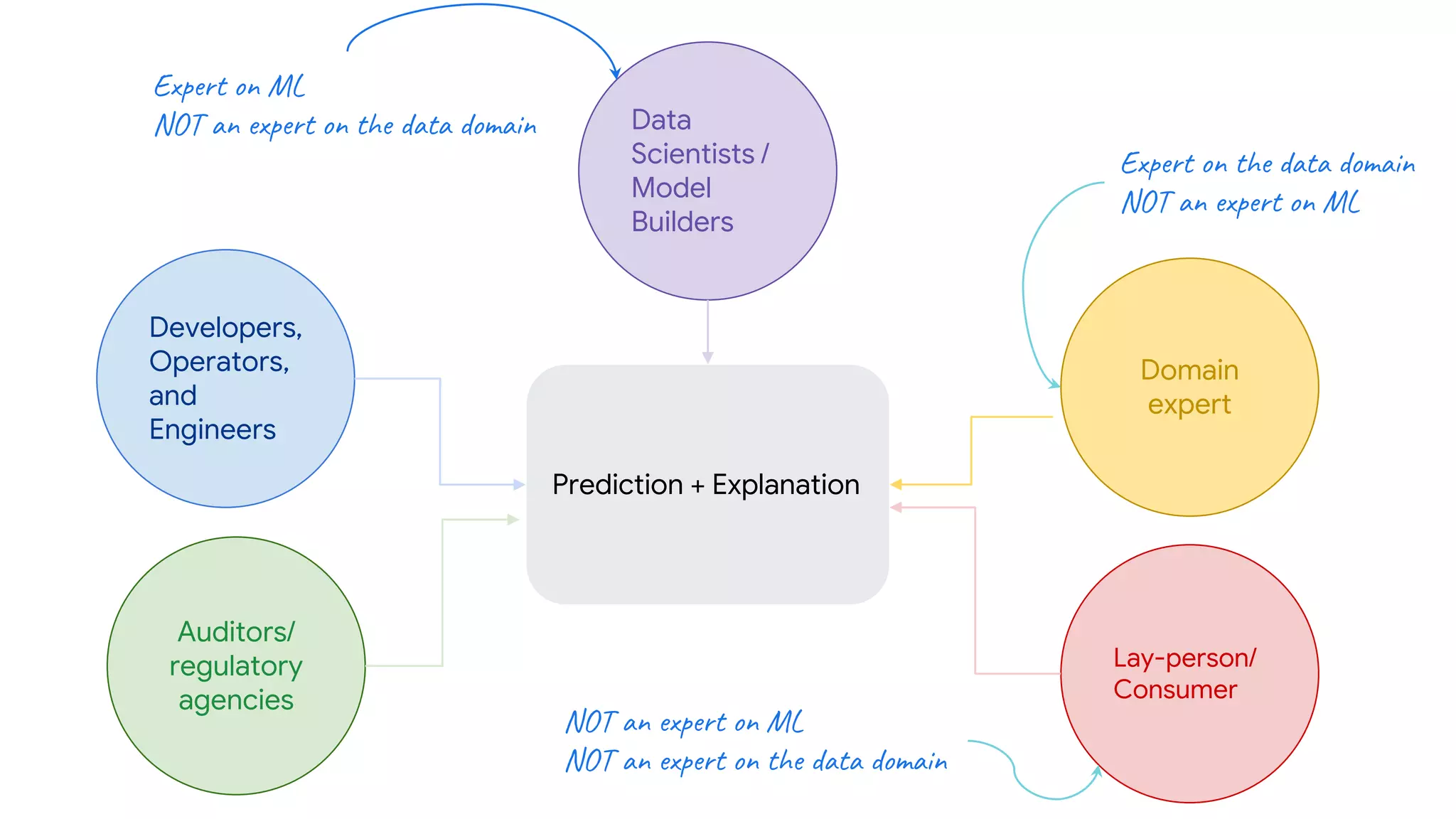

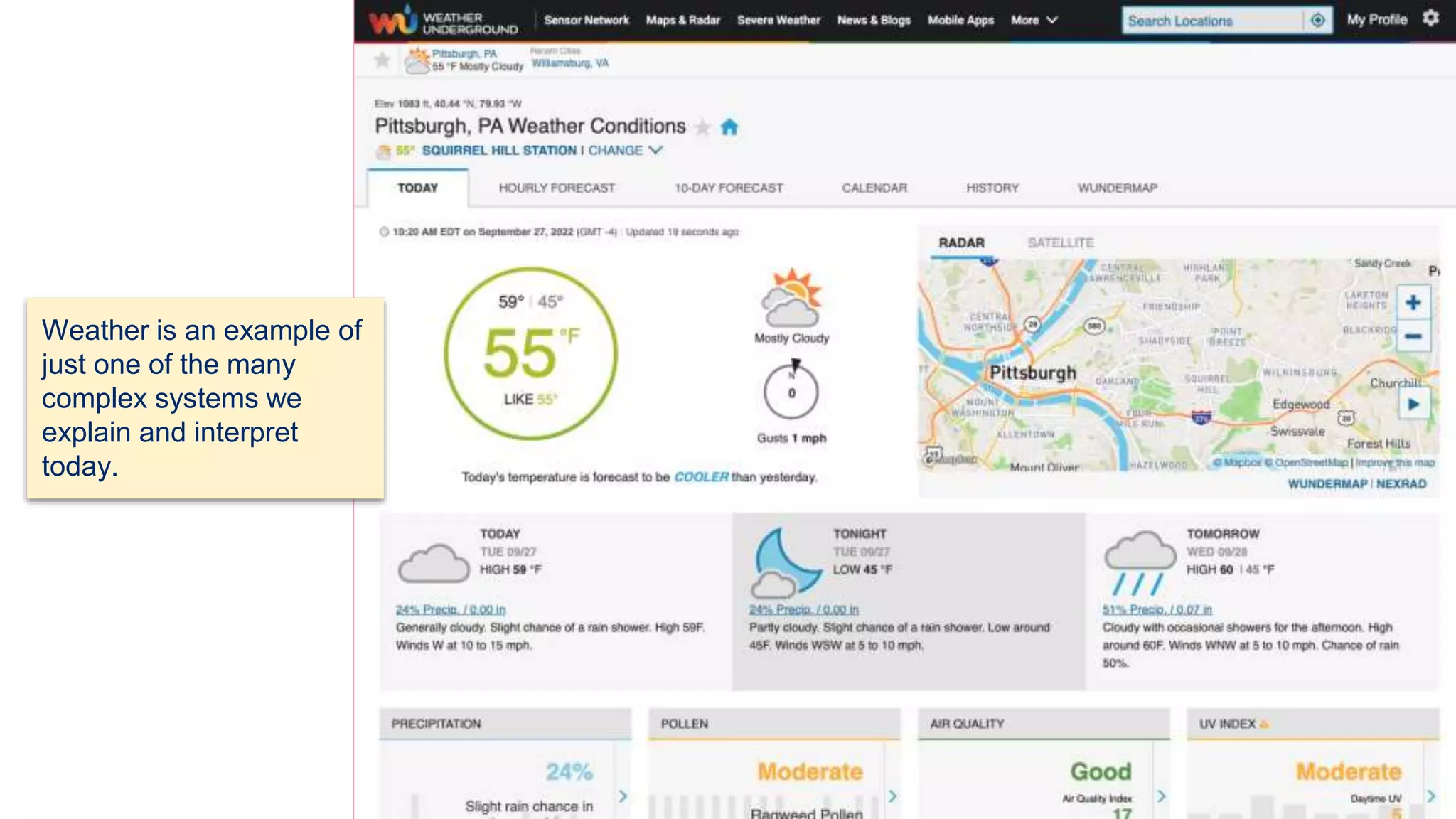

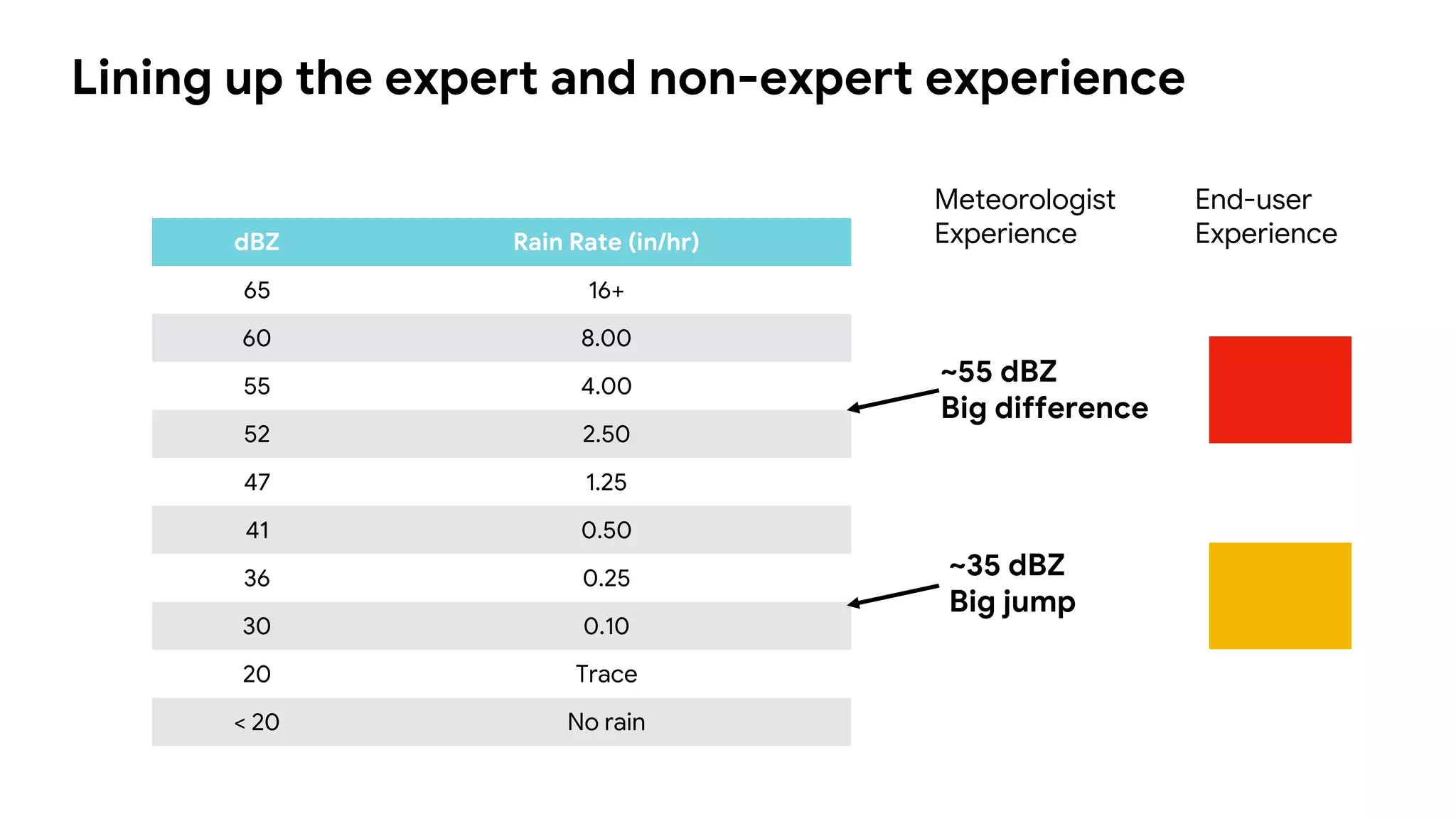

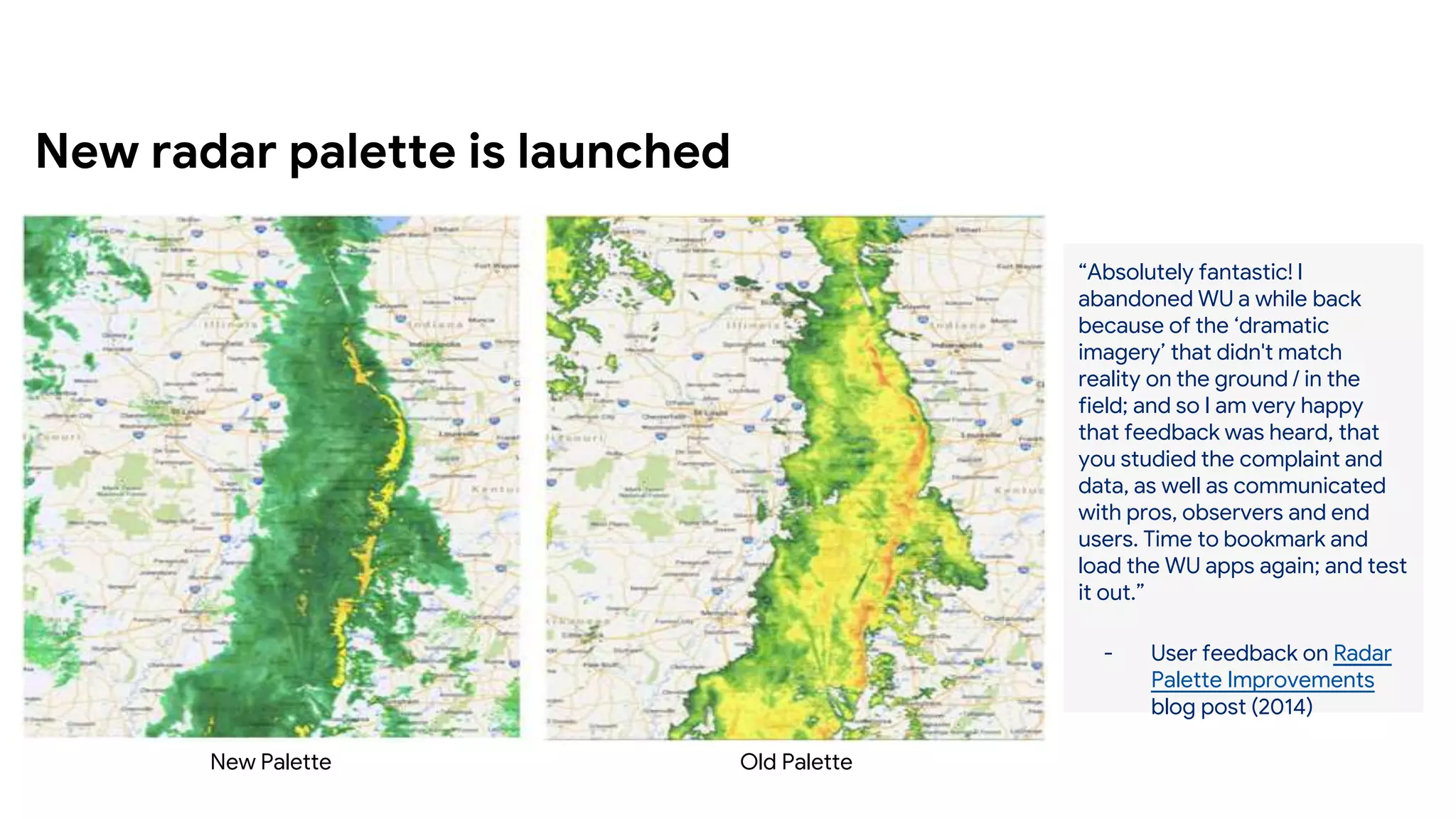

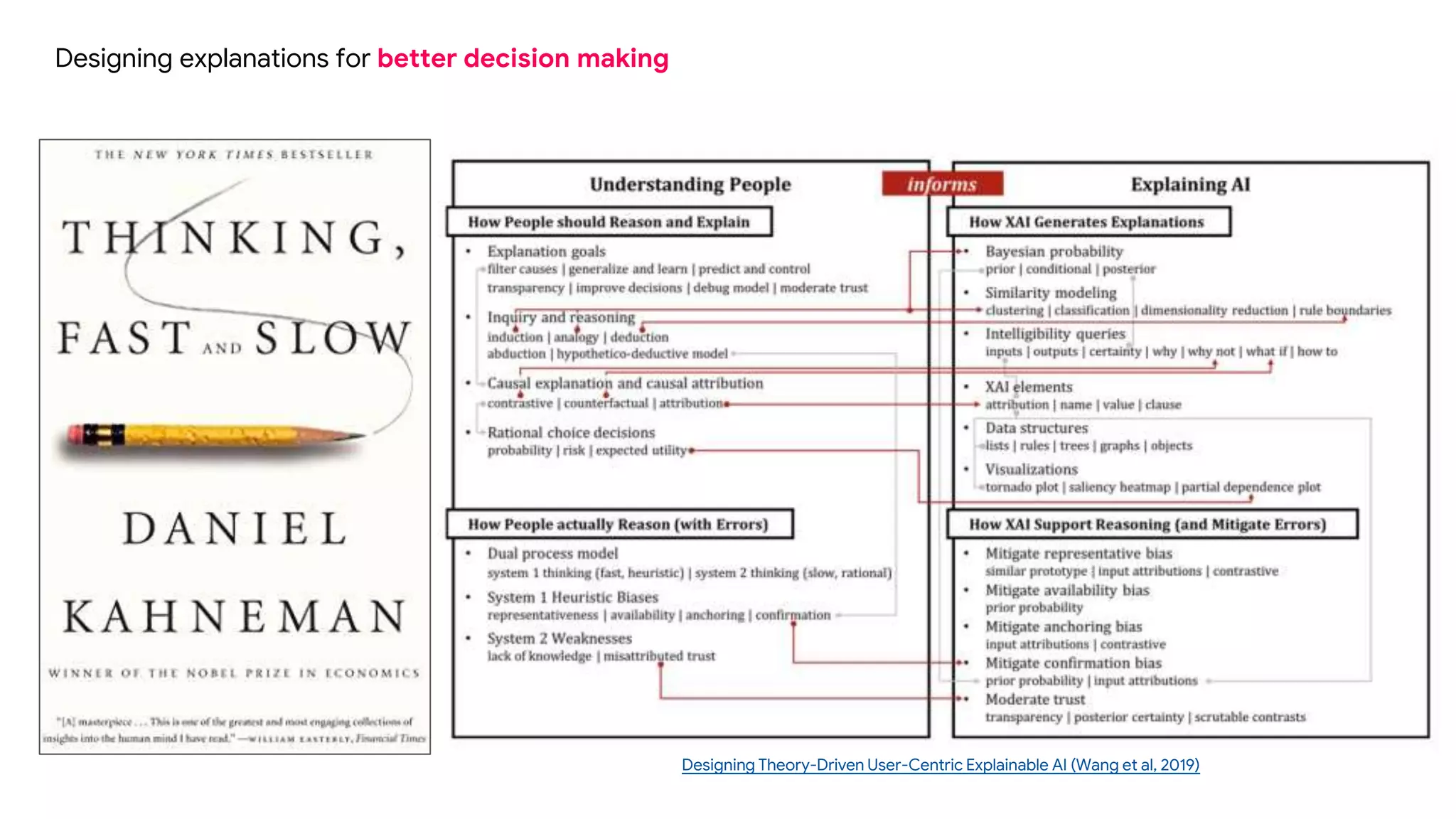

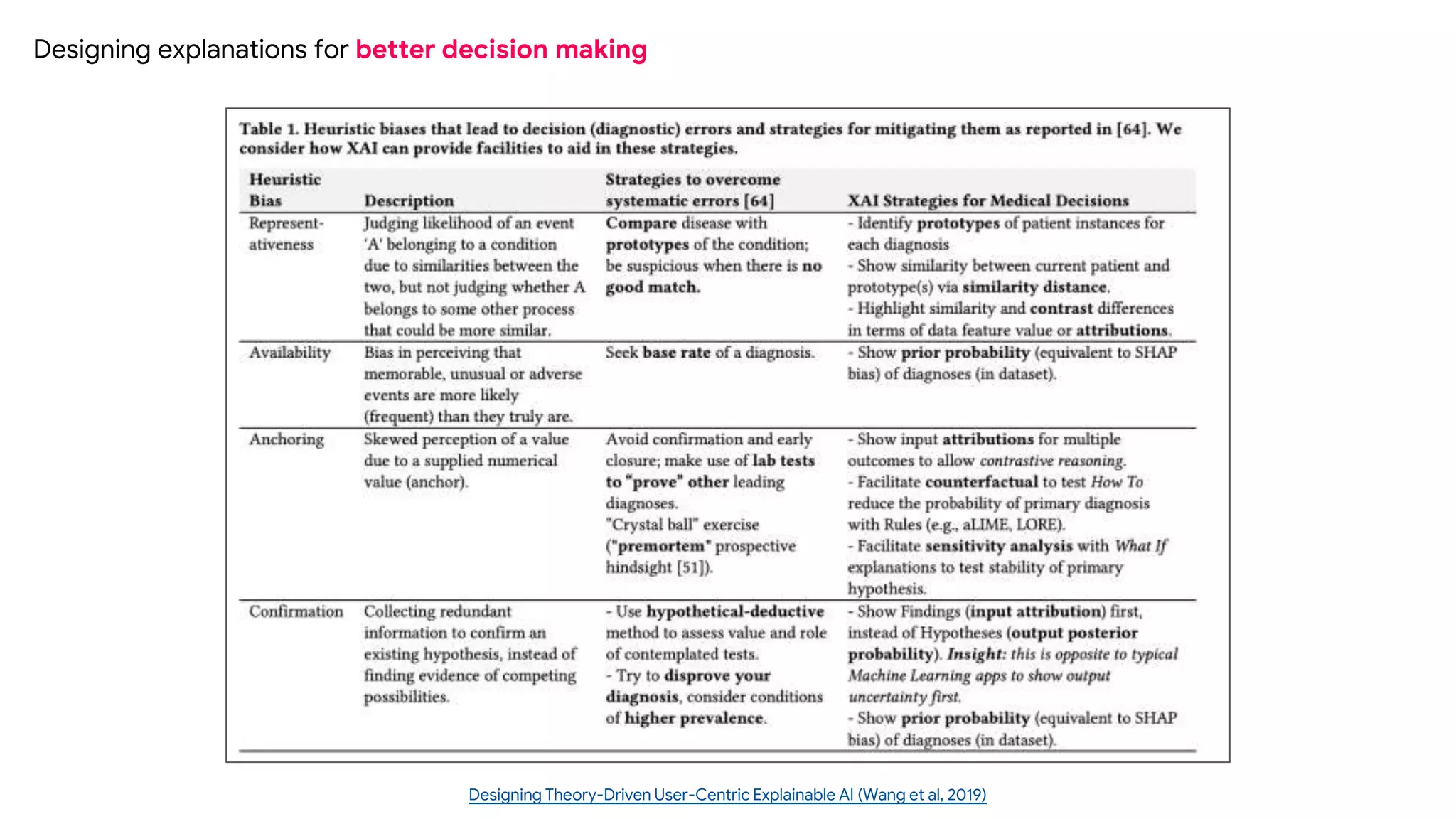

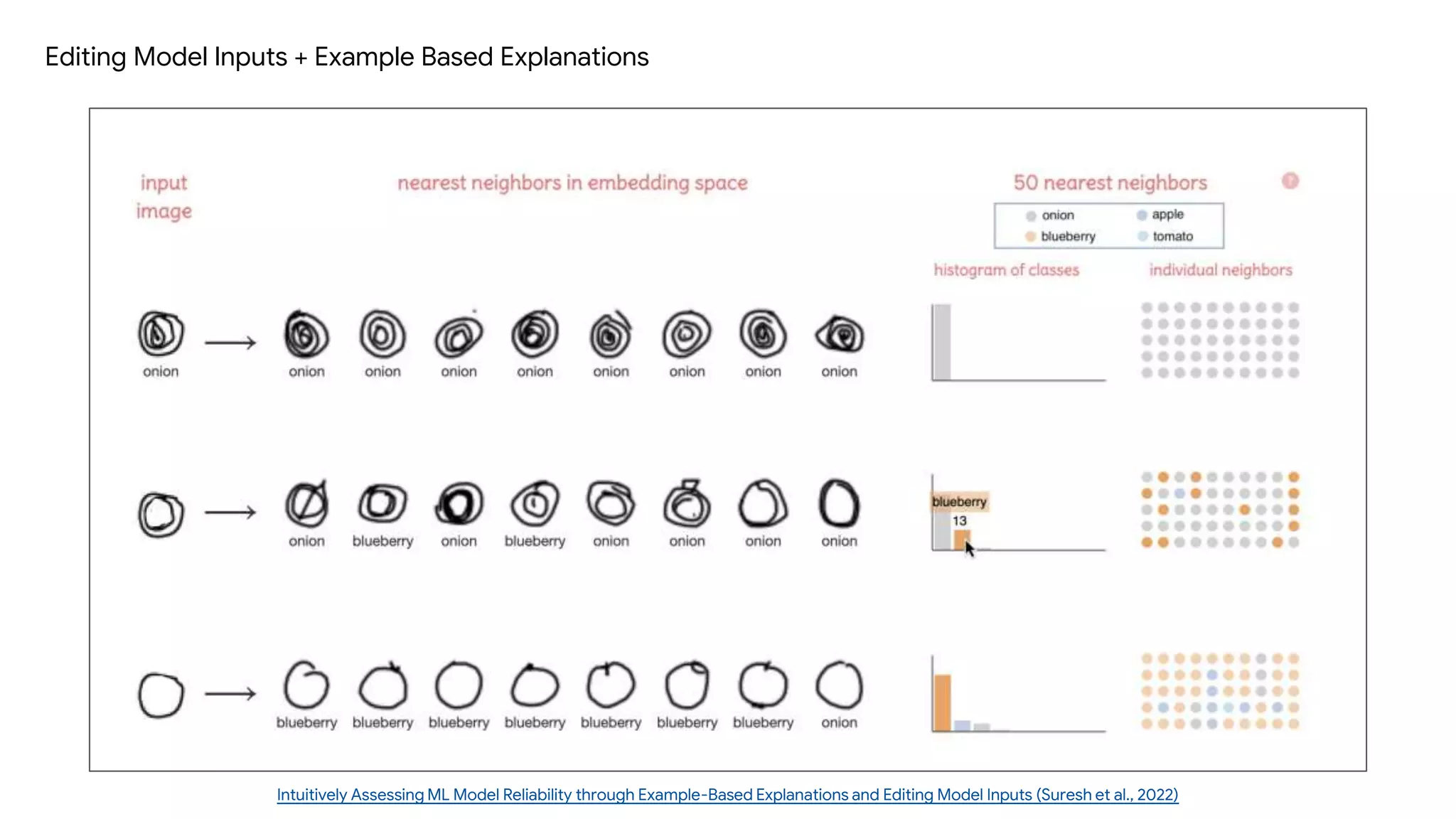

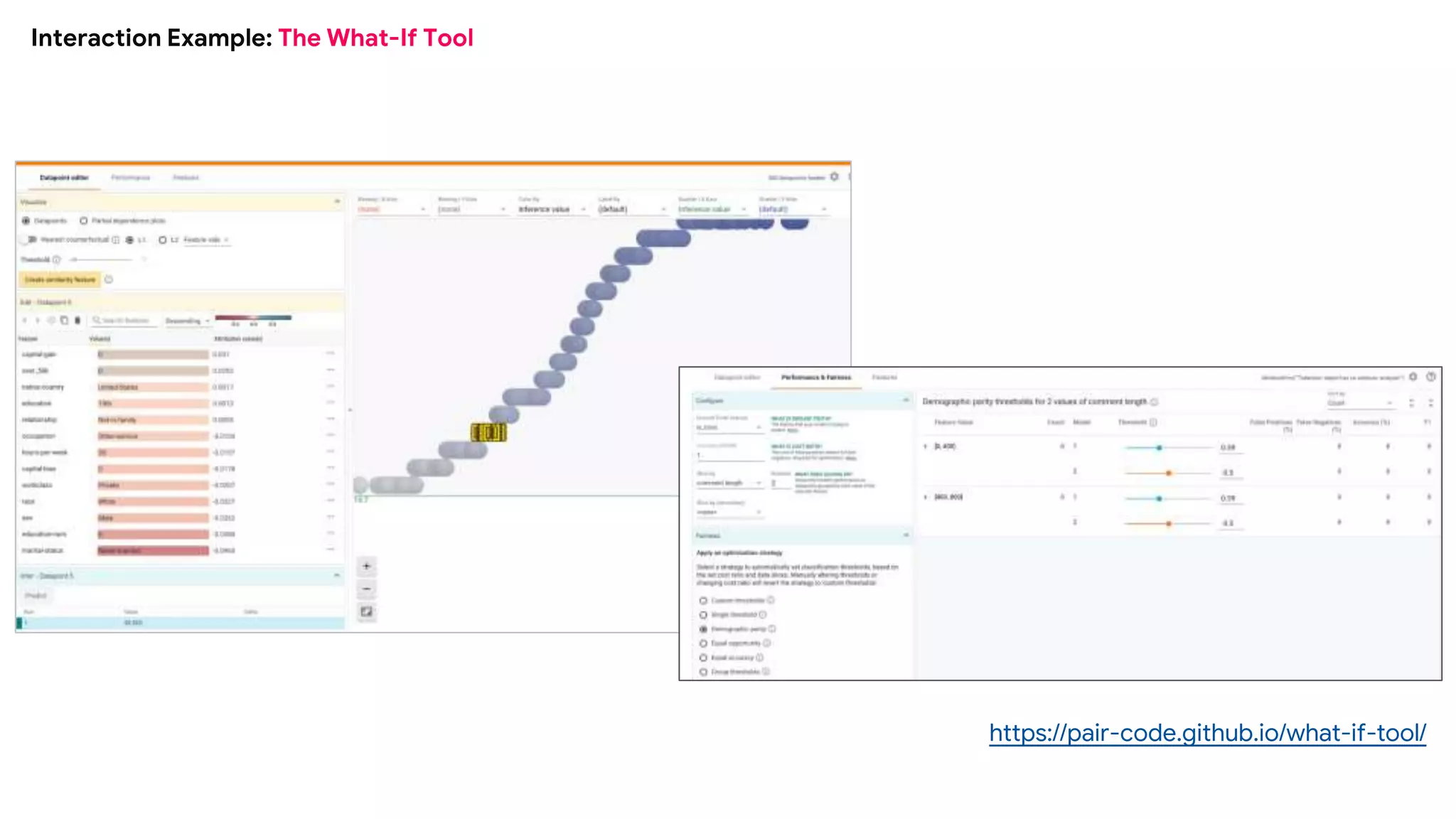

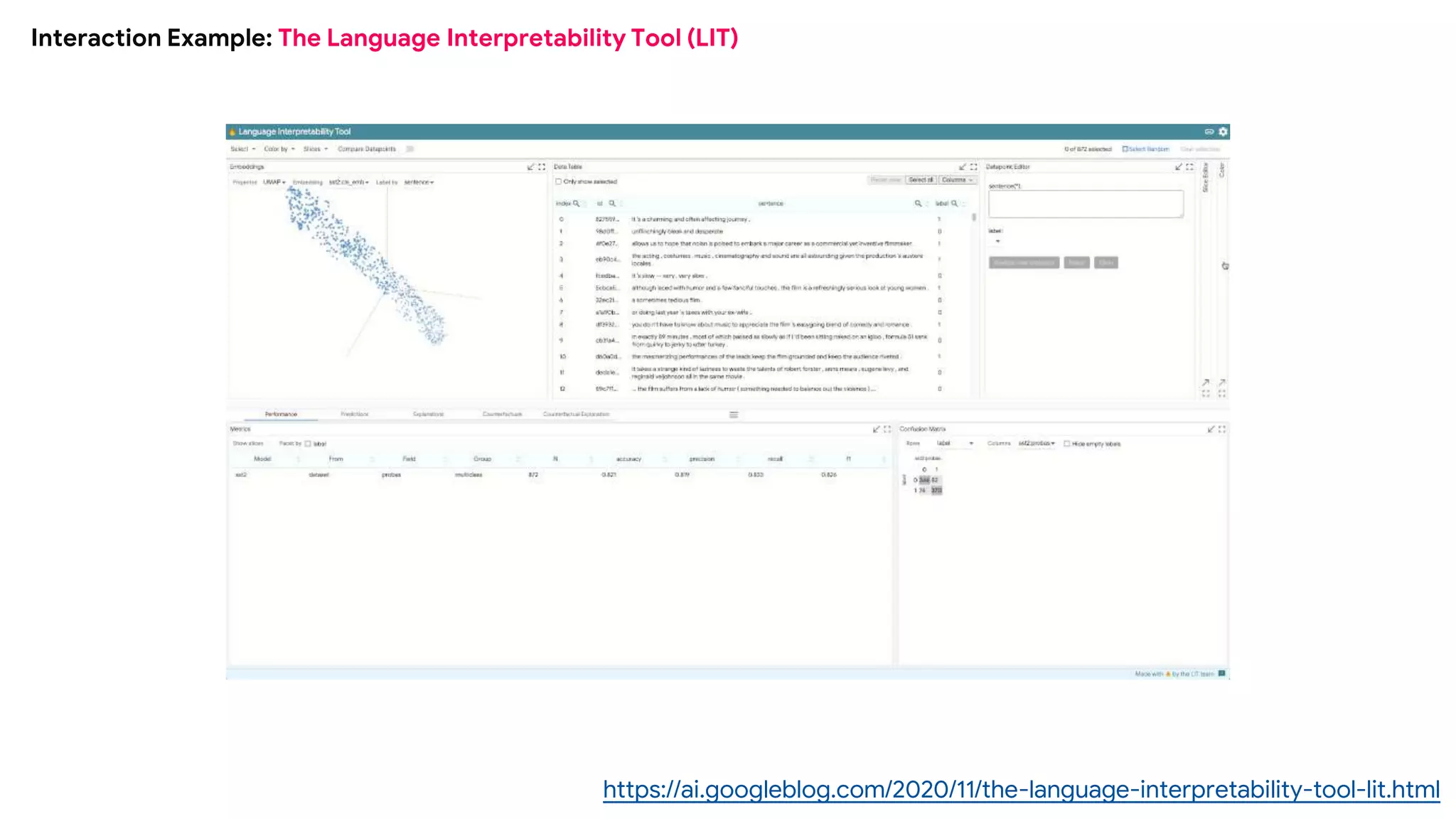

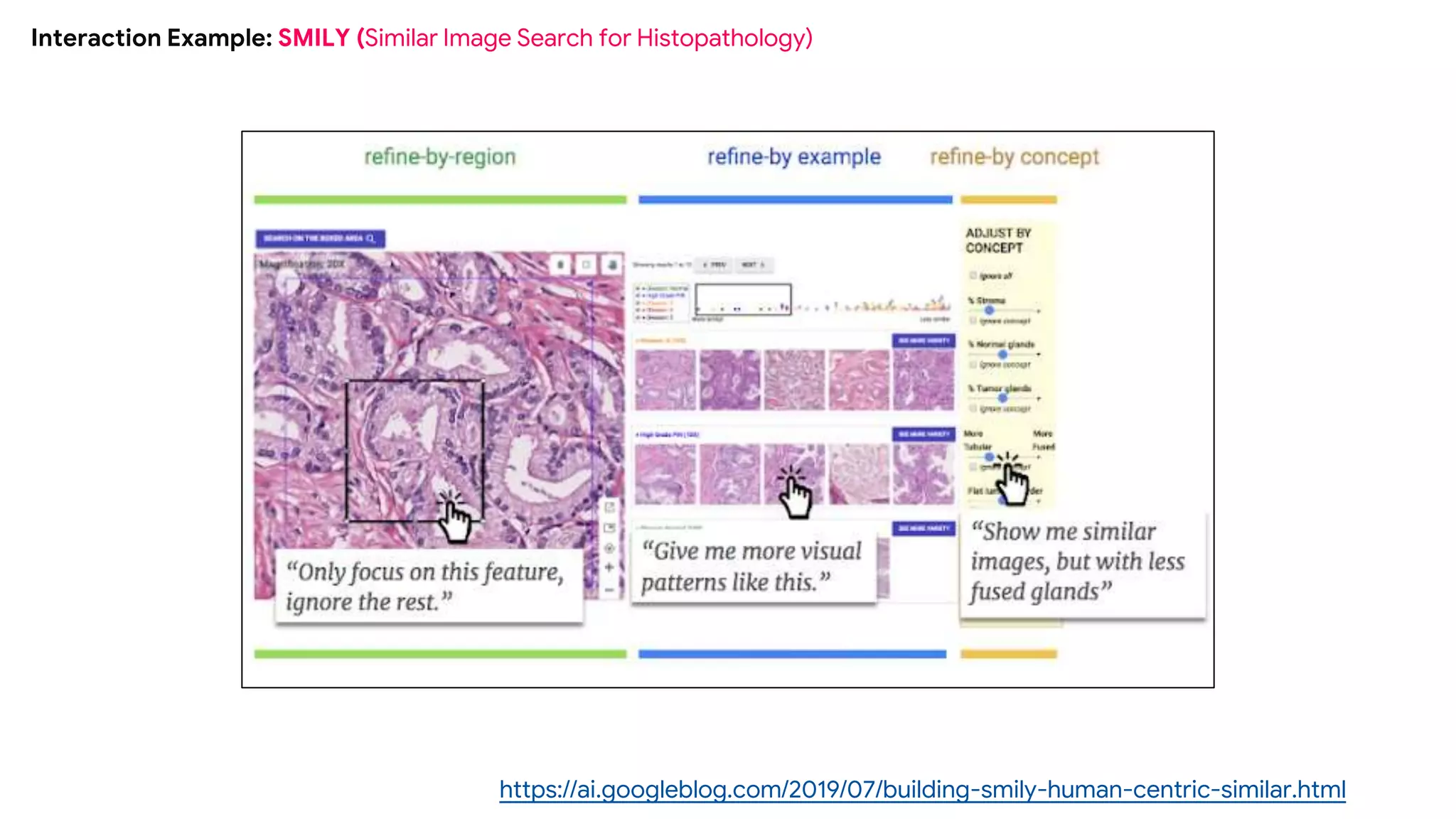

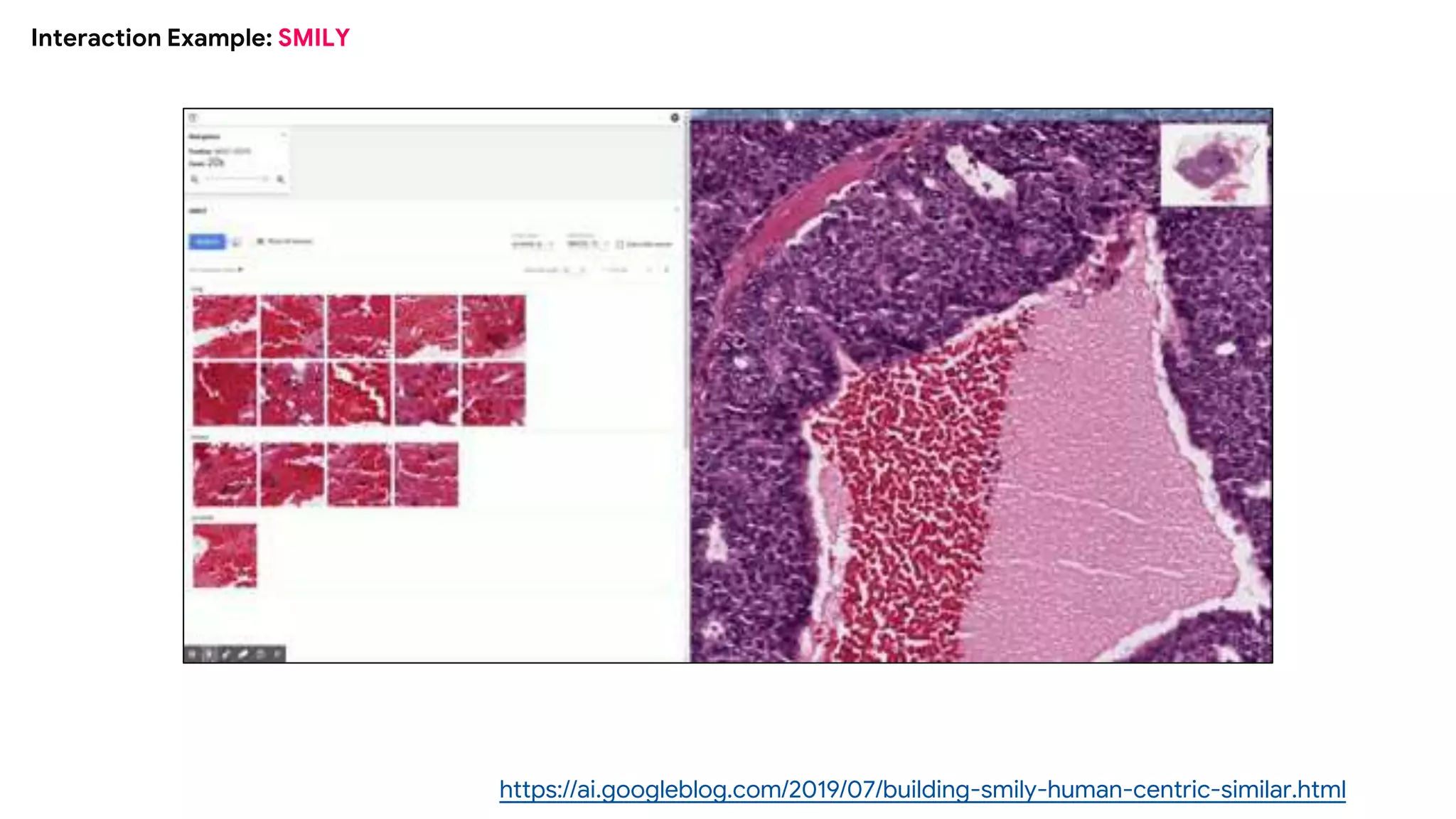

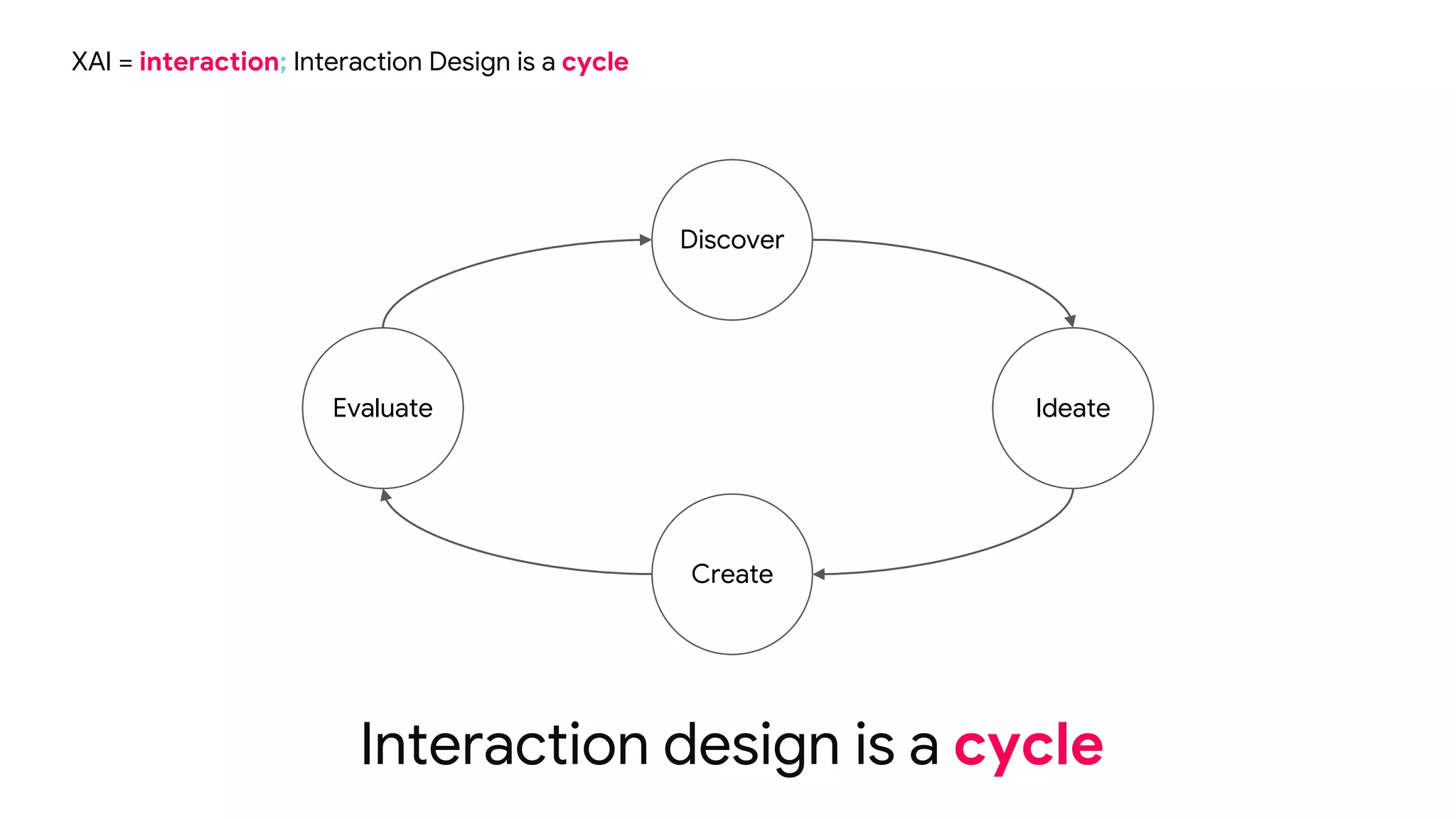

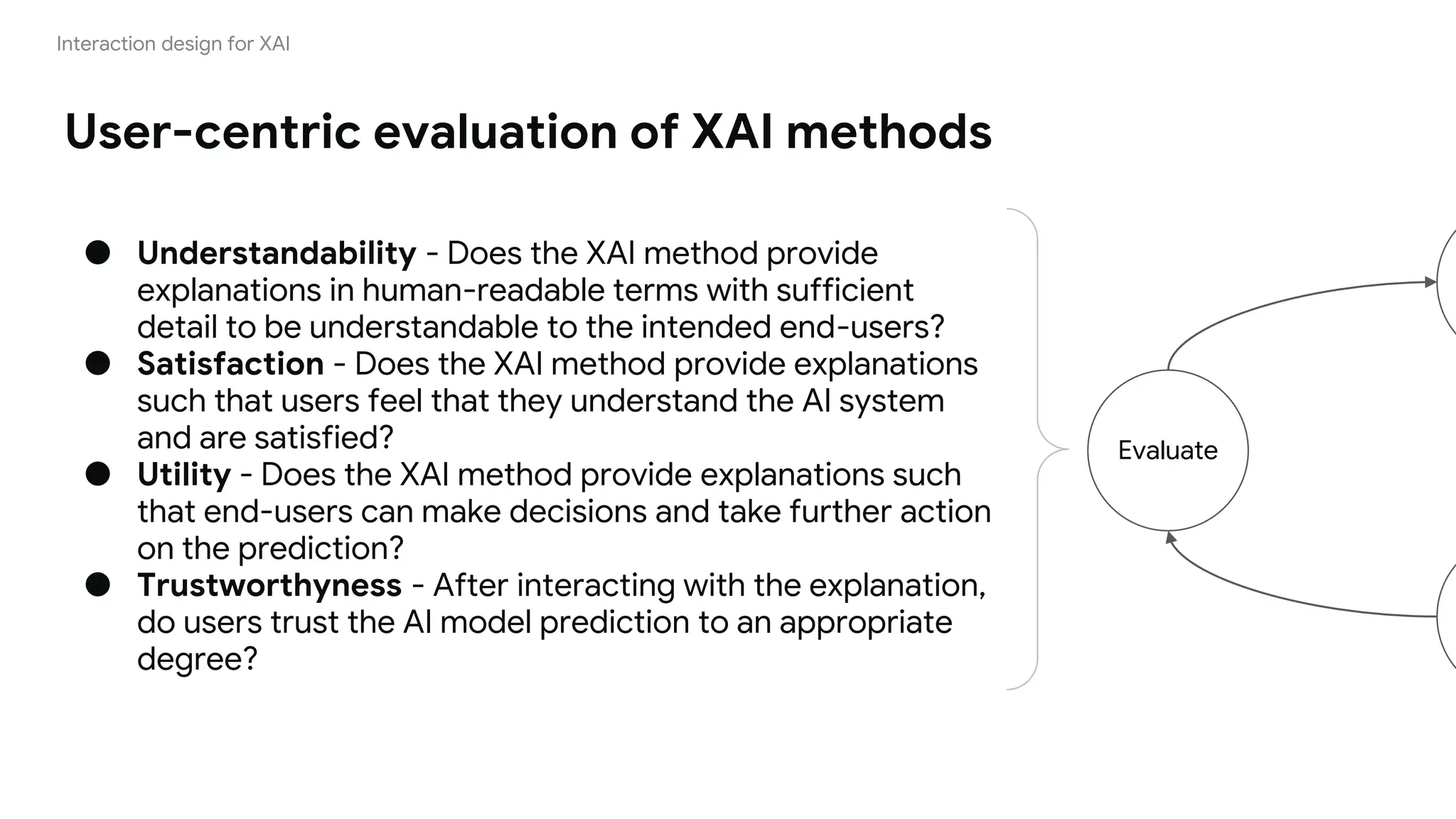

The document discusses why explainable artificial intelligence (XAI) matters for machine learning (ML) adoption, addressing issues such as human biases and transparency in decision-making. It highlights that different audiences need different kinds of model explanations, emphasizes designing user-centric explanations, and presents interactive XAI examples intended to improve understanding and trust. The author, Meg Kurdziolek, provides resources and insights on optimizing XAI for better user experience and utility.