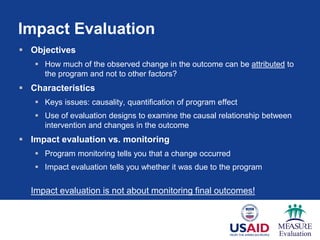

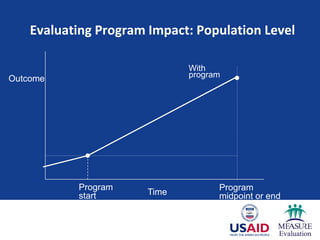

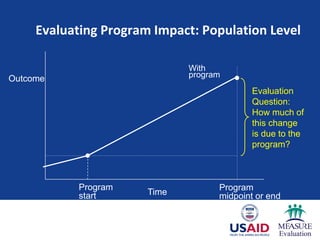

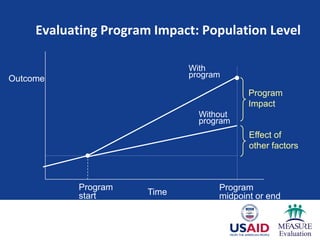

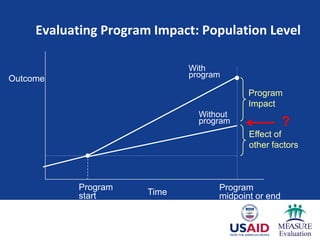

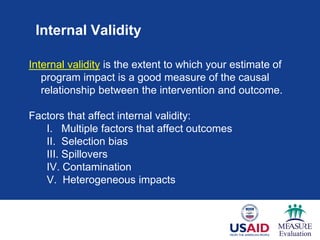

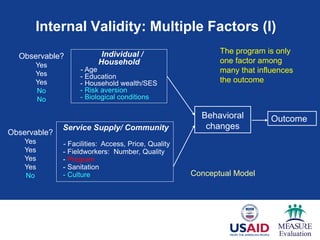

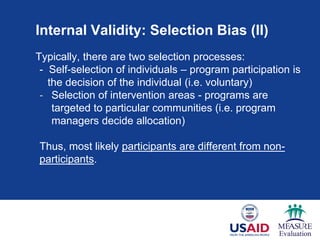

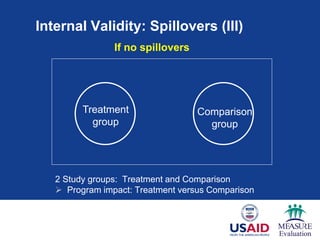

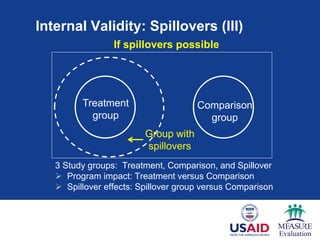

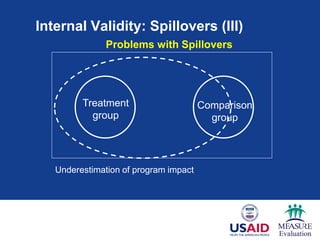

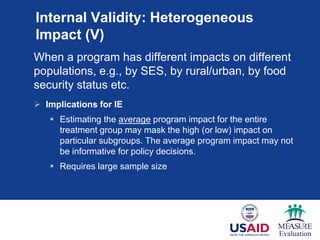

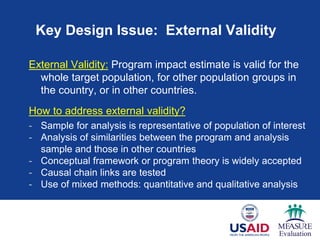

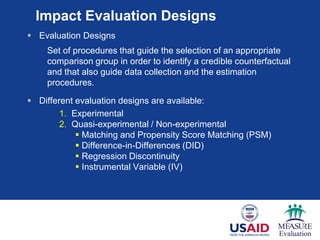

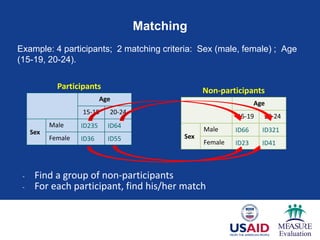

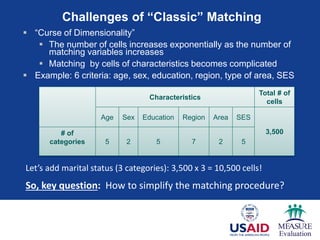

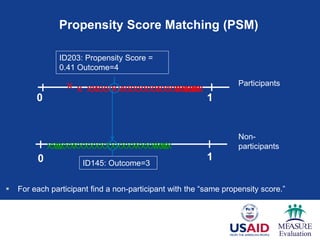

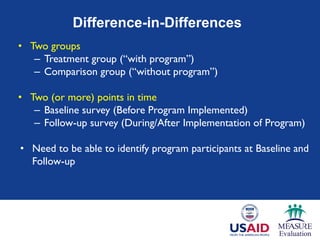

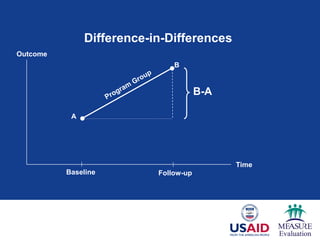

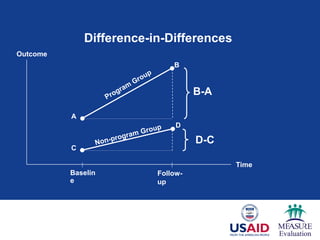

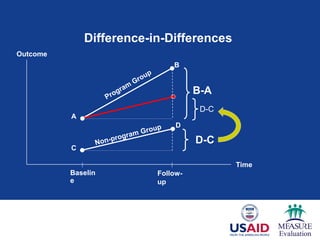

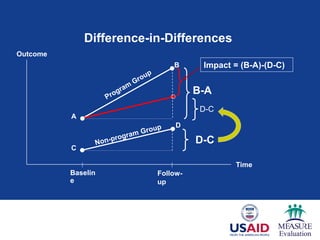

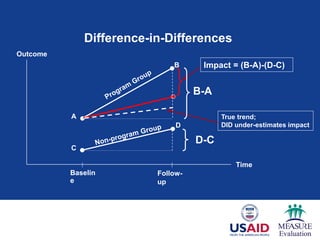

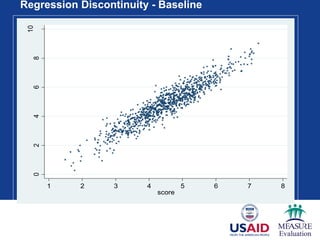

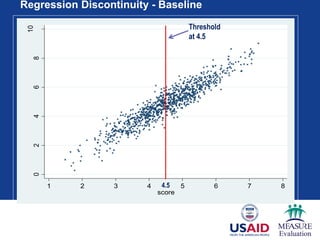

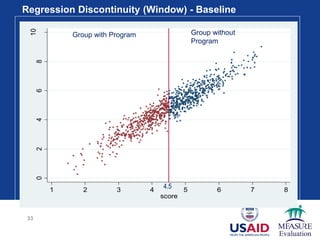

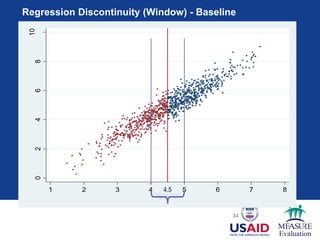

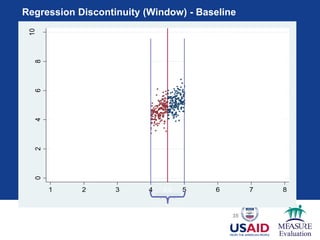

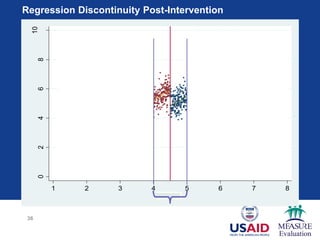

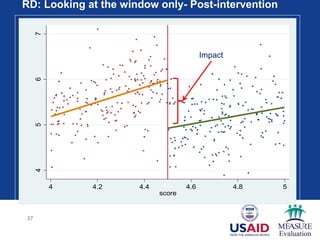

This document summarizes a webinar on the principles of impact evaluation within USAID's framework, which treats evaluation as the systematic collection and analysis of information to improve program effectiveness and accountability. It covers evaluation types; key concepts such as causality, internal validity, and external validity; and methodologies for conducting evaluations, including experimental and quasi-experimental designs. It also highlights the distinction between impact evaluation and performance monitoring, along with challenges such as bias and spillover effects.
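
The distinction between performance monitoring and impact evaluation can be illustrated with a small numerical sketch. The figures below are hypothetical outcome scores invented for illustration, not data from the webinar, and the function names are assumptions. The sketch compares a simple before/after change in the program group (the kind of trend performance monitoring tracks) with a difference-in-differences estimate, one common quasi-experimental approach that subtracts the change observed in a comparison group.

```python
from statistics import mean


def before_after_change(before, after):
    """Change in the average outcome for one group over time."""
    return mean(after) - mean(before)


def difference_in_differences(treated_before, treated_after,
                              control_before, control_after):
    """Change in the treated group minus change in the comparison group.

    Assumes both groups would have followed the same trend without the
    program (the parallel-trends assumption).
    """
    treated_change = before_after_change(treated_before, treated_after)
    control_change = before_after_change(control_before, control_after)
    return treated_change - control_change


# Hypothetical outcome scores (illustrative only).
treated_before = [40, 42, 38, 44]
treated_after = [52, 55, 49, 56]
control_before = [41, 39, 43, 41]
control_after = [46, 45, 48, 45]

# Before/after change in the program group alone.
naive_change = before_after_change(treated_before, treated_after)

# Estimated impact after netting out the comparison group's change.
did_estimate = difference_in_differences(
    treated_before, treated_after, control_before, control_after
)

print("Before/after change in program group:", naive_change)
print("Difference-in-differences estimate:", did_estimate)
```

In this made-up example the program group improves by 12 points, but the comparison group also improves by 5, so the estimated impact attributable to the program is 7. The estimate is only as credible as the assumption that the two groups would have moved in parallel without the program; bias in how the comparison group is chosen, or spillover of program benefits into it, would distort the result.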