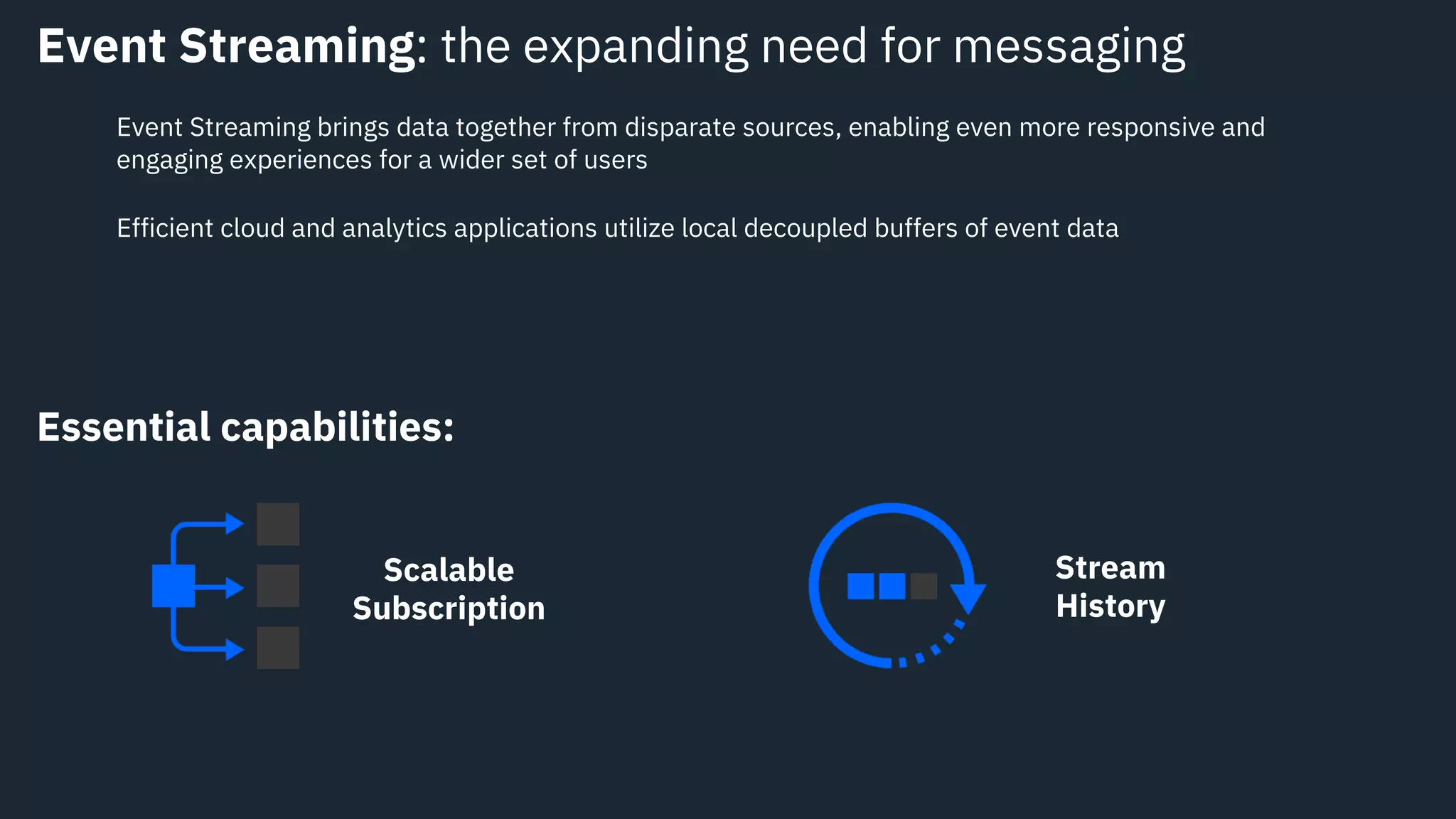

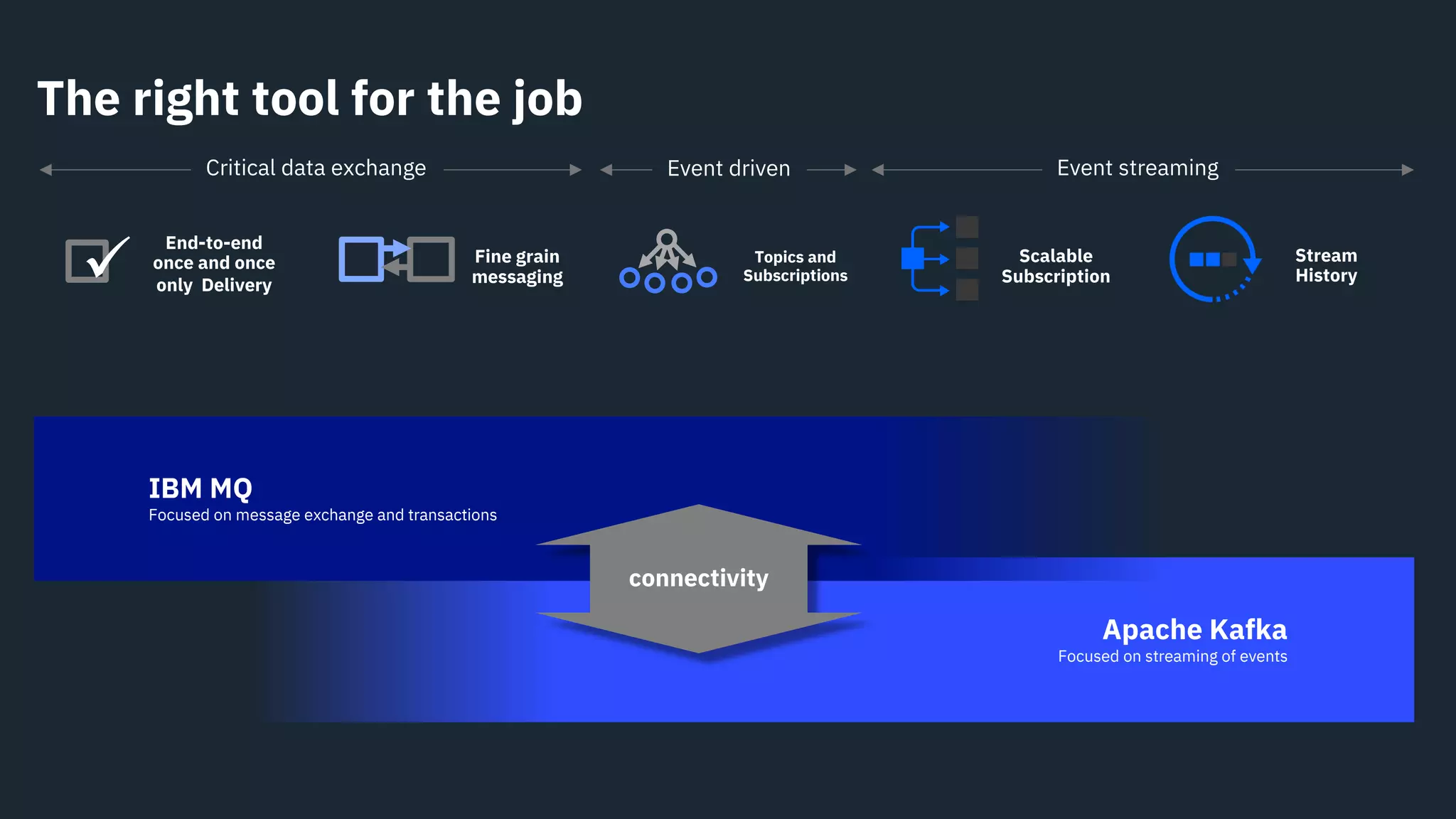

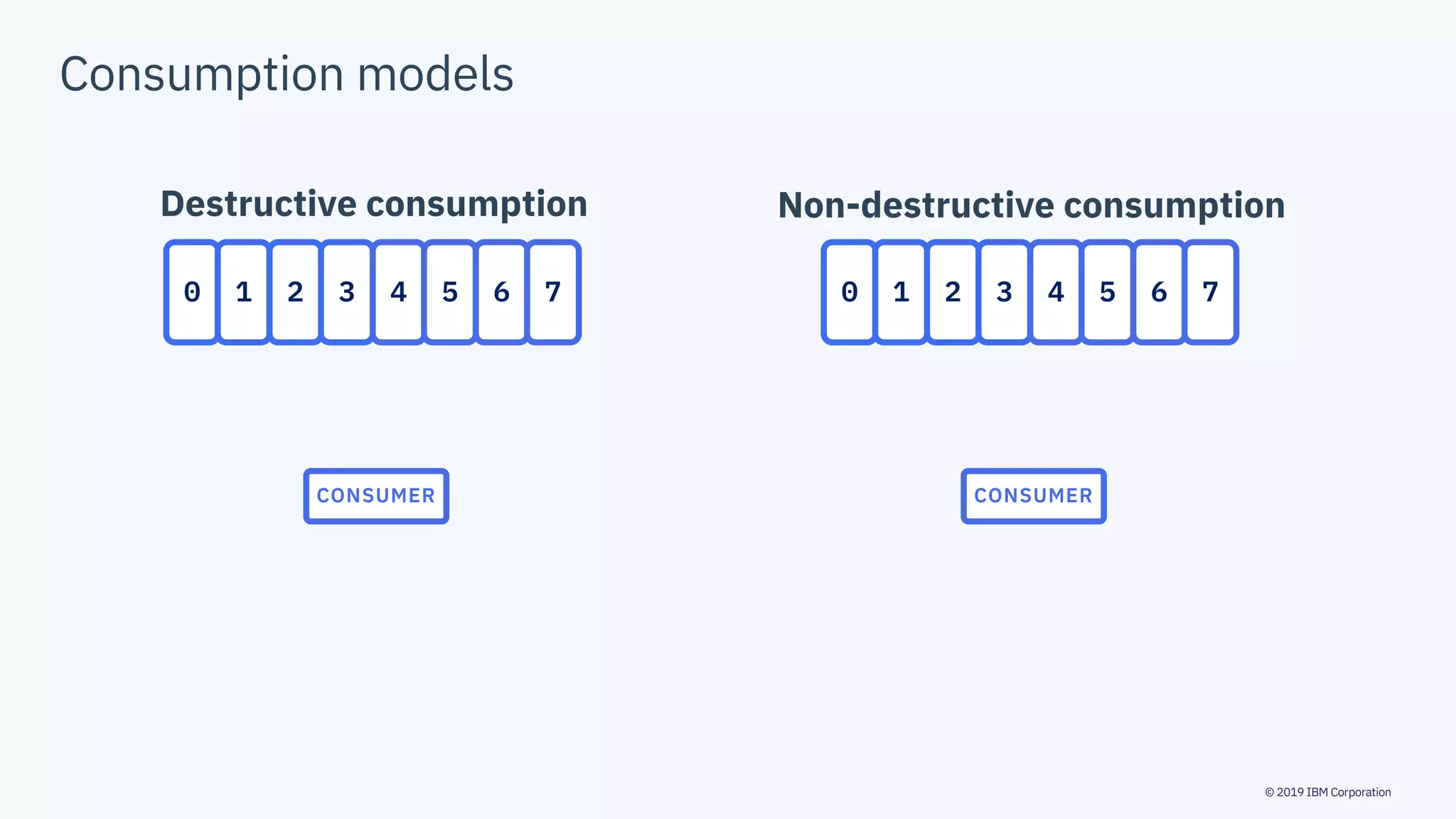

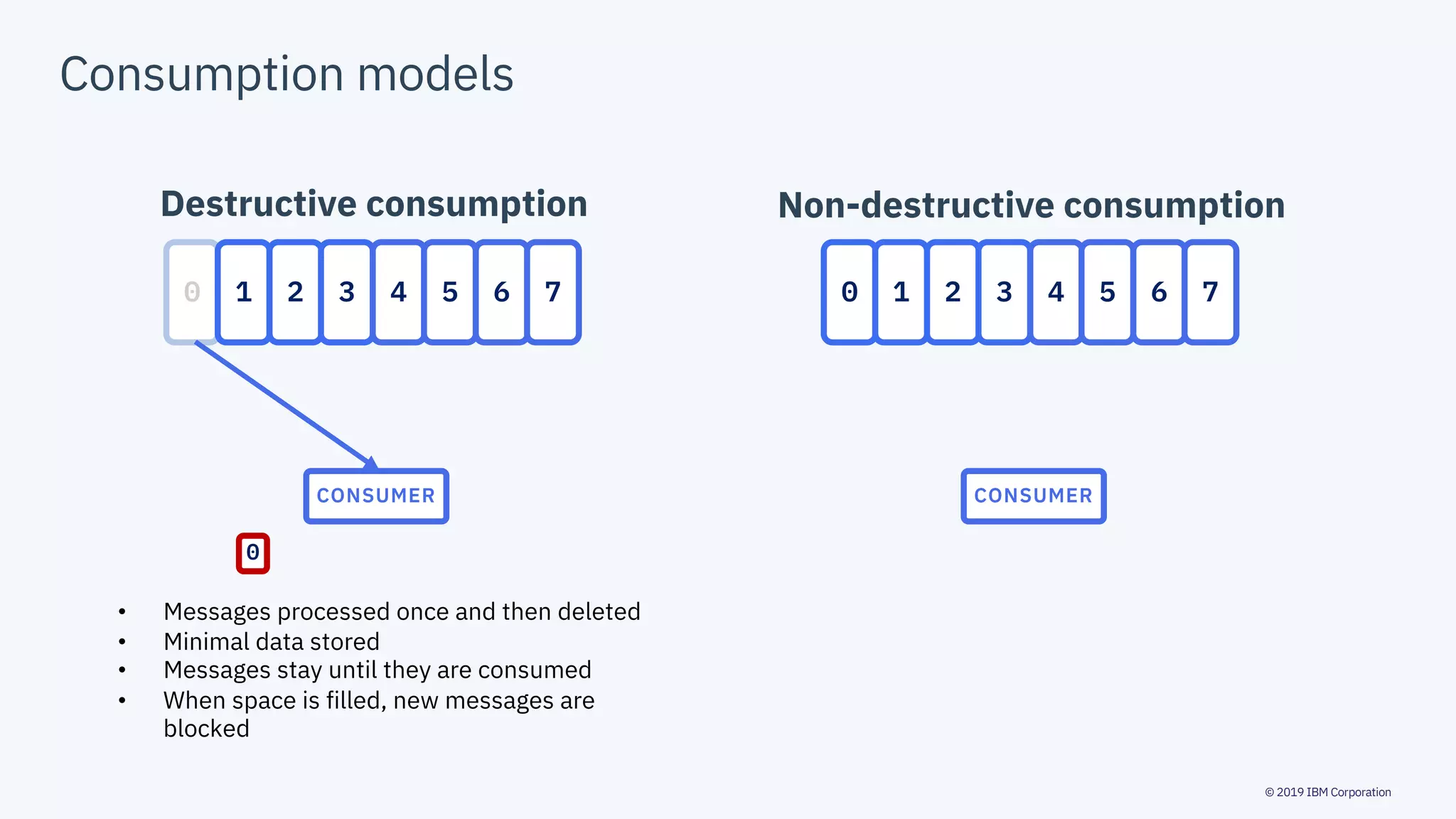

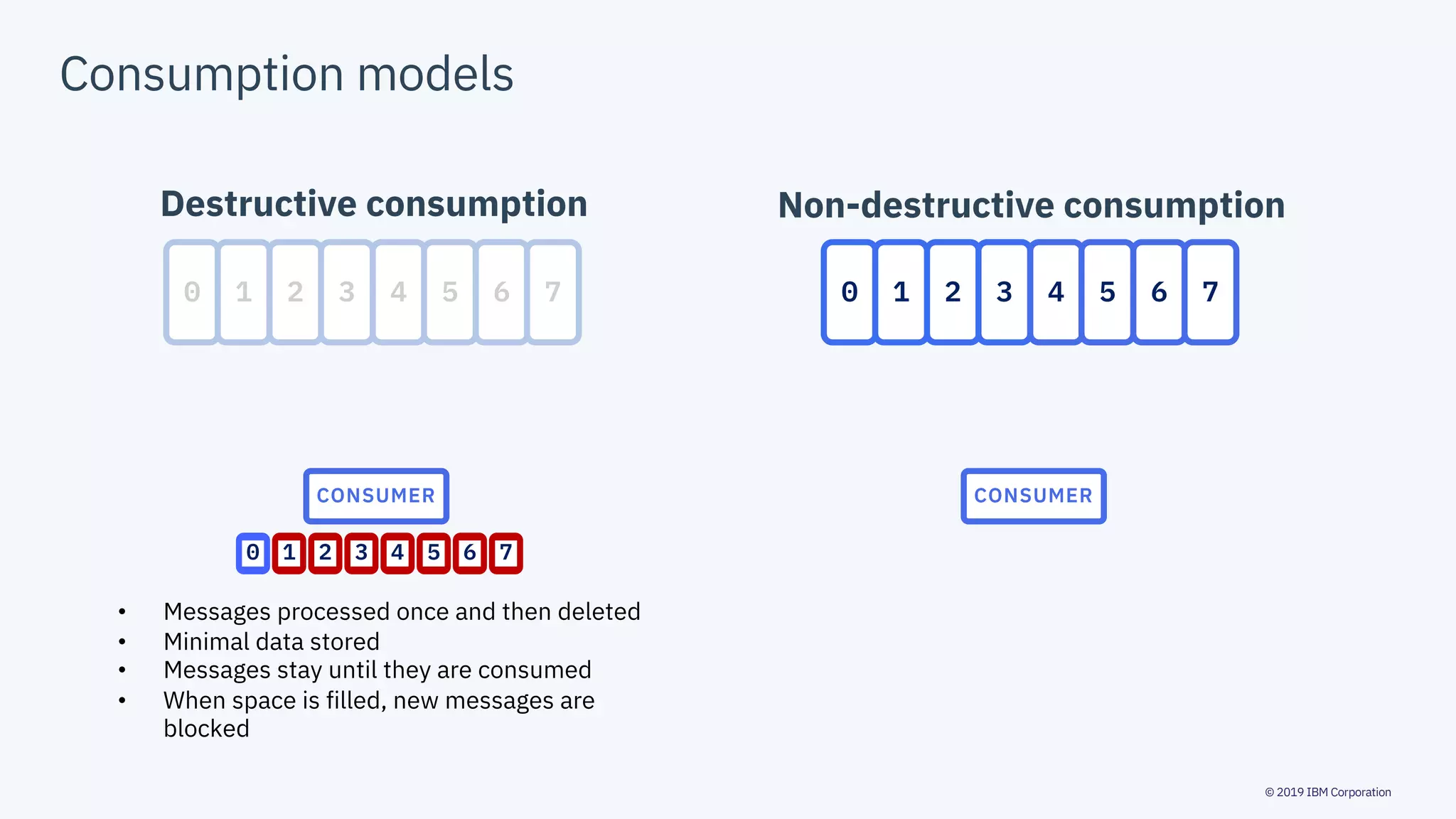

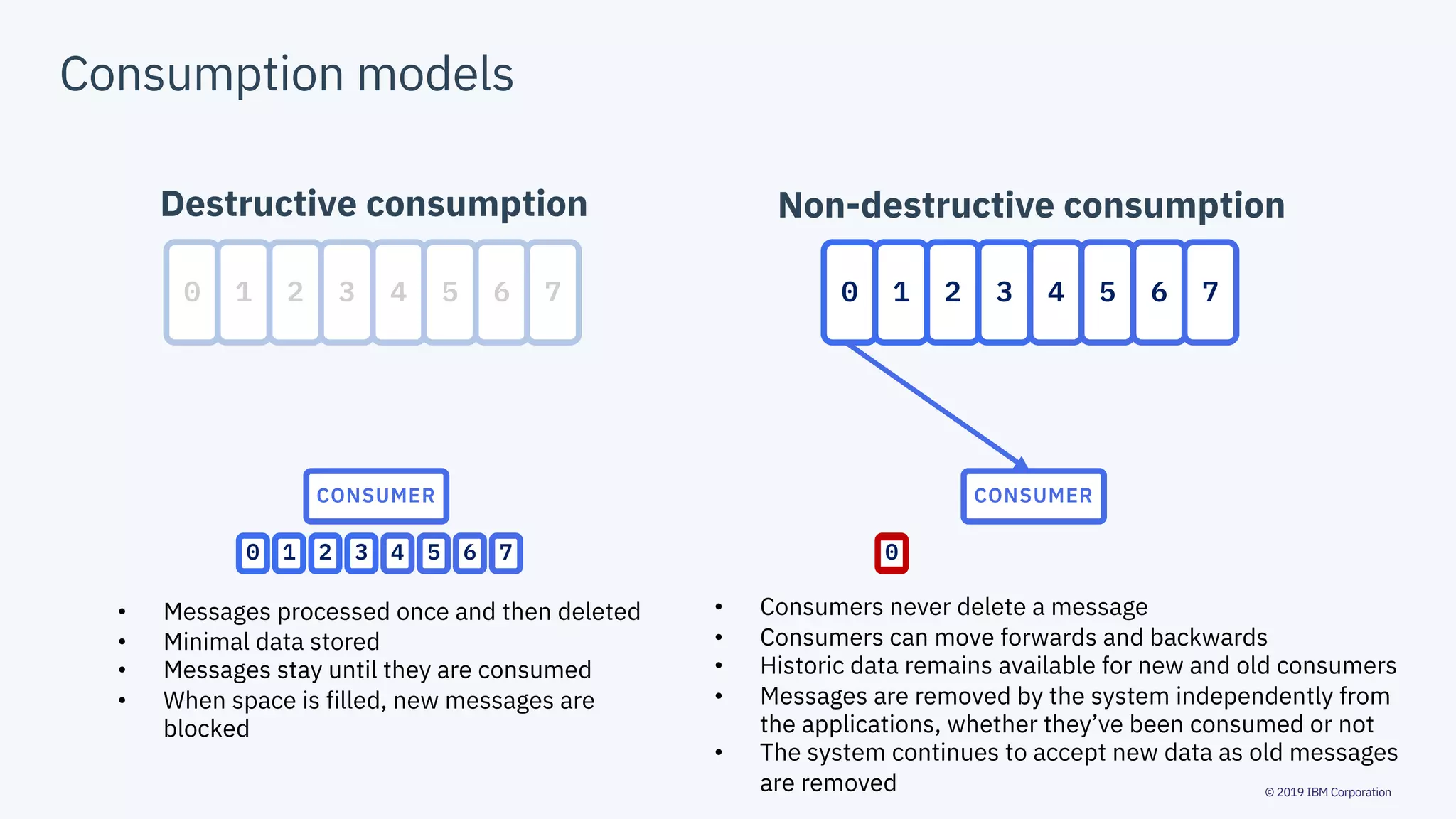

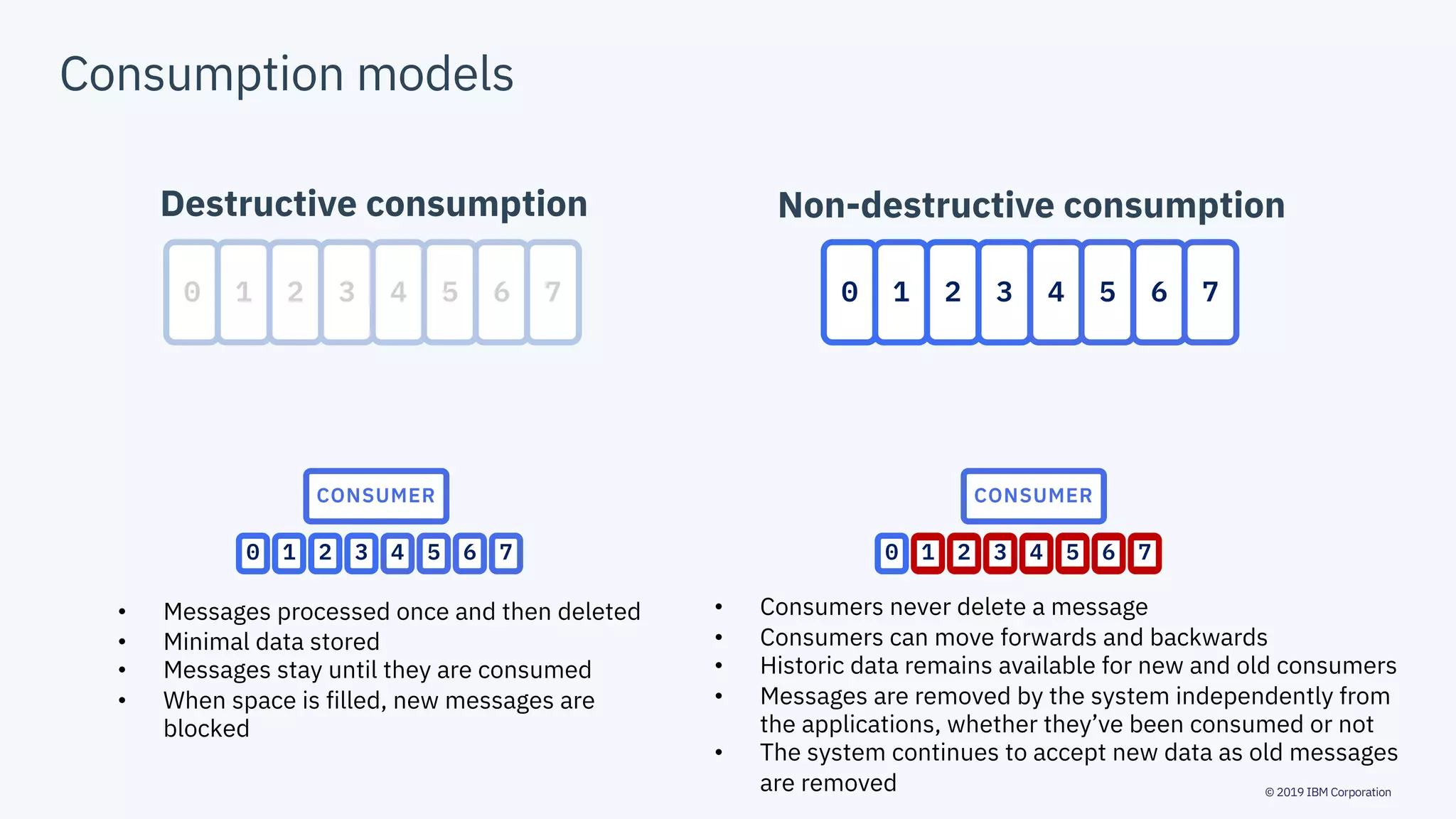

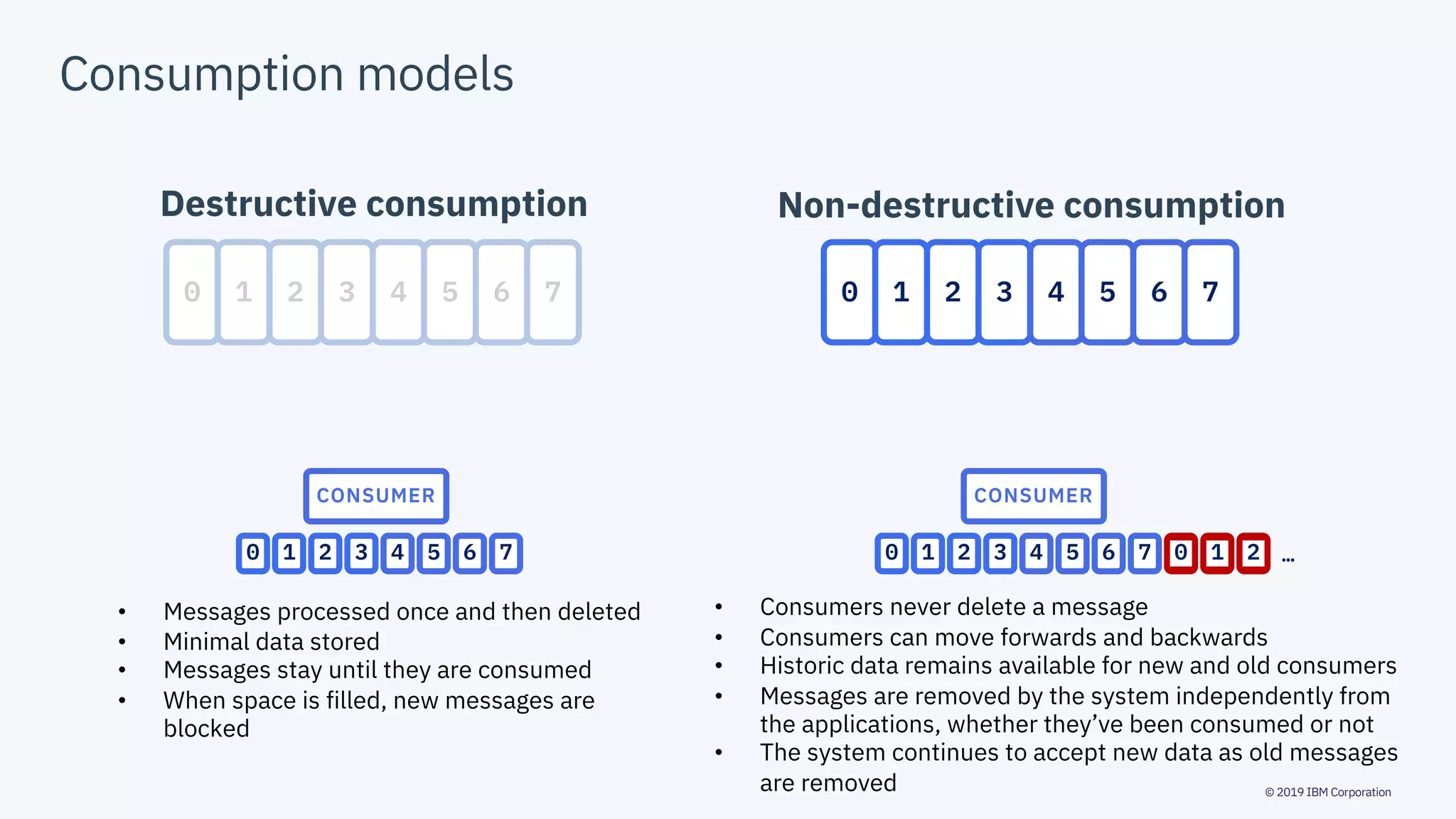

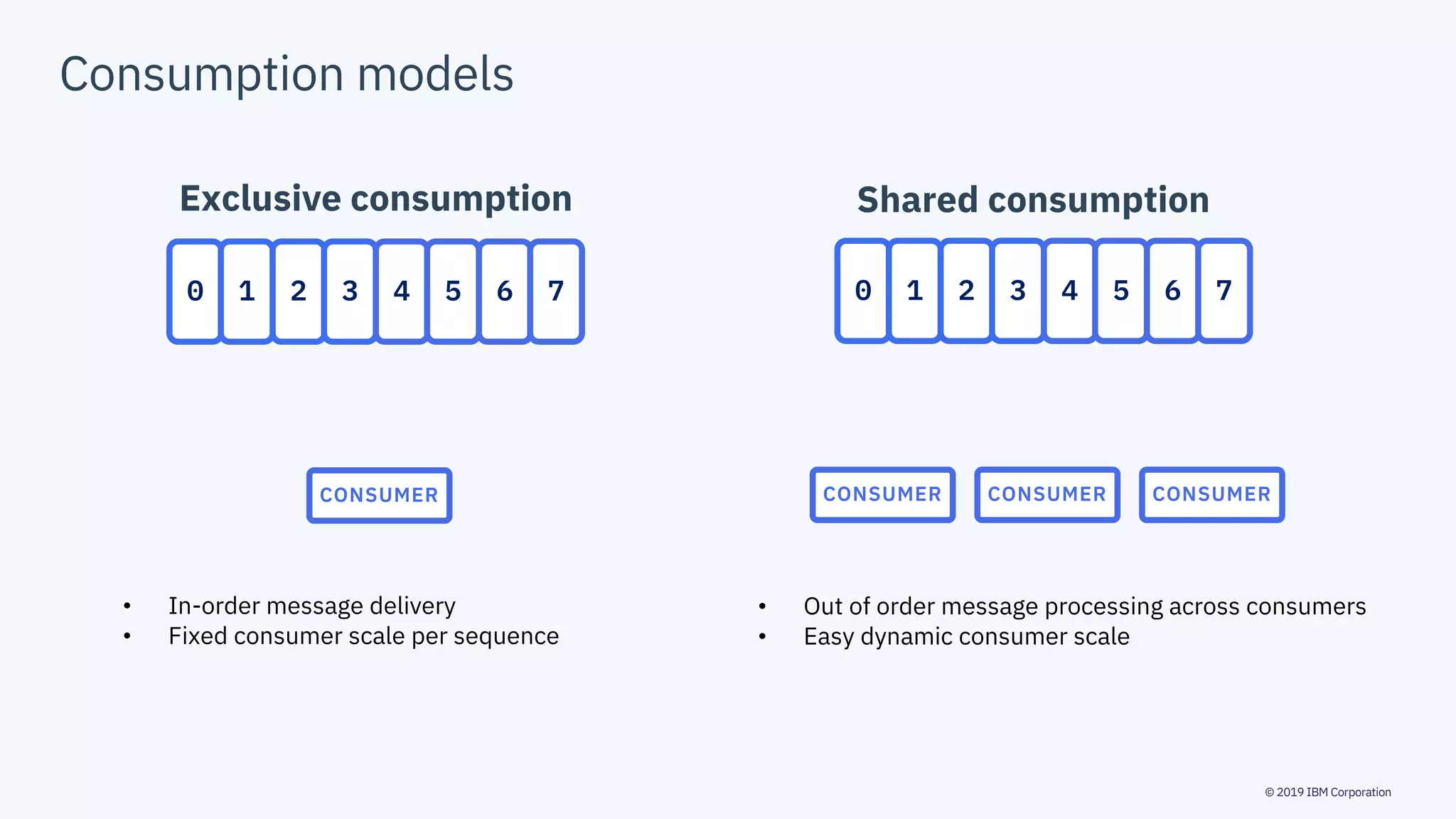

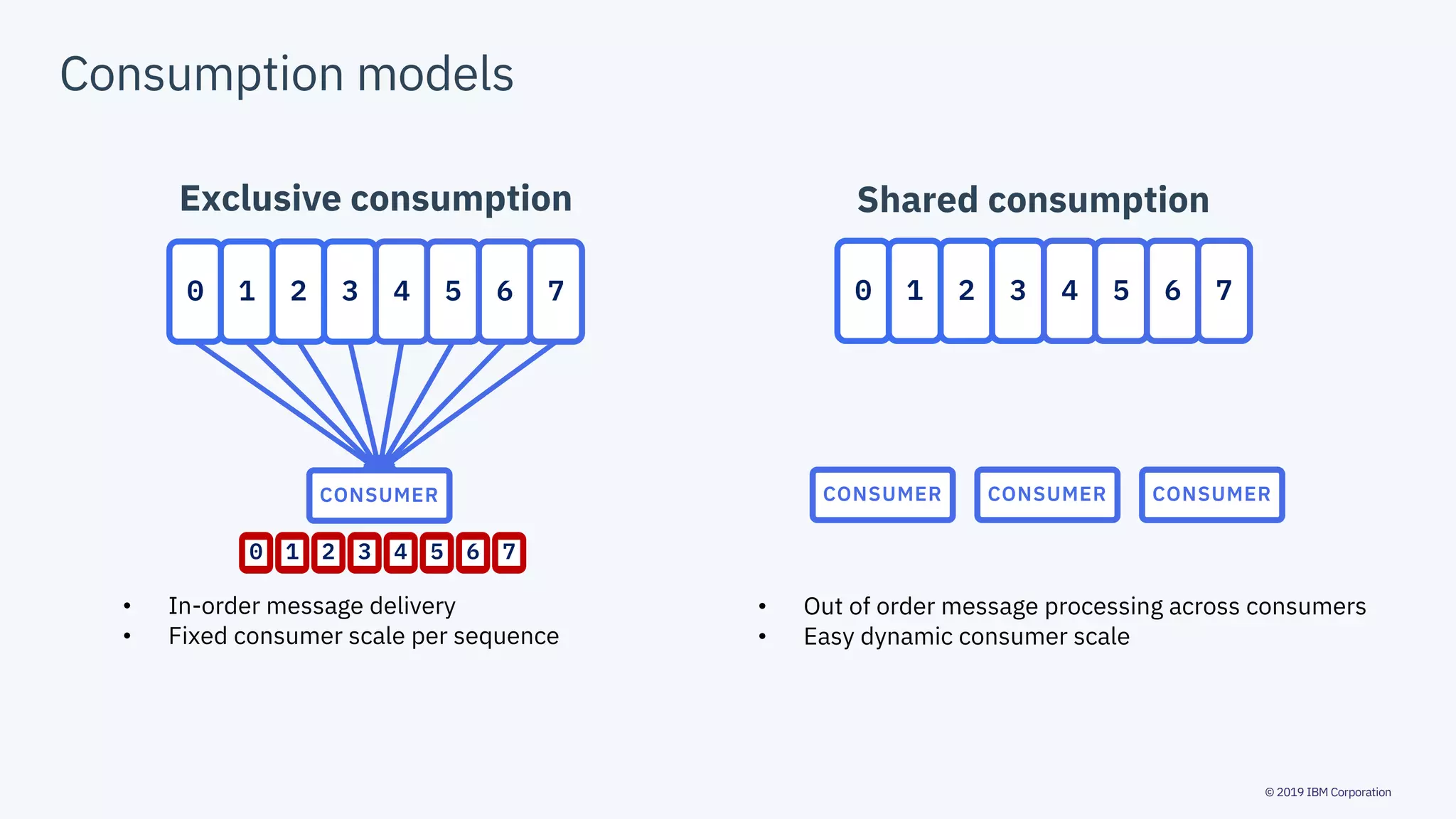

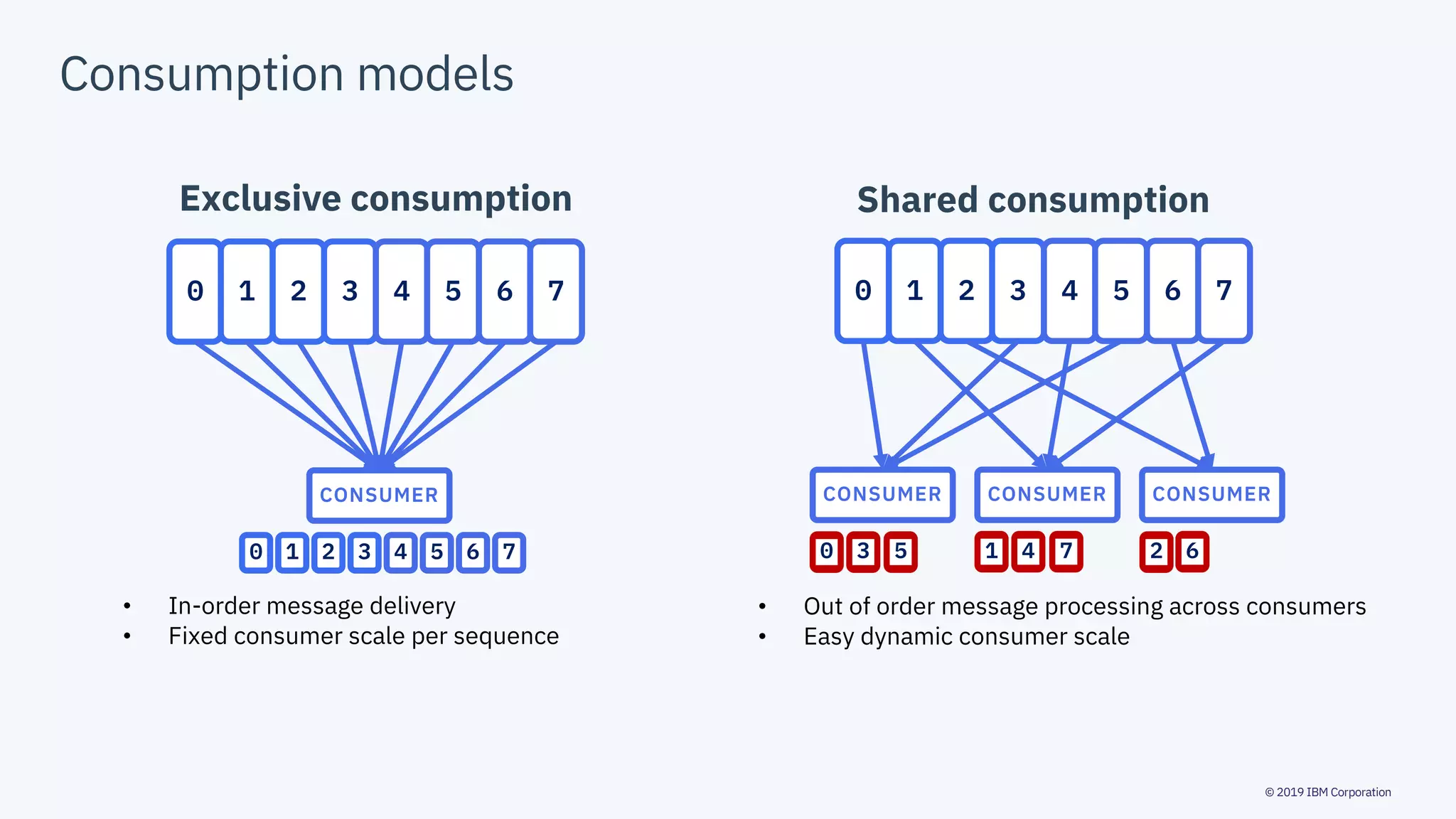

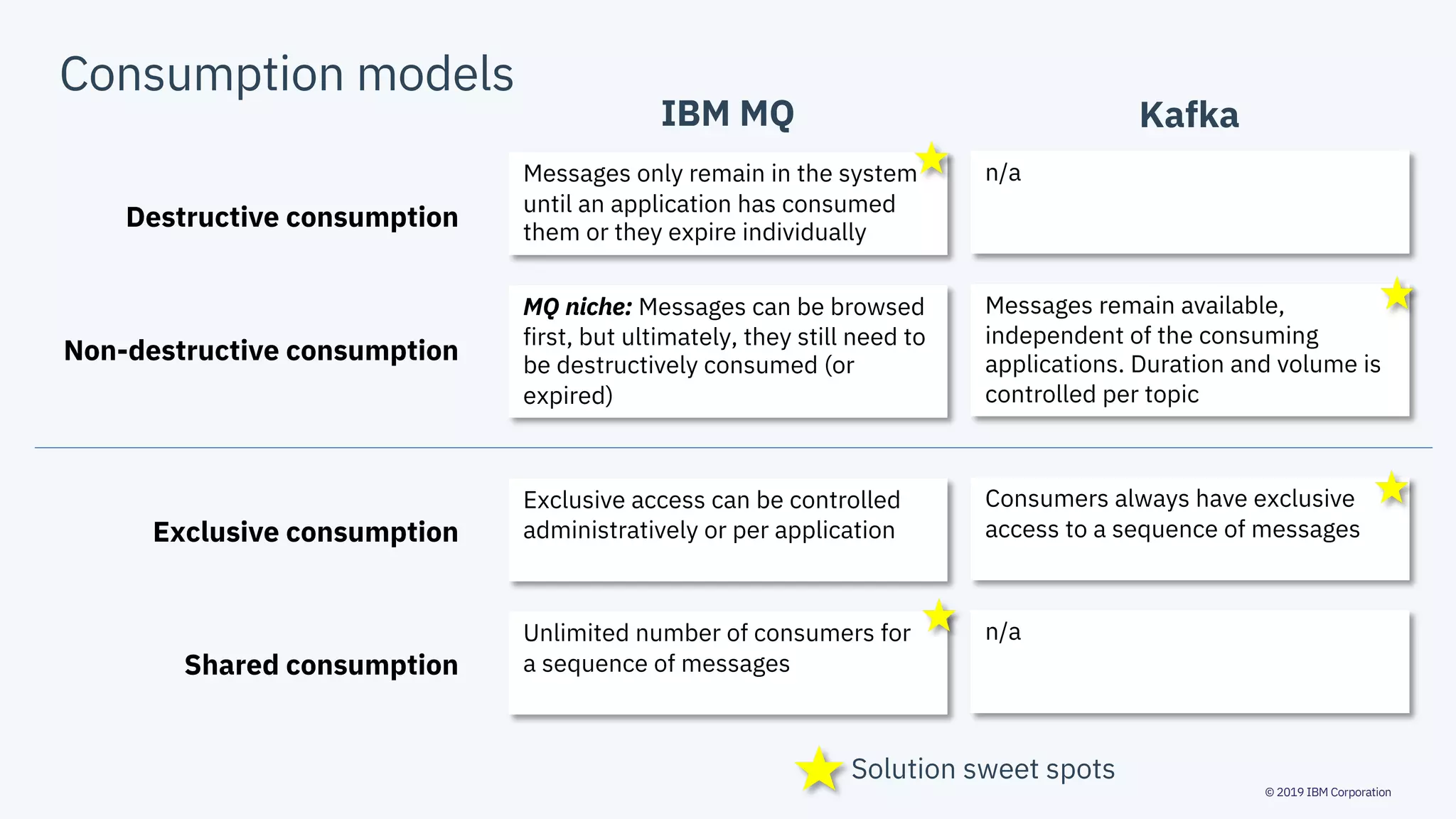

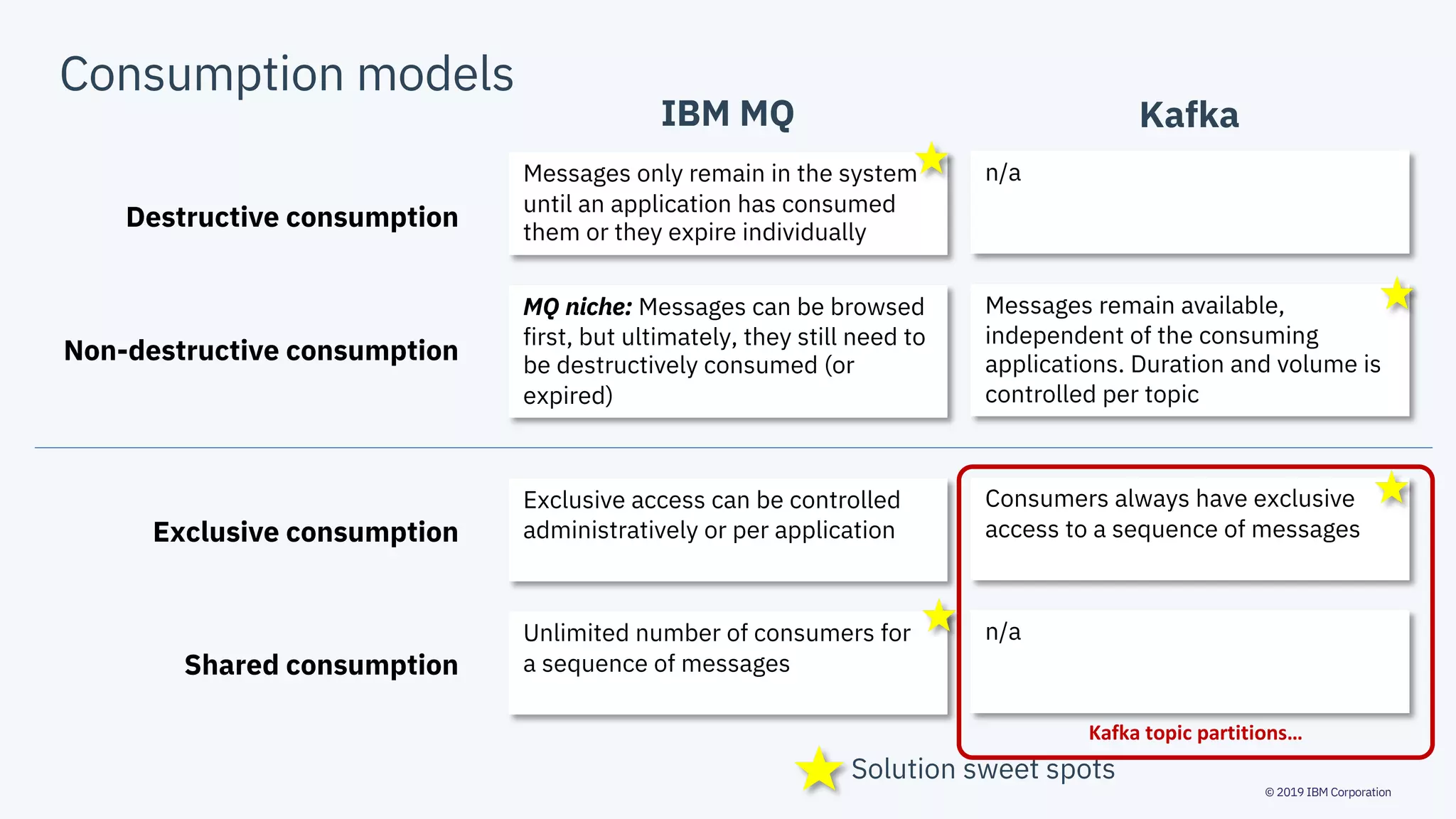

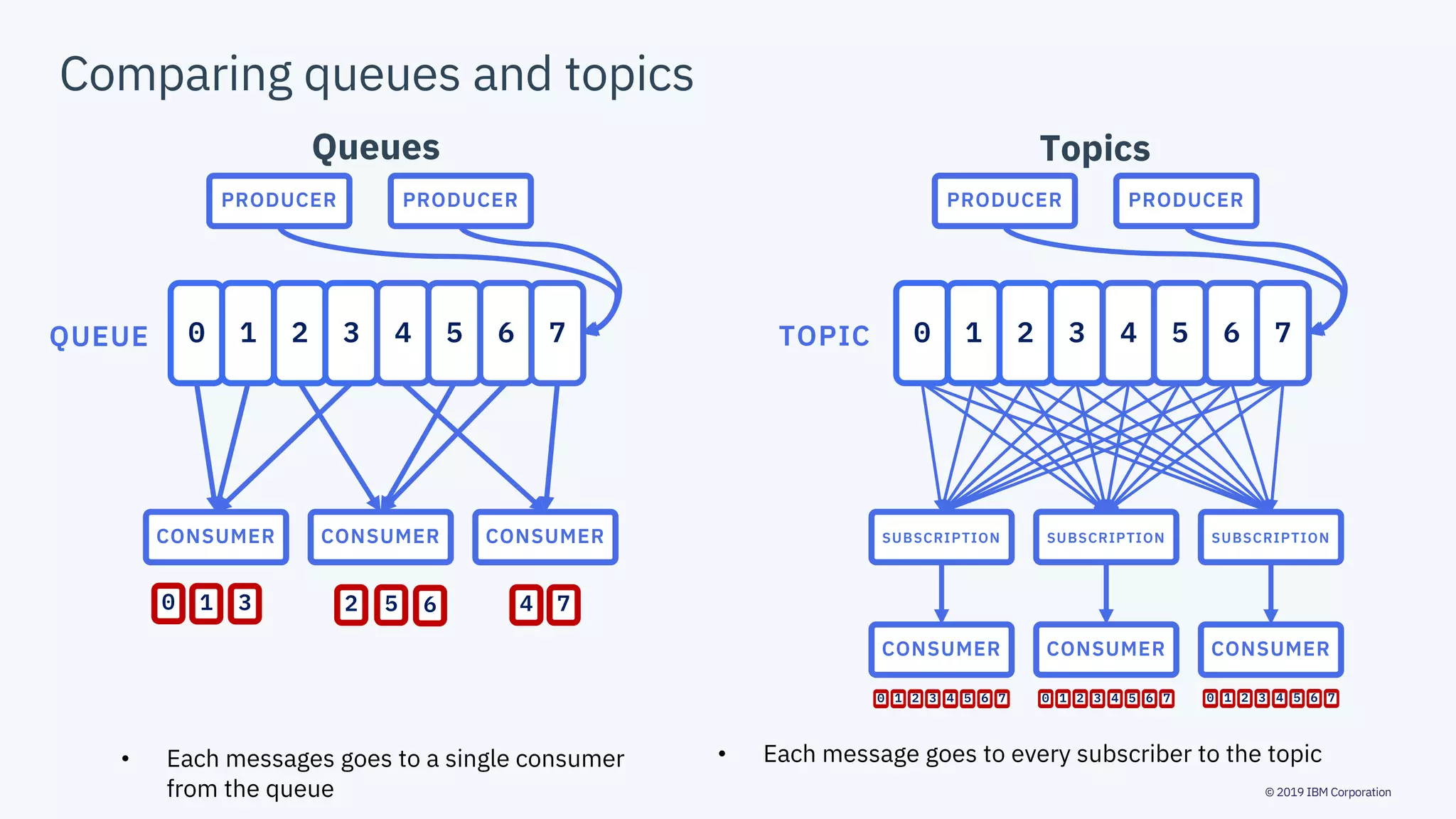

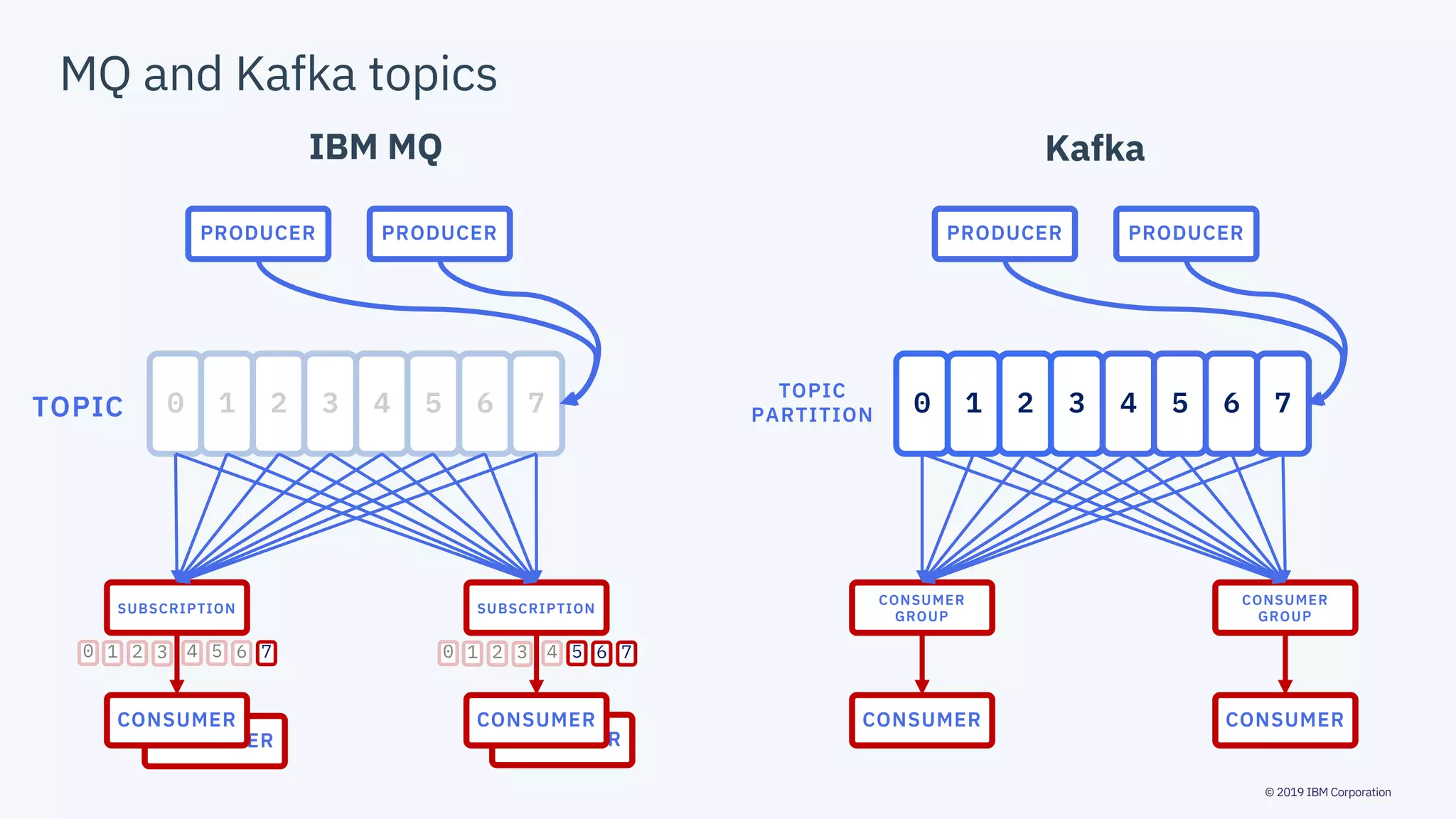

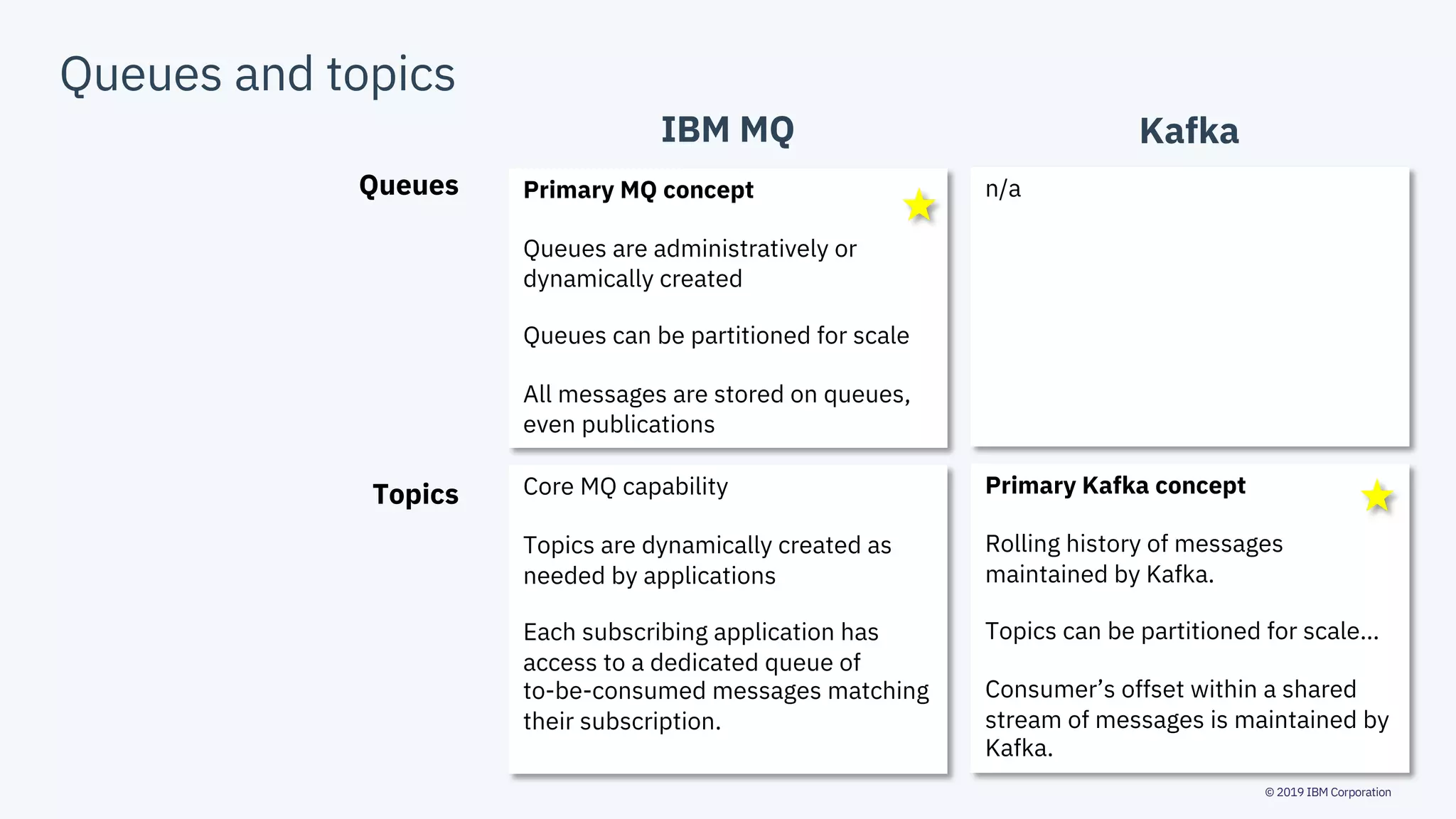

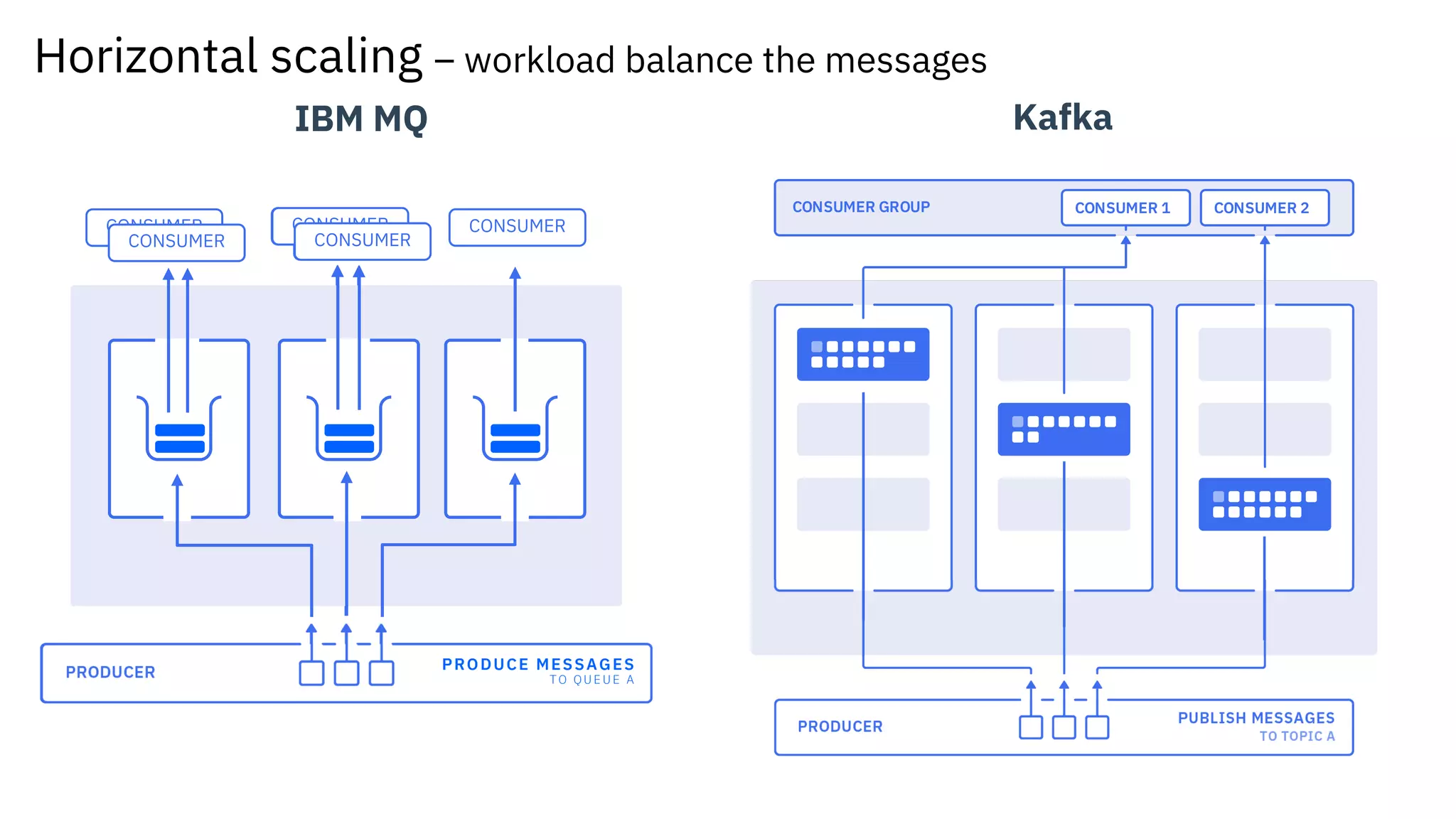

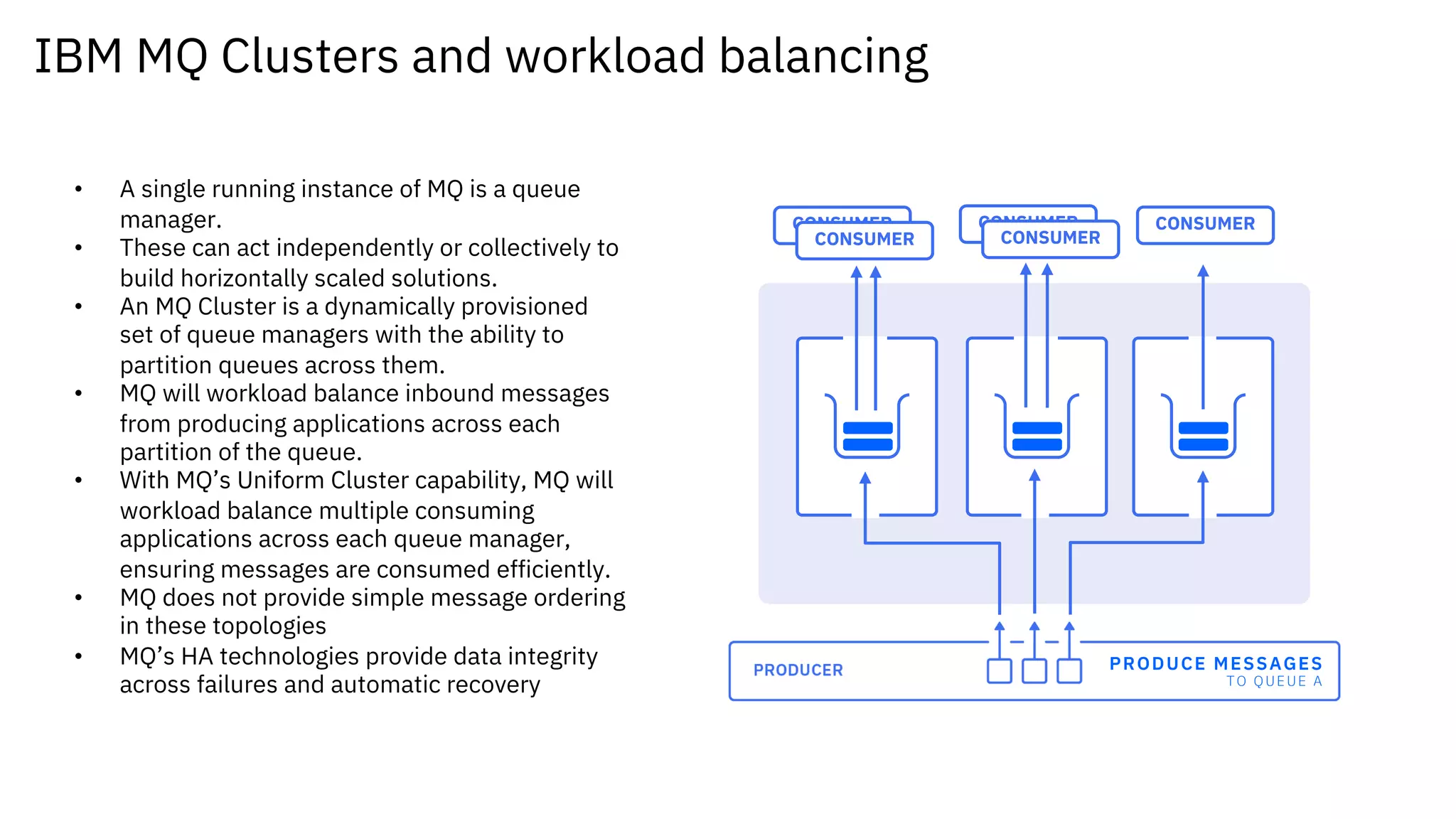

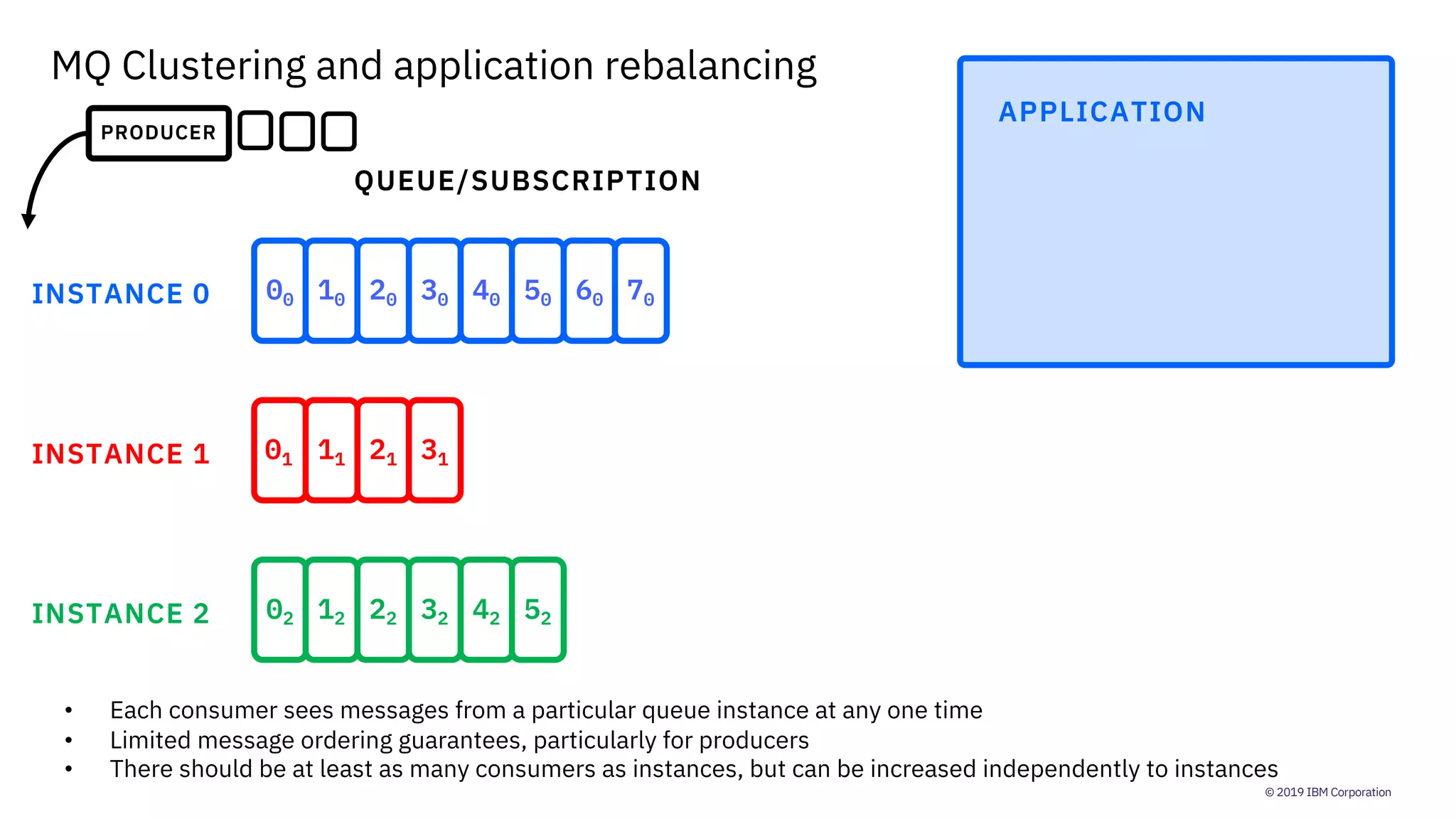

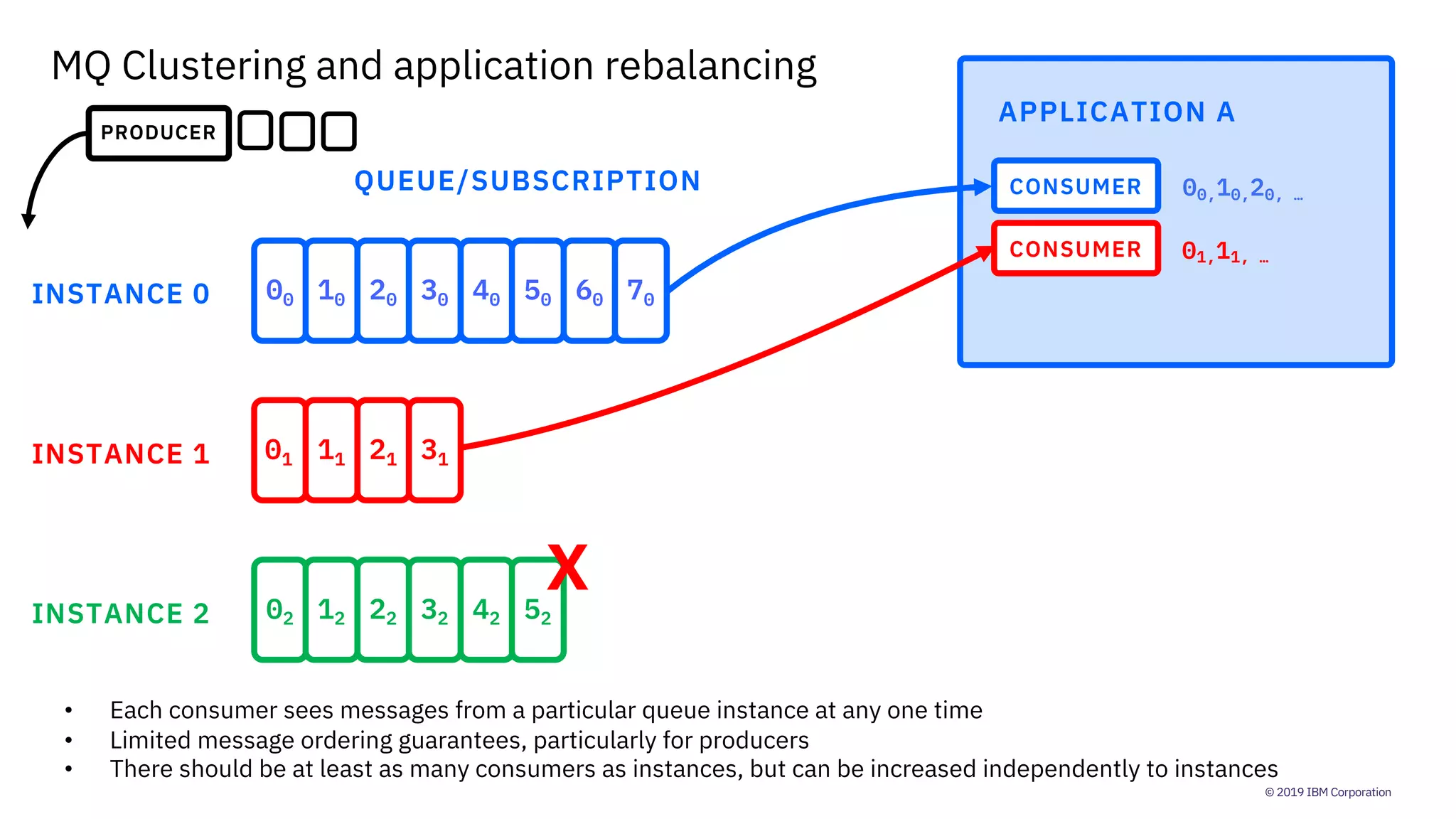

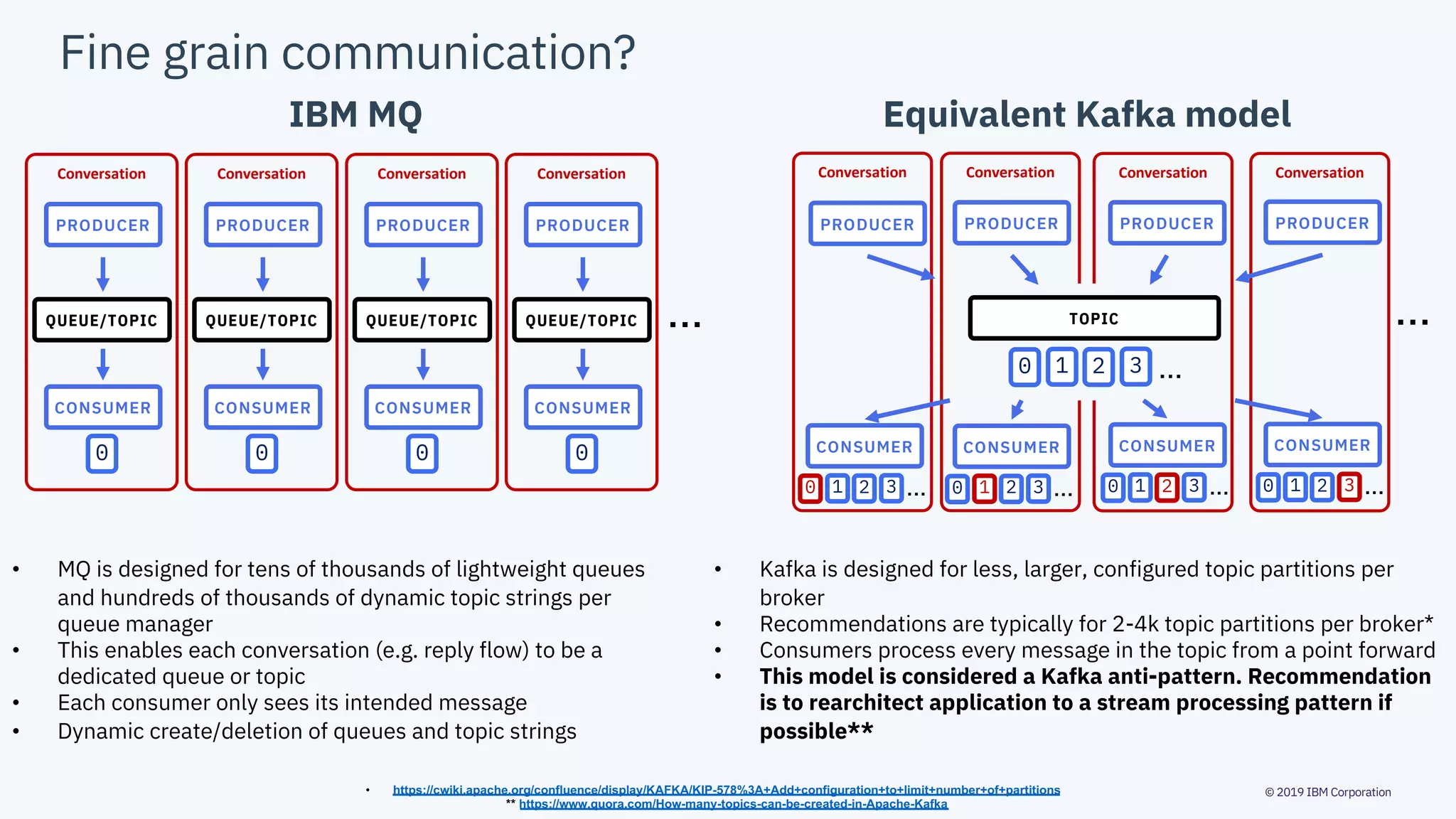

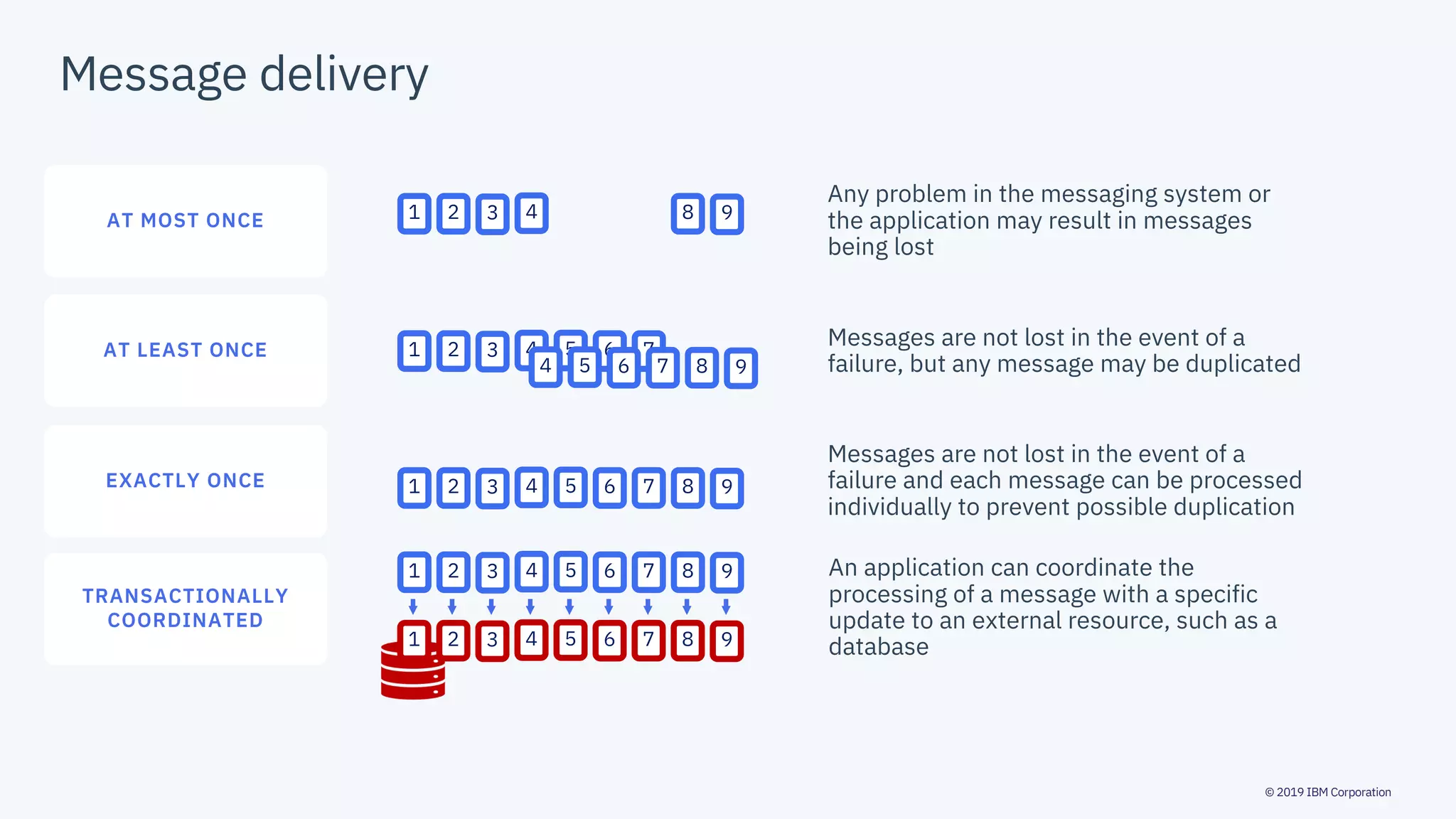

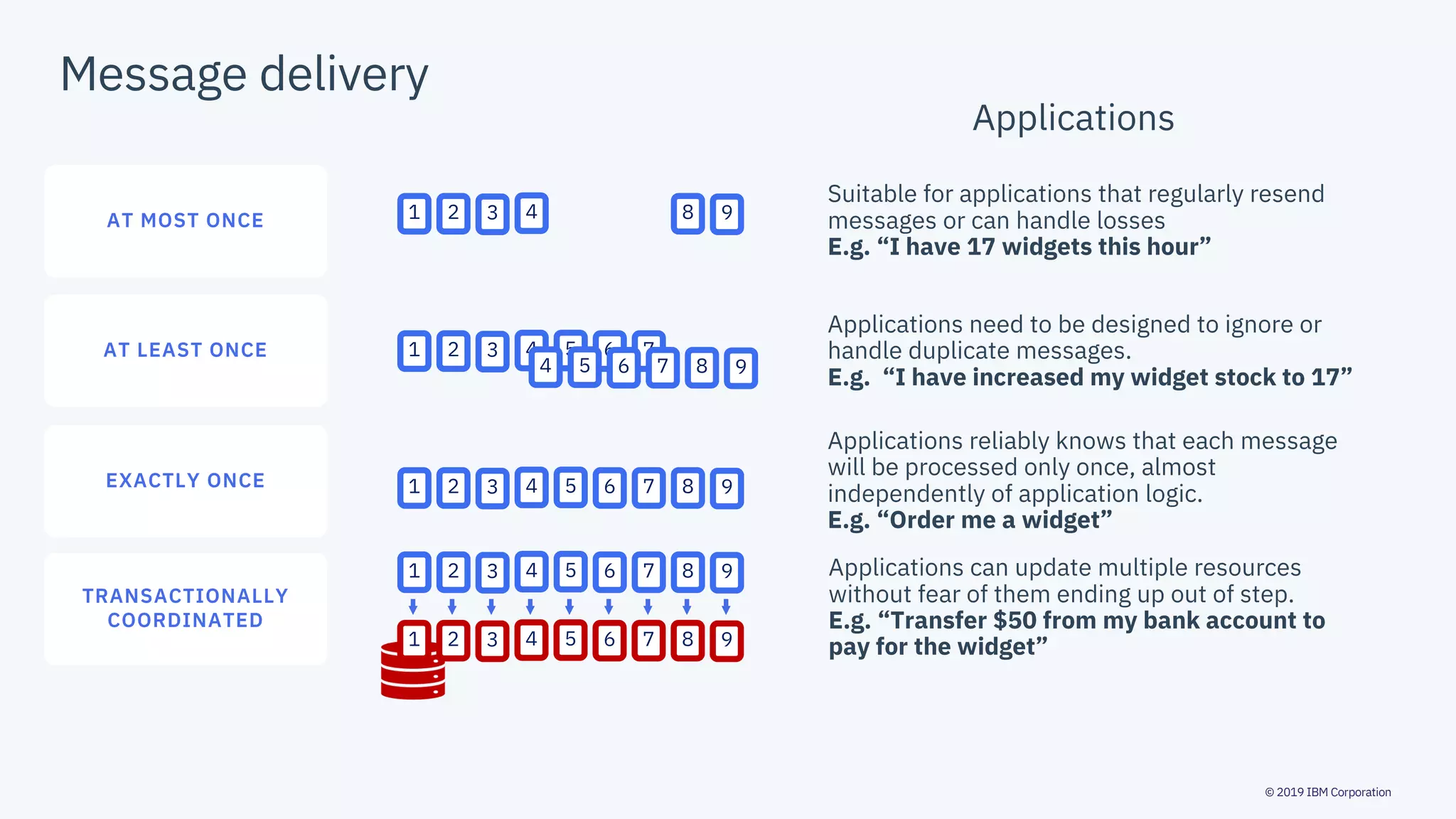

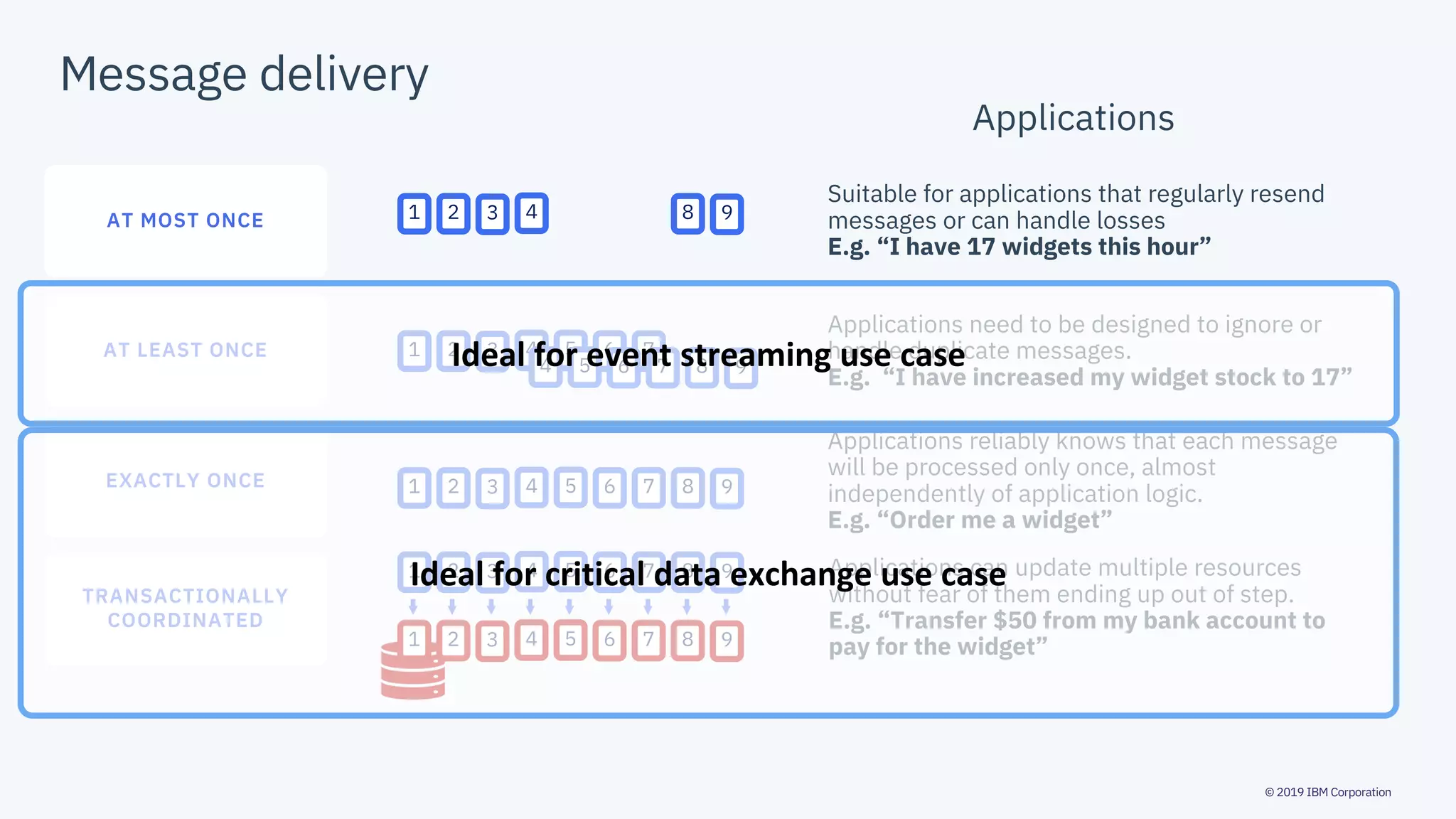

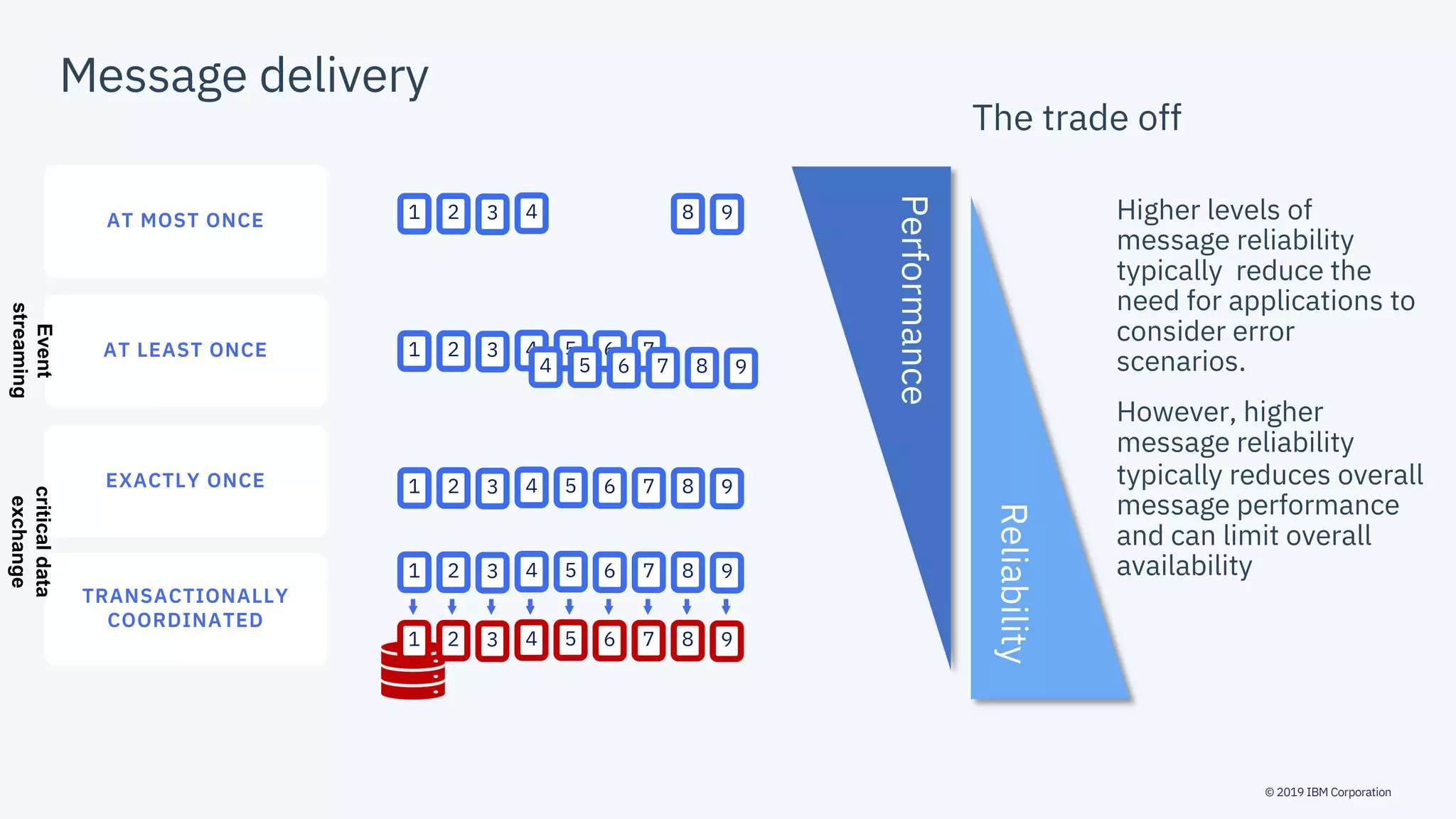

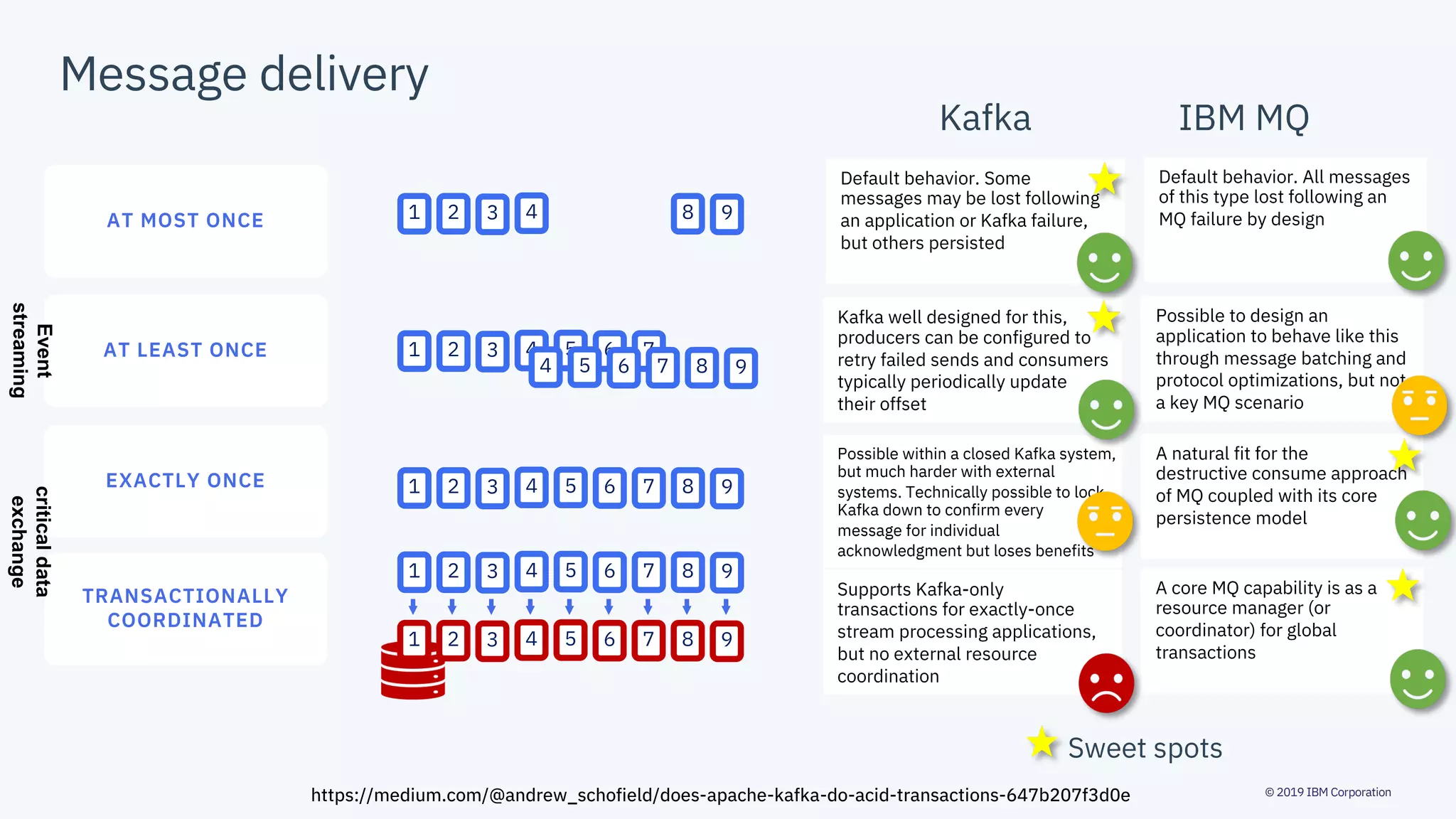

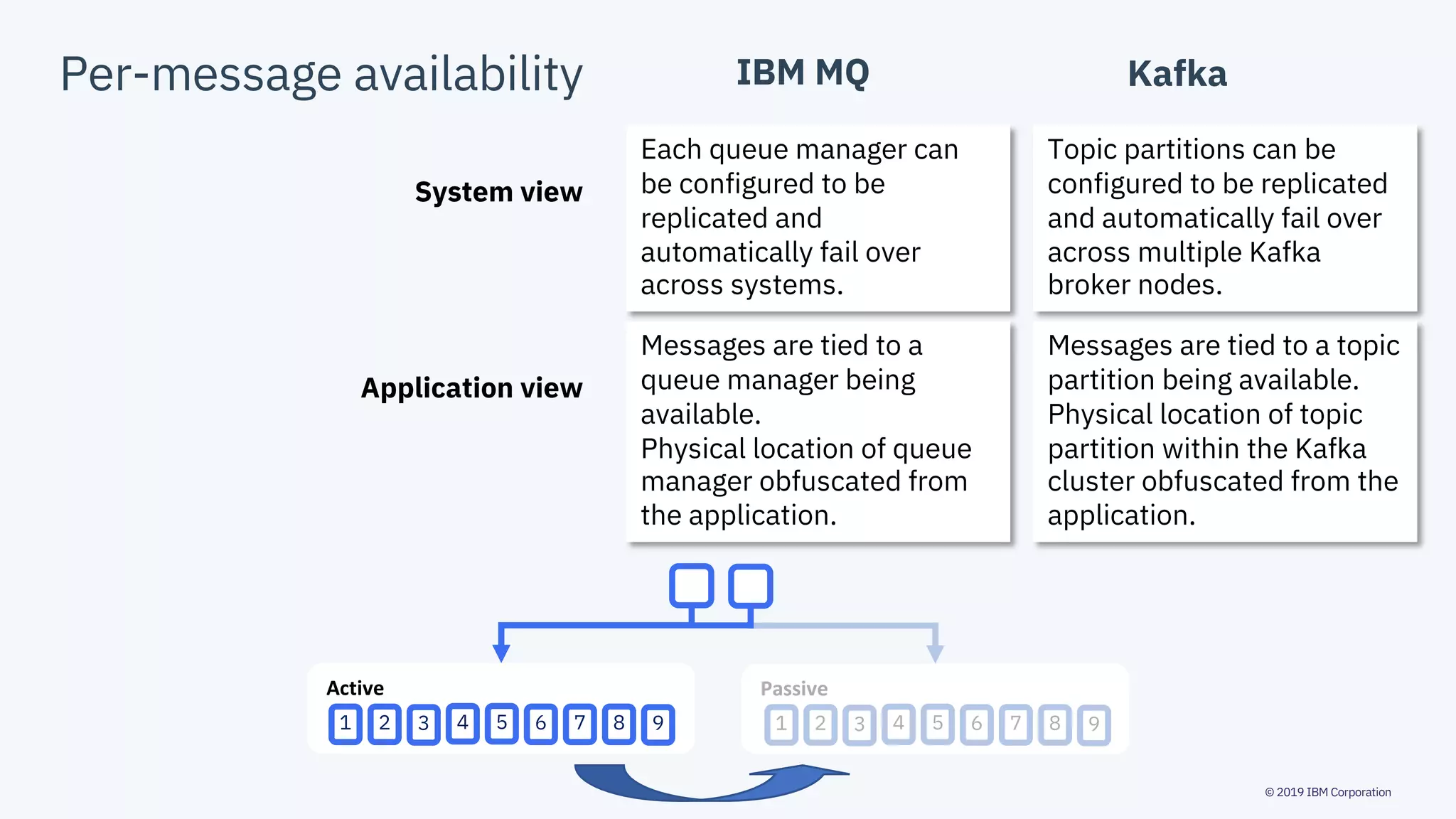

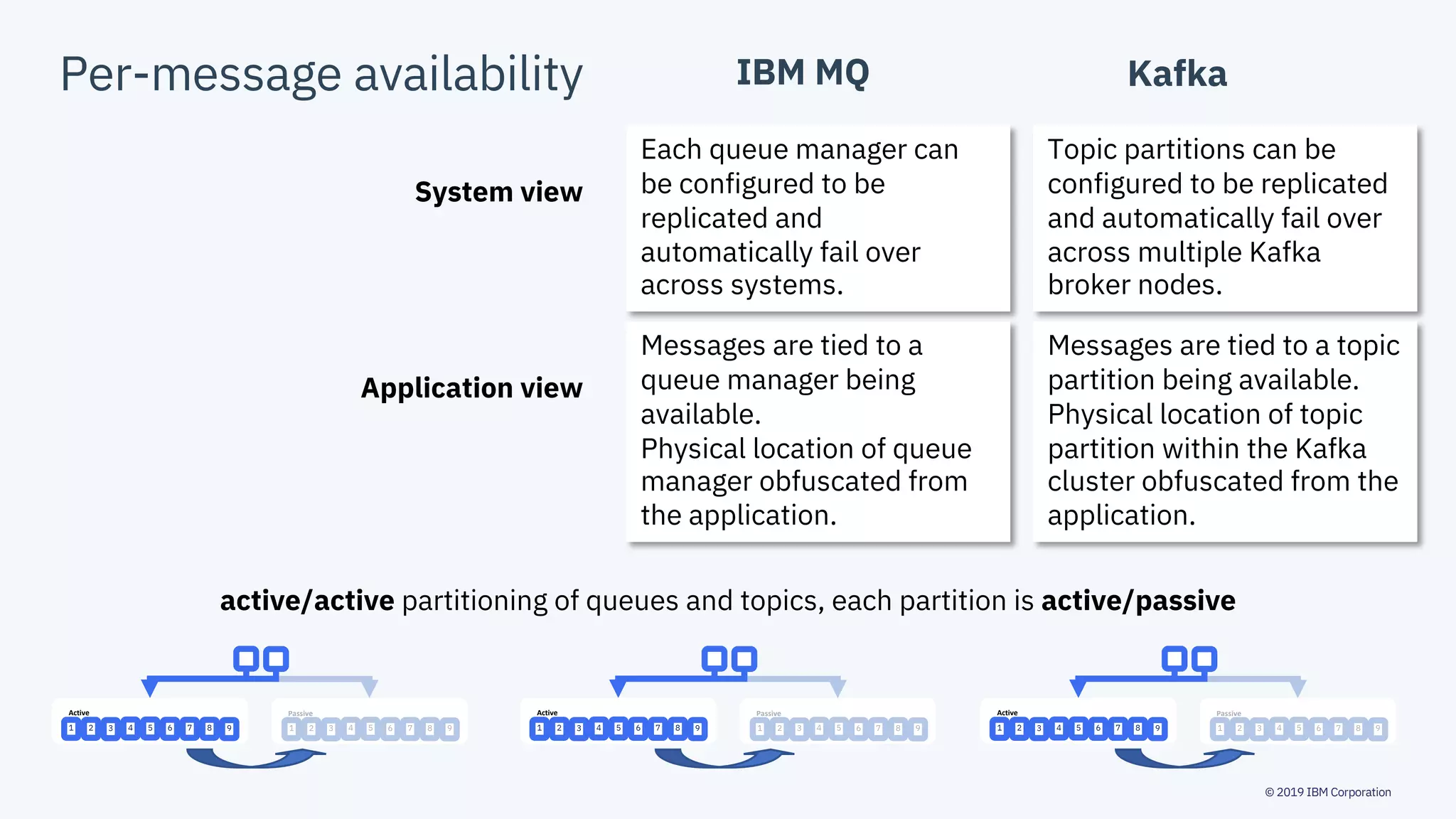

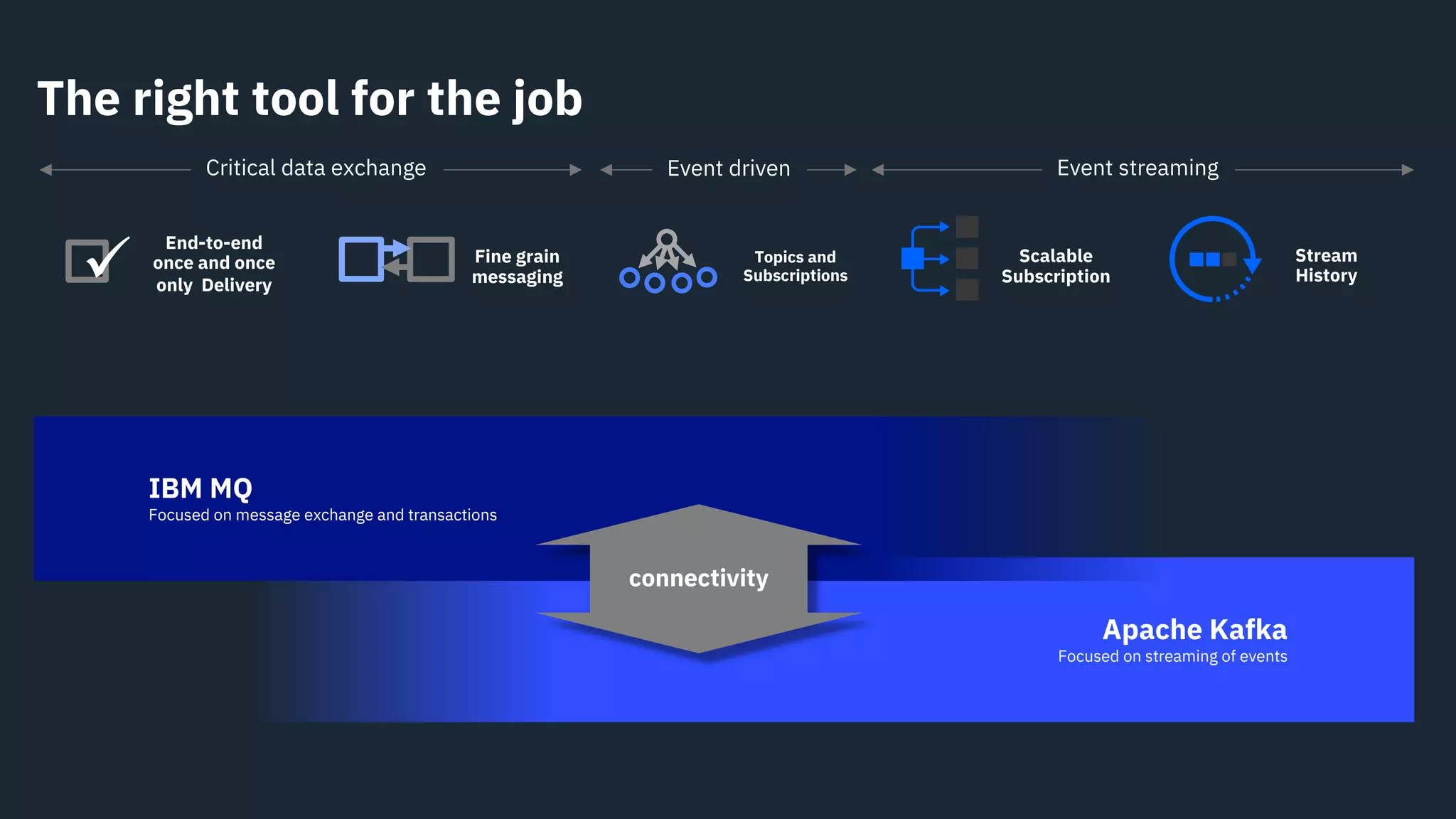

The document compares IBM MQ and Apache Kafka, highlighting their distinct messaging capabilities and use cases. IBM MQ focuses on assured asynchronous message delivery and transactional message exchange, while Kafka emphasizes event streaming and scalability for microservices architectures. Choosing between them depends on the enterprise's messaging requirements: whether the workload is critical data exchange or event-driven interaction, and how the consuming systems expect to receive messages.
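
The difference in consumption models can be illustrated with a toy in-memory sketch. It is not the IBM MQ or Kafka API: the `ToyQueue` and `ToyLog` classes, their methods, and the consumer names are hypothetical, and the sketch leaves out persistence, transactions, and partitioning. It only contrasts queue-style delivery, where a message is removed once a consumer reads it, with log-style streaming, where records are retained and each consumer tracks its own position.

```python
from collections import deque


class ToyQueue:
    """Hypothetical queue-style channel: a read removes the message."""

    def __init__(self):
        self._messages = deque()

    def put(self, message):
        self._messages.append(message)

    def get(self):
        # Destructive read: the message goes to one consumer only.
        # Returns None when the queue is empty.
        return self._messages.popleft() if self._messages else None


class ToyLog:
    """Hypothetical log-style stream: records stay, consumers keep offsets."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # consumer name -> next offset to read

    def append(self, record):
        self._records.append(record)

    def poll(self, consumer):
        # Non-destructive read: return everything from this consumer's
        # offset onward, then advance only that consumer's offset.
        offset = self._offsets.get(consumer, 0)
        batch = self._records[offset:]
        self._offsets[consumer] = len(self._records)
        return batch

    def seek(self, consumer, offset):
        # Rewind (or skip ahead) so the consumer can re-read history.
        self._offsets[consumer] = offset


def demo():
    queue = ToyQueue()
    for message in ("order-1", "order-2"):
        queue.put(message)
    # The third read finds the queue empty.
    queue_reads = [queue.get(), queue.get(), queue.get()]

    log = ToyLog()
    for event in ("event-1", "event-2"):
        log.append(event)
    billing = log.poll("billing")  # independent consumers each see
    audit = log.poll("audit")      # the full stream
    log.seek("billing", 0)
    replay = log.poll("billing")   # the same events can be read again
    return queue_reads, billing, audit, replay


if __name__ == "__main__":
    print(demo())
```

In this sketch, the queue suits work that must be handled once by a single receiver, while the log suits several independent subscribers that may also need to replay earlier events.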