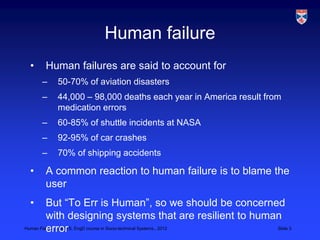

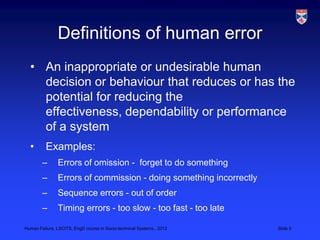

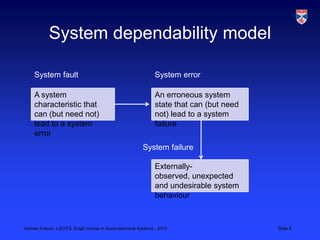

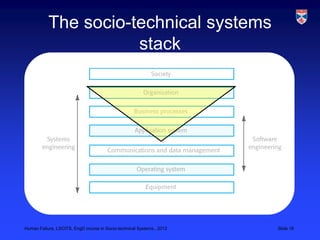

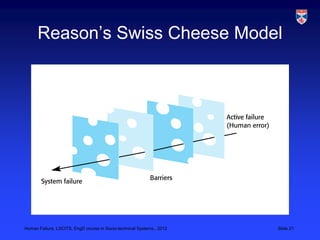

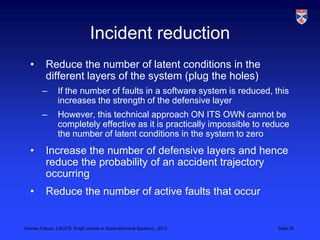

This document examines human failure in socio-technical systems through a series of slides. It begins with statistics on how much human failures contribute to accidents in domains such as aviation and transportation. It then defines human error and distinguishes its main types. The remaining slides examine how human errors interact with vulnerabilities in complex socio-technical systems, drawing on Reason's Generic Error-Modelling System (GEMS) and his Swiss Cheese model. The document argues that human errors should be analyzed from a dependability perspective, and it offers guidelines for designing more error-tolerant systems.