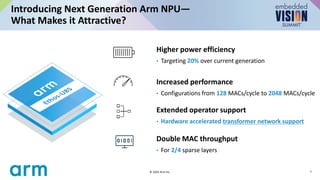

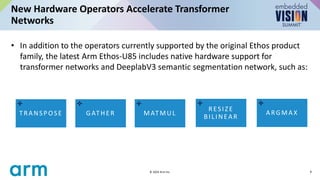

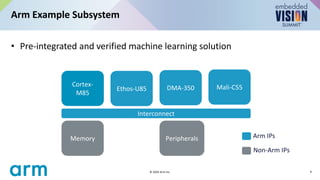

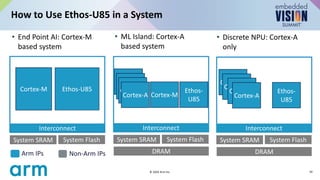

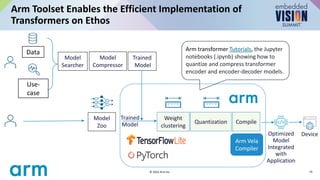

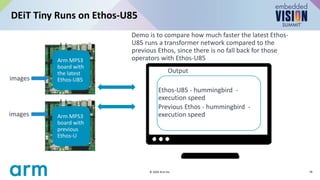

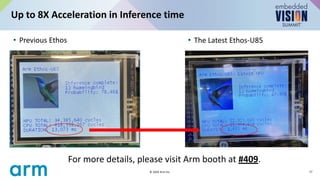

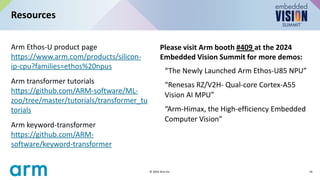

Arm's latest machine learning solution, built around the Ethos-U85 NPU, improves the power efficiency and performance of vision transformers deployed at the edge for tasks such as image classification and object detection. The transformer architecture's self-attention mechanism offers advantages over traditional CNNs, in particular because it extends readily to applications across different domains. The Ethos-U85 provides hardware acceleration for transformer networks, enabling faster and more efficient AI processing at the edge.

![Slide 2, Transformer Background. What is a transformer? Ref. [1]: Vaswani et al., “Attention is all you need,” NIPS 2017. A highly scalable network architecture based on self-attention. © 2024 Arm Inc.](https://image.slidesharecdn.com/e2r04suarm2024-240611120818-6867f827/85/How-Arm-s-Machine-Learning-Solution-Enables-Vision-Transformers-at-the-Edge-a-Presentation-from-Arm-2-320.jpg)
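To make the self-attention idea on this slide concrete, here is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, as defined in Ref. [1]. It is plain Python for illustration only, not Arm's implementation and not how the Ethos-U85 executes the operation. The function names `softmax` and `self_attention` and the toy `tokens` values are hypothetical, and the sketch assumes identity projections, so the same vectors serve as queries, keys, and values (a real transformer learns separate projection matrices).

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of token vectors (lists of floats).
    Returns (outputs, weights), with one row per query token.
    """
    d_k = len(keys[0])
    d_v = len(values[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        row = softmax(scores)
        weights.append(row)
        # The output is the attention-weighted sum of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(row, values)) for j in range(d_v)]
        )
    return outputs, weights


# Toy input: three tokens with two features each (illustrative values only).
# Assumption: identity projections, so Q = K = V = tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how strongly one token attends to every other token. Because every token can attend to every other token in a single step, the same mechanism applies to image patches in a vision transformer as it does to words in a sentence.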

![Slide 20, Reference. [1] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” in Proceedings of the 31st International Conference on Neural Information Processing Systems, 2017, pp. 6000–6010.](https://image.slidesharecdn.com/e2r04suarm2024-240611120818-6867f827/85/How-Arm-s-Machine-Learning-Solution-Enables-Vision-Transformers-at-the-Edge-a-Presentation-from-Arm-20-320.jpg)