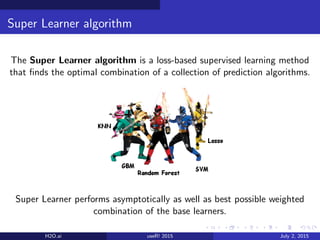

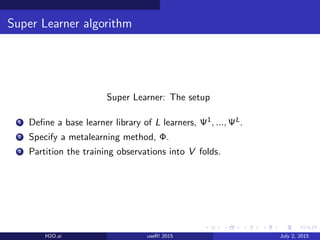

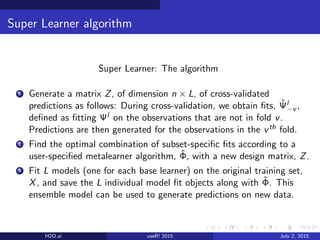

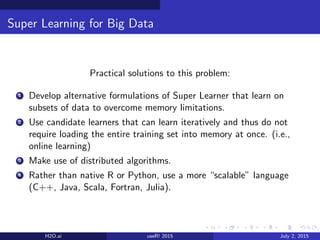

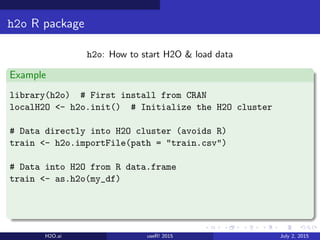

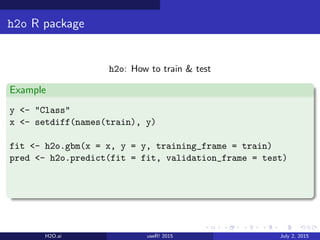

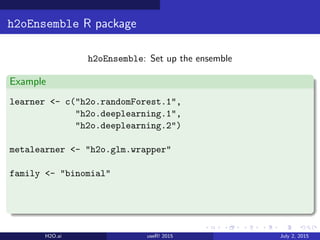

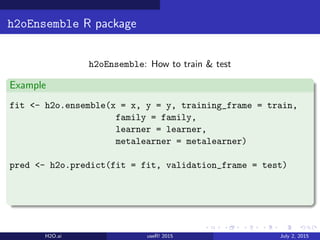

This document discusses ensemble learning methods for scaling to large datasets using H2O. It introduces ensemble learning and the Super Learner algorithm for combining multiple models. It describes how H2O and the h2oEnsemble R package implement ensemble learning and the Super Learner algorithm in a scalable way using H2O's distributed architecture. This allows ensemble methods to be applied to large datasets that cannot fit in memory.