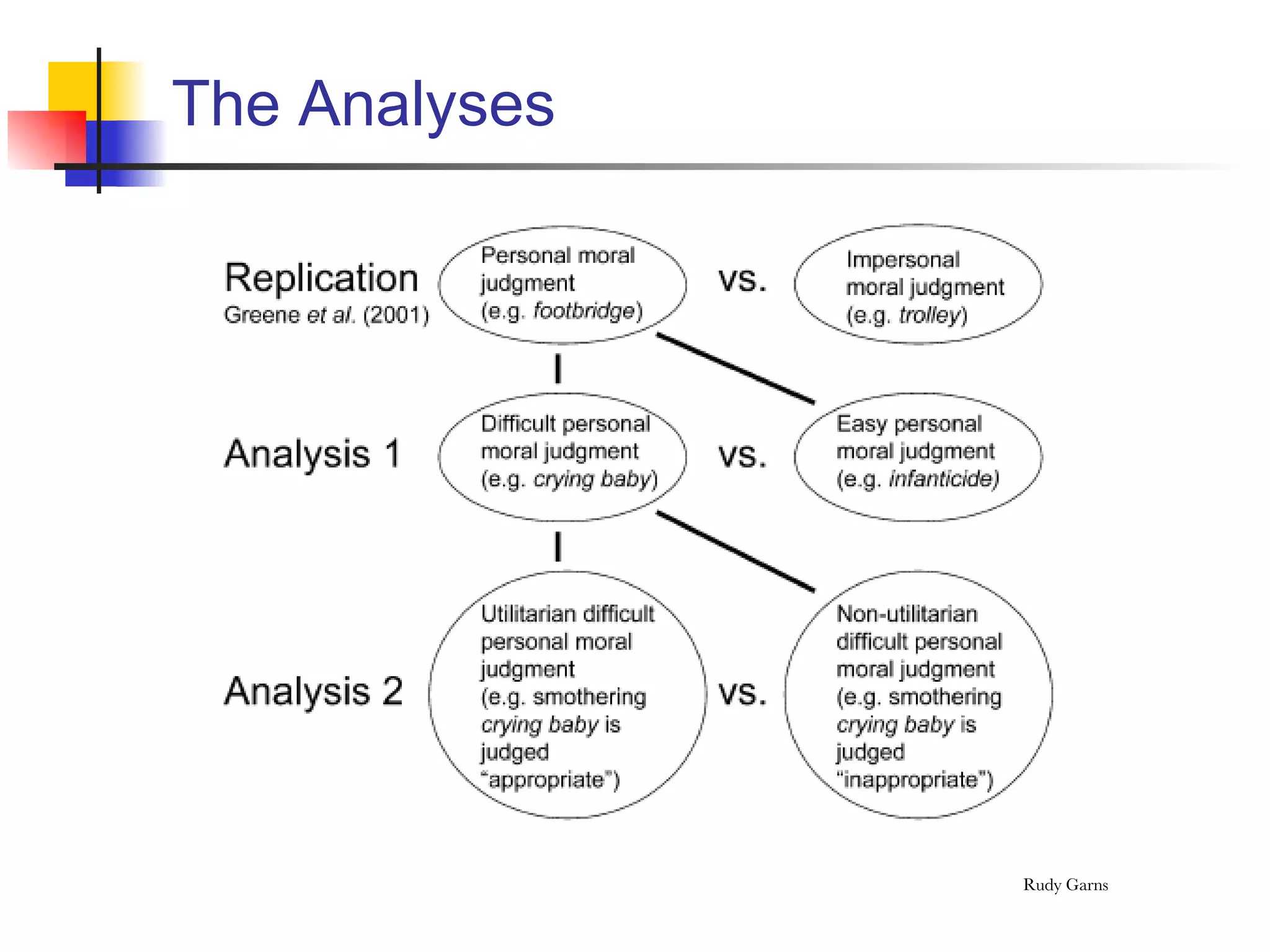

1. The study used fMRI to examine the neural bases of cognitive conflict during moral judgment, presenting participants with both personal and impersonal moral dilemmas.

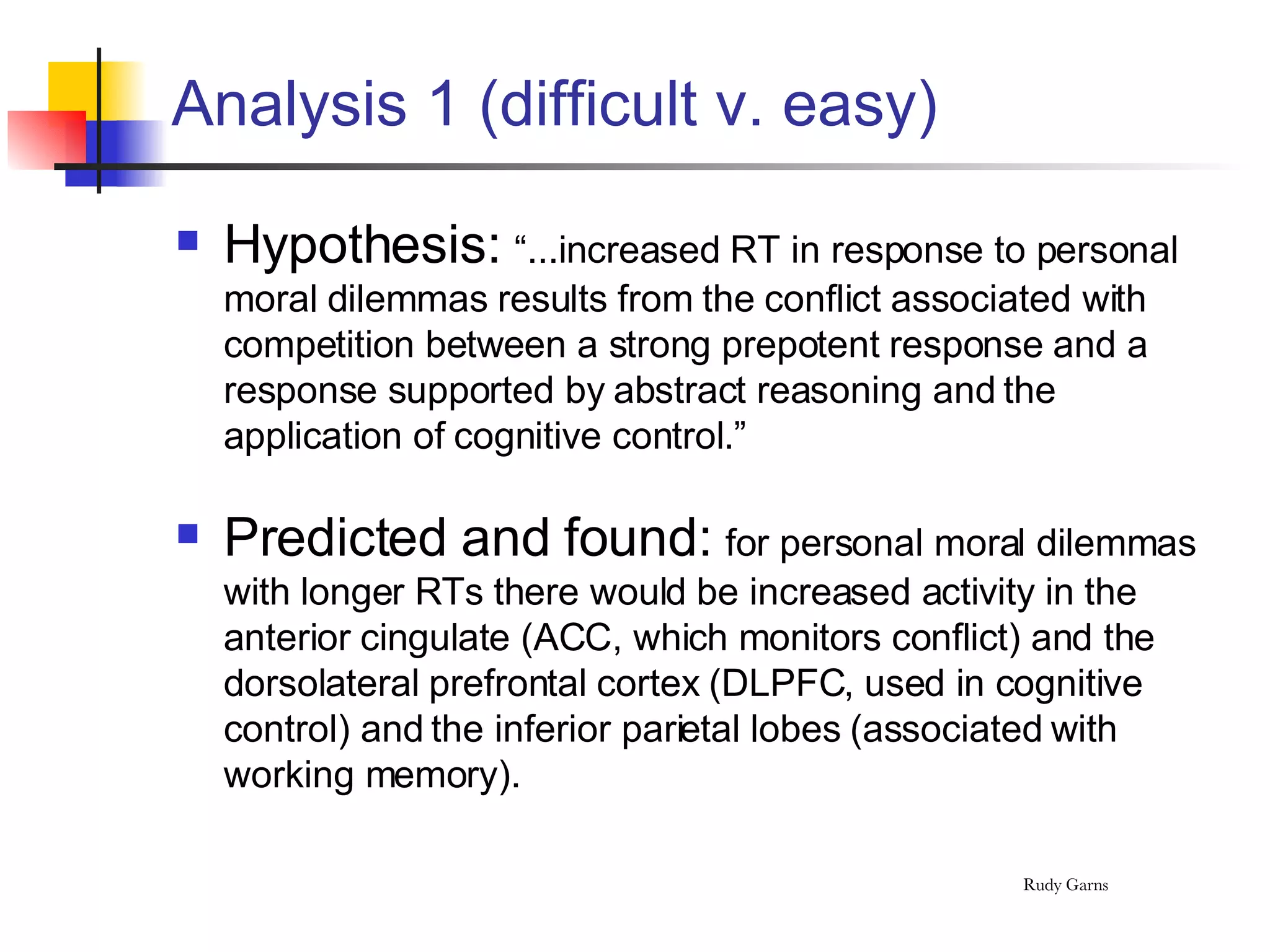

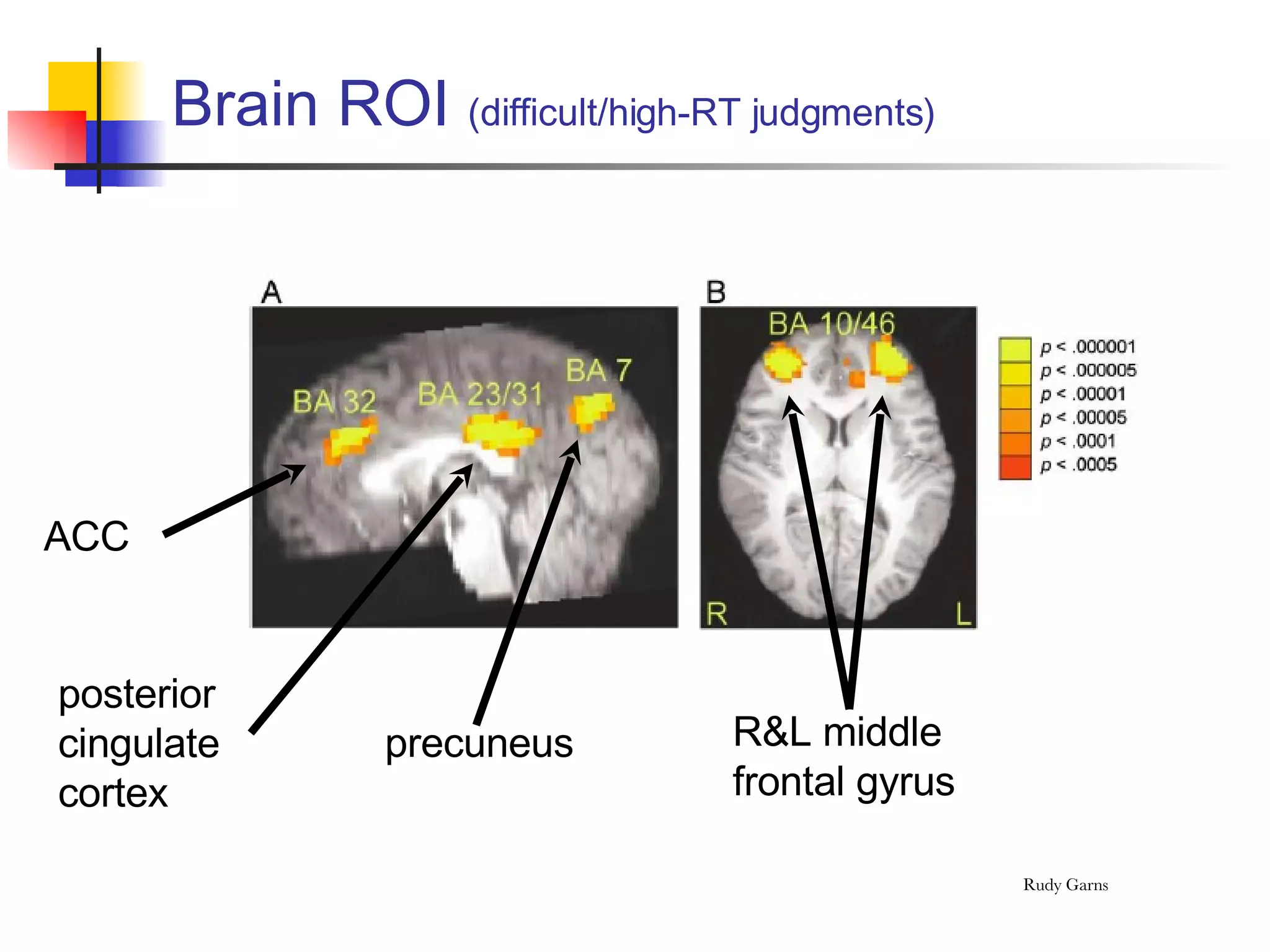

2. Difficult personal dilemmas, meaning those that took longer to answer, produced more activity than easy ones in brain regions associated with cognitive control, such as the anterior cingulate cortex (ACC) and the dorsolateral prefrontal cortex (DLPFC). This pattern was taken as evidence of cognitive conflict.

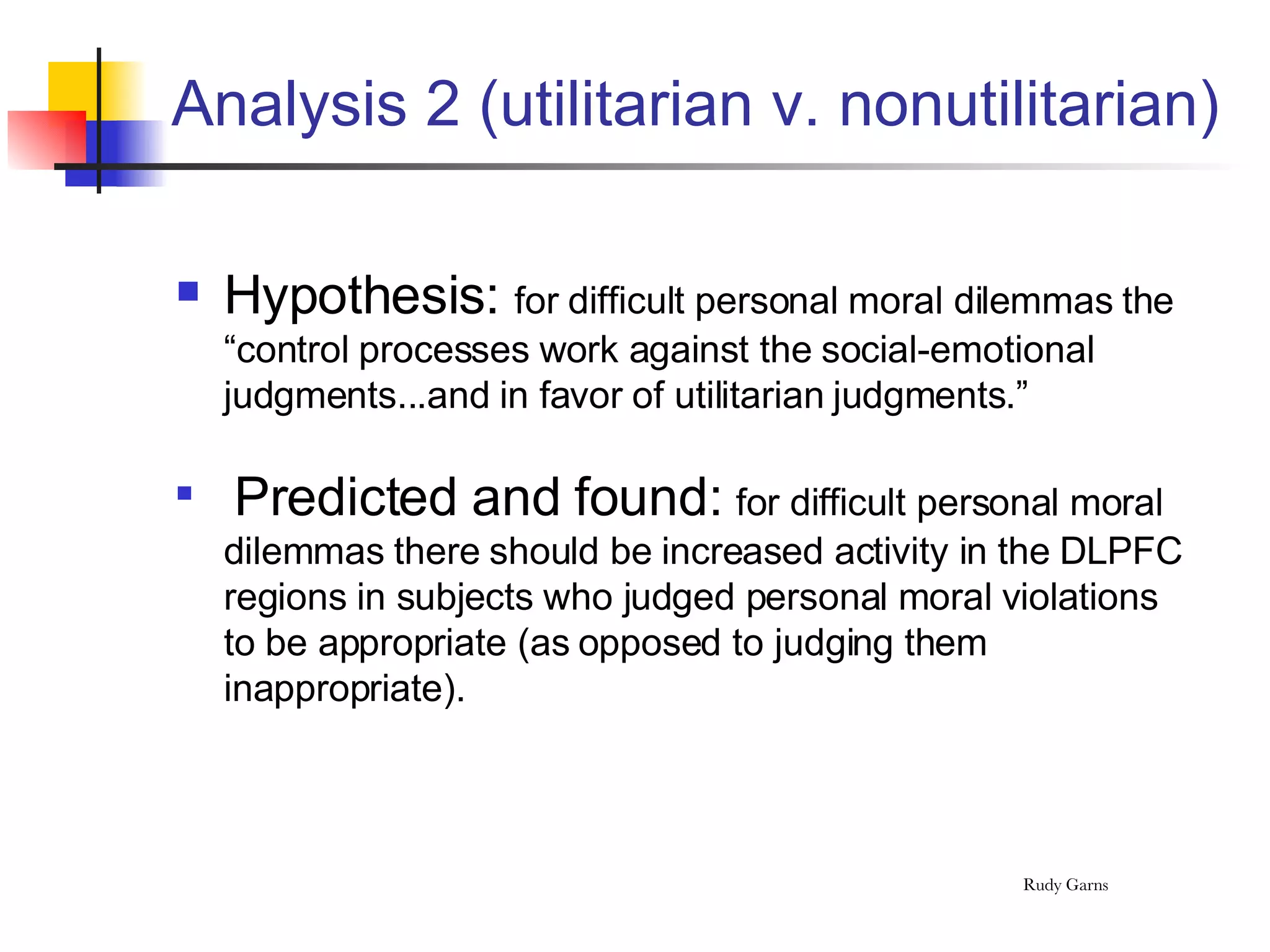

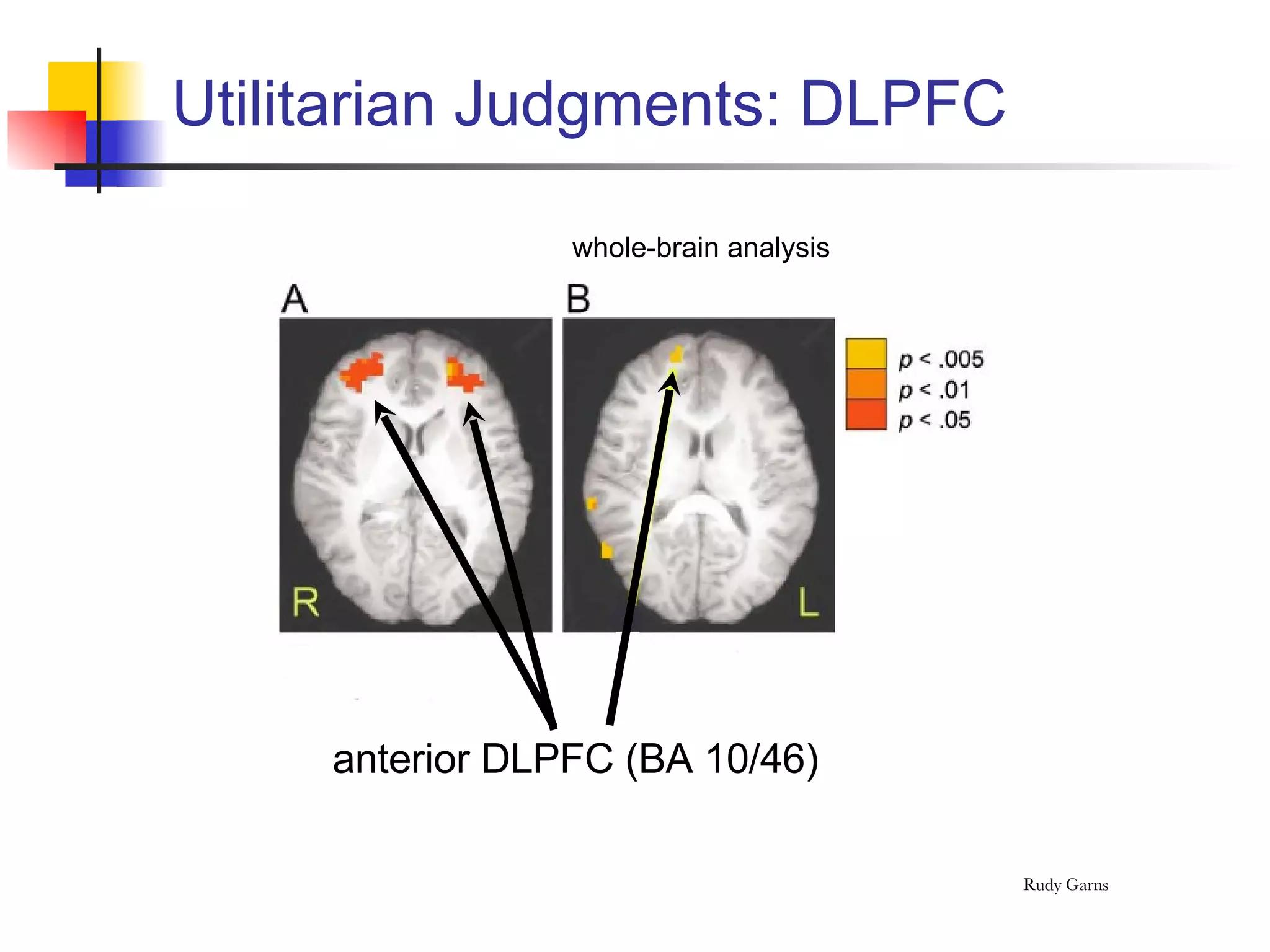

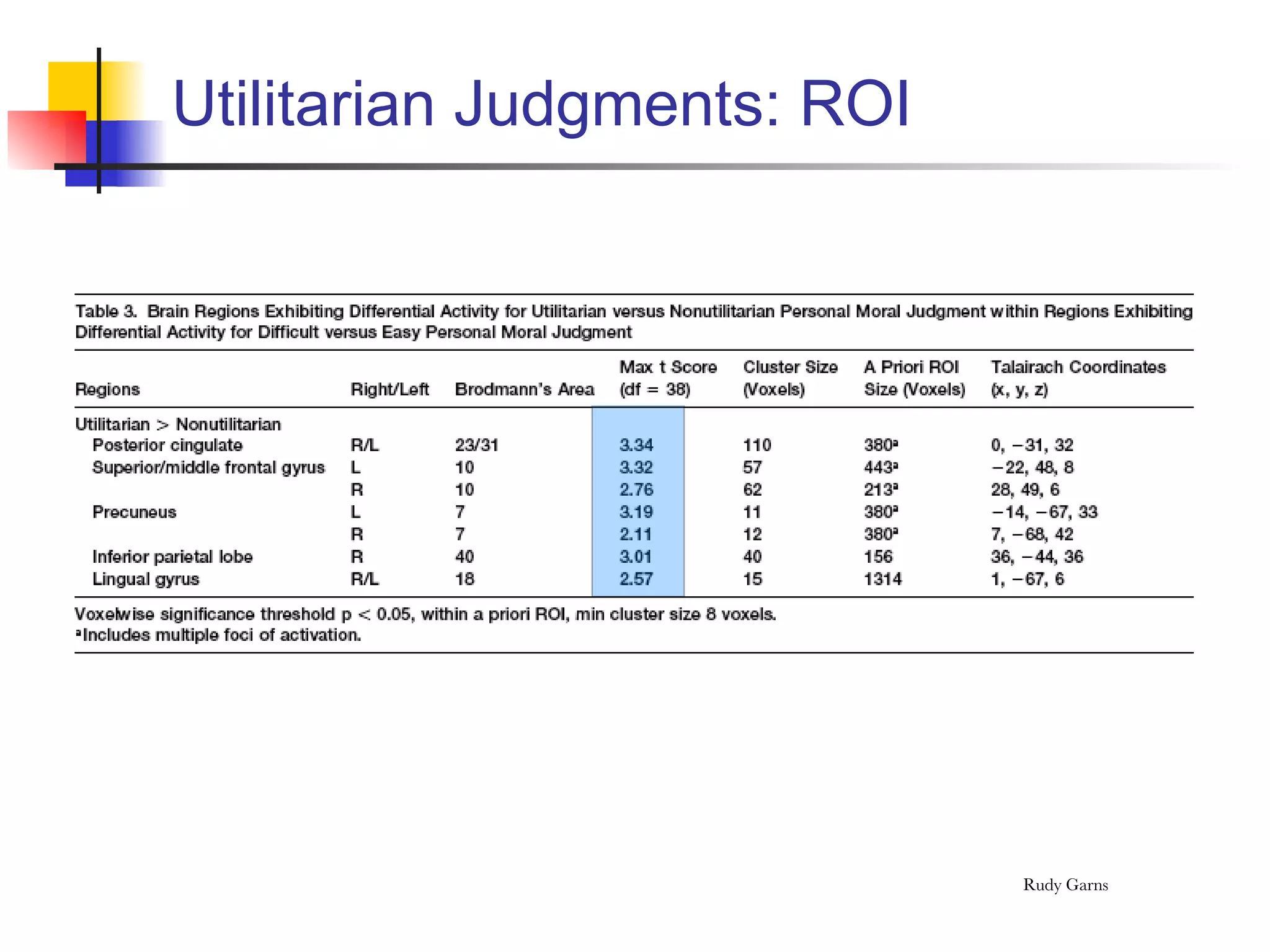

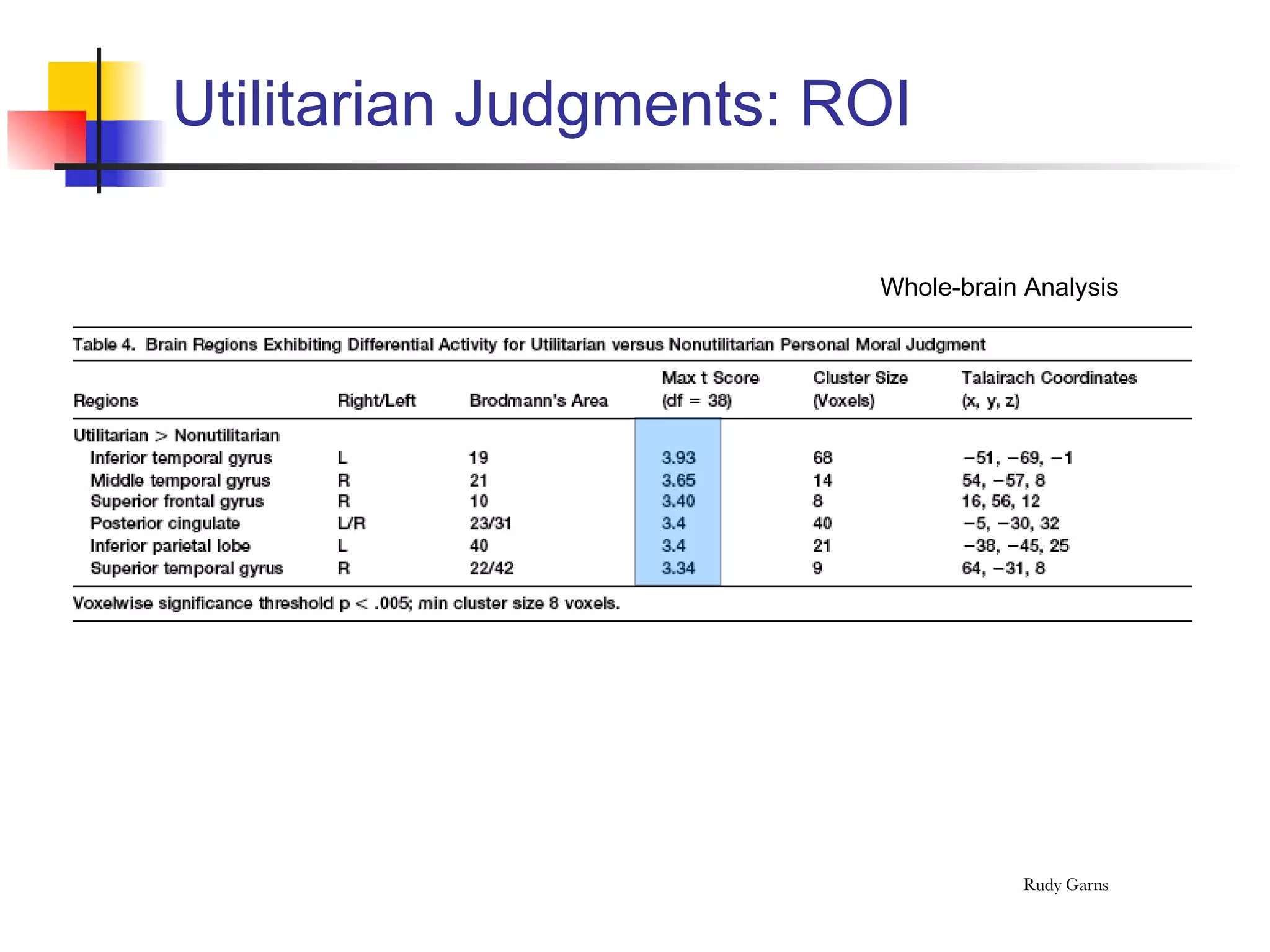

3. Within difficult personal dilemmas, utilitarian judgments were accompanied by greater activity in DLPFC regions associated with cognitive control than non-utilitarian judgments. This suggests that these regions help override competing emotional responses in favor of utilitarian judgments.

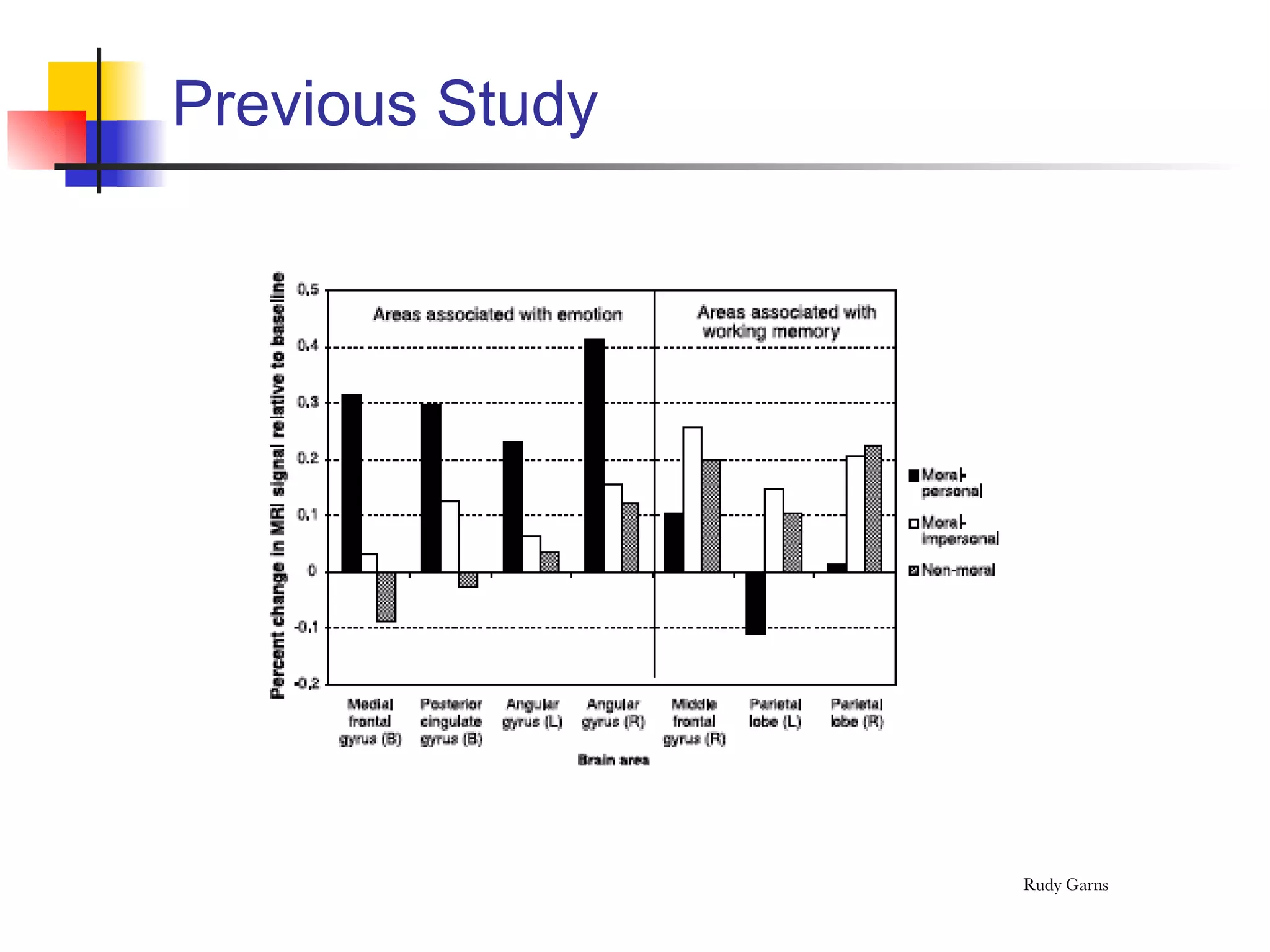

![Previous Study* Hypothesis: “...moral judgment in response to [moral] violations familiar to our primate ancestors (personal violations) are driven by social-emotional responses while moral judgment in response to distinctively human (impersonal) moral violation is (or can be) more ‘cognitive’.” Predicted and found: (a) more activity in social-emotional brain regions for dilemmas involving personal moral violations, and (b) longer reaction times (RT) for those whose response was incongruent with the emotional response. * “An fMRI Investigation of Emotional Engagement in Moral Judgment.” Greene et al. Science, Vol. 293, September 14, 2001](https://image.slidesharecdn.com/greene-presentation-1201615722189369-2/75/Greene-Presentation-9-2048.jpg)