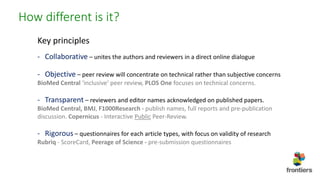

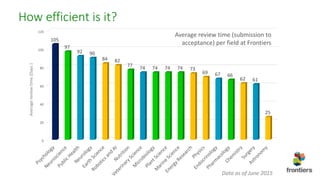

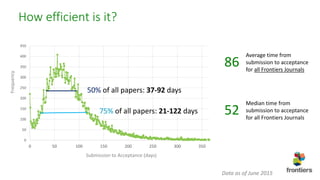

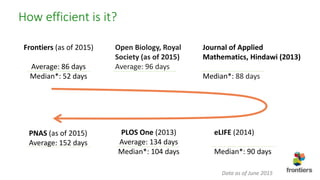

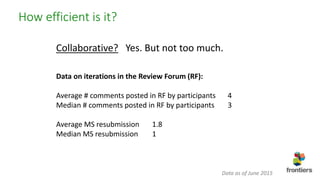

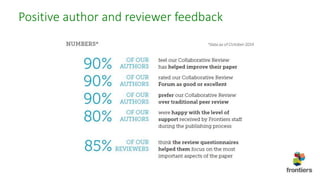

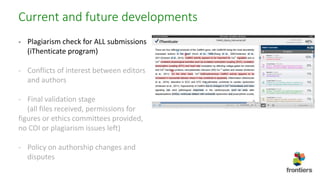

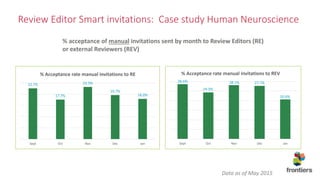

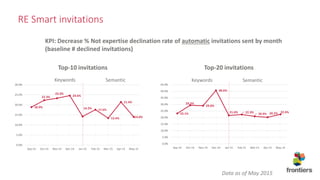

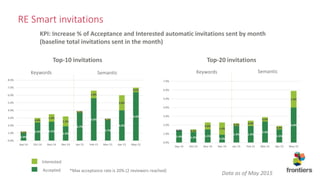

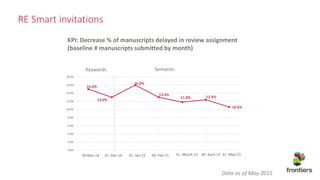

The document summarizes the Frontiers Collaborative Review process. It describes what sets the process apart: it is collaborative, objective, transparent, and rigorous. It also analyzes the efficiency of the process, reporting average review times, the number of reviewer comments, and resubmission rates. Future developments discussed include plagiarism checking, submission validation, and improved reviewer invitation algorithms. Data on acceptance rates and delays are also presented.