- LLaMA 2 is a family of large language models developed by Meta and released in partnership with Microsoft and others. The models were pretrained on 2 trillion tokens and come in three sizes: 7, 13, and 70 billion parameters.

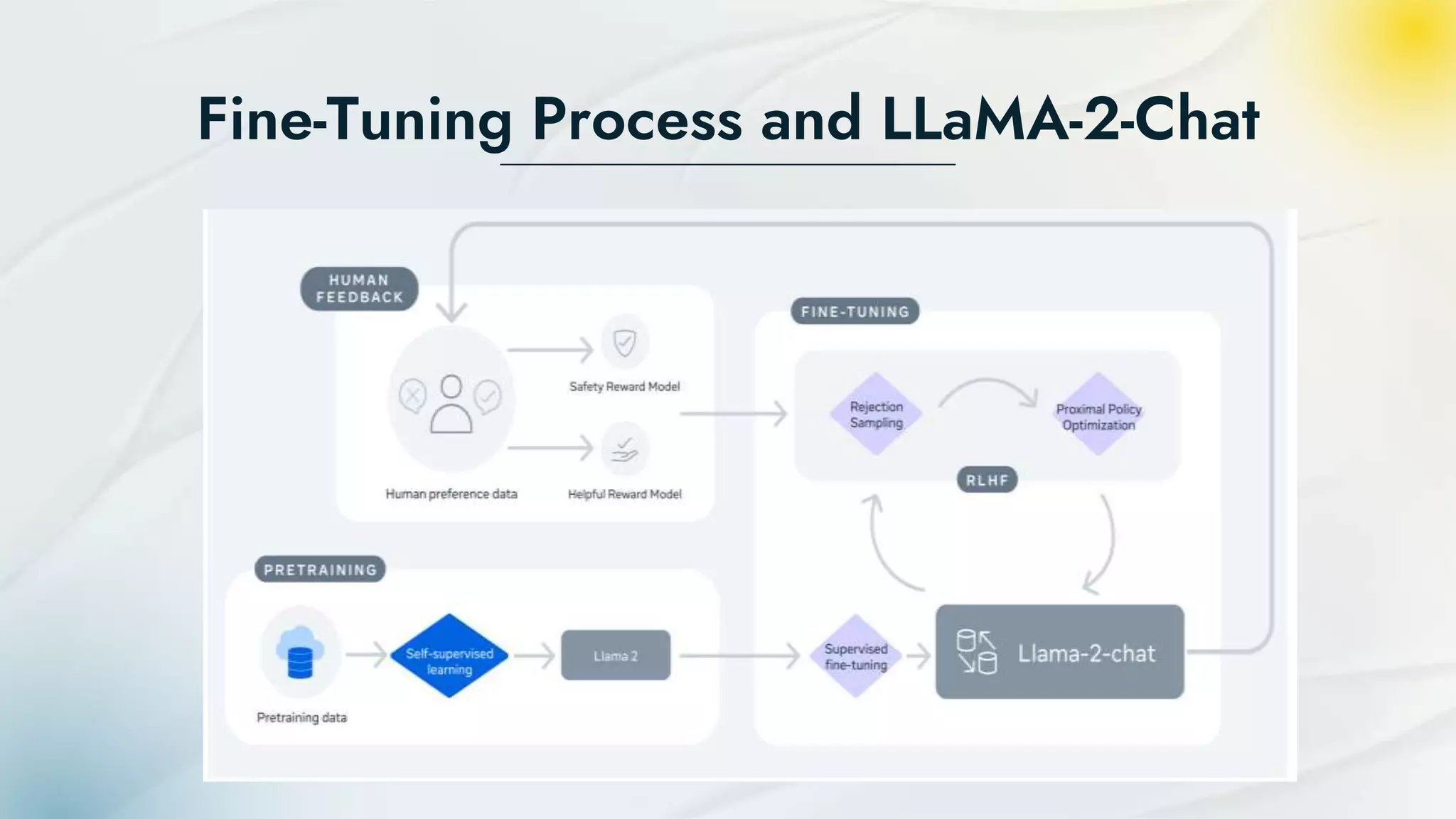

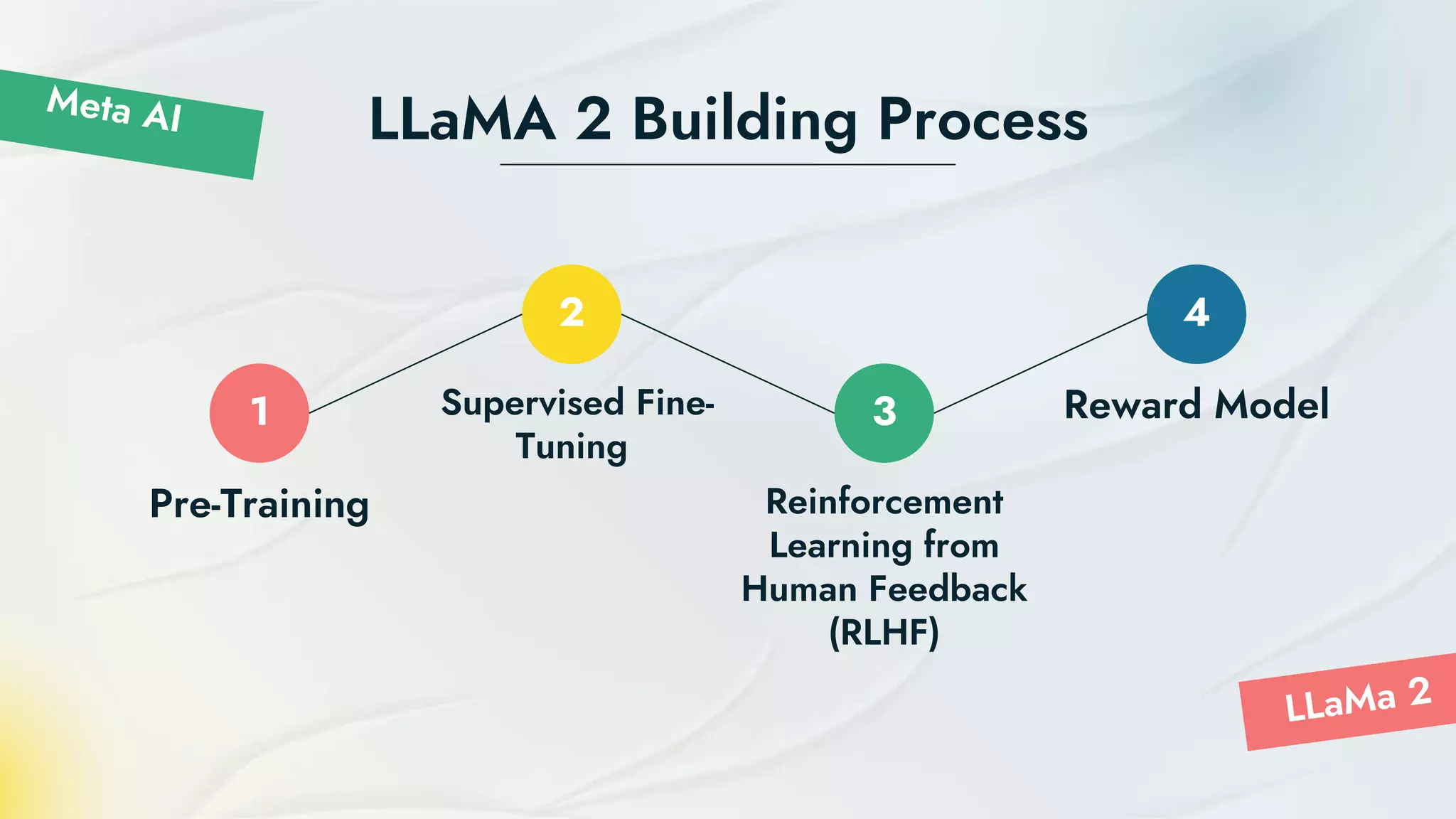

- LLaMA 2 is an auto-regressive transformer language model, and reinforcement learning from human feedback (RLHF) was used to improve its safety and alignment. It can generate text, translate between languages, and answer questions.

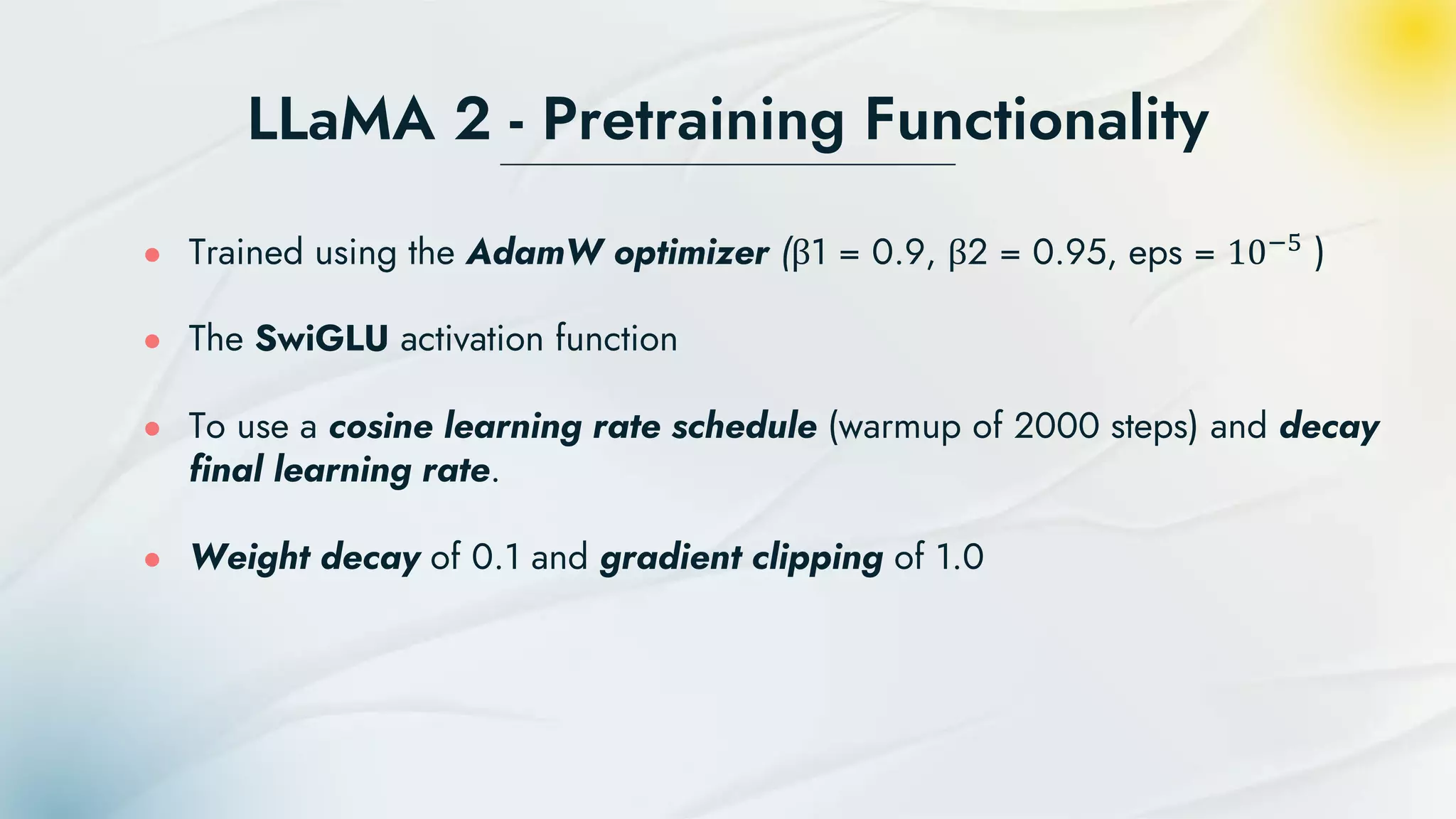

- The models were pretrained on Meta's research supercomputers, then fine-tuned for dialog using supervised learning and reinforcement learning from human feedback to further optimize safety and helpfulness.
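
To make "auto-regressive" concrete, here is a minimal sketch of the generation loop: the model predicts a distribution over the next token given the tokens so far, one token is sampled and appended, and the loop repeats. Everything in it is an assumption for illustration. `TOY_MODEL`, `next_token_distribution`, and `generate` are hypothetical names, and the lookup table stands in for a real transformer, which would condition on the whole prefix rather than only the last token. It is not LLaMA 2's actual implementation.

```python
import random

# Hypothetical toy "model": next-token probabilities keyed by the previous token.
# A real transformer conditions on the entire prefix; this table is illustrative only.
TOY_MODEL = {
    "<s>": {"the": 1.0},
    "the": {"model": 0.6, "answer": 0.4},
    "model": {"answers": 1.0},
    "answers": {"</s>": 1.0},
    "answer": {"</s>": 1.0},
}


def next_token_distribution(prefix):
    """Return P(next token | prefix). The toy model looks only at the last token."""
    return TOY_MODEL[prefix[-1]]


def generate(max_new_tokens=10, seed=0):
    """Sample tokens one at a time, feeding each sampled token back in as context."""
    rng = random.Random(seed)
    tokens = ["<s>"]  # start-of-sequence marker
    for _ in range(max_new_tokens):
        dist = next_token_distribution(tokens)
        candidates = list(dist.keys())
        weights = list(dist.values())
        token = rng.choices(candidates, weights=weights, k=1)[0]
        tokens.append(token)  # the new token becomes part of the next step's context
        if token == "</s>":   # stop at the end-of-sequence marker
            break
    return tokens


if __name__ == "__main__":
    print(" ".join(generate()))
```

The point of the sketch is the feedback loop: each output token is appended to the context before the next prediction. Supervised fine-tuning and RLHF change the probabilities the model assigns, not this decoding procedure.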