How to choose the right AI model for your application?

Artificial intelligence (AI) has taken center stage in the tech world, transforming industries and paving the way for a future of limitless possibilities. But amidst all the buzz, you may find yourself wondering, “What exactly is an AI model, and why is choosing the right one so crucial?”

An AI model is a mathematical framework that allows computers to learn from data and make predictions or decisions without being explicitly programmed to do so. AI models are the engines under the hood of AI, turning raw data into meaningful insights and actions. They range from LaMDA, designed by Google to converse on any topic, to OpenAI’s GPT models, which excel at generating human-like text. Each model has its strengths and weaknesses, making it better suited to certain tasks than others.

The sea of available AI models can be overwhelming, but understanding these models and choosing the right one is key to harnessing the full potential of AI for your specific application. An informed choice can mean the difference between an AI system that efficiently solves your problems and one that falls short of your objectives. In this fast-paced, AI-driven world, it is not just about jumping on the AI bandwagon; it is about making informed decisions and choosing the right tools for your unique requirements.

So, whether you are a retail business looking to streamline operations, a healthcare provider aiming to improve patient outcomes, or an educational institution striving to enrich learning experiences, picking the right AI model is a critical first step in your AI journey. This article will guide you through this complex landscape, equipping you with the knowledge to make the best choice for your needs.

- What is an AI model?
- Understanding the different categories of AI models
  - Supervised learning
  - Unsupervised learning
  - Semi-supervised learning
  - Reinforcement learning
  - Deep learning
- Importance of AI models
- Selecting the right AI model/ML algorithm for your application
  - Linear regression
  - Deep Neural Networks (DNNs)
  - Logistic regression
  - Decision trees
  - Linear Discriminant Analysis (LDA)
  - Naïve Bayes
  - Support Vector Machines (SVMs)
  - Learning Vector Quantization (LVQ)
  - K-Nearest Neighbor (KNN)
  - Random forest
- Factors to consider when choosing an AI model
  - Problem categorization
  - Model performance
  - Explainability of the model
  - Model complexity
  - Data set type and size
  - Feature dimensionality
  - Training duration and expense
  - Inference speed
- Validation strategies used for AI model selection

What is an AI model?

[Figure: the relationship between Artificial Intelligence (AI), incorporating human behavior and intelligence into machines or systems; Machine Learning (ML), methods that learn from data or past experience and automate analytical model building; and Deep Learning (DL), computation through multilayer neural networks.]

An artificial intelligence model is a sophisticated program designed to imitate human thought processes, such as learning, problem-solving, decision-making, and pattern recognition, by analyzing and processing data. You can think of it as a digital brain: just as humans use their brains to learn from experience, an AI model uses algorithms and tools to learn from data. This data could be pictures, text, music, numbers, and much more. The model ‘trains’ on this data, hunting for patterns and connections. For instance, an AI model designed to recognize faces will study thousands of images of faces, learning to identify key features like eyes, nose, mouth, and ears.

Once trained, the AI model can make decisions or predictions based on new data. In the face recognition example, the trained model could be used to unlock a smartphone by recognizing its user’s face. AI models are also remarkably versatile. They are used in applications such as natural language processing (helping computers understand human language), image recognition (identifying objects in an image), predictive analytics (forecasting future trends), and even autonomous vehicles.

But how do we know if an AI model is doing its job well? Just as students are tested on what they have learned, AI models are also evaluated. They are given a new dataset, different from the one they were trained on. This ‘test’ data helps measure the effectiveness of the AI model through metrics like accuracy, precision, and recall. For example, a face-recognition model would be tested on how accurately it identifies faces in a new set of images.
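To make these metrics concrete, here is a minimal sketch in plain Python for a binary task where the positive class is labeled `1`. The function names and the toy labels are hypothetical. Libraries such as scikit-learn provide equivalents (`accuracy_score`, `precision_score`, `recall_score`, `f1_score`). Precision is the share of predicted positives that are correct, and recall is the share of actual positives the model finds.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, false negatives and true negatives."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def evaluate(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for one positive class."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)
    # Guard against division by zero when a model predicts no positives at all.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical test labels: 3 positives, 7 negatives.
    y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
    model_a = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]   # finds 2 of 3 positives, 1 false alarm
    always_negative = [0] * 10                 # never predicts the positive class
    print(evaluate(y_true, model_a))           # accuracy 0.8; precision, recall, F1 all 2/3
    print(evaluate(y_true, always_negative))   # accuracy 0.7, but recall 0.0
```

The second call shows why accuracy alone can mislead on imbalanced data. A model that never detects the positive class still scores 70% accuracy.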

Understanding the different categories of AI models

Artificial intelligence models can be broadly divided into a few categories, each with its distinct characteristics and applications. Let us take a brief tour of these models:

Supervised learning models: Imagine a teacher guiding a student through a lesson; that is essentially how supervised learning models work. Humans, usually experts in a particular field, train these models by labeling data points. For instance, they might label a set of images as “cats” or “dogs.” This labeled data helps the model learn so that it can make predictions when presented with new, similar data. For example, after training, the model should be able to correctly identify whether a new image shows a cat or a dog. Such models are typically used for predictive analyses.

Unsupervised learning models: Unlike supervised models, unsupervised learning models are a bit like self-taught learners. They do not need humans to label the data for them. Instead, they identify patterns or trends in the input data on their own, which enables them to categorize the data or summarize its content without human intervention. They are most often used for exploratory analyses or when the data is not labeled.

Semi-supervised learning models: These models strike a balance between supervised and unsupervised learning. Think of them as students who get some initial guidance from a teacher but are then left to explore and learn more independently. In this approach, experts label a small subset of the data, which is used to train the model partially. The model then uses this initial learning to label a larger dataset itself, a process known as “pseudo-labeling.” This type of model can be used for both descriptive and predictive purposes.

Reinforcement learning models: Reinforcement learning models learn much like a child does: through trial and error. These models interact with their environment and learn to make decisions based on rewards and penalties. The goal of reinforcement learning is to find the best possible strategy, or ‘policy,’ for obtaining the greatest reward over time. It is commonly used in areas like game playing and robotics.

Deep learning models: Deep learning models are loosely inspired by the human brain. The ‘deep’ in deep learning refers to the presence of several layers in their artificial neural networks. These models are especially good at learning from large, complex datasets. They can automatically extract features from the data, greatly reducing the need for manual feature engineering. They have found immense success in tasks like image and speech recognition.

Importance of AI models

Artificial intelligence models have become integral to business operations in the modern data-driven world. The volume of data produced today is massive and continually growing, which challenges businesses attempting to extract valuable insights from it. This is where AI models step in as invaluable tools. They simplify complex processes, speed up tasks that would otherwise take humans a substantial amount of time, and provide precise outputs that improve decision-making. Here are some of the significant ways AI models contribute:

Data collection: In a competitive business environment where data is a key differentiator, gathering relevant data for training AI models is paramount. AI models help businesses tap efficiently into unexplored data sources and into data domains to which rivals have limited access. Moreover, businesses can continually refine their models by regularly retraining them on the latest data, improving their accuracy and relevance.

Generation of new data: AI models, especially Generative Adversarial Networks (GANs), can produce new data that resembles the training data.

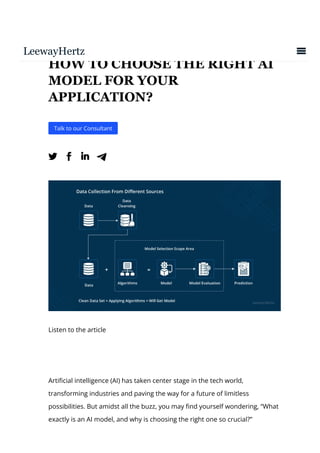

Generative models more broadly can create diverse outputs, ranging from artistic sketches to photorealistic images like those produced by DALL-E 2 (which is diffusion-based rather than a GAN). This opens up new avenues for innovation and creativity in various industries.

Interpretation of large datasets: AI models excel at handling large datasets. They can quickly analyze vast, complex data and extract meaningful patterns that would be impractical for humans to uncover manually. In a process known as model inference, AI models use input data to predict corresponding outputs, even on unseen data or real-time sensor data. This ability supports quicker, data-driven decision-making within organizations.

Automation of tasks: Integrating AI models into business processes can lead to significant automation. These models can handle different stages of a workflow, including data input, processing, and final output presentation. This results in efficient, consistent, and scalable processes, freeing up human resources to focus on more complex and strategic tasks.

These are some of the ways AI models are reshaping the business landscape by harnessing the power of data. They give businesses a competitive edge by enabling effective data collection, fostering innovation through data generation, making sense of vast datasets, and promoting process automation.

Selecting the right AI model/ML algorithm for your application

[Figure: a taxonomy of machine learning algorithms. Supervised learning: classification (Naive Bayes classifier, decision trees, support vector machines, random forest, k-nearest neighbors) and regression (linear regression, neural network regression, support vector regression, decision tree regression, lasso regression, ridge regression). Unsupervised learning: clustering (k-means, mean-shift, DBSCAN, agglomerative hierarchical clustering, Gaussian mixture). Reinforcement learning: decision making (Q-learning, R-learning, TD learning).]

There are numerous AI models with distinct architectures and varying degrees of complexity. Each model’s strengths and weaknesses stem from the algorithm it uses. The right choice depends on the nature of the problem, the data available, and the specific task at hand. Some of the most frequently used AI model algorithms are:

- Linear regression
- Deep Neural Networks (DNNs)
- Logistic regression
- Decision trees
- Linear Discriminant Analysis (LDA)
- Naïve Bayes
- Support Vector Machines (SVMs)
- Learning Vector Quantization (LVQ)
- K-Nearest Neighbor (KNN)
- Random forest

Linear regression

Linear regression is a straightforward yet powerful machine learning algorithm. It assumes a linear relationship between the input and output variables. It predicts the output (dependent) variable as a weighted sum of the input (independent) variables plus a bias, also known as the intercept. This algorithm is primarily used for regression problems, where the goal is to predict a continuous output. For instance, predicting the price of a house from attributes like size, location, age, and proximity to amenities is a typical use case for linear regression. Each of these features is given a weight, or coefficient, that signifies its influence on the final price.

One of the critical strengths of linear regression is its interpretability. The weights assigned to the features show clearly how each feature influences the prediction, which can be incredibly useful for understanding the problem at hand. Comparisons between weights are most meaningful when the features are on similar scales. However, linear regression makes several assumptions about the data, including linearity, independence of errors, homoscedasticity (equal variance of errors), and normality of errors. If these assumptions are violated, predictions may be less accurate or biased. Despite these limitations, linear regression remains a popular starting point for many prediction tasks due to its simplicity, speed, and interpretability.

Deep Neural Networks (DNNs)

Deep Neural Networks (DNNs) are a class of artificial intelligence/machine learning models characterized by multiple ‘hidden’ layers between the input and output layers. Inspired by the neural networks of the human brain, DNNs are built from interconnected units known as artificial neurons. Looking closely at how DNNs operate is the best way to understand these AI tools. They excel at discerning patterns and relationships in data, which accounts for their extensive use across numerous fields. Industries that use DNN models commonly deal with tasks like speech recognition, image recognition, and Natural Language Processing (NLP). These complex models have contributed significantly to advances in these areas. They have improved machines’ ability to understand and interpret human-generated data such as speech, images, and text.
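As an illustration of the ‘weighted sum plus bias’ idea described above, the sketch below fits a linear regression by solving the least-squares normal equations in plain Python. The house features (size and age), the toy data, and the function names are hypothetical. In practice you would typically call `numpy.linalg.lstsq` or scikit-learn’s `LinearRegression` rather than hand-rolling a solver.

```python
def _solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: features are linearly dependent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back-substitution.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def fit_linear_regression(X, y):
    """Return (weights, bias) minimizing squared error via the normal equations."""
    # A leading column of ones lets the bias be estimated like any other weight.
    A = [[1.0] + [float(v) for v in row] for row in X]
    p = len(A[0])
    ata = [[sum(row[i] * row[j] for row in A) for j in range(p)] for i in range(p)]
    aty = [sum(row[i] * target for row, target in zip(A, y)) for i in range(p)]
    theta = _solve(ata, aty)
    return theta[1:], theta[0]


def predict(weights, bias, row):
    """Prediction = bias + weighted sum of the input features."""
    return bias + sum(w * x for w, x in zip(weights, row))


if __name__ == "__main__":
    # Hypothetical houses: [size in m^2, age in years]; prices follow 50 + 3*size - 2*age.
    X = [[100, 10], [150, 5], [120, 20], [80, 2], [200, 30]]
    y = [330, 490, 370, 286, 590]
    weights, bias = fit_linear_regression(X, y)
    print(weights, bias)                     # approximately [3.0, -2.0] and 50.0
    print(predict(weights, bias, [110, 8]))  # approximately 364.0
```

Because each learned weight maps onto one feature, you can read the model directly. In this toy data, each extra square meter adds about 3 to the price and each year of age subtracts about 2.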

Logistic regression

Logistic regression is a statistical model used for binary classification tasks, meaning it is designed to handle two possible outcomes. Extensions such as multinomial logistic regression handle more than two classes. Unlike linear regression, which predicts continuous outcomes, logistic regression estimates the probability of a certain class or event. It is useful because its coefficients reveal both the significance and the direction of each predictor’s effect. Its linear decision boundary cannot capture complex, non-linear relationships on its own. Even so, its efficiency, ease of implementation, and interpretability make it an attractive choice for binary classification problems. Logistic regression is commonly used in fields like healthcare for disease prediction, finance for credit scoring, and marketing for customer retention prediction. Despite its simplicity, it is a fundamental building block in the machine learning toolbox. It provides valuable insights at low computational cost, especially when the relationships in the data are relatively straightforward.

Decision trees

Decision trees are an effective supervised learning algorithm for classification and regression tasks. They work by repeatedly splitting the dataset into smaller subsets, building the associated decision tree as they go. The outcome is a tree with decision nodes and leaf nodes. This approach provides a clear if/then structure that is easy to understand and implement. A rule such as ‘if you pack your lunch instead of buying it, then you save money’ has exactly this form. This simple yet efficient model traces back to the early days of predictive analytics, showcasing its enduring usefulness in the realm of AI.

Linear Discriminant Analysis (LDA)

LDA is a machine learning model that is well suited to finding patterns and making predictions, especially when we are trying to distinguish between two or more groups. When we feed data to the LDA model, it works like a detective to find a pattern or rule in the data. For example, suppose we want to predict whether a patient has a certain disease. The LDA model will analyze the patient’s symptoms and look for a pattern, specifically a linear combination of the symptoms, that indicates whether the patient has the disease. Once the LDA model has found this rule, it can use it to make predictions about new data. Given the symptoms of a new patient, it applies the rule to predict whether this patient has the disease.

LDA is also good at simplifying complex data. Sometimes we have so much data that it is hard to make sense of it all. LDA can help by projecting the data into a lower-dimensional form, with at most one fewer dimension than the number of groups. This form is easier to understand while preserving the information that best separates the groups.

Naïve Bayes

Naïve Bayes is a powerful AI model rooted in Bayesian statistics. It applies Bayes’ theorem with a strong (naïve) assumption that the features are independent of one another given the class. For a given example, the model calculates the probability of each class or outcome, treating each feature as independent. This makes Naïve Bayes particularly effective on high-dimensional data, as is common in spam filtering, text classification, and sentiment analysis. Its simplicity and efficiency are its key strengths.

Naïve Bayes is quick to build and run and easy to interpret, making it a good choice for exploratory data analysis. Because of its feature-independence assumption, it also copes reasonably well with irrelevant features, and its performance is relatively unaffected by them. Despite its simplicity, Naïve Bayes can rival more complex models when the dimensionality of the data is high. Moreover, it requires relatively little training data and can easily update its model with new training data. Its flexible and adaptable nature makes it popular in numerous real-world applications.
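To show the independence assumption at work on a spam-filtering task, here is a minimal multinomial Naïve Bayes text classifier in plain Python. Several details are illustrative assumptions:

- the class name
- the whitespace tokenizer
- the Laplace smoothing parameter `alpha`
- the toy messages

The model scores each class as its log prior plus the sum of per-word log-likelihoods. Working in log-probabilities avoids numerical underflow when many small probabilities would otherwise be multiplied.

```python
import math
from collections import Counter, defaultdict


class MultinomialNaiveBayes:
    """Toy multinomial Naive Bayes text classifier with Laplace smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha  # smoothing strength; avoids zero probabilities

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)      # documents per class (for priors)
        self.word_counts = defaultdict(Counter)  # word frequencies per class
        self.vocab = set()
        for doc, label in zip(docs, labels):
            tokens = doc.lower().split()         # naive whitespace tokenizer
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        self.total_words = {c: sum(wc.values()) for c, wc in self.word_counts.items()}
        return self

    def predict(self, doc):
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / n_docs)  # log prior P(class)
            for token in doc.lower().split():
                if token not in self.vocab:
                    continue  # ignore words never seen in training
                # Independence assumption: each word contributes its own log-likelihood.
                numer = self.word_counts[label][token] + self.alpha
                denom = self.total_words[label] + self.alpha * v
                score += math.log(numer / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    docs = ["win money now", "cheap money offer", "meeting at noon", "project meeting notes"]
    labels = ["spam", "spam", "ham", "ham"]
    model = MultinomialNaiveBayes().fit(docs, labels)
    print(model.predict("win cheap offer"))  # spam
    print(model.predict("meeting notes"))    # ham
```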

Support Vector Machines (SVMs)

Support Vector Machines, or SVMs, are widely used machine learning algorithms for classification and regression tasks. They work by identifying the optimal hyperplane that best divides data into distinct classes. To picture this, imagine trying to distinguish two different groups of data. An SVM looks for a line (in 2D) or hyperplane (in higher dimensions) that not only separates the groups but also stays as far away as possible from the nearest data points of each group. These nearest points are known as “support vectors,” and they determine the optimal boundary. SVMs are particularly well suited to high-dimensional data. Their margin maximization, controlled by a regularization parameter, helps guard against overfitting. They can handle both linear and non-linear classification through different kernels, such as linear, polynomial, and Radial Basis Function (RBF) kernels, which adds to their versatility. SVMs are commonly used in a variety of fields, including text and image classification, handwriting recognition, and the biological sciences for protein or cancer classification.

Learning Vector Quantization (LVQ)

Learning Vector Quantization (LVQ) is a type of artificial neural network algorithm that falls under the umbrella of supervised machine learning. It classifies data by comparing it to prototypes representing different classes, and it is particularly suitable for pattern recognition tasks.

LVQ starts by creating a set of prototypes from the training data, which can be considered general representatives of each class in the dataset. The algorithm then goes through each data point, measures its similarity to each prototype, and assigns it to the class of the most similar prototype. The key distinguishing feature of LVQ is its learning process. As the model iterates through the data, it adjusts the prototypes to improve the classification. The closest prototype is moved toward a data point if they belong to the same class, and pushed away from it if they belong to different classes. LVQ is commonly used where the data may not be linearly separable or has complex decision boundaries. Typical applications include image recognition, text classification, and bioinformatics. LVQ is particularly effective when the dimensionality of the data is high but the amount of data is limited.

K-nearest neighbors

K-nearest neighbors, often abbreviated as KNN, is a simple yet powerful algorithm typically employed for classification and regression. Its mechanism revolves around identifying the ‘k’ points in the training dataset closest to a given test point. The algorithm does not build a generalized model of the dataset in advance. Instead, it defers all computation until a prediction is requested, which is why KNN is known as a lazy learning algorithm. KNN looks at the ‘k’ nearest data points to the point in question, where ‘k’ can be any positive integer, and bases its prediction on the values of these neighbors. In classification, the prediction is typically the most common class among the neighbors; in regression, it is typically the average of their values. One of the main advantages of KNN is its simplicity and ease of interpretation. However, it can be computationally expensive, particularly with large datasets, and may perform poorly when there are many irrelevant features.
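Both algorithms above classify by distance to reference points. KNN keeps every training example and votes among the k closest at prediction time, whereas LVQ learns a small set of labeled prototypes. The sketch below implements basic KNN classification and the simple ‘LVQ1’ update rule in plain Python, with Euclidean distance via `math.dist` (Python 3.8+). The function names, the linearly decaying learning rate, and the toy two-cluster data are assumptions for illustration.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Lazy learning: nothing is precomputed; all work happens at prediction time.
    neighbors = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


def train_lvq(train_X, train_y, prototypes, epochs=20, learning_rate=0.3):
    """LVQ1: move the closest prototype toward same-class points, away from others."""
    protos = [([float(v) for v in vec], label) for vec, label in prototypes]
    for epoch in range(epochs):
        rate = learning_rate * (1 - epoch / epochs)  # linearly decaying step size
        for x, y in zip(train_X, train_y):
            i = min(range(len(protos)), key=lambda j: math.dist(protos[j][0], x))
            vec, label = protos[i]
            sign = 1.0 if label == y else -1.0  # attract if same class, repel otherwise
            protos[i] = ([v + sign * rate * (xi - v) for v, xi in zip(vec, x)], label)
    return protos


def lvq_predict(protos, query):
    """Assign the label of the nearest learned prototype."""
    return min(protos, key=lambda p: math.dist(p[0], query))[1]


if __name__ == "__main__":
    X = [(1, 1), (1, 2), (2, 1), (5, 5), (5, 6), (6, 5)]
    y = ["A", "A", "A", "B", "B", "B"]
    print(knn_predict(X, y, (2, 2), k=3))  # A
    # Initialize one prototype per class from the first training example of that class.
    protos = train_lvq(X, y, [((1, 1), "A"), ((5, 5), "B")])
    print(lvq_predict(protos, (5.5, 5.2)))  # B
```

Note the cost trade-off the text mentions. KNN scans all stored examples for every prediction, while LVQ only compares against its few prototypes once training is done.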

Random forest

Random forest is a powerful and versatile machine learning method that falls under the category of ensemble learning. Essentially, it is a collection, or “forest,” of decision trees, hence the name. Instead of relying on a single decision tree, it combines many decision trees to generate more accurate predictions. The way it works is fairly straightforward. When a prediction is needed, the random forest runs the input through each decision tree in the forest, and each tree makes its own prediction. The final prediction is then determined by averaging the trees’ predictions for regression tasks or by majority vote for classification tasks. This approach reduces the likelihood of overfitting, a common problem with single decision trees. Each tree in the forest is trained on a different random (bootstrap) sample of the data. Each tree also typically considers only a random subset of features at each split, which makes the overall model more robust and less sensitive to noise. Random forests are widely used and highly valued for their accuracy, versatility, and ease of use.

Factors to consider when choosing an AI model

When deciding on an AI model, these key factors need careful consideration:

Problem categorization

Problem categorization is an essential step in selecting an AI model. It involves categorizing the problem based on the type of input and output, which in turn determines the suitable algorithms to apply.

- Input categorization:
  - If the data is labeled, the problem falls under supervised learning.
  - If the data is unlabeled and we aim to uncover patterns or structures, it belongs to unsupervised learning.
  - If the goal is to optimize an objective function through interactions with an environment, it falls under reinforcement learning.
- Output categorization:
  - If the model predicts numerical values, it is a regression problem.
  - If the model assigns data points to specific classes, it is a classification problem.
  - If the model groups similar data points together without predefined classes, it is a clustering problem.

Once the problem is categorized, we can explore the available algorithms suitable for the task. A recommended approach is to implement several candidate algorithms in a machine learning pipeline and compare their performance using carefully selected evaluation criteria. The algorithm that yields the best results can then be selected automatically. Optionally, each algorithm’s hyperparameters can be tuned to improve its performance, for example with a grid or random search scored by cross-validation. If time is limited, manually selected hyperparameters can be sufficient. Note that this is only a general overview of problem categorization and algorithm selection.

Model performance

When selecting an AI model, the foremost consideration should be the quality of the model’s performance. Favor the algorithms that perform best on the metrics that matter for your problem. The nature of the problem often determines which metrics are appropriate for analyzing the model’s results. Frequently used metrics include accuracy, precision, recall, and the F1-score. However, not all metrics are universally applicable. For instance, accuracy is misleading on imbalanced datasets, where a model that always predicts the majority class can score highly while never detecting the minority class. Hence, choosing an appropriate metric or set of metrics to assess your model’s performance is an essential step before embarking on the model selection process.

Explainability of the model

The ability to interpret and explain model outcomes is crucial in many contexts. However, many algorithms function like “black boxes,” making their results hard to explain, however good those results may be. This can become a significant impediment in scenarios where explainability is critical. Certain models, like linear regression and decision trees, fare better when it comes to explainability. Hence, gauging the interpretability of each model’s results is vital in choosing an appropriate model. Complexity and explainability often stand at opposite ends of the spectrum, which makes complexity a crucial factor in its own right.

Model complexity

The complexity of a model plays a significant role in its capability to uncover intricate patterns within the data. This advantage can be offset, however, by the challenges complexity poses for maintenance and interpretability. A few key notions to consider:

- Greater complexity can often lead to better performance, albeit at increased cost.
- There is an inverse relationship between complexity and explainability: the more intricate the model, the harder it is to explain its outputs.
- Apart from explainability, the cost of building and maintaining a model is a pivotal factor in a project’s success. The more complex a model is, the greater these costs will be throughout the model’s lifecycle.

Data set type and size

The volume and kind of training data at your disposal are critical considerations when selecting an AI model. Neural networks excel at managing and interpreting large volumes of data. A K-Nearest Neighbors (KNN) model, by contrast, is better suited to smaller datasets, because its prediction cost grows with the number of stored examples. Beyond the sheer quantity of data available, another consideration is how much data you need to achieve satisfactory results. Sometimes a robust solution can be developed with merely 100 training instances; at other times you might need 100,000. Understanding your problem and the required data volume should guide your selection of a model capable of processing it. Different AI models require different types and quantities of data for successful training and operation:

- Supervised learning models demand a substantial amount of labeled data, which can be costly and labor-intensive to acquire.
- Unsupervised learning models can work with unlabeled data, but their results may not be meaningful if the data is noisy or irrelevant.
- Reinforcement learning models require numerous trial-and-error interactions with an environment, which can be difficult to simulate faithfully or costly to carry out in reality.

Feature dimensionality

Dimensionality is a significant aspect to consider when selecting an AI model. It can be viewed from two perspectives: a dataset’s vertical and horizontal dimensions. The vertical dimension refers to the volume of data available, while the horizontal dimension denotes the number of features in the dataset. The role of the vertical dimension has already been discussed. The horizontal dimension is equally vital. More features often improve the model’s ability to find better solutions, but they also add to the model’s complexity. The “Curse of Dimensionality” explains why. As the number of features grows, the data becomes increasingly sparse in the feature space, so a model needs far more examples to generalize well. Not every model scales equally well to high-dimensional datasets. Therefore, managing high-dimensional datasets effectively may require dimensionality reduction algorithms such as Principal Component Analysis (PCA), one of the most widely used methods for this purpose.

Training duration and expense

The duration and cost of training a model are crucial considerations in selecting an AI model. Suppose one model is 98% accurate but costs $100,000 to train, while a slightly less accurate one reaches 97% and costs only $10,000. Which to choose depends on your specific situation. AI models that need to incorporate new data swiftly cannot afford lengthy training cycles. For instance, a recommendation system that requires regular updates based on user interactions benefits from a cost-effective and swift training cycle. Striking the right balance between training time, cost, and model performance is a key factor when architecting a scalable solution. The aim is to maximize efficiency without compromising the model’s performance.

Inference speed

Inference speed, the time a model takes to process data and produce a prediction, is vital when choosing an AI model. Consider a self-driving vehicle system: it requires near-instantaneous decisions, which rules out models with slow inference times. To illustrate, the KNN (K-Nearest Neighbors) model performs most of its computational work during the inference stage, which can make it slow to generate predictions. A decision tree model, on the other hand, is fast at inference, even though it may require a longer training period. Understanding your use case’s inference-speed requirements is therefore crucial when deciding on an AI model.

A few additional points to consider:

- Real-time requirements: If the AI application needs to process data and return results in real time, model selection must account for this. For instance, certain complex models may be unsuitable because of their longer inference times.
- Hardware constraints: If the AI model needs to run on specific hardware (like a smartphone, an embedded system, or a particular server configuration), these constraints may influence the type of model that can be used.
- Maintenance and updating: Models often need to be updated with new data, and some models are easier to update than others. This can be an important factor depending on the application.
- Data privacy: If the AI application deals with sensitive data, you may want to consider models that can learn without direct access to raw data. For instance, federated learning is a machine learning approach that trains models on decentralized devices or servers holding local data samples, without exchanging those samples.
- Model robustness and generalization: The model’s ability to generalize from its training data to unseen data, and its robustness to adversarial attacks or shifts in the data distribution, are important considerations.
- Ethical considerations and bias: AI models can unintentionally introduce or amplify bias, leading to unfair or unethical outcomes. Strategies for understanding and mitigating these biases should be part of the selection process.
- Specific AI model architectures: You may want to look more closely at specific architectures suited to particular tasks or data types. Examples include Convolutional Neural Networks (CNNs) for image processing, and Recurrent Neural Networks (RNNs) or Transformer-based models for sequential data.
- Ensemble methods: These methods combine several models, often achieving better performance than any single model on its own.
- AutoML and Neural Architecture Search (NAS): These techniques automatically search for the best model architecture and hyperparameters. This can be very helpful when the best model type is unknown or too costly to find manually.
- Transfer learning: Reusing models pre-trained on similar tasks, so as to build on the knowledge gained from those tasks, is a common practice in AI. This reduces the amount of training data required and saves computation time.

Remember, the best AI model depends on your needs, resources, and constraints. It is all about finding the right balance to meet your project’s objectives.
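The ensemble idea from the list above is also the core of the random forest described earlier: many models trained on resampled data, combined by vote. The sketch below shows it in its simplest form, bagging one-split decision trees (‘stumps’) on bootstrap samples and taking a majority vote. It is a simplified cousin of a random forest, which would use full decision trees and random feature subsets at each split. The function names, the number of models, the seed, and the one-feature toy data are illustrative assumptions.

```python
import random
from collections import Counter


def fit_stump(X, y):
    """Fit a one-split decision tree ('stump') by trying every feature and threshold."""
    overall = Counter(y).most_common(1)[0][0]
    best = None
    for f in range(len(X[0])):
        for threshold in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= threshold]
            right = [lab for row, lab in zip(X, y) if row[f] > threshold]
            # Each side predicts its majority label (or the overall majority if empty).
            left_label = Counter(left).most_common(1)[0][0] if left else overall
            right_label = Counter(right).most_common(1)[0][0] if right else overall
            errors = sum(lab != left_label for lab in left) + sum(lab != right_label for lab in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    _, f, threshold, left_label, right_label = best
    return lambda row: left_label if row[f] <= threshold else right_label


def fit_bagged_stumps(X, y, n_models=25, seed=0):
    """Train each stump on a bootstrap sample (rows drawn with replacement)."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return models


def predict_majority(models, row):
    """Combine the ensemble's predictions by majority vote."""
    return Counter(model(row) for model in models).most_common(1)[0][0]


if __name__ == "__main__":
    X = [[1], [2], [3], [4], [6], [7], [8], [9]]
    y = [0, 0, 0, 0, 1, 1, 1, 1]
    ensemble = fit_bagged_stumps(X, y)
    print(predict_majority(ensemble, [1]), predict_majority(ensemble, [9]))
```

Because each stump sees a slightly different sample, an occasional poor stump (for example, one trained on a sample that happens to contain only one class) is outvoted by the rest.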

Validation strategies used for AI model selection

Validation techniques are key in model selection because they assess a model's performance on unseen data, helping ensure that the selected model generalizes well.

Resampling methods: Resampling methods are techniques for evaluating how machine learning models perform on data they were not trained on. They rearrange and reuse the available data to estimate how well a model generalizes. Examples include random split, time-based split, K-fold cross-validation, stratified K-fold, and bootstrap. Resampling methods help mitigate biases in data sampling, ensure accurate model evaluation, handle time series data, stabilize performance estimates, and detect issues like overfitting. They play a crucial role in model selection and performance assessment in AI applications.

Random split: This technique randomly distributes the data into training, testing, and, ideally, validation subsets. Its main advantage is that all three subsets are likely to represent the original population fairly well. Put simply, random splitting helps avoid biased data sampling.

The role of the validation set in model selection is worth noting, since it raises the question of why two test sets are needed. During feature selection and model tuning, the test set is used to evaluate the model, which means the model's parameters and feature set are chosen to deliver the best result on that test set. The validation set, which consists of completely unseen data points (that haven't been utilized in the tuning and feature selection stages), is therefore used to evaluate the
final model. Note that many practitioners reverse these names, using the validation set for tuning and the test set for the final evaluation; the principle is the same either way.

Time-based split: In certain scenarios, data cannot be split randomly. For example, when training a weather-forecasting model, the data cannot be randomly split into training and testing sets, as this would disrupt the seasonal patterns. Such data is known as time series data, and in these situations a time-oriented split is used. For instance, the training set could contain data from the past three years plus the first ten months of the current year, with the remaining two months held out as the testing or validation set.

There is also a concept known as 'window sets': the model is trained up to a specific date and tested on the subsequent dates, and this is repeated so that the training window moves forward by one day each iteration (correspondingly, the test set shrinks by a day). The advantage of this method is that it helps stabilize the model and prevent overfitting, especially when the test set is relatively small (e.g., 3 to 7 days).

However, time series data has a downside: the events or data points are not mutually independent, and a single event might influence all subsequent data. For instance, a change in the ruling political party might significantly alter population statistics in the following years, and a global event like the COVID-19 pandemic can profoundly affect economic data for years afterward. In such scenarios, a machine learning model cannot learn effectively from past data, because significant disparities exist between data points before and after the event.

K-fold cross-validation: K-fold cross-validation is a robust validation technique that begins by randomly shuffling the dataset and then dividing it into k subsets (folds). The procedure iteratively treats each fold as the test set while the remaining folds are pooled together to form the training set. The model is trained on this training set and then evaluated on the test fold. The process repeats k times, once per fold, producing k different results from the k different test sets. This comprehensively evaluates the model's performance across
different parts of the data. At the end of this process, the best-performing model can be identified by the highest average score it achieves across the k test sets. This gives a more balanced and thorough assessment of a model's performance than a single train/test split.

Stratified K-fold: Stratified K-fold is a variation of K-fold cross-validation that takes the distribution of the target variable into account. This is the key difference from regular K-fold cross-validation, which ignores the target variable's values when splitting. In stratified K-fold, each fold contains a representative ratio of the target classes. For example, in a binary classification problem, stratified K-fold distributes the instances of both classes so that each fold preserves the class proportions of the full dataset. This makes model evaluation more accurate and reduces bias in the performance estimate, because each fold is a good representative of the overall dataset. Consequently, the model's performance metrics are more likely to be reliable indicators of its performance on unseen data.

Bootstrap: Bootstrap is a powerful method for achieving a stable model, and it resembles random splitting because it relies on random sampling. To begin bootstrapping, you first choose a sample size, which is generally the same as the size of the original dataset. You then randomly choose a data point from the original dataset and add it to the bootstrap sample. Importantly, after selecting a data point, you return it to the original dataset. This "sampling with replacement" is repeated N times, where N is the sample size. As a result, a bootstrap sample may contain multiple copies of the same data point, because every draw is made from the full set of original data points, regardless of previous draws. The model is then trained on this bootstrap sample. For evaluation, the data points that were never selected, known as out-of-bag samples, are used. This provides an estimate of how well the model is likely to perform on unseen data.
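The resampling strategies above differ mainly in how they assign row indices to training and evaluation sets. The dependency-free Python sketch below shows that assignment for each one. The function names (`random_split`, `window_splits`, `kfold_splits`, `stratified_kfold_splits`, `bootstrap_oob`) are hypothetical helpers written for illustration, and the 60/20/20 proportions and fixed seeds are arbitrary assumptions. The sketch only produces index lists; training and scoring a model on them is left to whatever framework you use.

```python
import random
from collections import defaultdict


def random_split(n, train_frac=0.6, tune_frac=0.2, seed=0):
    """Random split: shuffle once, then cut into train / tuning / final hold-out."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    a = int(n * train_frac)
    b = a + int(n * tune_frac)
    return idx[:a], idx[a:b], idx[b:]


def window_splits(n, first_cutoff):
    """Time-based 'window sets': rows must already be in time order (never shuffled).
    Train on everything before the cutoff and test on everything after it; each
    iteration moves the cutoff forward one step, so the test set shrinks by one."""
    for cutoff in range(first_cutoff, n):
        yield list(range(cutoff)), list(range(cutoff, n))


def _folds_to_splits(folds):
    # Each fold serves once as the test set; the other folds are pooled for training.
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


def kfold_splits(n, k, seed=0):
    """K-fold cross-validation: shuffle, then deal indices into k folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return _folds_to_splits([idx[i::k] for i in range(k)])


def stratified_kfold_splits(labels, k, seed=0):
    """Stratified K-fold: deal each class's shuffled indices round-robin across
    the folds, so every fold keeps roughly the class ratio of the full dataset."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    folds = [[] for _ in range(k)]
    pos = 0
    for members in by_class.values():
        rng.shuffle(members)
        for i in members:
            folds[pos % k].append(i)
            pos += 1
    return _folds_to_splits(folds)


def bootstrap_oob(n, seed=0):
    """Bootstrap: draw n indices WITH replacement; rows never drawn are out-of-bag."""
    rng = random.Random(seed)
    sample = [rng.randrange(n) for _ in range(n)]
    drawn = set(sample)
    oob = [i for i in range(n) if i not in drawn]
    return sample, oob
```

For example, `list(window_splits(5, 3))` yields `([0, 1, 2], [3, 4])` followed by `([0, 1, 2, 3], [4])`. For large n, roughly 1/e (about 36.8%) of the rows end up out-of-bag in each bootstrap draw, because a given row is missed by all n draws with probability (1 − 1/n)^n.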

Probabilistic measures: Probabilistic measures evaluate a model by considering both its performance and its complexity. A model's complexity reflects its ability to capture the variability in the data. For instance, linear regression, which is prone to high bias, is considered a low-complexity model, whereas a neural network, which can model complex relationships, is considered highly complex. With probabilistic measures, the model's performance is calculated solely on the training data, so no separate test set is needed. However, probabilistic measures do not account for the uncertainty inherent in models. This can create a tendency to select simpler models over more complex ones, which is not always the optimal choice.

Akaike Information Criterion (AIC): The Akaike Information Criterion (AIC), developed by statistician Hirotugu Akaike, is used to evaluate the quality of a statistical model. The main premise of AIC is that no model can completely capture reality, so some information is always lost. This information loss can be quantified using the Kullback-Leibler (KL) divergence, which measures how one probability distribution diverges from another. Akaike identified a connection between KL divergence and maximum likelihood, the principle of choosing the parameters that maximize the probability of observing the data X under a specified probability distribution. Building on this, Akaike formulated an information criterion, now known as AIC, that estimates information loss and allows different models to be compared. It is computed as AIC = 2k − 2 ln(L), where k is the number of estimated parameters and L is the maximized likelihood; the preferred model is the one with the lowest AIC, i.e., the least estimated information loss. However, AIC has its limitations.
While it is well suited to identifying models that minimize information loss on the training data, it may struggle with generalization. Because its penalty for each additional parameter is relatively mild, it tends to favor complex models, which may perform well on training data but less well on unseen data.

Bayesian Information Criterion (BIC): The Bayesian Information Criterion (BIC),
grounded in Bayesian probability theory, is designed to evaluate models trained using maximum likelihood estimation. Its fundamental feature is a penalty term for model complexity: it is computed as BIC = k ln(n) − 2 ln(L), where k is the number of estimated parameters, n is the number of data points, and L is the maximized likelihood, and the model with the lowest BIC is preferred. The principle behind the penalty is simple: the more complex a model, the higher the risk of overfitting, especially with small datasets. BIC counteracts this with a penalty that increases with the model's complexity, which keeps the model from becoming overly complicated and overfitting. However, a key consideration when using BIC is the size of the dataset. It is better suited to large datasets because, with smaller datasets, BIC's penalization could lead to the selection of oversimplified models that miss some of the nuances in the data.

Minimum Description Length (MDL): The Minimum Description Length (MDL) is a principle that originates from information theory, a field that uses measures such as entropy to quantify the average number of bits required to represent an event given a particular probability distribution or random variable. MDL refers to the smallest number of bits needed to describe both the model and the data encoded with the help of that model. The goal under the MDL principle is to find the model that yields the shortest total description. This means finding the most compact representation, keeping the model's complexity under control while still representing the data accurately. The approach favors simplicity and parsimony, which can help avoid overfitting and improve generalization.

Structural Risk Minimization (SRM): Structural Risk Minimization (SRM) addresses the challenge machine learning models face in finding a general theory from limited data, which often leads to overfitting. Overfitting occurs when a model becomes excessively tailored to the training data, compromising its ability to generalize to unseen data. SRM aims to strike a balance between the model's complexity and its ability to fit the data.
It recognizes that a complex model may fit the training data very well but can suffer from poor performance on new, unseen data. On the other hand, a simple model may not capture the intricacies of the data and may underfit.

By incorporating the concept of structural risk, SRM seeks to minimize both the training error and the model complexity. It avoids excessive complexity that could lead to overfitting while still achieving reasonable accuracy on unseen data. SRM favors a model that achieves a good trade-off between model complexity and data fit, thereby improving generalization.

Each validation technique has its own strengths and limitations, which must be carefully considered in the model selection process.

Endnote

In the realm of artificial intelligence, choosing the right AI model is a blend of science, engineering, and a dash of intuition. It's about understanding the intricacies of supervised, unsupervised, semi-supervised, and reinforcement learning. It's about delving deep into the world of deep learning and appreciating the strengths of different model types, from the elegance of linear regression, the complexity of deep neural networks, and the versatility of decision trees to the probabilistic magic of Naive Bayes and the collaborative power of random forests.

However, choosing the right AI model isn't just a matter of understanding these models. It's about factoring in the problem at hand, weighing model complexity against performance, understanding your data type and size, and accommodating the dimensionality of your features. It's about considering the resources, time, and expense needed for training and the speed required for inference. It's about ensuring a thorough validation strategy for your model selection.

Choosing the right AI model is a journey through a labyrinth of choices and considerations. But with an understanding of AI models and the considerations involved, you are ready to make your way through this complex process, one decision at a time. So take this knowledge, apply it to your unique application, and unlock the true potential of artificial intelligence.
Ultimately, it's not just about choosing an AI model but about choosing the right path to a more
intelligent future.

Ready to leverage the power of artificial intelligence but lost in a sea of choices? Contact LeewayHertz's AI consulting team and let our experts handpick the perfect AI model for your application. Dive into the future with us!

Author's Bio

Akash Takyar
CEO LeewayHertz

Akash Takyar is the founder and CEO of LeewayHertz. The experience of building over 100 platforms for startups and enterprises allows Akash to rapidly architect and design solutions that are scalable and beautiful. Akash's ability to build enterprise-grade technology solutions has attracted over 30 Fortune 500 companies, including Siemens, 3M, P&G, and Hershey's. Akash is an early adopter of new technology, a passionate technology enthusiast, and an investor in AI and IoT startups.

©2023 LeewayHertz. All Rights Reserved.