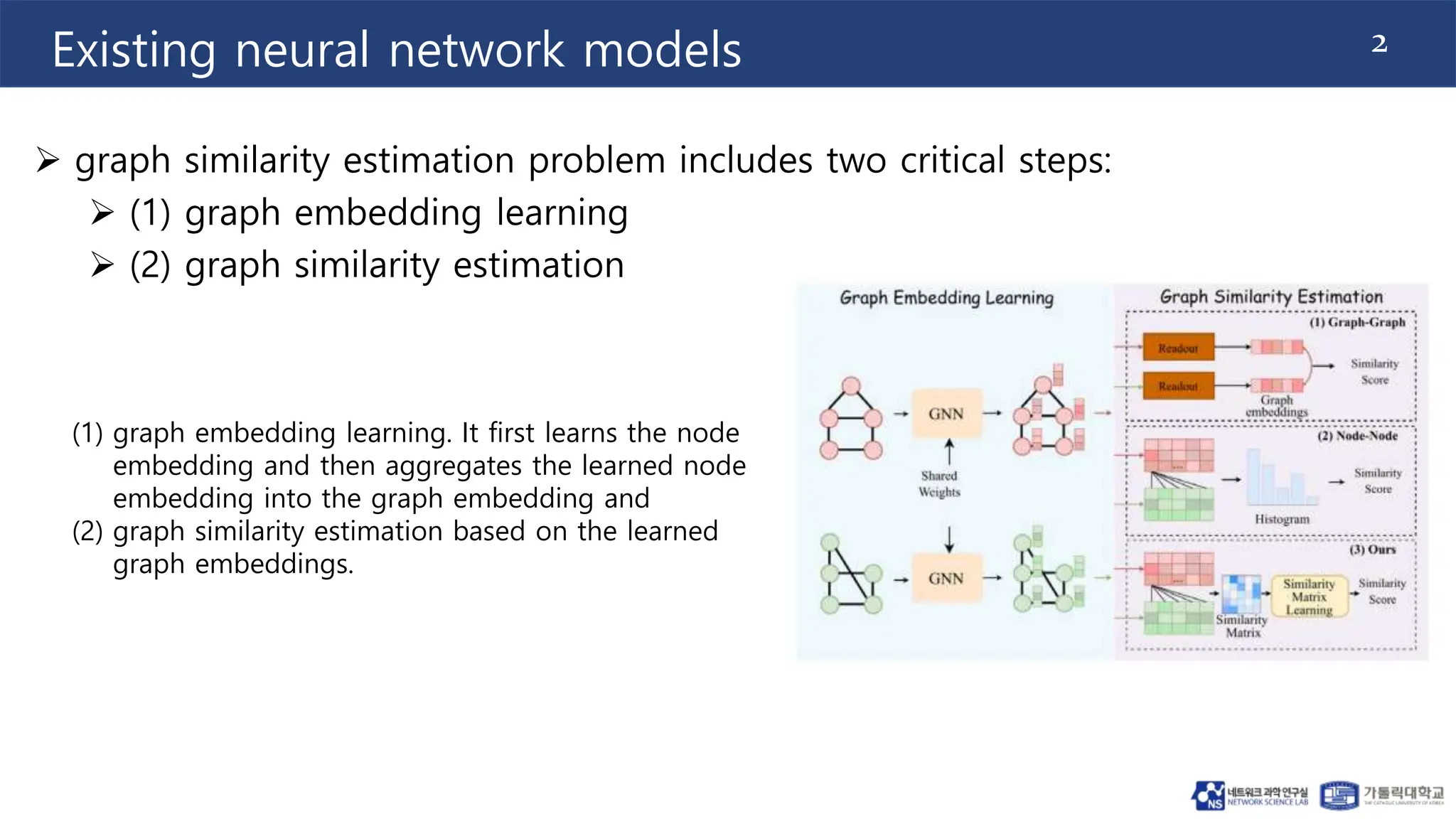

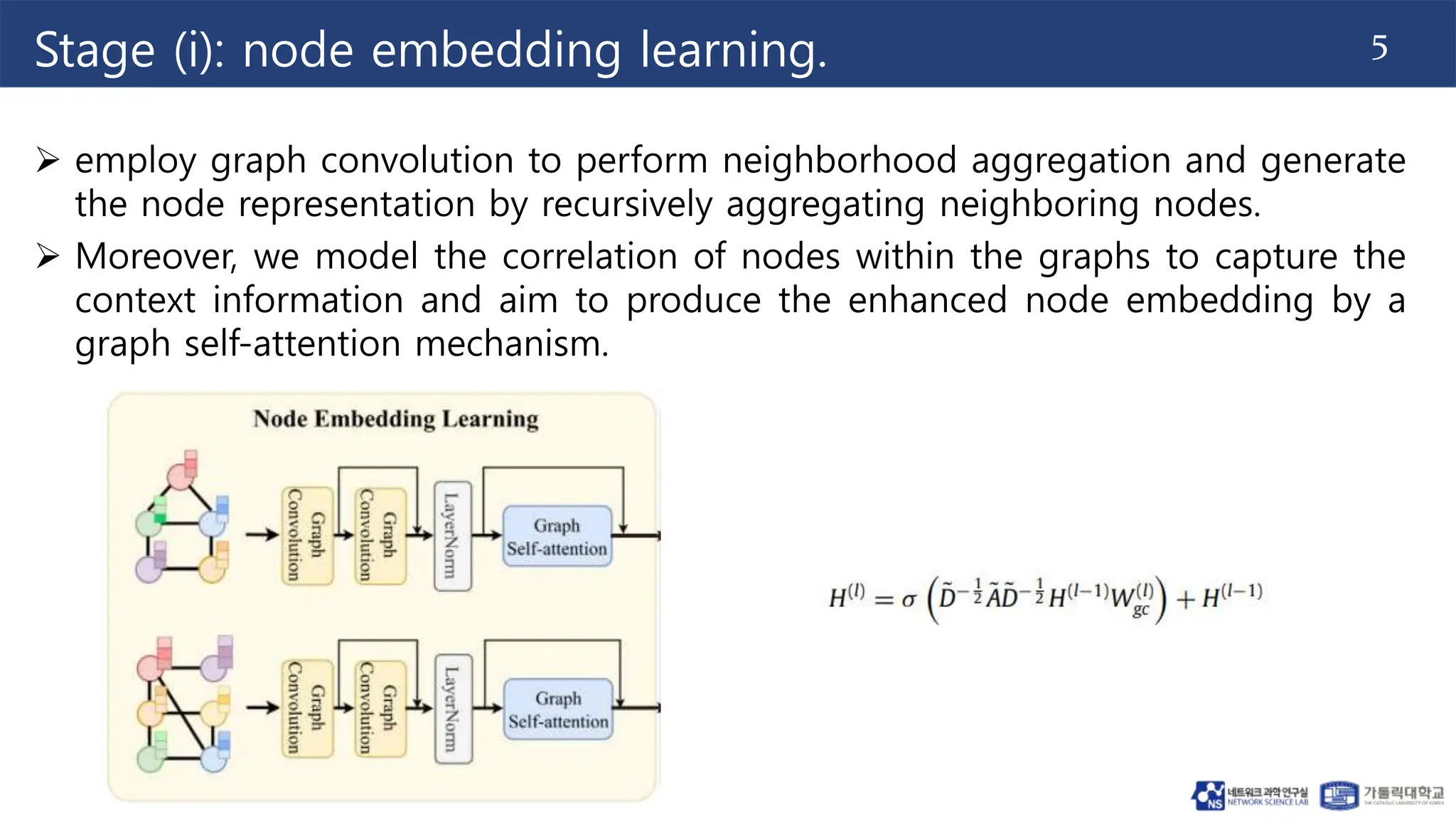

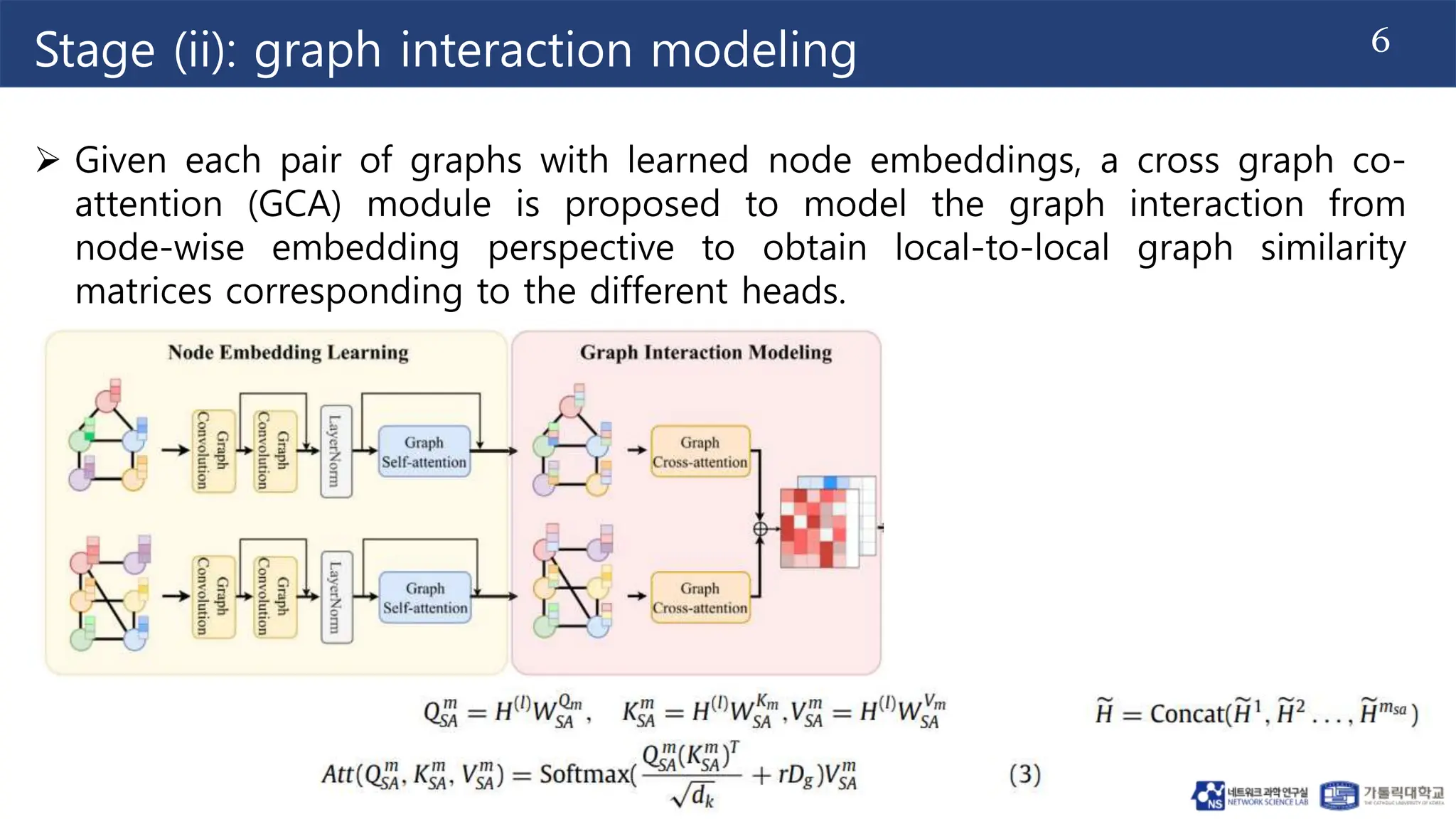

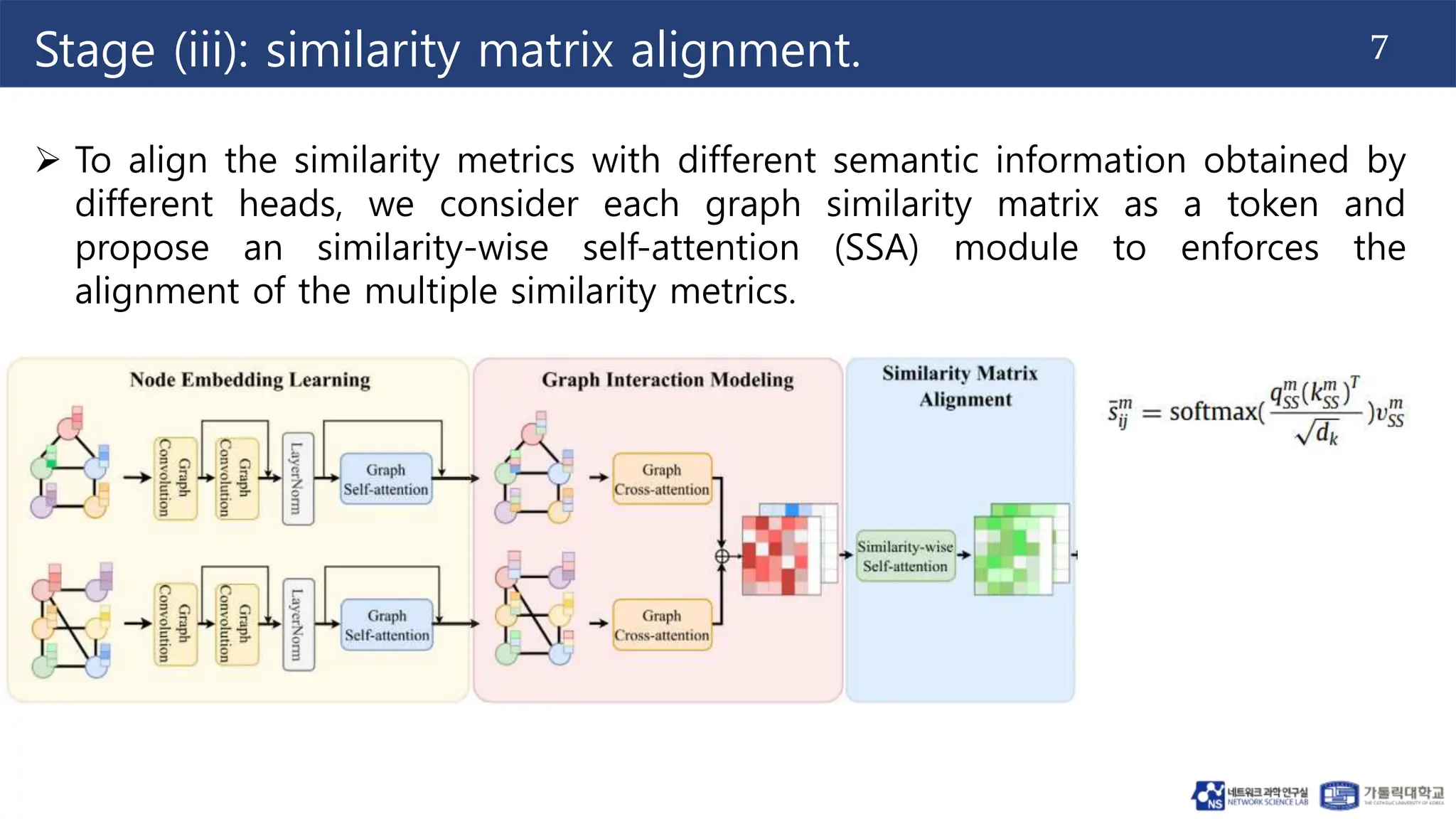

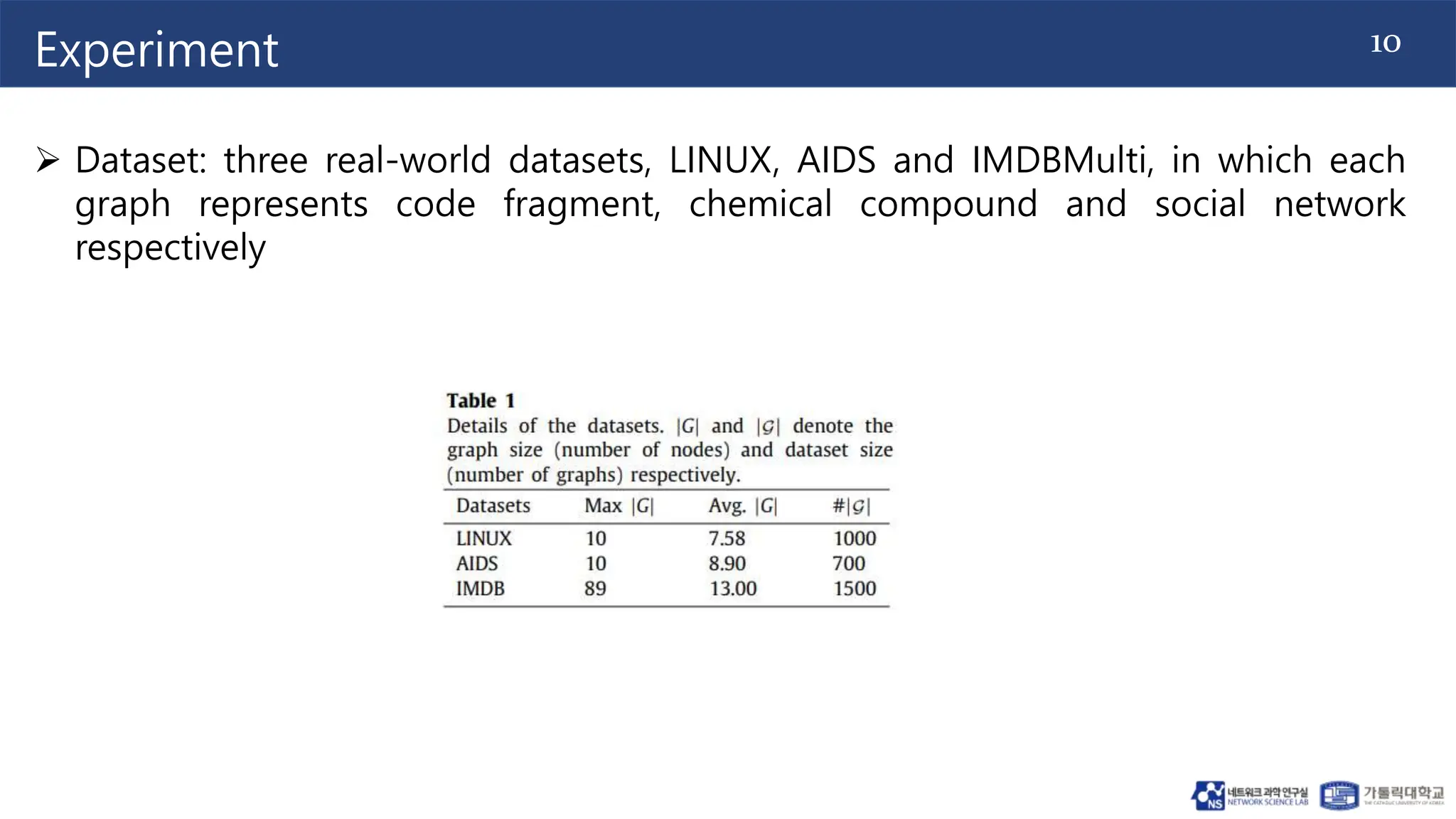

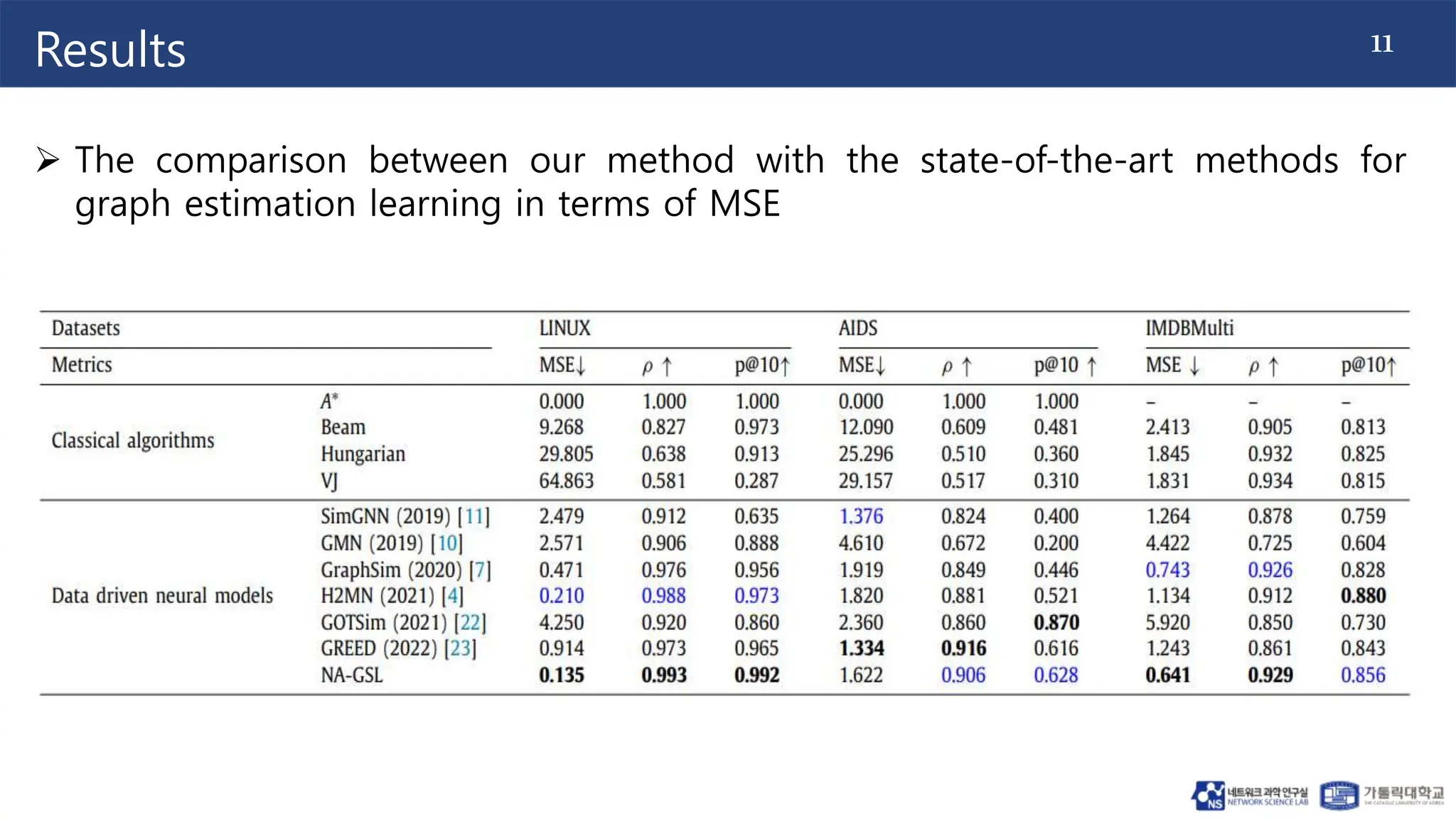

The document proposes a graph similarity learning method based on node-wise attention. It has four stages: (1) node embedding learning with graph convolution, (2) graph interaction modeling with cross-graph co-attention, (3) similarity matrix alignment with similarity-wise self-attention, and (4) similarity matrix learning with a similarity structure learning module. Evaluated on three datasets, the method outperforms state-of-the-art methods as measured by mean squared error (MSE). Ablation experiments demonstrate the effectiveness of using different graph neural networks. The approach aims to improve graph similarity learning by using attention mechanisms to encode both node features and structural properties.
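The summary does not specify layer definitions, so the sketch below is only one plausible way to wire the four stages together. It is not the authors' implementation. Every functional form is an assumption: a Kipf-and-Welling-style GCN layer, dot-product co-attention with residual connections, row-wise self-attention over the similarity matrix, and a pooled linear readout passed through a sigmoid. All names (`gcn_layer`, `co_attention`, `self_attention_on_similarity`, `similarity_score`, `predict`) are hypothetical, and the weights are random and untrained. MSE is included because the summary names it as the evaluation metric.

```python
import numpy as np

rng = np.random.default_rng(0)

def normalize_adj(adj):
    # Symmetric normalization with self-loops: D^-1/2 (A + I) D^-1/2 (assumed GCN form)
    a = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(adj, x, w):
    # Stage 1 (assumed): one graph convolution layer followed by ReLU
    return np.maximum(normalize_adj(adj) @ x @ w, 0.0)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def co_attention(h1, h2):
    # Stage 2 (assumed): each node attends over the other graph's nodes
    scores = h1 @ h2.T                           # (n1, n2) cross-graph affinities
    h1_cross = softmax(scores, axis=1) @ h2      # (n1, d) context from graph 2
    h2_cross = softmax(scores.T, axis=1) @ h1    # (n2, d) context from graph 1
    return h1 + h1_cross, h2 + h2_cross          # residual combination

def self_attention_on_similarity(s):
    # Stage 3 (assumed): rows of the similarity matrix attend to each other
    attn = softmax(s @ s.T / np.sqrt(s.shape[1]), axis=1)  # (n1, n1)
    return attn @ s                                         # (n1, n2)

def similarity_score(s, w_out, b_out):
    # Stage 4 (assumed): pool the variable-size matrix into fixed features, then a sigmoid
    feats = np.array([s.mean(), s.max(), s.max(axis=1).mean(), s.max(axis=0).mean()])
    return float(1.0 / (1.0 + np.exp(-(feats @ w_out + b_out))))

def predict(adj1, x1, adj2, x2, params):
    h1 = gcn_layer(adj1, x1, params["w_gcn"])    # shared weights for both graphs
    h2 = gcn_layer(adj2, x2, params["w_gcn"])
    h1, h2 = co_attention(h1, h2)
    s = h1 @ h2.T / np.sqrt(h1.shape[1])         # node-to-node similarity matrix
    s = self_attention_on_similarity(s)
    return similarity_score(s, params["w_out"], params["b_out"])

def mse(pred, target):
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))

# Hypothetical usage: a triangle vs. a 4-node path, 3-dim random node features
params = {"w_gcn": rng.normal(scale=0.5, size=(3, 8)),
          "w_out": rng.normal(size=4), "b_out": 0.0}
adj1 = np.array([[0, 1, 1], [1, 0, 1], [1, 1, 0]], dtype=float)
adj2 = np.array([[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]], dtype=float)
x1, x2 = rng.normal(size=(3, 3)), rng.normal(size=(4, 3))
score = predict(adj1, x1, adj2, x2, params)
print(score, mse([score], [0.5]))
```

The pooled readout in stage 4 is one simple way to handle graphs of different sizes, because the similarity matrix is `n1 x n2`. The actual similarity structure learning module may work differently. In training, the parameters would be fit by minimizing `mse` against ground-truth similarity scores.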