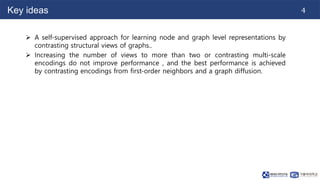

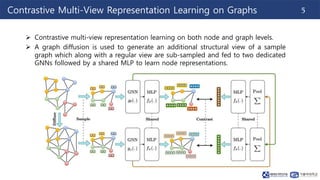

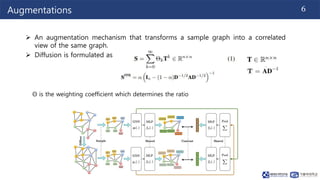

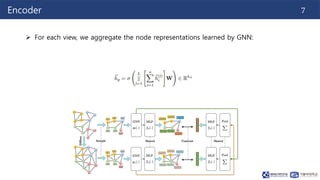

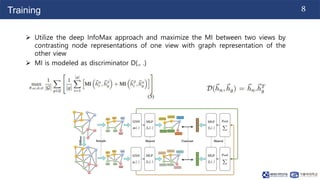

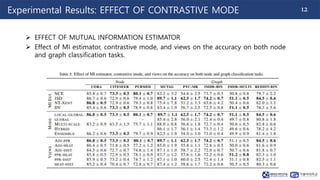

This document summarizes a paper on contrastive multi-view representation learning on graphs. The method generates two structural views of a graph, one from first-order neighbors and one from a graph diffusion, and learns node and graph representations by maximizing the mutual information between the encodings of the two views. Performance is best with exactly these two views: adding more views or contrasting multi-scale encodings does not improve it further.
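To make the two views concrete, the sketch below builds a diffusion view from an adjacency matrix using the closed-form Personalized PageRank (PPR) diffusion, S = α(I − (1 − α) D^{-1/2} A D^{-1/2})^{-1}. PPR is used here as one common example of a graph diffusion. The function name `ppr_diffusion`, the teleport value `alpha=0.2`, and the toy 4-node path graph are assumptions made for illustration and are not taken from the summary.

```python
import numpy as np

def ppr_diffusion(adj, alpha=0.2):
    """Closed-form PPR diffusion of a dense, symmetric adjacency matrix.

    Hypothetical helper for illustration; alpha is an assumed teleport value.
    """
    adj = np.asarray(adj, dtype=float)
    n = adj.shape[0]
    deg = adj.sum(axis=1)

    # D^{-1/2}, leaving isolated nodes (degree 0) at zero to avoid division by zero.
    d_inv_sqrt = np.zeros(n)
    mask = deg > 0
    d_inv_sqrt[mask] = deg[mask] ** -0.5

    # Symmetrically normalized adjacency: D^{-1/2} A D^{-1/2}.
    norm_adj = d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]

    # S = alpha * (I - (1 - alpha) * norm_adj)^{-1}
    return alpha * np.linalg.inv(np.eye(n) - (1.0 - alpha) * norm_adj)

# View 1: first-order neighbors (the adjacency matrix of a 4-node path graph).
adj = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

# View 2: the diffusion matrix, which also connects nodes that are not direct neighbors.
S = ppr_diffusion(adj)
```

In this toy graph, nodes 0 and 3 are not adjacent, so the first view has no edge between them, while the diffusion view assigns them a small positive weight. Each view would then be passed through its own encoder before the encodings are contrasted.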

![240311_Thuy_Labseminar[Contrastive Multi-View Representation Learning on Graphs].pptx](https://image.slidesharecdn.com/240311thuylabseminar-240409105318-e4ddfee7/85/240311_Thuy_Labseminar-Contrastive-Multi-View-Representation-Learning-on-Graphs-pptx-15-320.jpg)