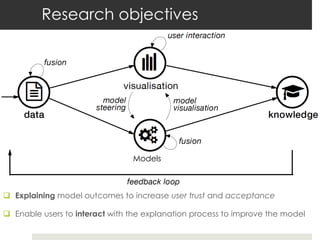

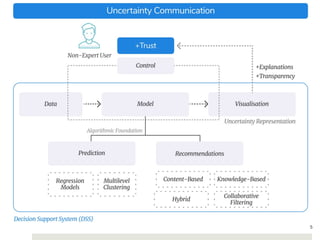

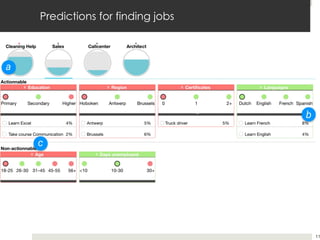

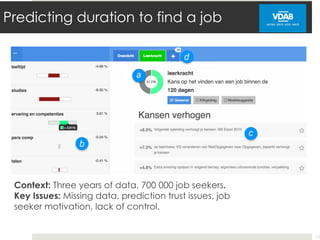

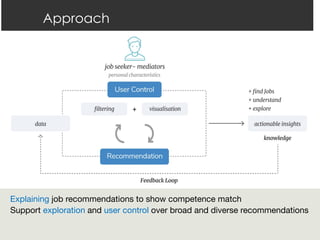

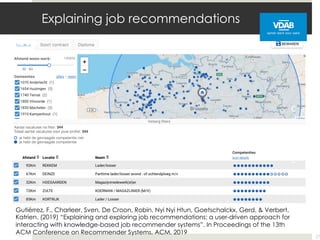

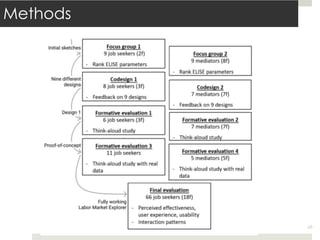

This document summarizes Katrien Verbert's presentation on explaining job recommendations from a human-centered perspective. The presentation covers three points: (1) why job recommendation models need to be explained in order to increase user trust and acceptance; (2) how explanation methods such as visualizations let users interact with explanations and help improve the models; and (3) how the explanations of a job recommendation system can be designed to empower users, clarify recommendations, and support job mediators. The research aims to balance explanation, exploration, and actionable insights in interactions with recommender systems.

![Support the dialogue between domain expert and laymen](https://image.slidesharecdn.com/xai-hr-220923135626-77475239/85/Explaining-job-recommendations-a-human-centred-perspective-14-320.jpg)

Human-in-the-loop

“… the expert can become the intermediary between the [system] and the [end-user] in order to avoid misinterpretation and incorrect decisions on behalf of the data…”

Sven Charleer, Andrew Vande Moere, Joris Klerkx, Katrien Verbert, and Tinne De Laet. 2017. Learning Analytics Dashboards to Support Adviser-Student Dialogue. IEEE Transactions on Learning Technologies (2017), 1–12.

![Design goals](https://image.slidesharecdn.com/xai-hr-220923135626-77475239/85/Explaining-job-recommendations-a-human-centred-perspective-16-320.jpg)

- [DG1] Control the message
- [DG2] Clarify the recommendations
- [DG3] Support the mediator

![[DG1] Control the message](https://image.slidesharecdn.com/xai-hr-220923135626-77475239/85/Explaining-job-recommendations-a-human-centred-perspective-20-320.jpg)

Two themes:

1. Customization
2. Importance of the human factor

![[DG2] Clarify recommendations](https://image.slidesharecdn.com/xai-hr-220923135626-77475239/85/Explaining-job-recommendations-a-human-centred-perspective-21-320.jpg)

Two themes:

1. Understanding the visualisation
2. Convincing power

![[DG3] Support the mediator](https://image.slidesharecdn.com/xai-hr-220923135626-77475239/85/Explaining-job-recommendations-a-human-centred-perspective-22-320.jpg)

Useful cases:

- Orientation
- Job mobility

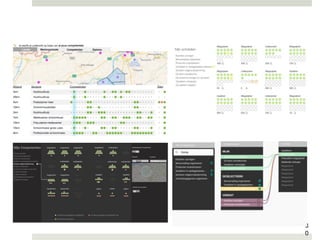

![Labor Market Explorer Design Goals](https://image.slidesharecdn.com/xai-hr-220923135626-77475239/85/Explaining-job-recommendations-a-human-centred-perspective-31-320.jpg)

- [DG1] Exploration/Control: Job seekers should be able to control recommendations and filter out the information flow coming from the recommender engine by prioritizing specific items of interest.
- [DG2] Explanations: Recommendations and matching scores should be explained, and details should be provided on demand.
- [DG3] Actionable Insights: The interface should provide actionable insights to help job seekers find new or more job recommendations from different perspectives.
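As a rough illustration of how the three Labor Market Explorer design goals could fit together in code, the sketch below computes a matching score that can be broken down per skill (DG2), lets the job seeker re-weight skills they care about (DG1), and lists the skills that fall short (DG3). It is a minimal sketch under stated assumptions, not the method used in the presentation: the `Job` class, the `explain_match` and `missing_skills` functions, the skill levels in [0, 1], and the weighted-coverage scoring formula are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Job:
    """Hypothetical job posting: skill name -> required level in [0, 1]."""
    title: str
    required_skills: Dict[str, float]


def explain_match(
    seeker_skills: Dict[str, float],
    job: Job,
    priorities: Optional[Dict[str, float]] = None,
) -> Tuple[float, Dict[str, float]]:
    """Return (score, per-skill contributions) for one job.

    `priorities` maps a skill to a user-chosen weight (default 1.0), so the
    job seeker can emphasise the items they care about (assumed DG1 control).
    The per-skill contributions are the explanation of the score (DG2).
    """
    priorities = priorities or {}
    contributions: Dict[str, float] = {}
    total_weight = 0.0
    for skill, required in job.required_skills.items():
        weight = priorities.get(skill, 1.0)
        have = seeker_skills.get(skill, 0.0)
        # Coverage is the fraction of the required level the seeker has, capped at 1.
        coverage = min(have / required, 1.0) if required > 0 else 1.0
        contributions[skill] = weight * coverage
        total_weight += weight
    score = sum(contributions.values()) / total_weight if total_weight else 0.0
    return score, contributions


def missing_skills(seeker_skills: Dict[str, float], job: Job) -> List[str]:
    """Skills below the required level: a simple actionable insight (DG3)."""
    return sorted(
        skill
        for skill, required in job.required_skills.items()
        if seeker_skills.get(skill, 0.0) < required
    )


if __name__ == "__main__":
    seeker = {"python": 0.8, "sql": 0.2}
    job = Job("Data analyst", {"python": 0.8, "sql": 0.4})
    score, parts = explain_match(seeker, job)
    print(job.title, score, parts, missing_skills(seeker, job))
```

Returning the per-skill contributions alongside the score means an interface could show the overall match first and reveal the breakdown only when the user asks for it.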