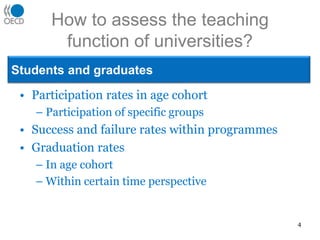

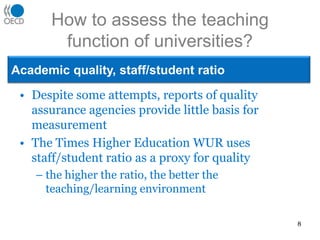

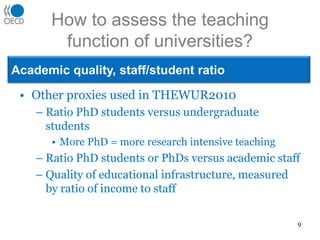

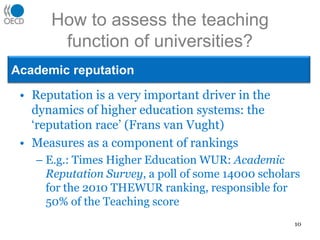

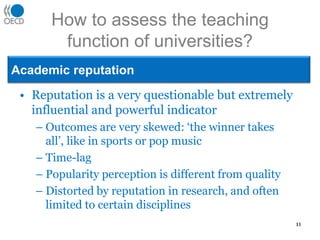

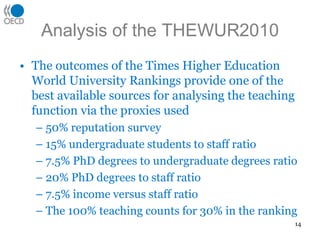

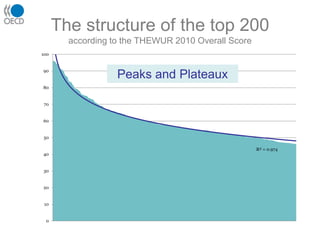

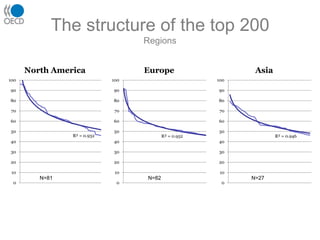

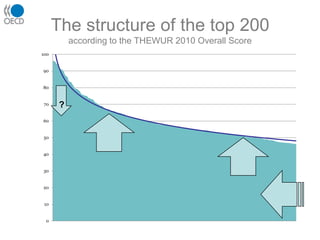

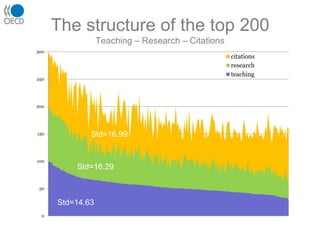

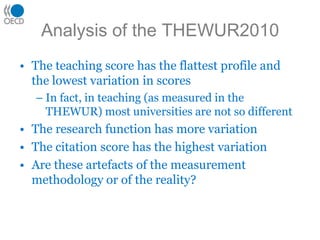

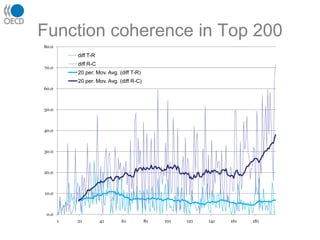

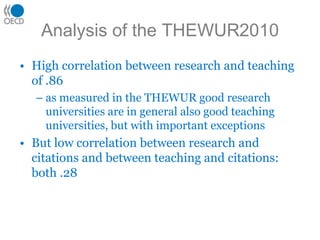

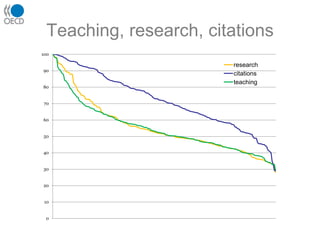

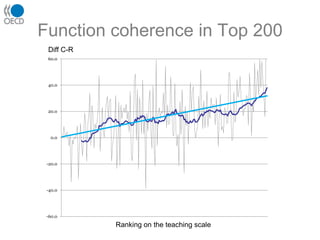

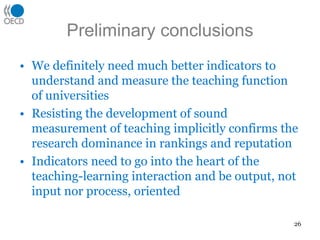

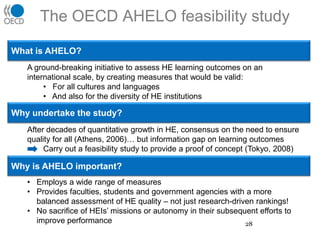

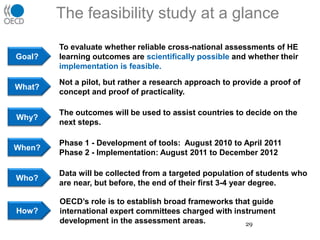

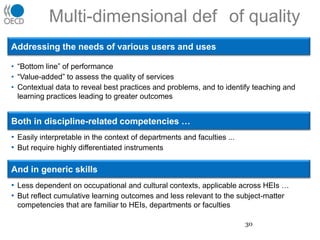

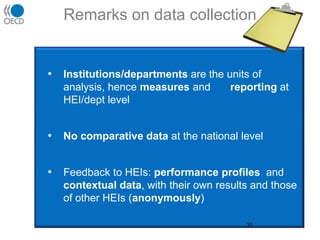

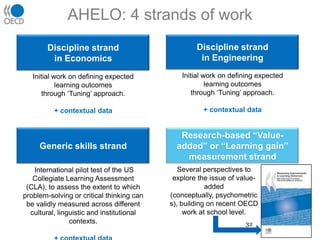

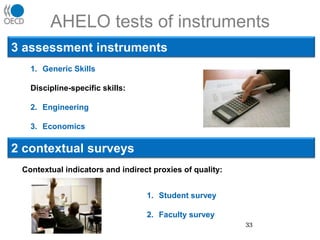

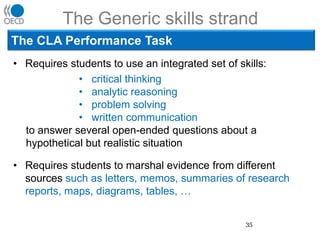

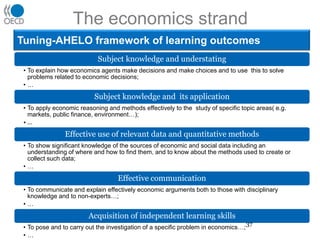

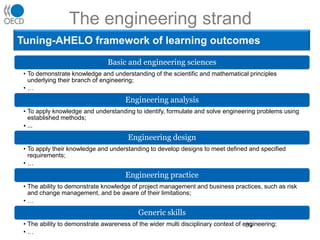

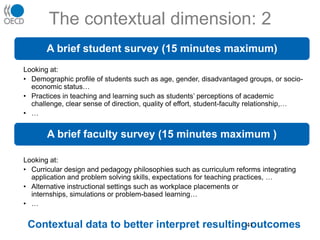

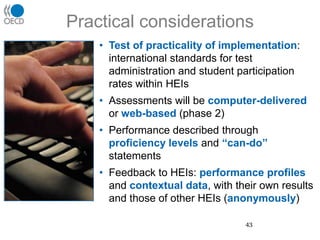

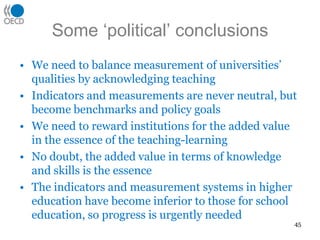

The document discusses approaches to assessing the teaching function of universities and the methodological challenges of measuring educational outcomes. It argues that better indicators are needed and examines how teaching quality relates to research quality, as well as how academic reputation influences university rankings. It also outlines AHELO (Assessment of Higher Education Learning Outcomes), an initiative to evaluate higher education learning outcomes internationally while keeping the assessments sound across diverse educational contexts.