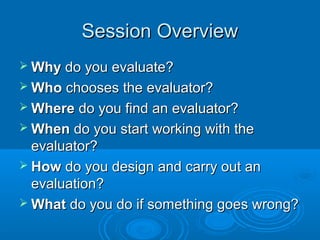

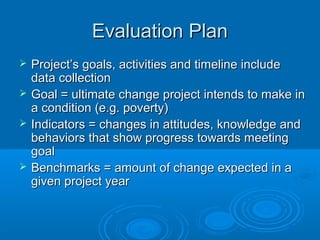

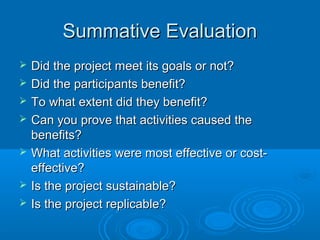

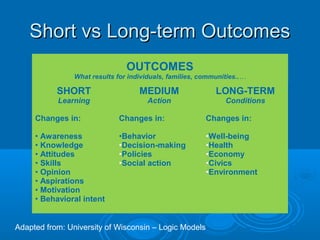

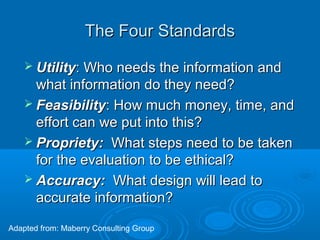

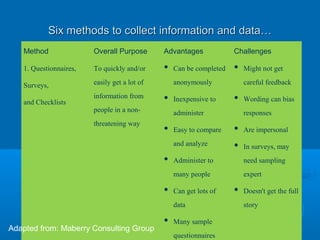

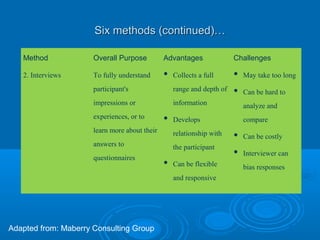

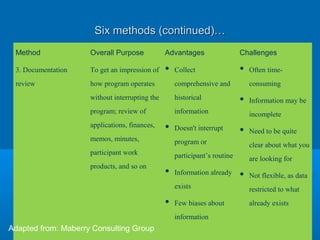

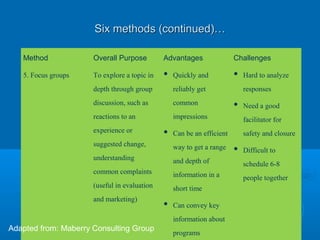

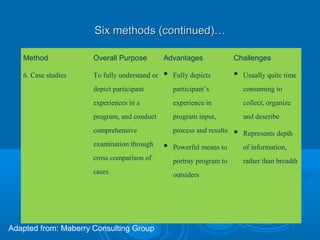

This document provides an overview of project evaluation. It discusses why evaluations are conducted (to provide feedback and to determine whether objectives were met), who is typically involved in the evaluation process (e.g., project directors and evaluators), and how to design and carry out an evaluation (e.g., by creating an evaluation plan with goals, indicators, and benchmarks). It also covers common evaluation methods such as interviews, surveys, documentation review, and observation. Overall, the document aims to demystify the evaluation process and offer guidance on the key questions of why, who, where, when, and how to approach project evaluation.