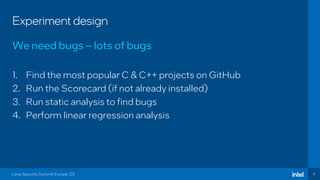

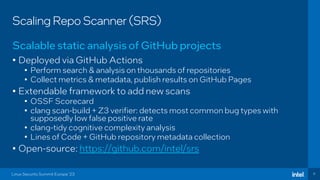

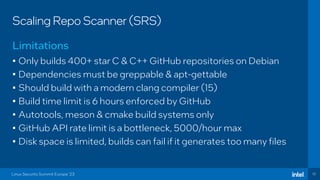

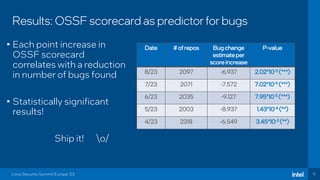

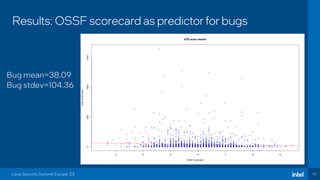

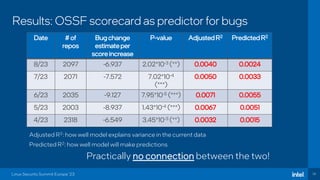

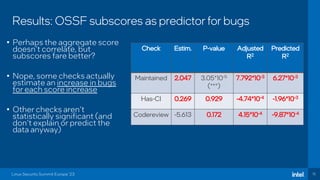

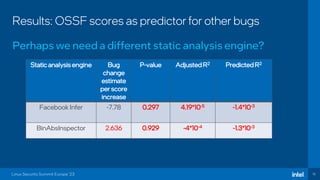

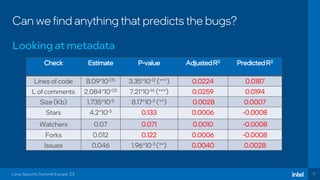

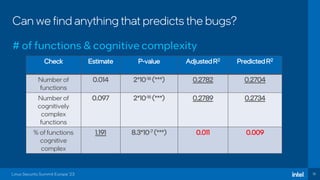

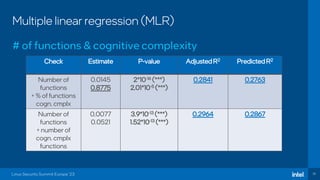

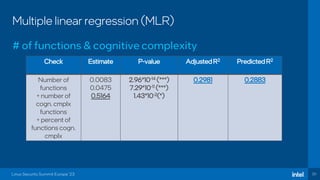

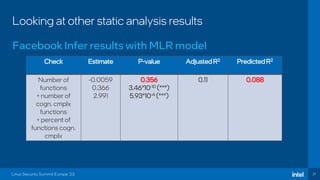

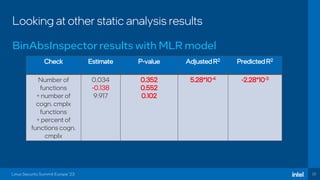

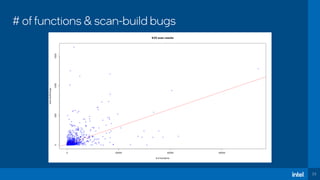

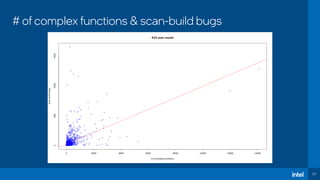

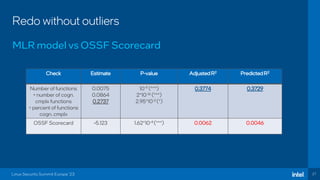

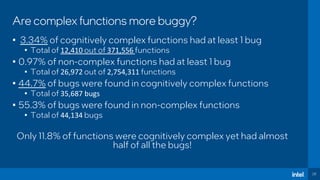

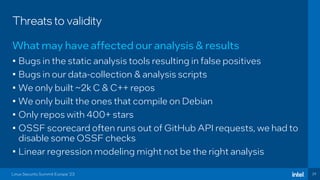

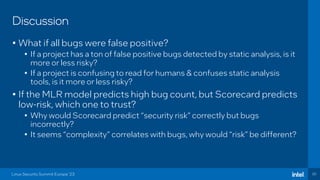

The document discusses the challenges of estimating security risk in open-source projects using the OpenSSF Scorecard and automated static analysis tools. It presents findings that suggest a correlation between higher Scorecard ratings and lower bug density, while noting potential limitations and biases in the data. It concludes that better, more scientific methods for evaluating open-source project risk are needed, and calls for community feedback and collaboration in this area.