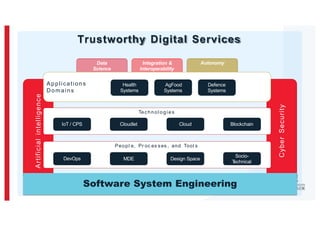

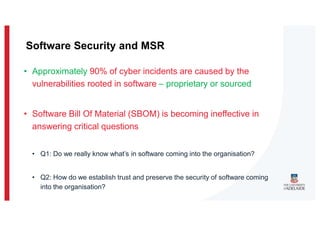

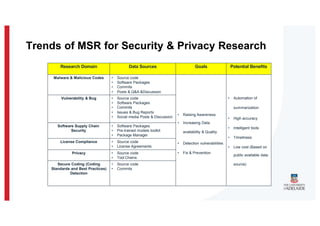

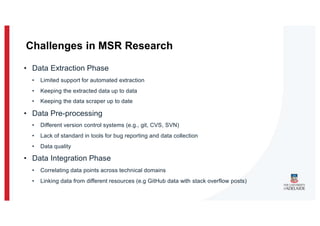

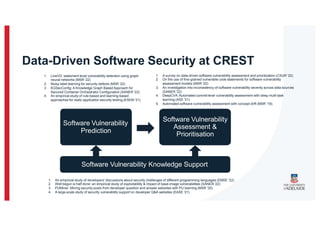

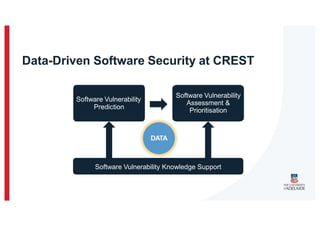

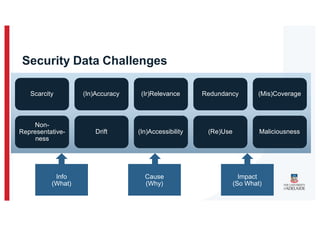

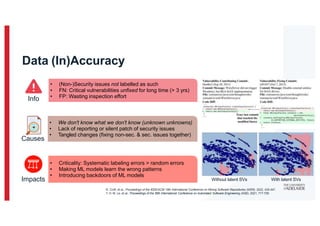

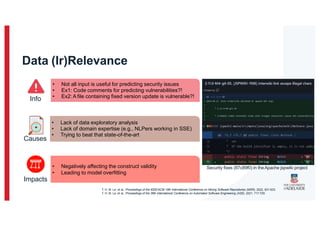

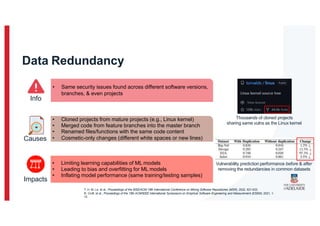

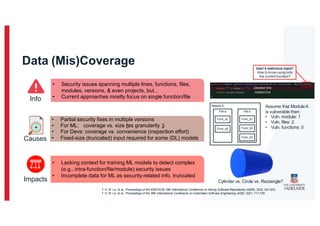

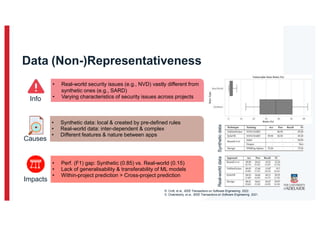

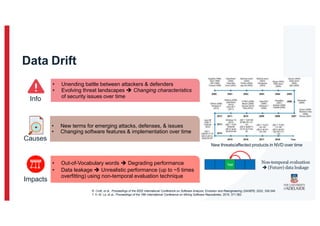

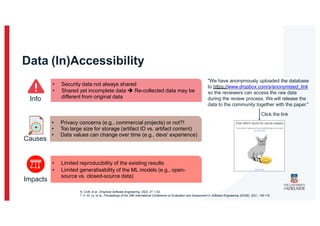

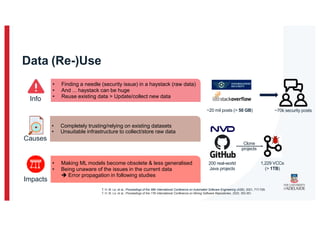

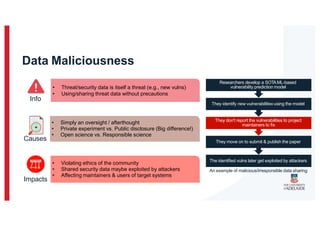

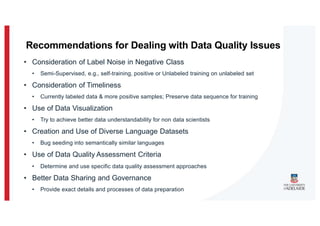

The document discusses the challenges and data quality issues that arise when mining software repositories (MSR) for security purposes. It highlights the prevalence of software vulnerabilities and the limitations of current approaches, such as the Software Bill of Materials (SBOM), in ensuring security. It also provides recommendations for addressing data-related challenges, such as improving data collection and sharing practices.