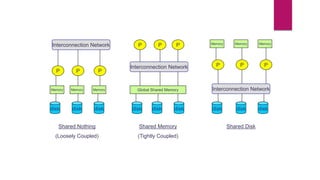

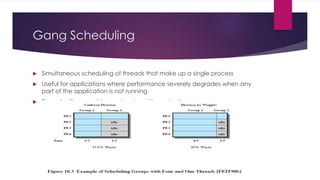

This document covers scheduling on tightly coupled multiprocessor systems. It distinguishes two kinds of multiprocessor system: loosely coupled systems, in which each processor has its own main memory and I/O, and tightly coupled systems, in which the processors share main memory. It also defines levels of granularity in parallel applications, ranging from independent parallelism to fine-grained parallelism. Multiprocessor scheduling raises three key issues: assigning processes to processors, deciding whether to multiprogram individual processors, and dispatching processes. Strategies such as load sharing, gang scheduling, and dedicated processor assignment address these issues.
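
To make the load sharing strategy concrete, here is a minimal sketch in Python. It assumes a single global FIFO ready queue, processes that run to completion without preemption, and burst times known in advance. The function name `load_sharing_schedule` and its parameters are hypothetical and chosen only for illustration; they do not come from the document.

```python
from collections import deque


def load_sharing_schedule(burst_times, num_processors):
    """Simulate load sharing with one global FIFO ready queue.

    burst_times: run time of each process, in queue order (illustrative input).
    num_processors: number of identical processors sharing the queue.
    Returns a list of (process_id, processor_id, start, finish) tuples.
    Assumption: no preemption; each process runs to completion.
    """
    ready = deque(enumerate(burst_times))  # the single shared ready queue
    free_at = [0] * num_processors         # time at which each processor goes idle
    schedule = []

    while ready:
        pid, burst = ready.popleft()
        # The processor that becomes idle first takes the next process.
        # Ties go to the lowest-numbered processor.
        cpu = min(range(num_processors), key=lambda i: free_at[i])
        start = free_at[cpu]
        finish = start + burst
        free_at[cpu] = finish
        schedule.append((pid, cpu, start, finish))

    return schedule


if __name__ == "__main__":
    for entry in load_sharing_schedule([3, 1, 2, 4], num_processors=2):
        print(entry)
```

No process is bound to a particular processor here: whichever processor is idle first pulls the next entry from the shared queue, which is the defining property of load sharing. Gang scheduling and dedicated processor assignment would instead reserve a set of processors for the related threads of one application.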