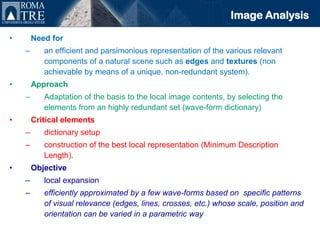

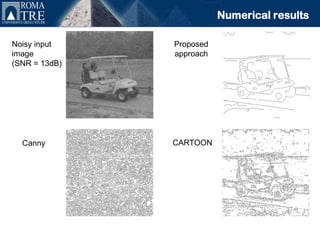

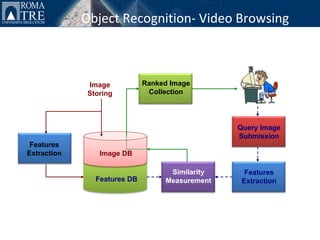

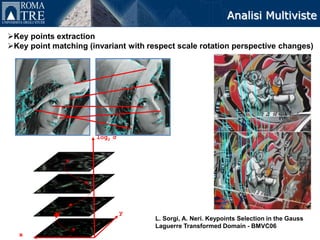

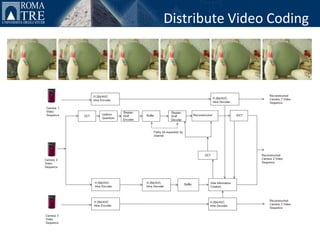

The document discusses research on multimedia information processing for smart environments, covering feature extraction, object recognition, multiview distributed video coding, and new imaging techniques. The overall goal is to develop technologies that integrate sensors, distributed computing systems, and communications into environments that adapt to changing conditions, respond to their users, and improve quality of life.

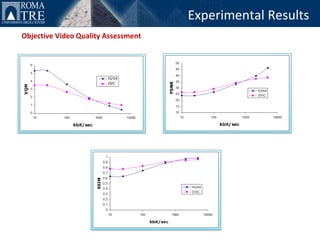

![Experimental results: "Breakdancer" multiview sequence](https://image.slidesharecdn.com/alessandroneri-130410053115-phpapp02/85/Elettronica-Multimedia-Information-Processing-in-Smart-Environments-by-Alessandro-Neri-18-320.jpg)

Experimental results on the "Breakdancer" multiview sequence: PSNR (dB) versus bit rate (kbit/s) for MDVC_Zernike, H.264/AVC, and encoder-driven fusion [1]. Source: Veronica Palma, PhD Thesis.

[1] M. Ouaret, F. Dufaux, and T. Ebrahimi, "Multiview distributed video coding with encoder driven fusion," in Proceedings of EUSIPCO, 2007.

[2] M. Ouaret, F. Dufaux, and T. Ebrahimi, "Recent advances in multi-view distributed video coding," in SPIE Mobile Multimedia/Image Processing for Military and Security Applications, April 2007.
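The figure reports reconstruction quality as PSNR in dB. The following is a minimal sketch of how that metric is commonly computed. It assumes 8-bit samples compared over flattened frames. The thesis does not say whether PSNR was measured on luma only or how it was averaged across frames and views, and the function name `psnr` is illustrative.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[int], decoded: Sequence[int], max_value: int = 255) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized sample sequences.

    Assumes samples are integers in [0, max_value] (255 for 8-bit video).
    """
    if not reference or len(reference) != len(decoded):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between original and reconstructed samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, decoded)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals: PSNR is unbounded
    # PSNR = 10 * log10(MAX^2 / MSE)
    return 10.0 * math.log10(max_value ** 2 / mse)
```

For example, `psnr([100, 100, 100, 100], [101, 99, 101, 99])` has an MSE of 1 and yields about 48.13 dB. Each point on a rate-distortion curve like the one above pairs such a quality value with the bit rate spent to achieve it.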