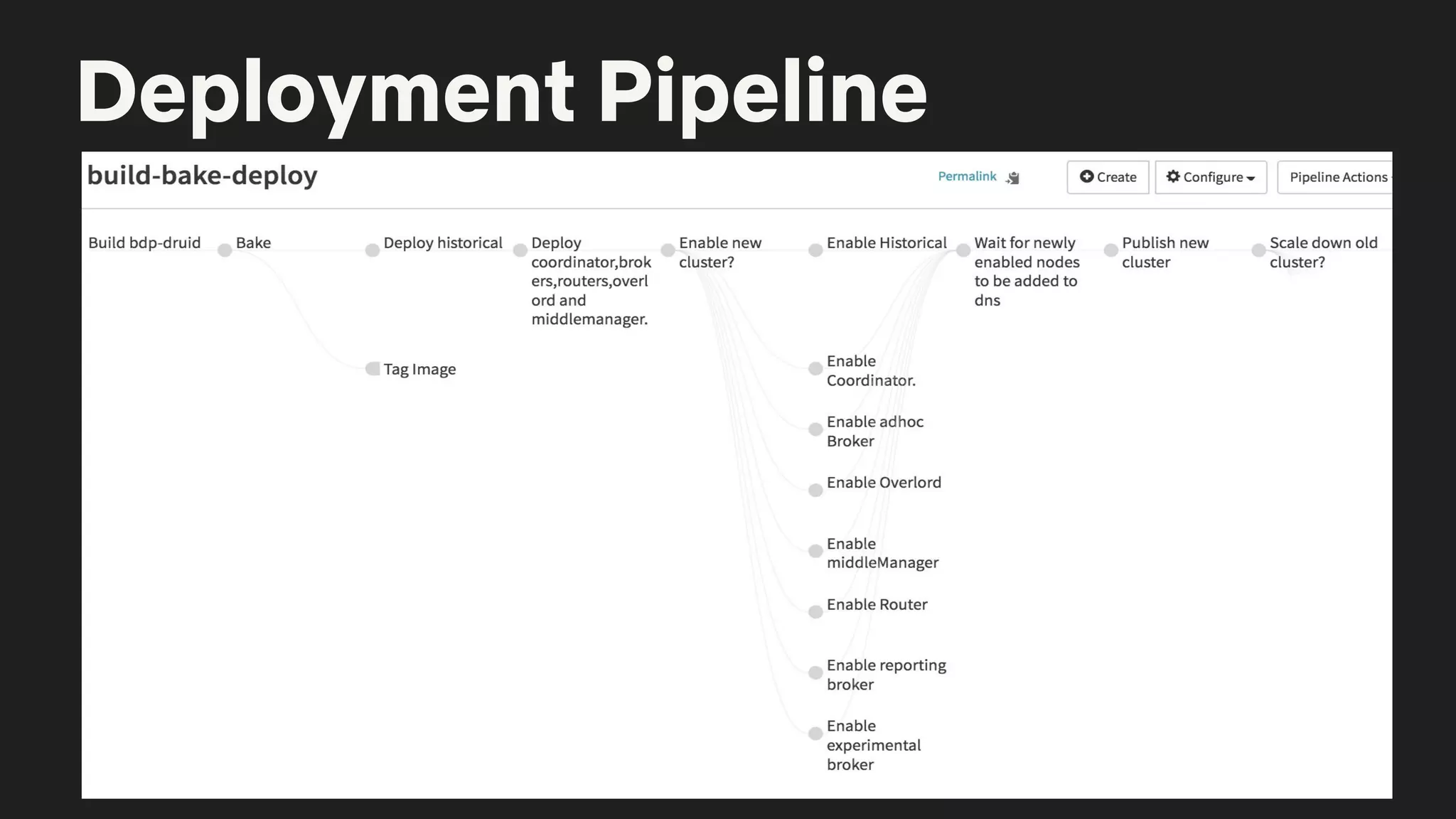

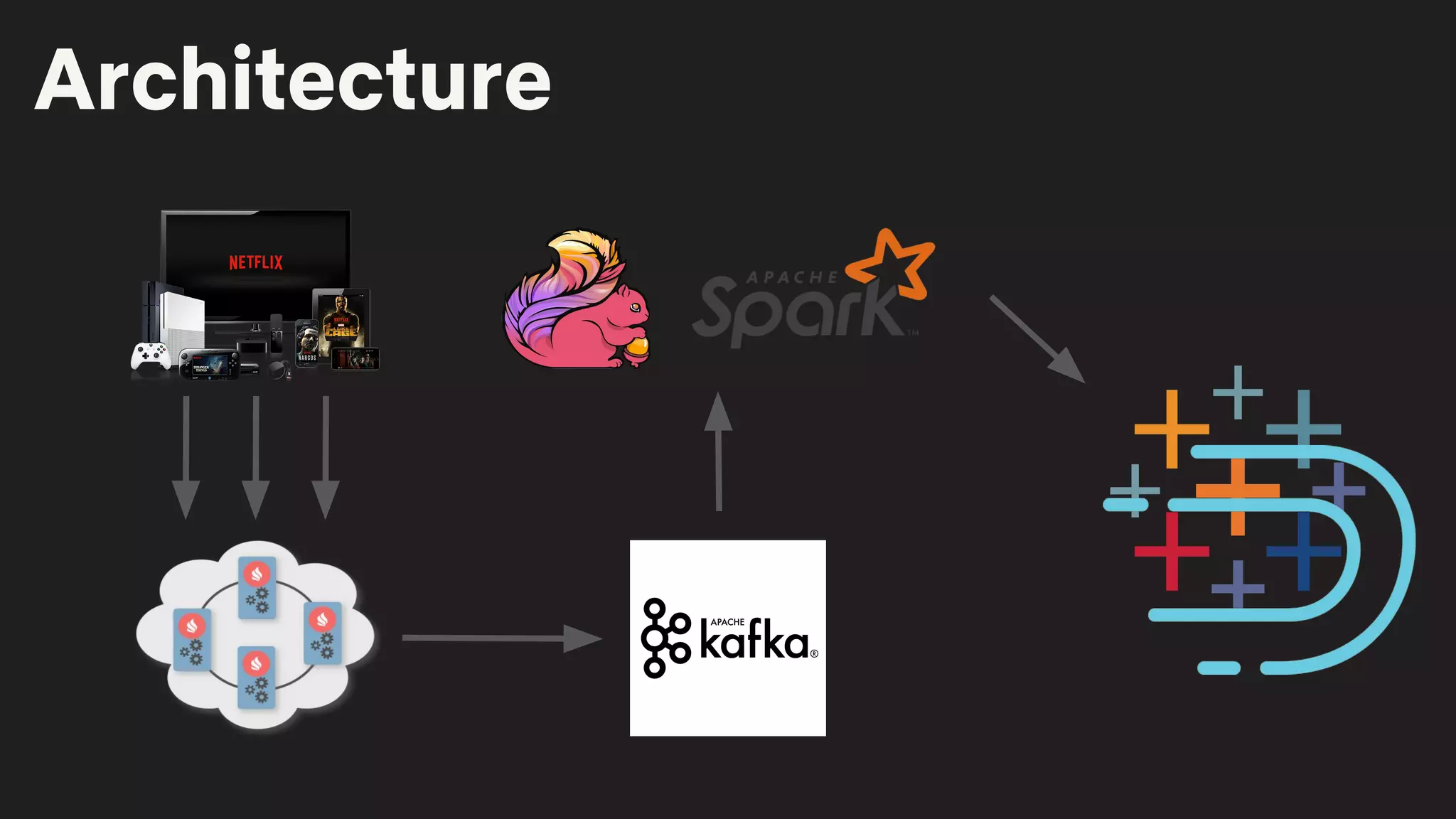

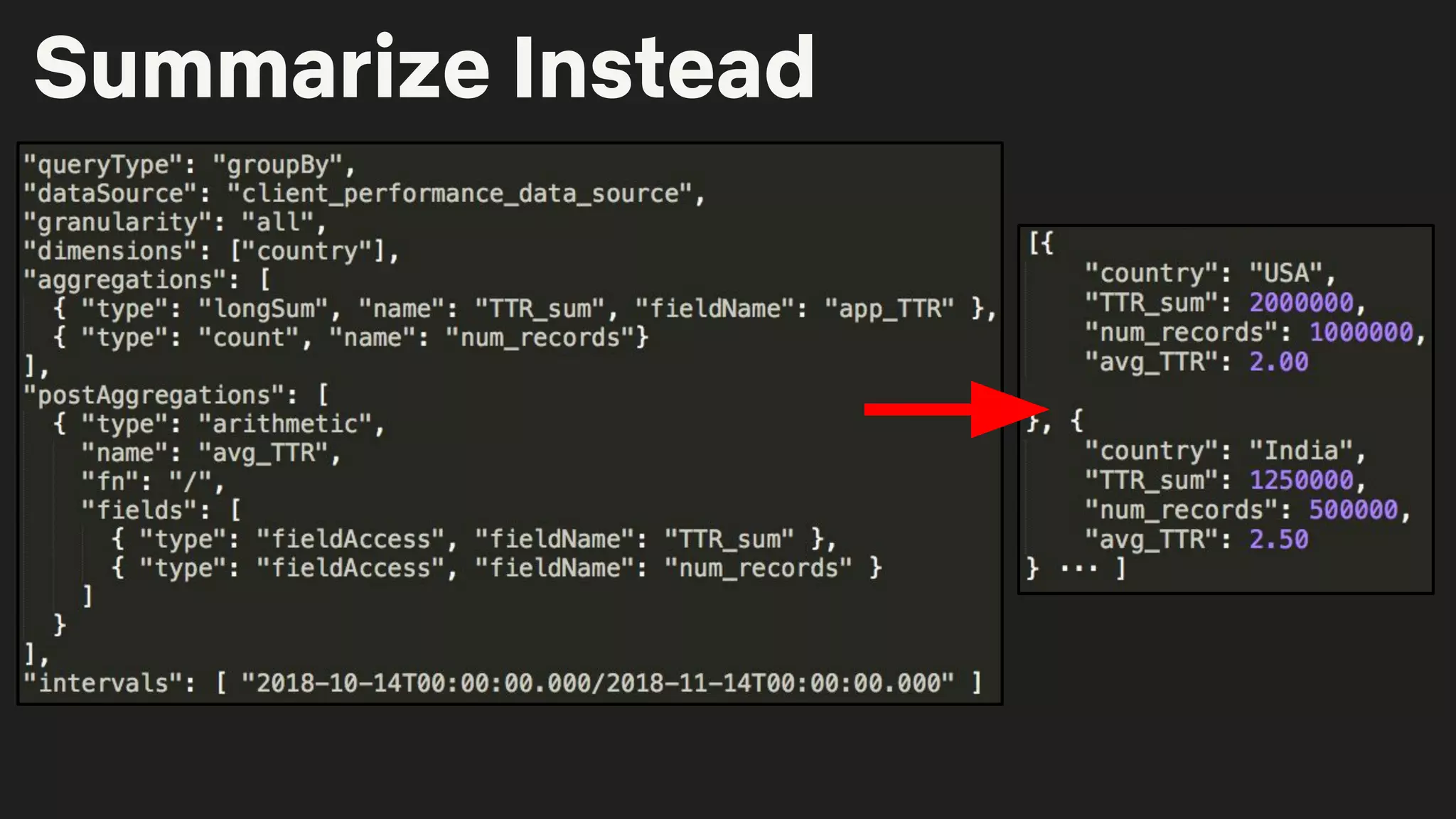

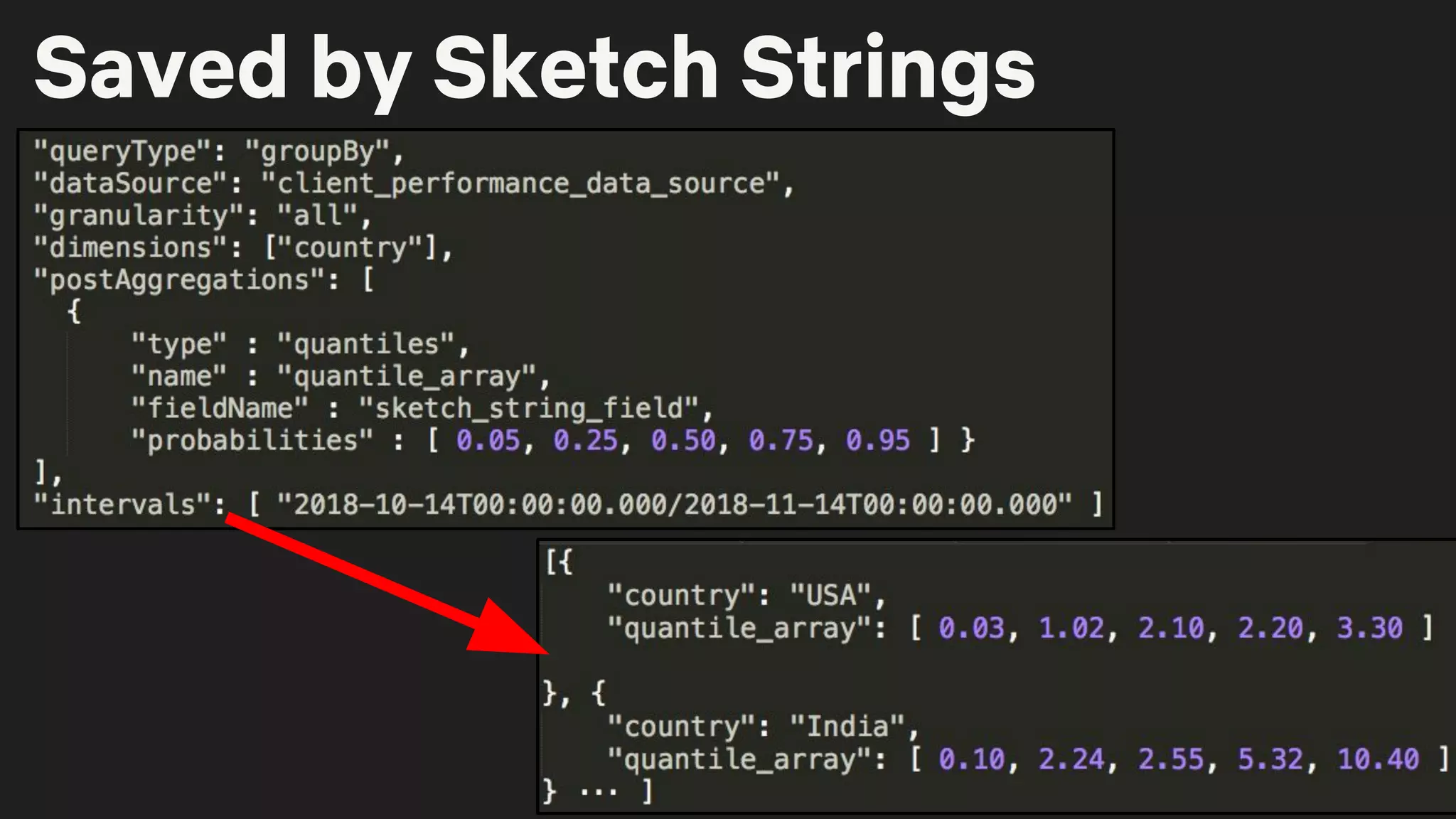

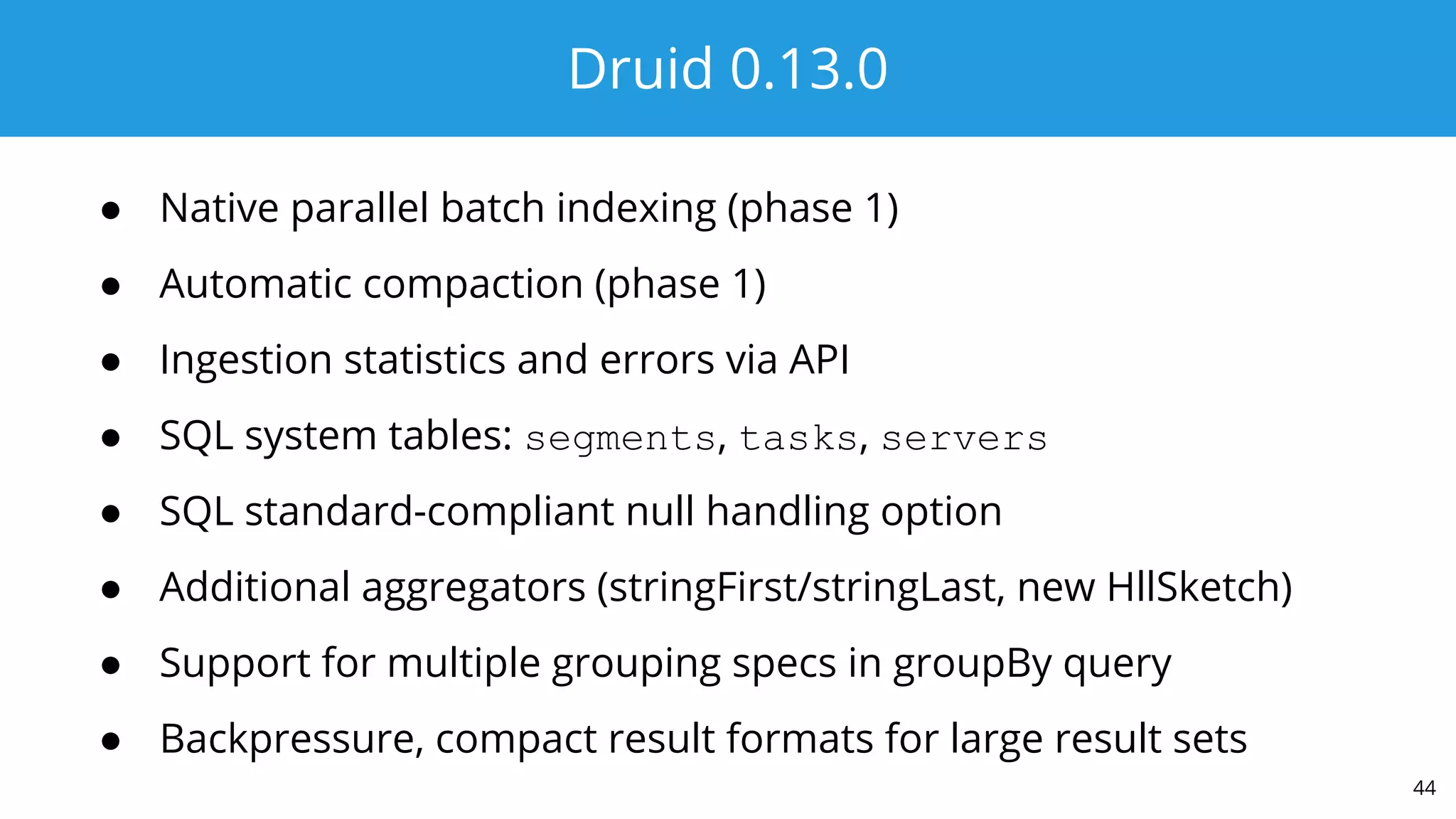

This document summarizes a Druid meetup presentation on Druid deployment at Netflix. It covers data ingestion, deployment strategies, and specific use cases such as interactive dashboards and real-time processing. It also describes Netflix's capacity planning and how the deployment handles 160 billion daily customer interactions while keeping client performance metrics optimal. The Druid roadmap portion lists upcoming features and improvements, with emphasis on community contributions and ongoing development goals.