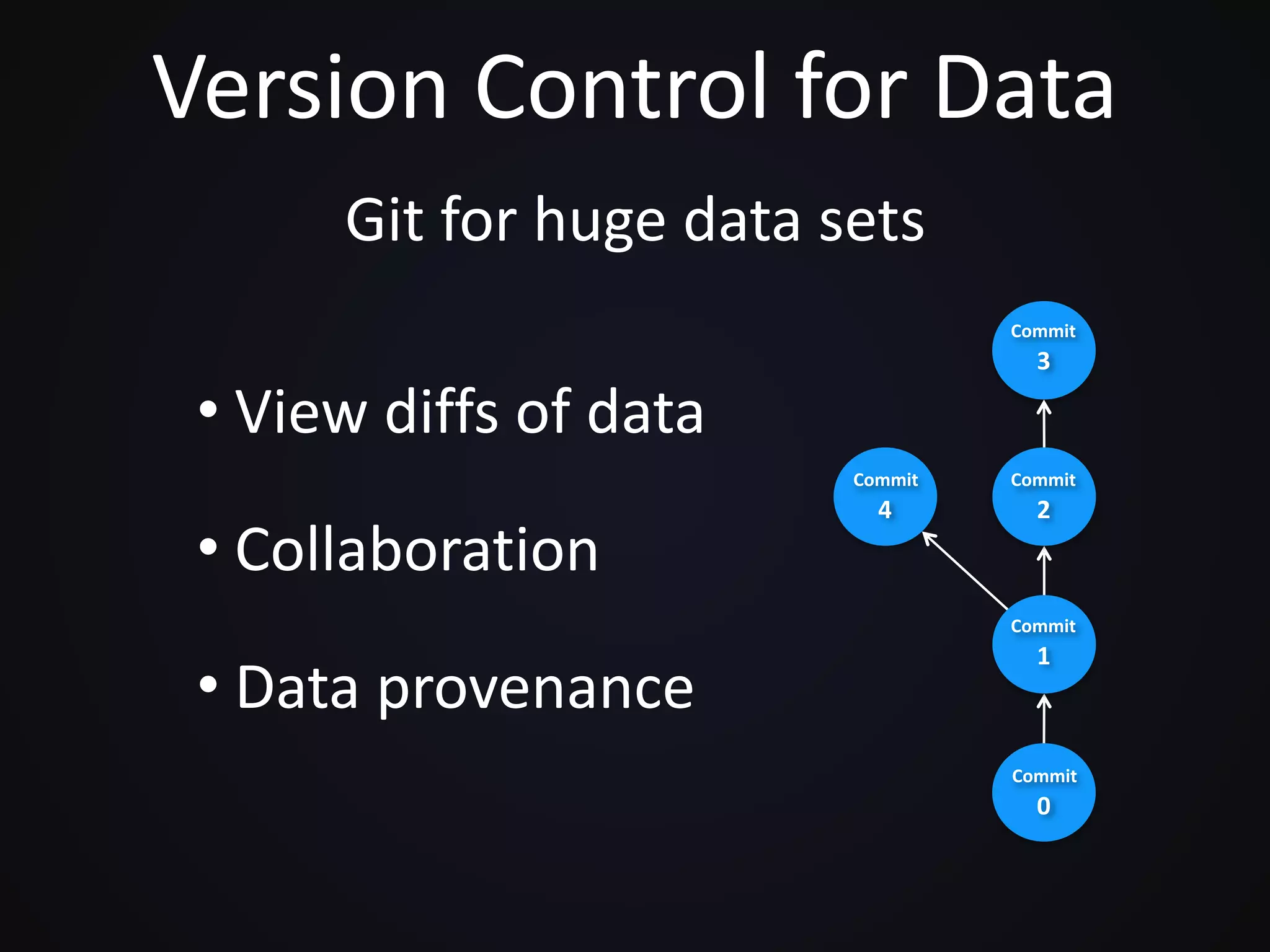

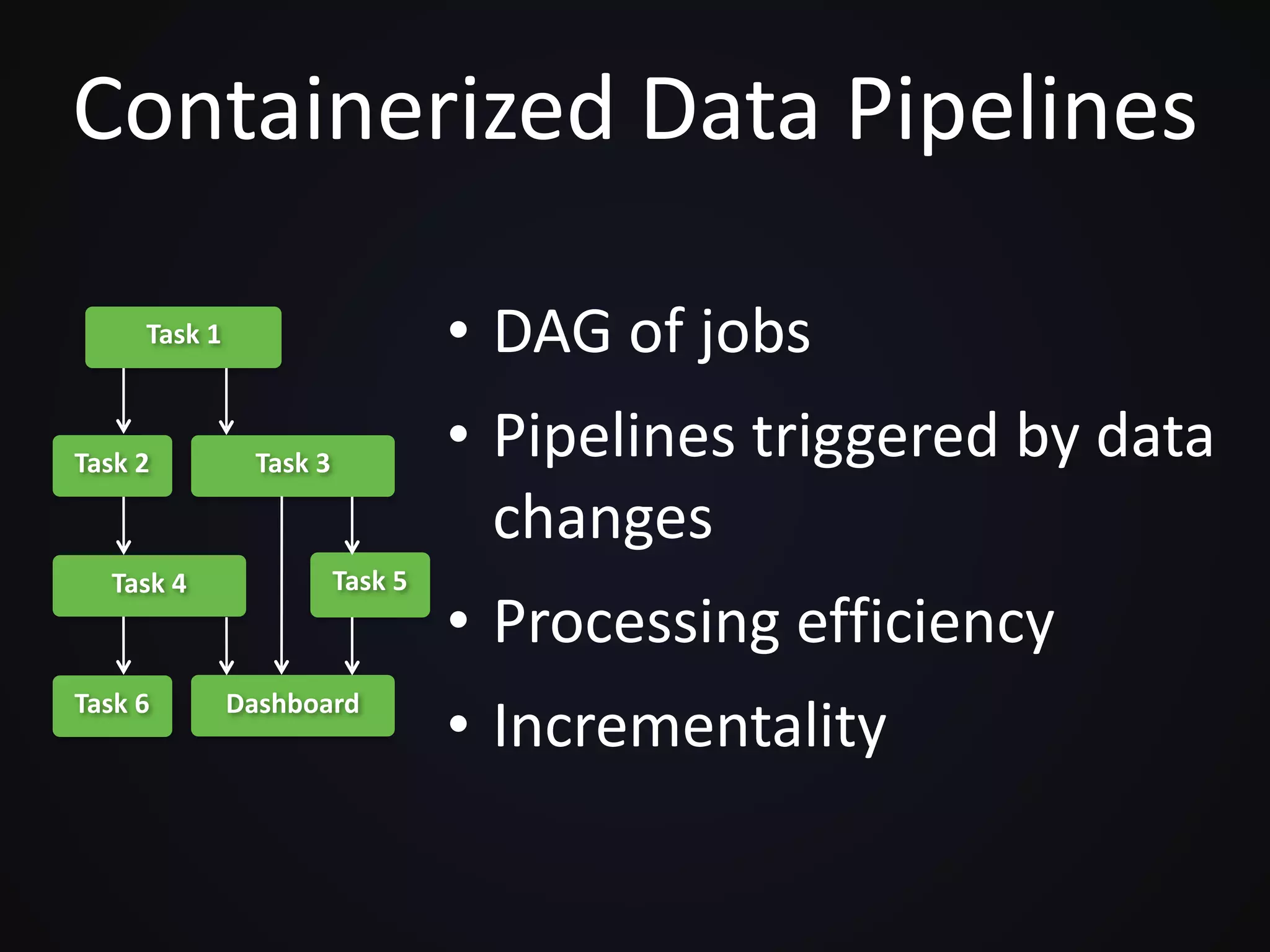

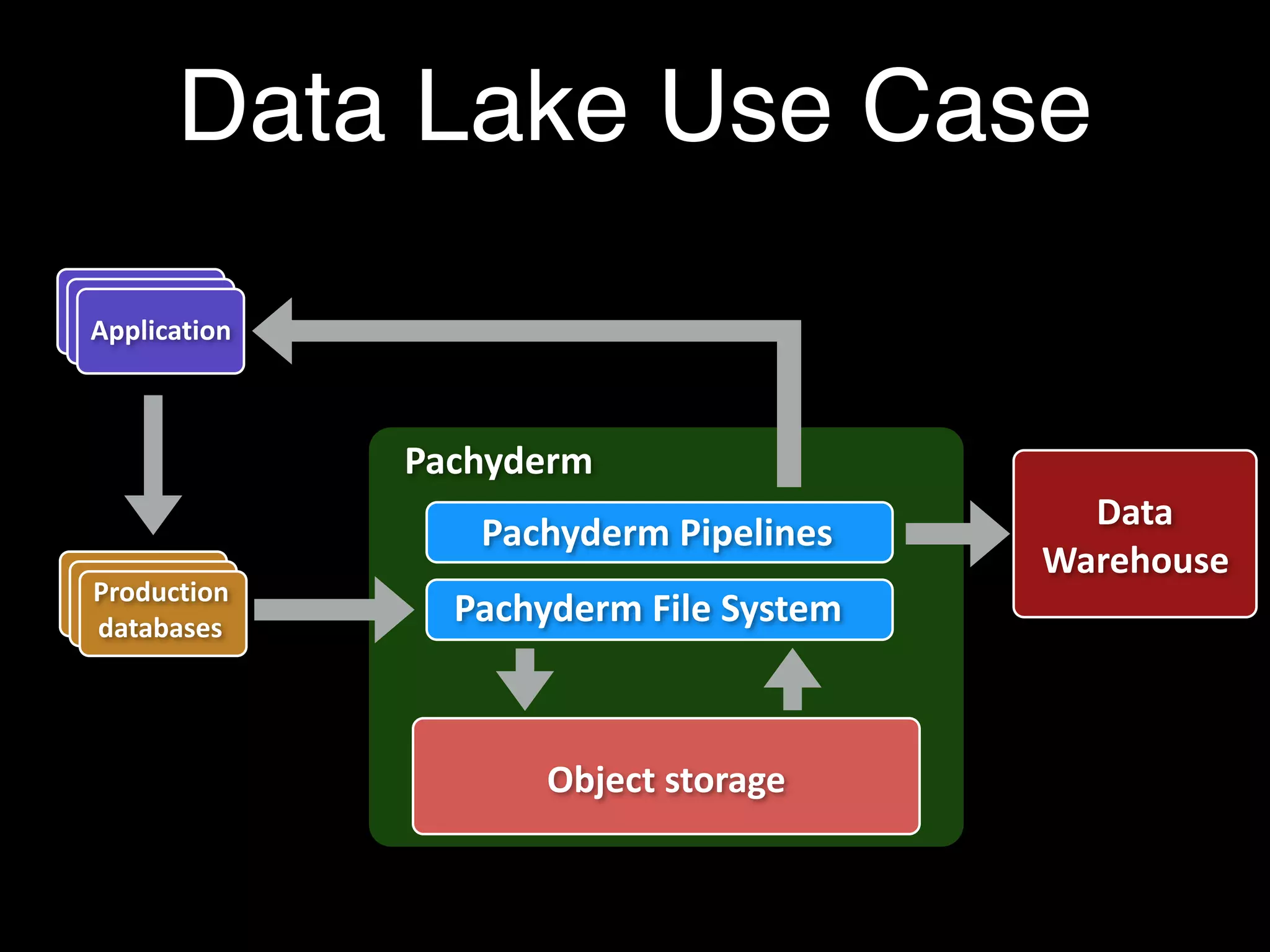

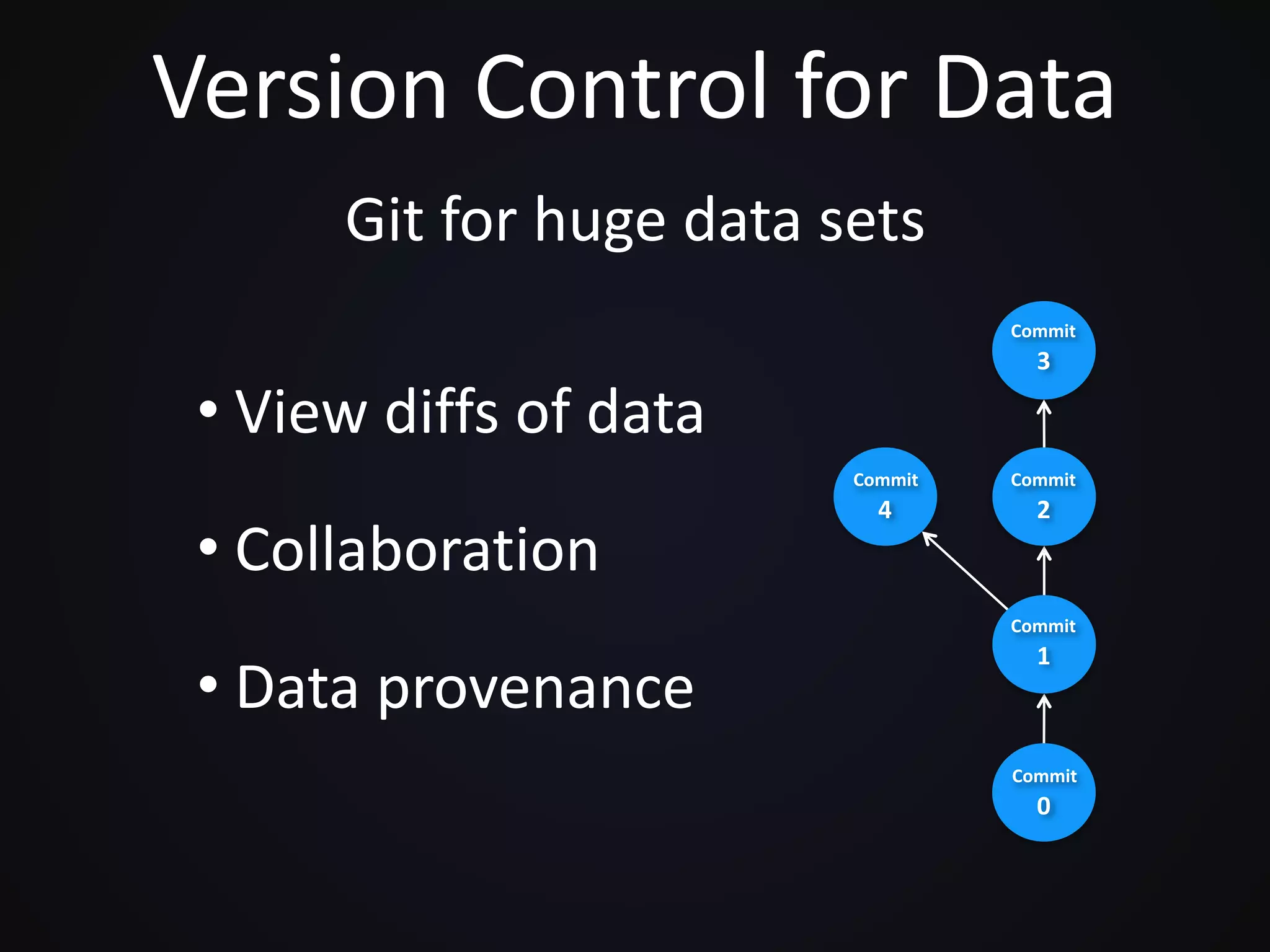

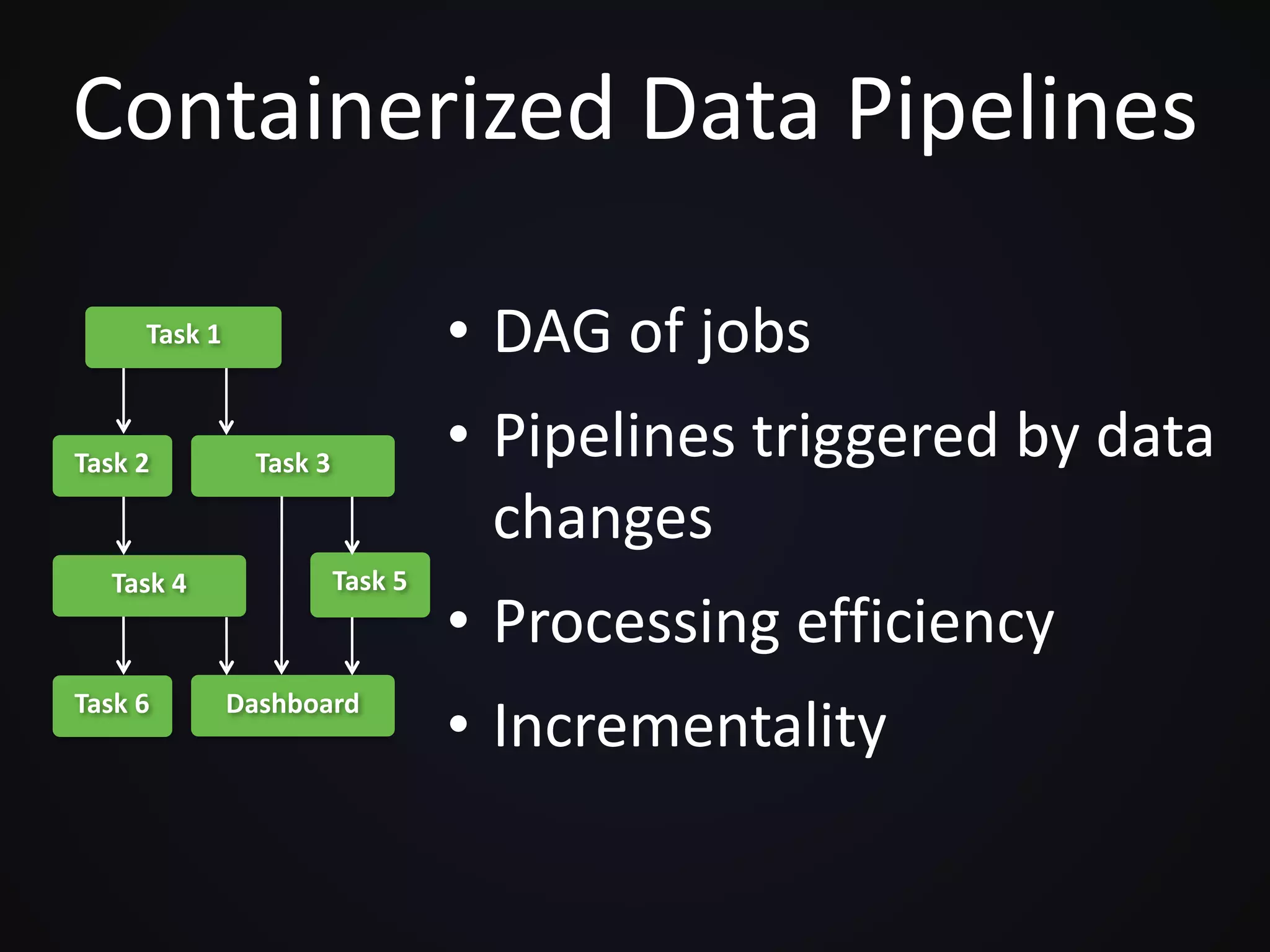

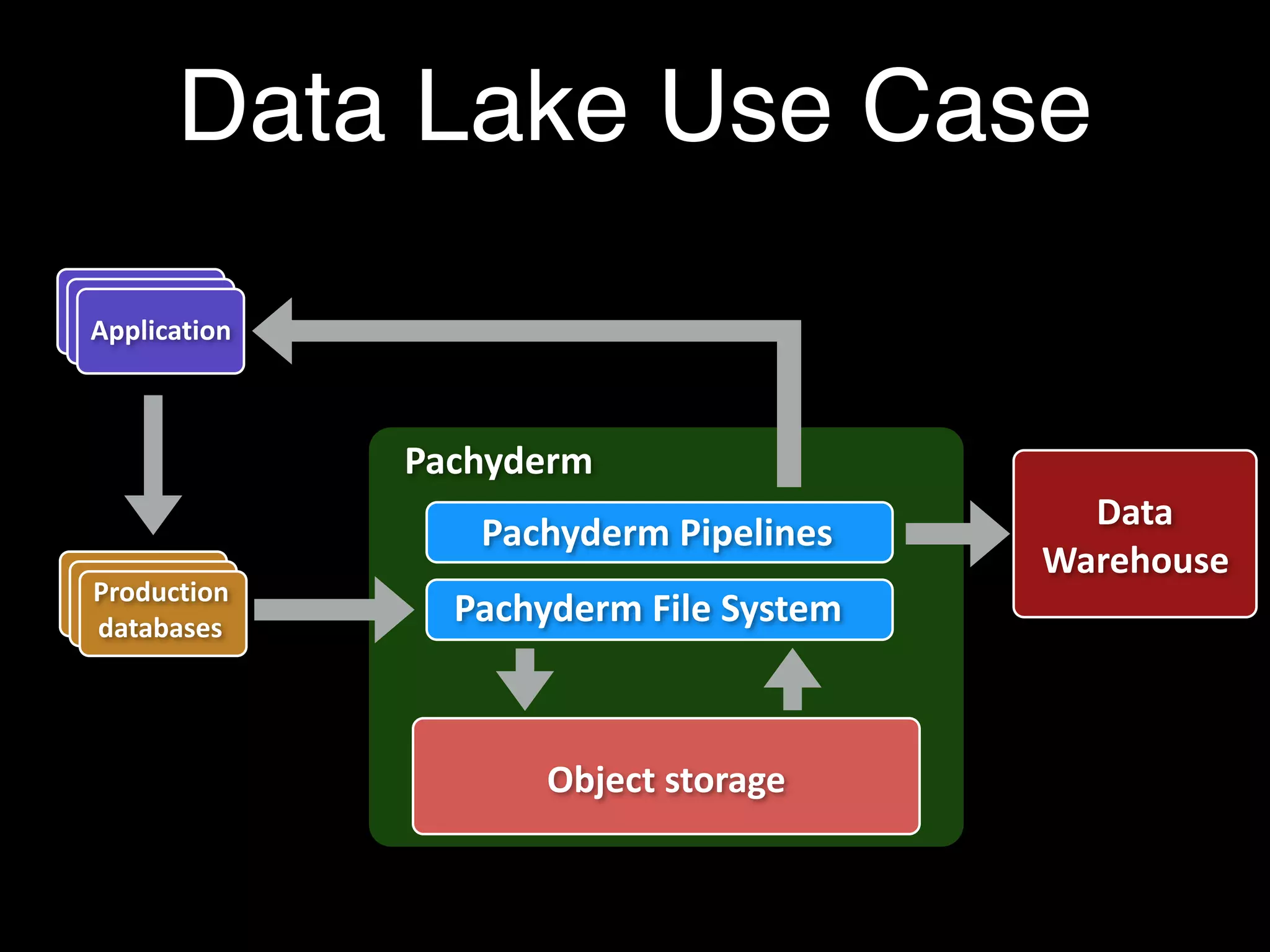

Pachyderm is a platform that uses containers to manage big data applications. Its features include version control for data, batch and streaming data processing, and integration with object storage services. The presentation discusses how containers and Kubernetes make data processing more efficient and collaboration easier, outlines how Pachyderm's containerized data pipelines work, and closes with a demo of running Pachyderm on Kubernetes.
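To make "containerized data pipeline" concrete, the sketch below builds a minimal pipeline specification as a Python dict and prints it as JSON. It assumes the field layout used in recent Pachyderm documentation (`pipeline`, `transform`, `input.pfs`). Releases from the same period as this presentation may use a different input layout. The pipeline name `edges`, the input repo `images`, the image `pachyderm/opencv`, and the script `/edges.py` are illustrative placeholders, not values taken from the slides. In this model, the container runs `cmd`, reads its input from `/pfs/images`, and writes results to `/pfs/out`. The glob `/*` makes each top-level file a separate datum, so Pachyderm can process the files in parallel.

```python
import json


def make_pipeline_spec(name, image, cmd, input_repo, glob="/*"):
    """Build a minimal Pachyderm-style pipeline spec (assumed field layout)."""
    return {
        "pipeline": {"name": name},
        # The container image and the command Pachyderm runs inside it.
        "transform": {"image": image, "cmd": cmd},
        # Versioned input repo; the glob controls how input is split into datums.
        "input": {"pfs": {"repo": input_repo, "glob": glob}},
    }


# Hypothetical values, for illustration only.
spec = make_pipeline_spec(
    name="edges",
    image="pachyderm/opencv",
    cmd=["python3", "/edges.py"],
    input_repo="images",
)

if __name__ == "__main__":
    print(json.dumps(spec, indent=2))
```

Save the printed JSON to a file such as `edges.json`. Recent `pachctl` releases can then submit it to a cluster with `pachctl create pipeline -f edges.json`. Because the spec is plain data, you can keep it under version control alongside the code it runs.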