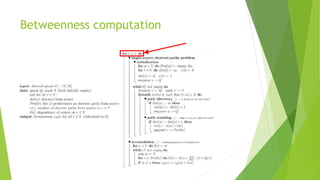

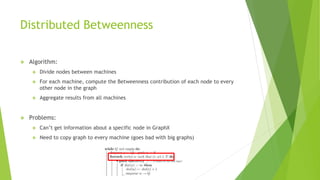

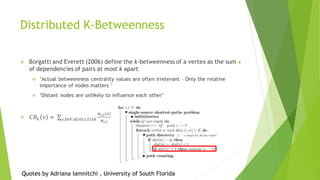

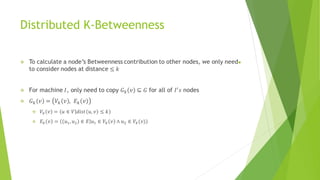

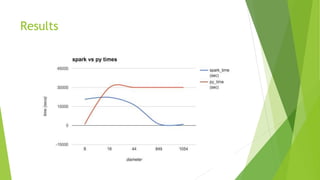

The document discusses the challenges of computing k-betweenness centrality for complex networks in a distributed setting, and approaches to meeting them, using Apache Spark and its GraphX graph-processing library. It highlights why betweenness is difficult to compute on large graphs, in particular because of the cost of accessing information held at other nodes and the limits imposed by graph size. The key results indicate that the implementation performs well on large-diameter graphs but struggles on small-diameter graphs, and that it requires careful tuning.
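The summary does not state which definition of k-betweenness or which algorithm the Spark/GraphX implementation uses. As a small single-machine reference point, the sketch below assumes the bounded-distance definition (only shortest paths of length at most `k` contribute) and adapts Brandes' algorithm by stopping each breadth-first search at depth `k`. The function name `k_betweenness` and the adjacency-dict input format are illustrative assumptions, not part of the original work.

```python
from collections import deque


def k_betweenness(adj, k):
    """Bounded-distance betweenness on an unweighted graph (assumed definition).

    adj: dict mapping each node to a list of its neighbours. For an undirected
    graph, list every edge in both directions. Scores count ordered (s, t) pairs.
    """
    bc = {v: 0.0 for v in adj}
    for s in adj:
        stack = []                      # nodes in non-decreasing distance from s
        preds = {v: [] for v in adj}    # shortest-path predecessors
        sigma = dict.fromkeys(adj, 0)   # number of shortest s-v paths
        dist = dict.fromkeys(adj, -1)   # -1 means "not reached"
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            if dist[v] == k:
                continue                # truncate: never expand beyond depth k
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Back-propagate dependencies, farthest nodes first.
        delta = dict.fromkeys(adj, 0.0)
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    return bc


# Path graph 0-1-2-3-4 (diameter 4).
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
print(k_betweenness(path, 2))
print(k_betweenness(path, 4))
```

With `k` at least the graph's diameter, every shortest path is counted and the result matches ordinary (ordered-pair) betweenness; for an undirected graph, halve the scores to count each unordered pair once. Because each source's search covers only its `k`-hop neighbourhood, the per-source work depends on how many nodes lie within `k` hops.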