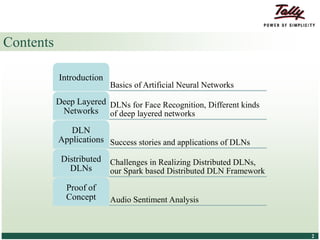

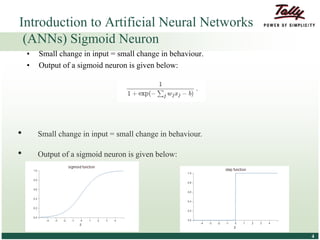

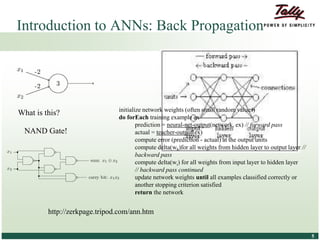

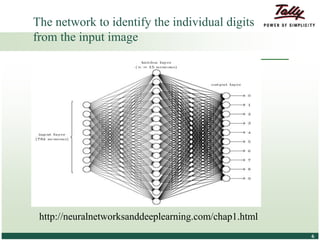

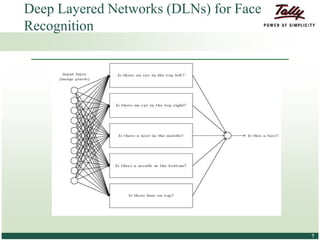

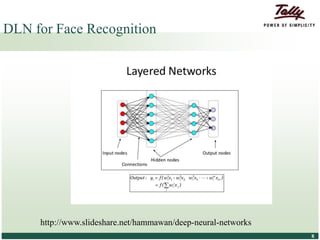

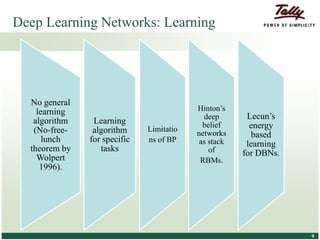

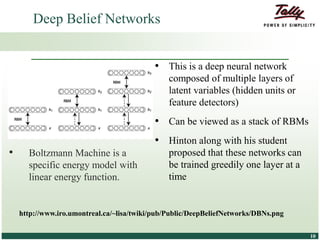

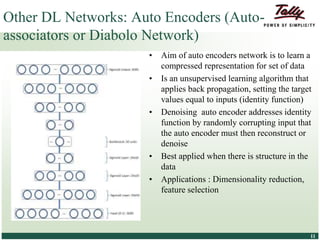

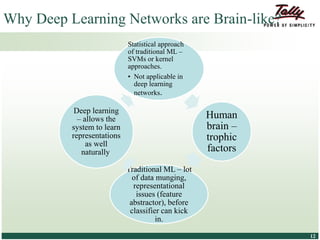

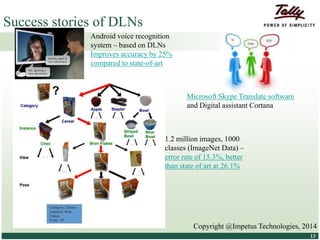

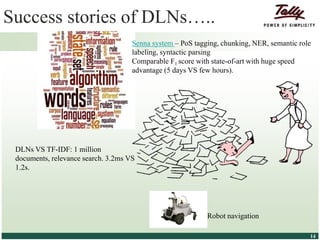

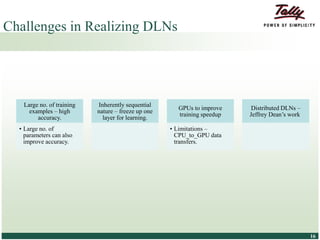

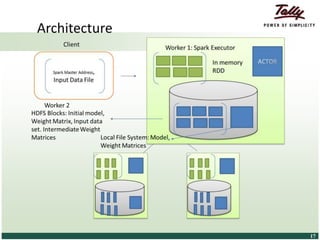

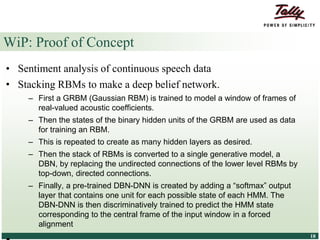

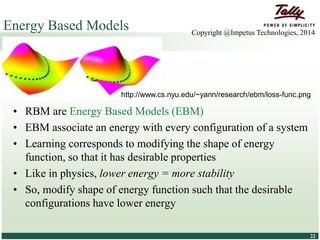

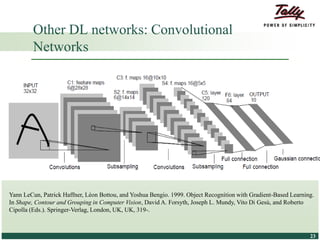

The document discusses the principles and applications of distributed deep learning networks (DLNs) in fields such as face recognition, speech analysis, and natural language processing. It describes artificial neural network (ANN) architectures, including deep belief networks and autoencoders, along with their success stories and challenges. It also explores how DLNs could enhance business data analytics within Tally solutions, and it points to ongoing advances in distributed deep learning frameworks.