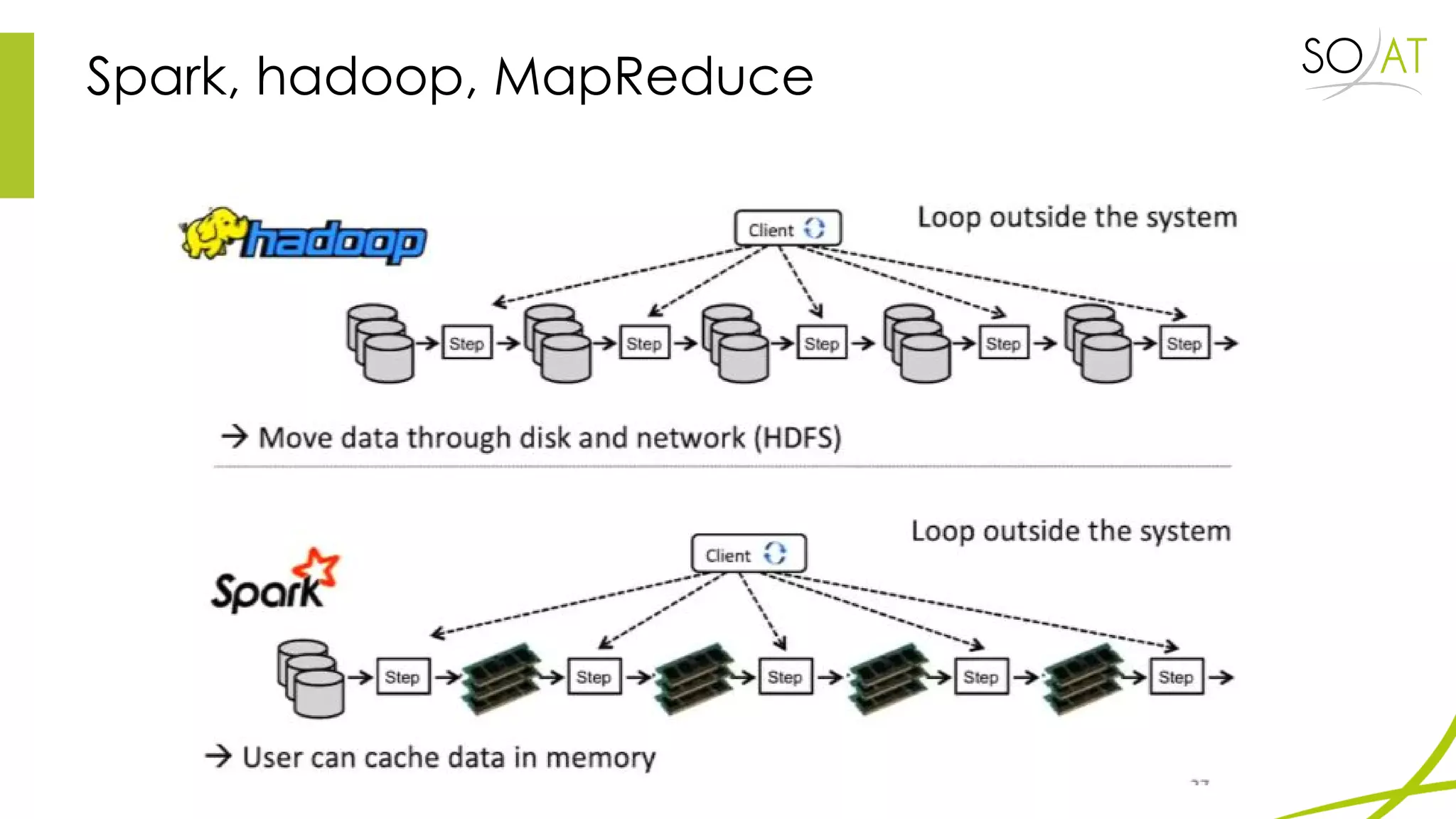

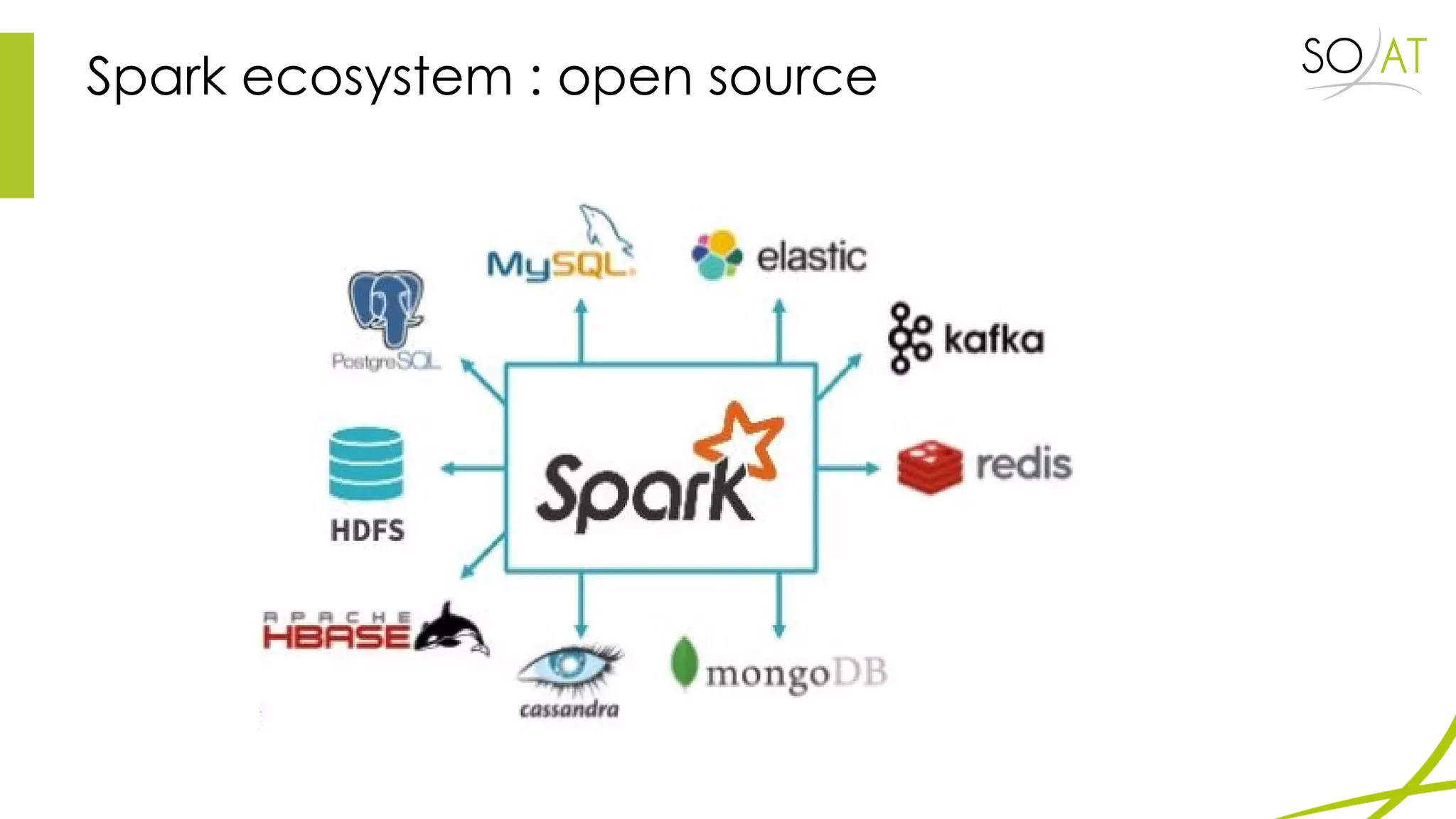

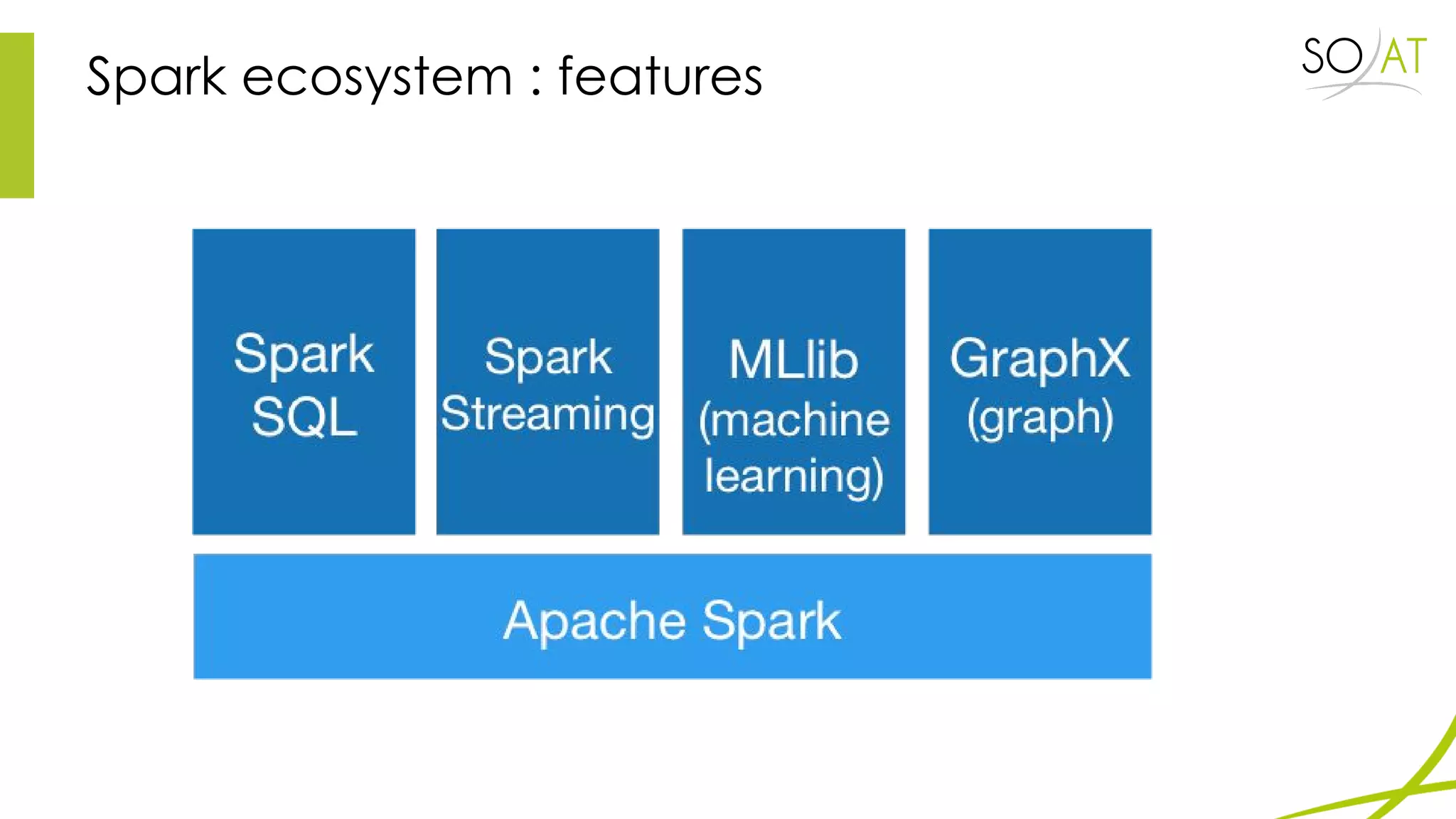

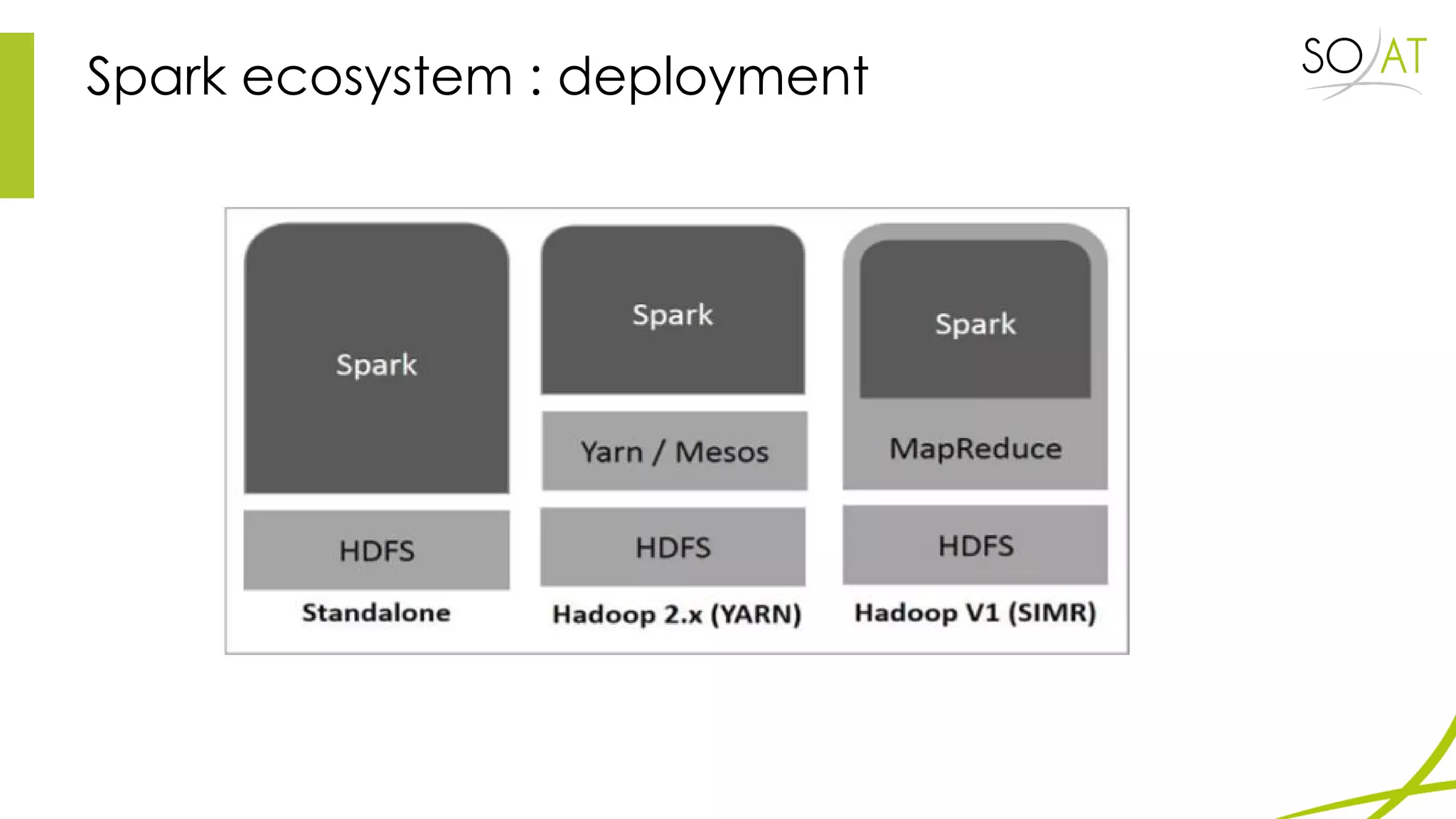

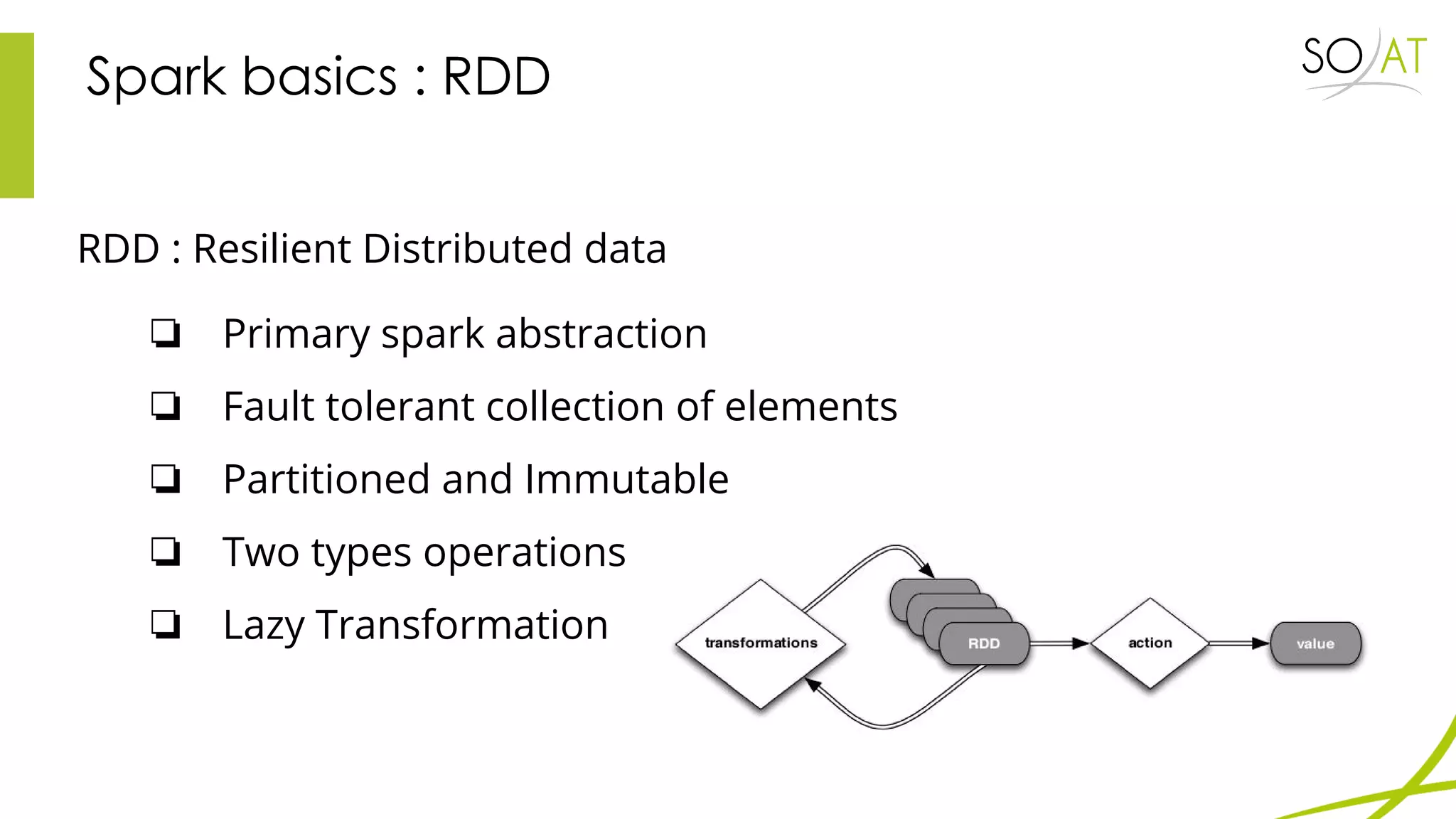

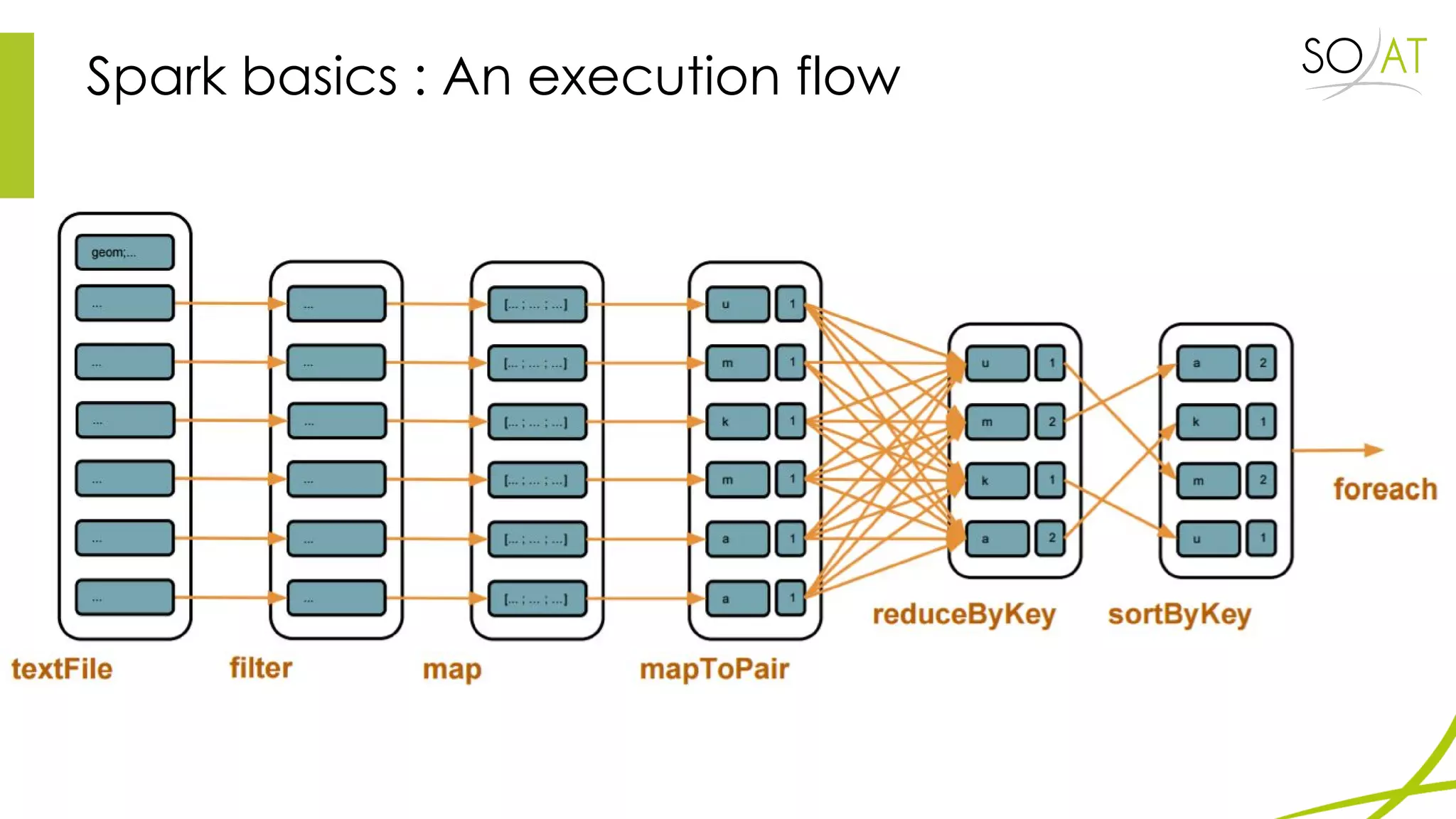

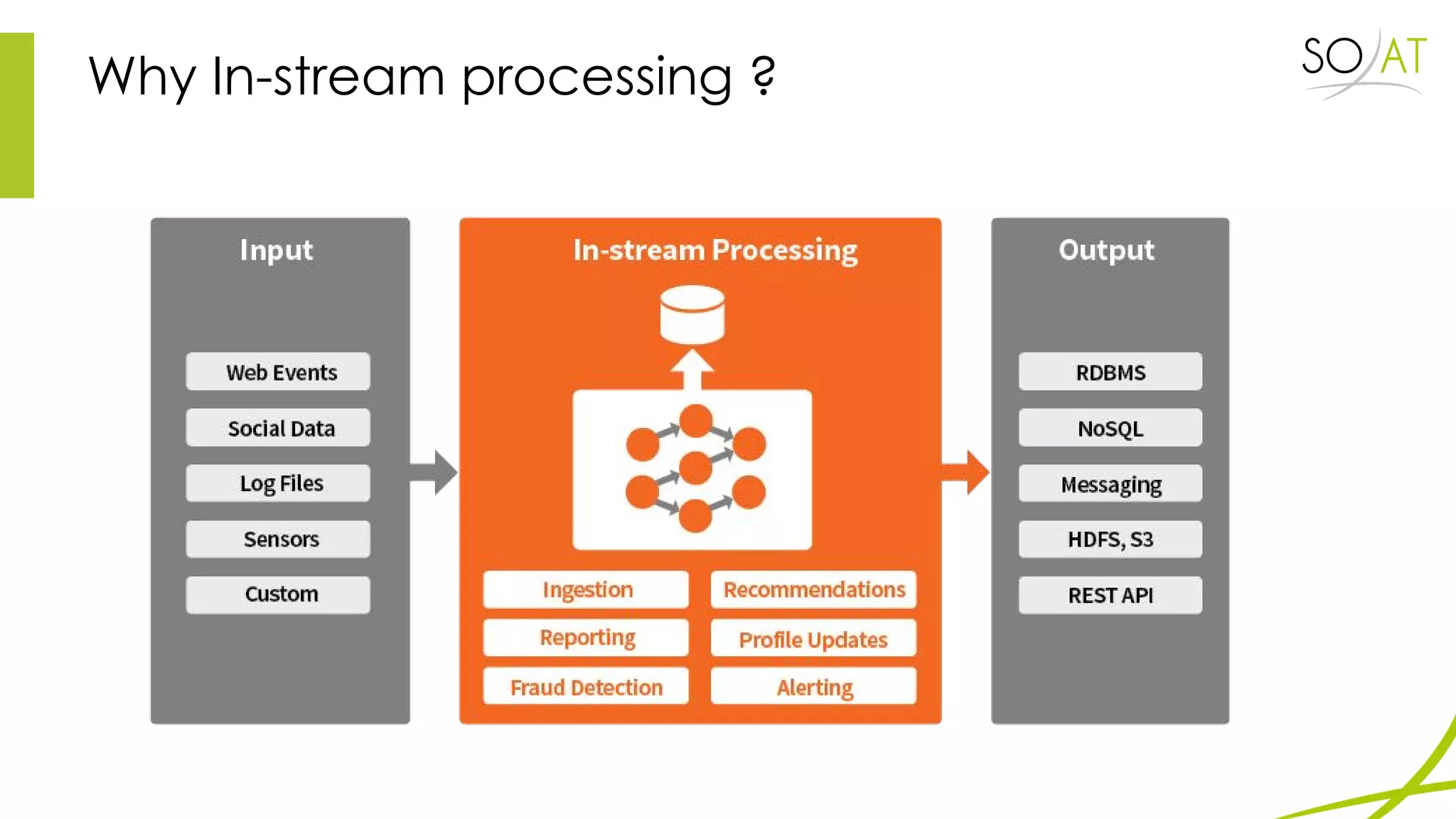

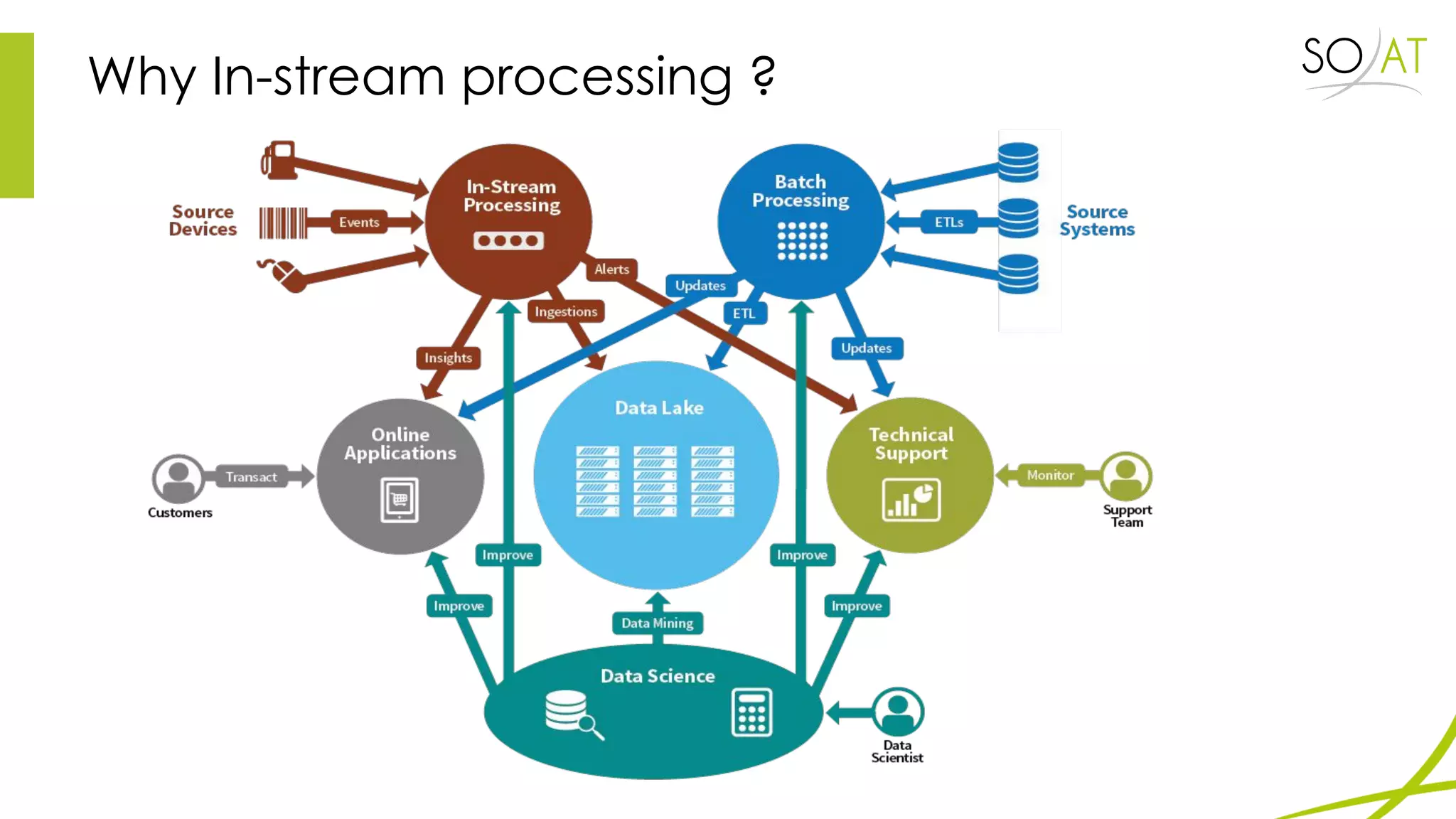

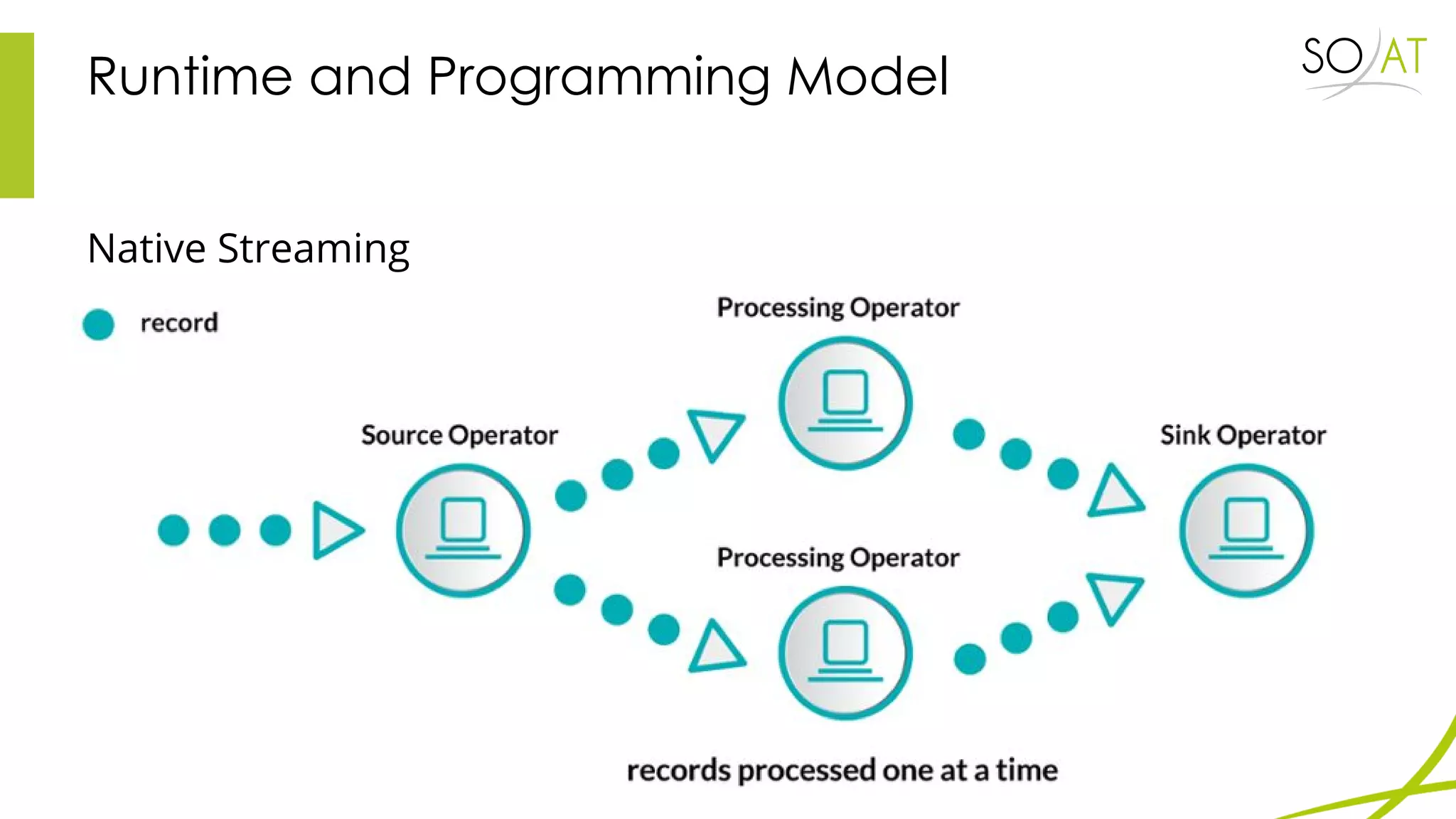

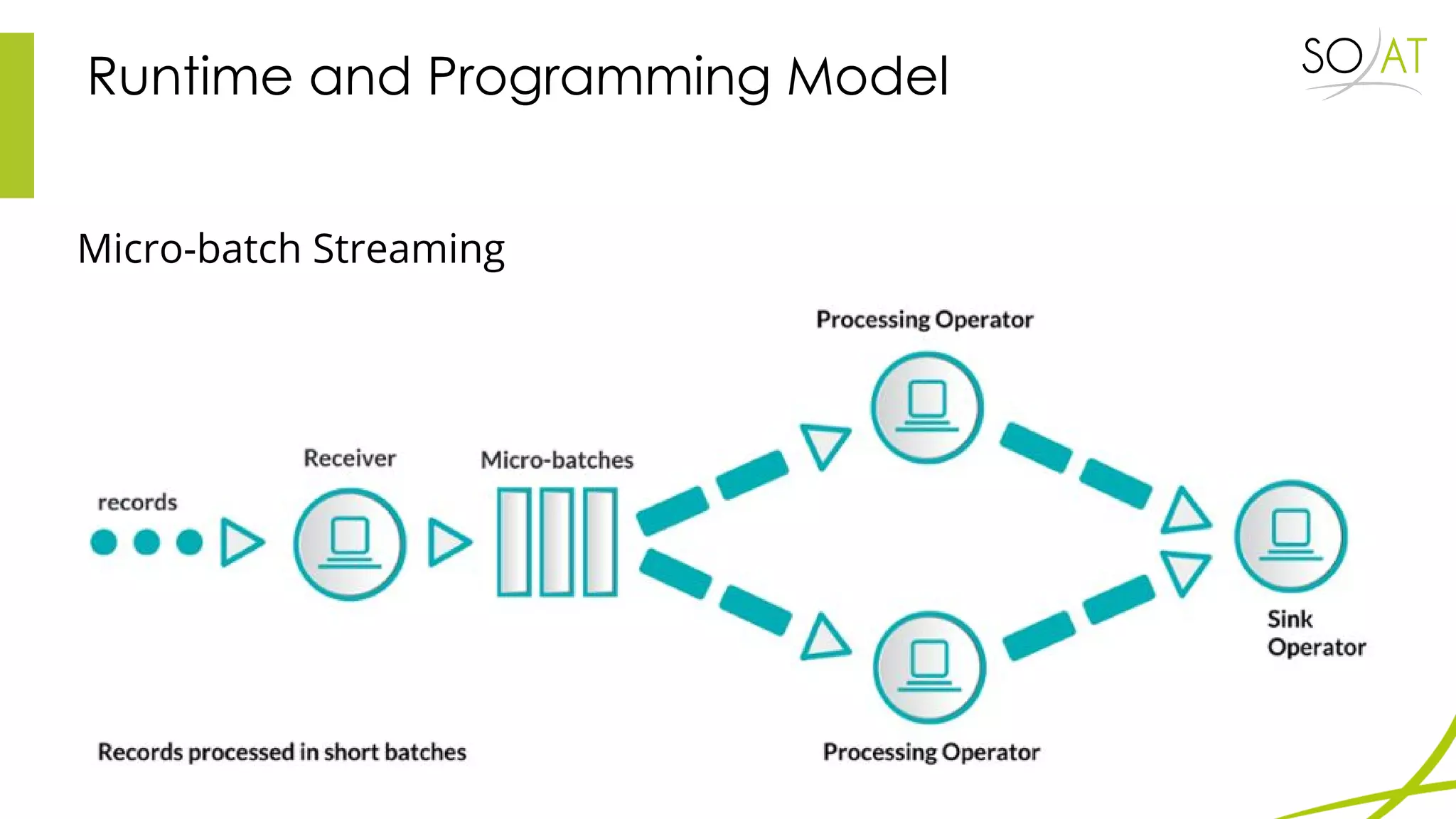

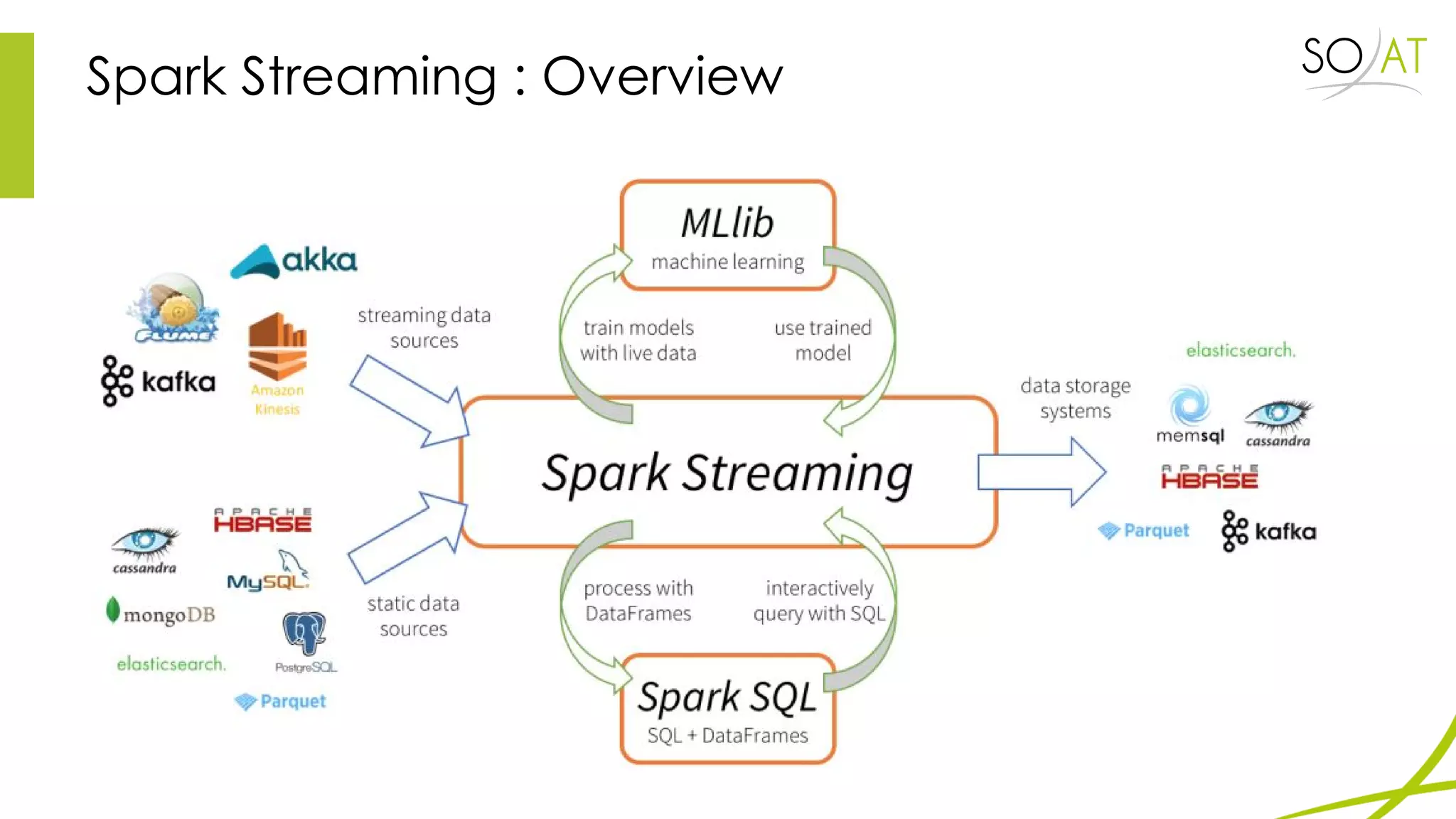

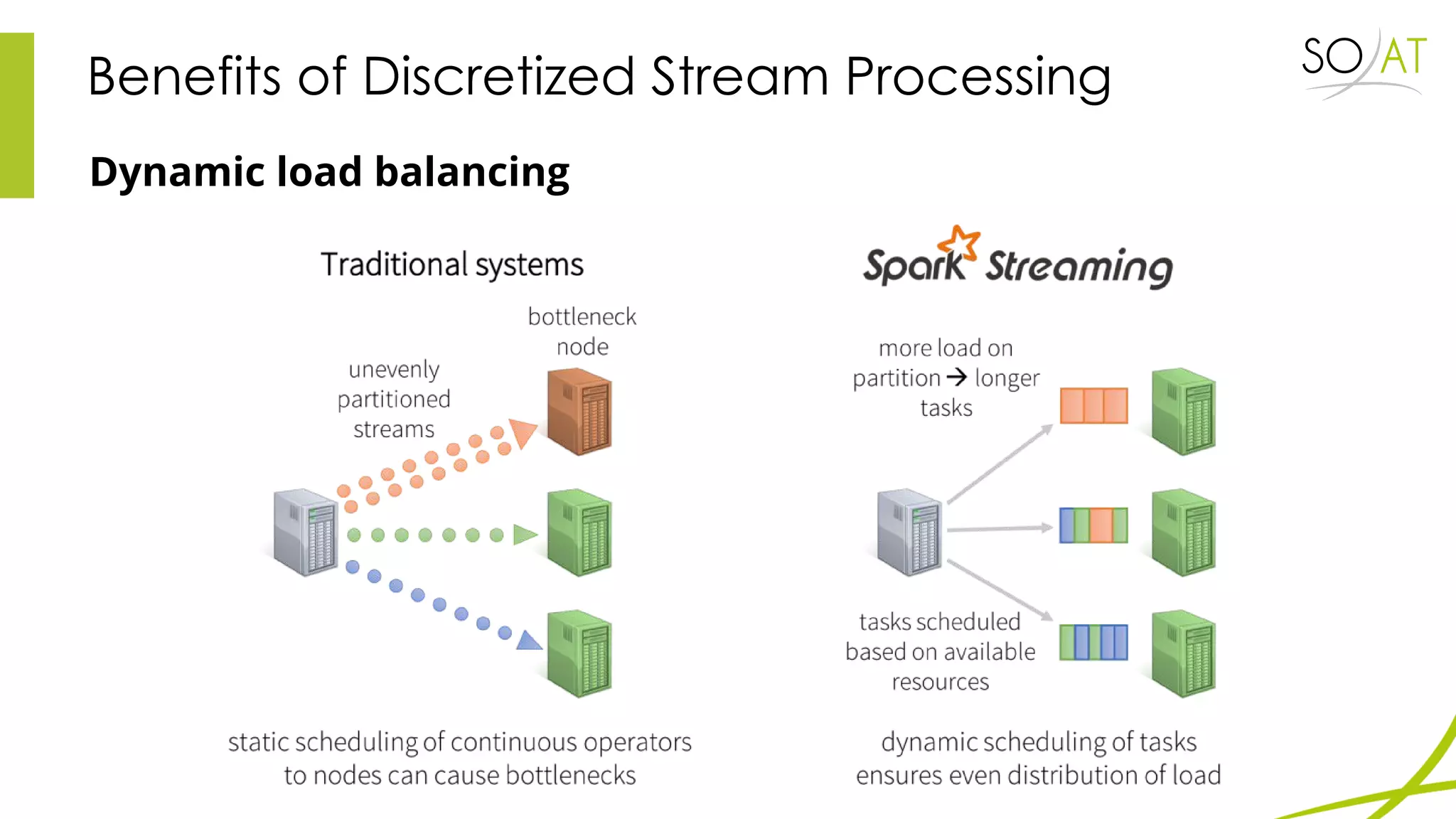

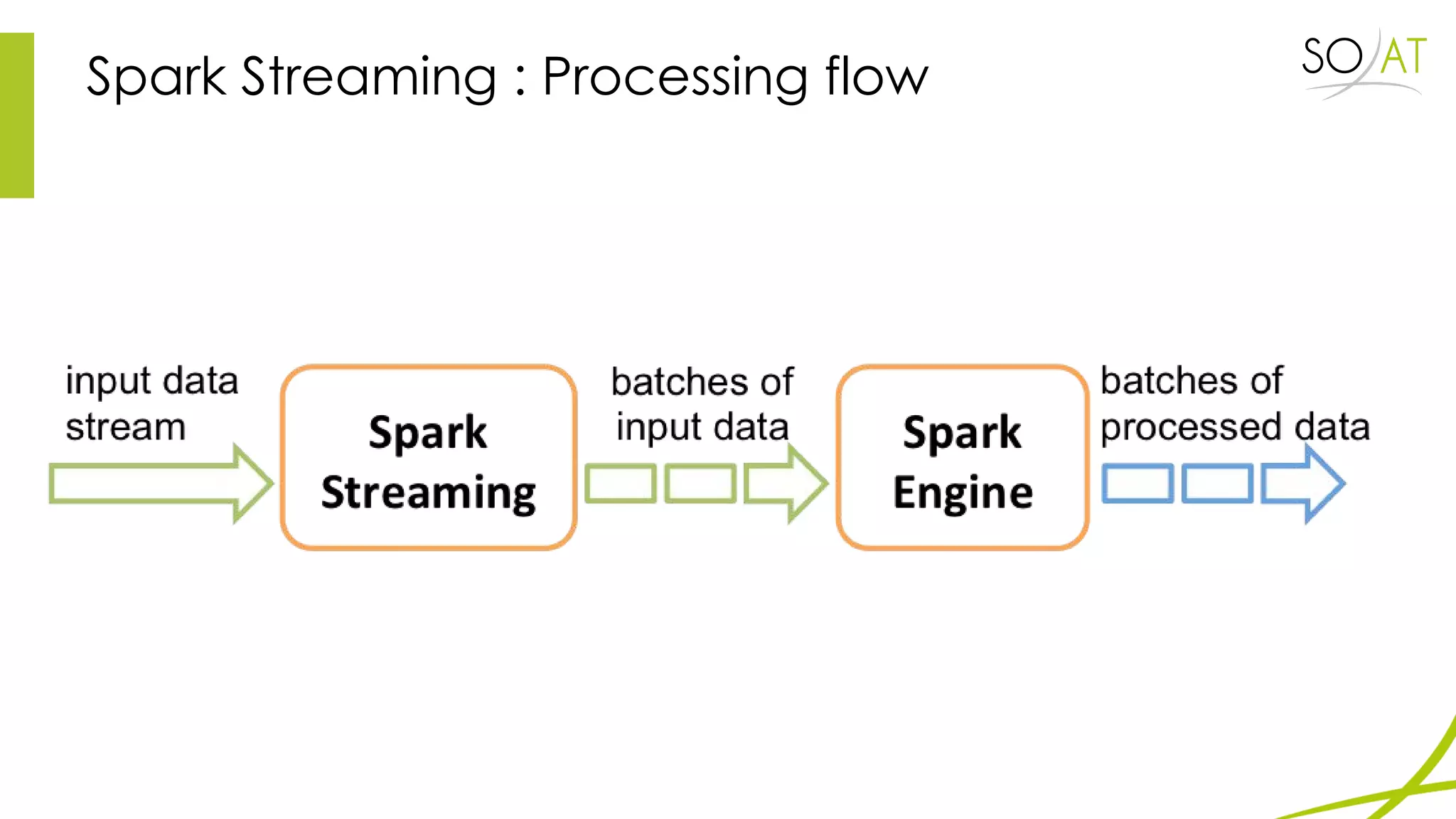

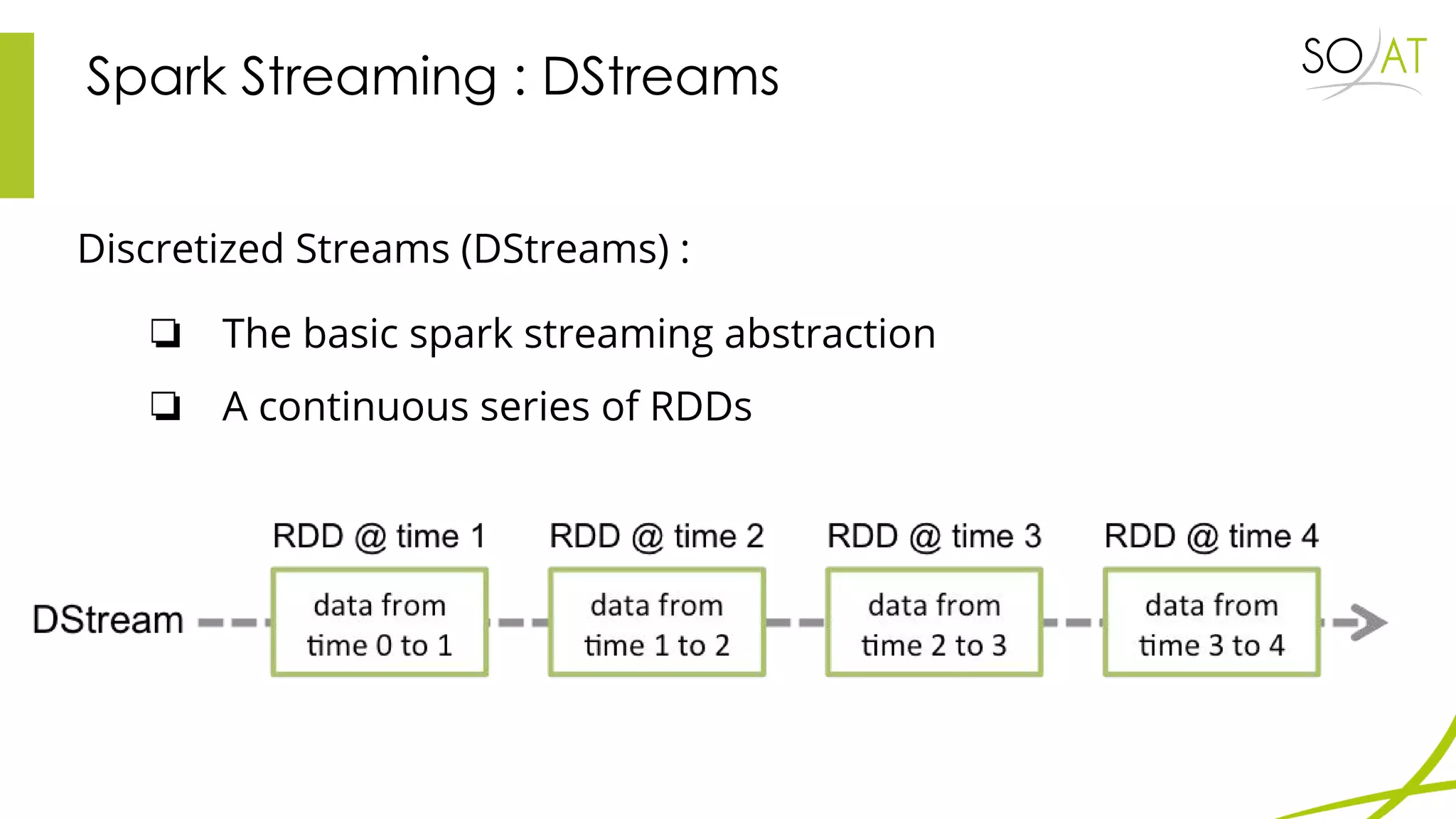

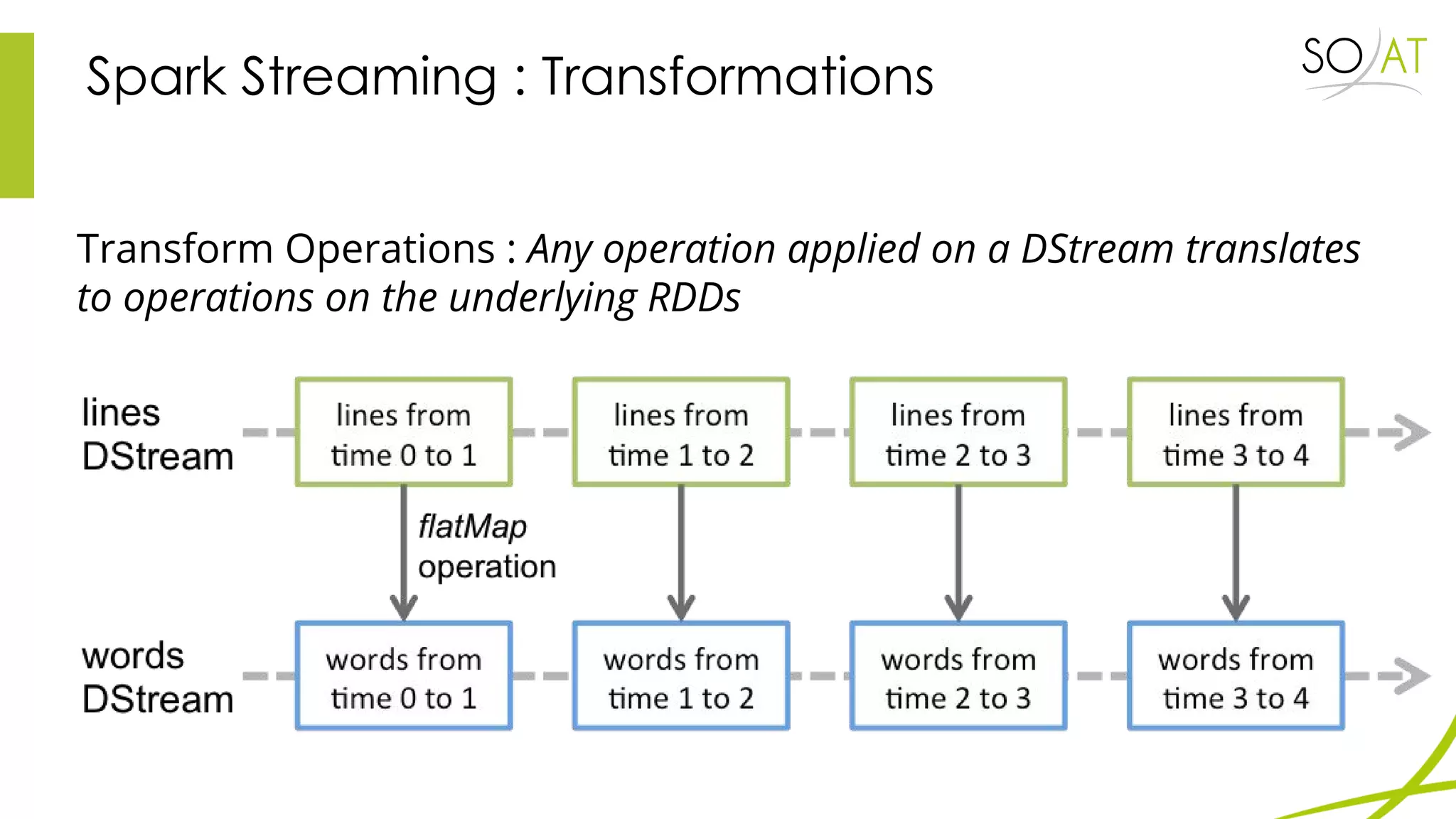

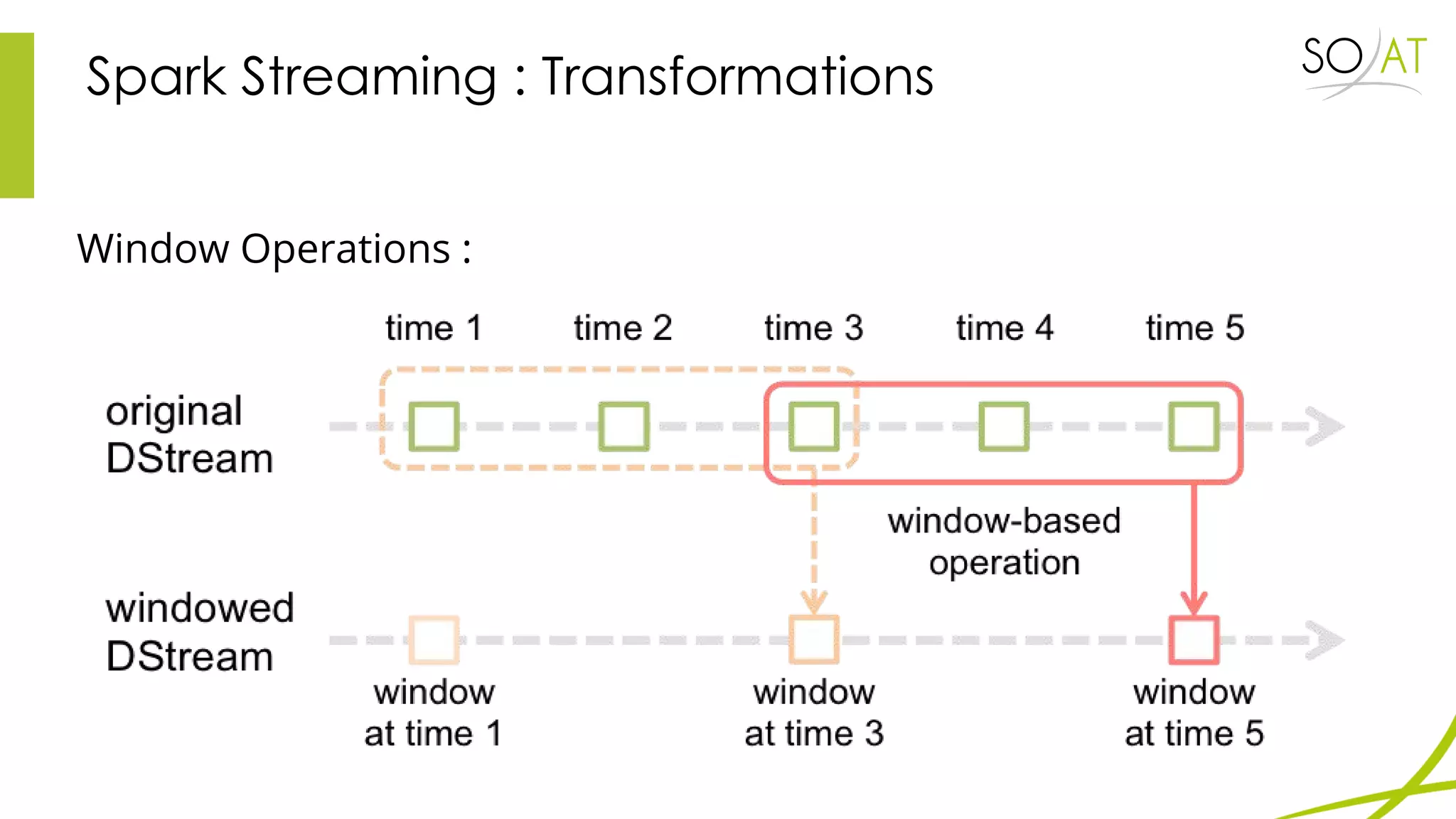

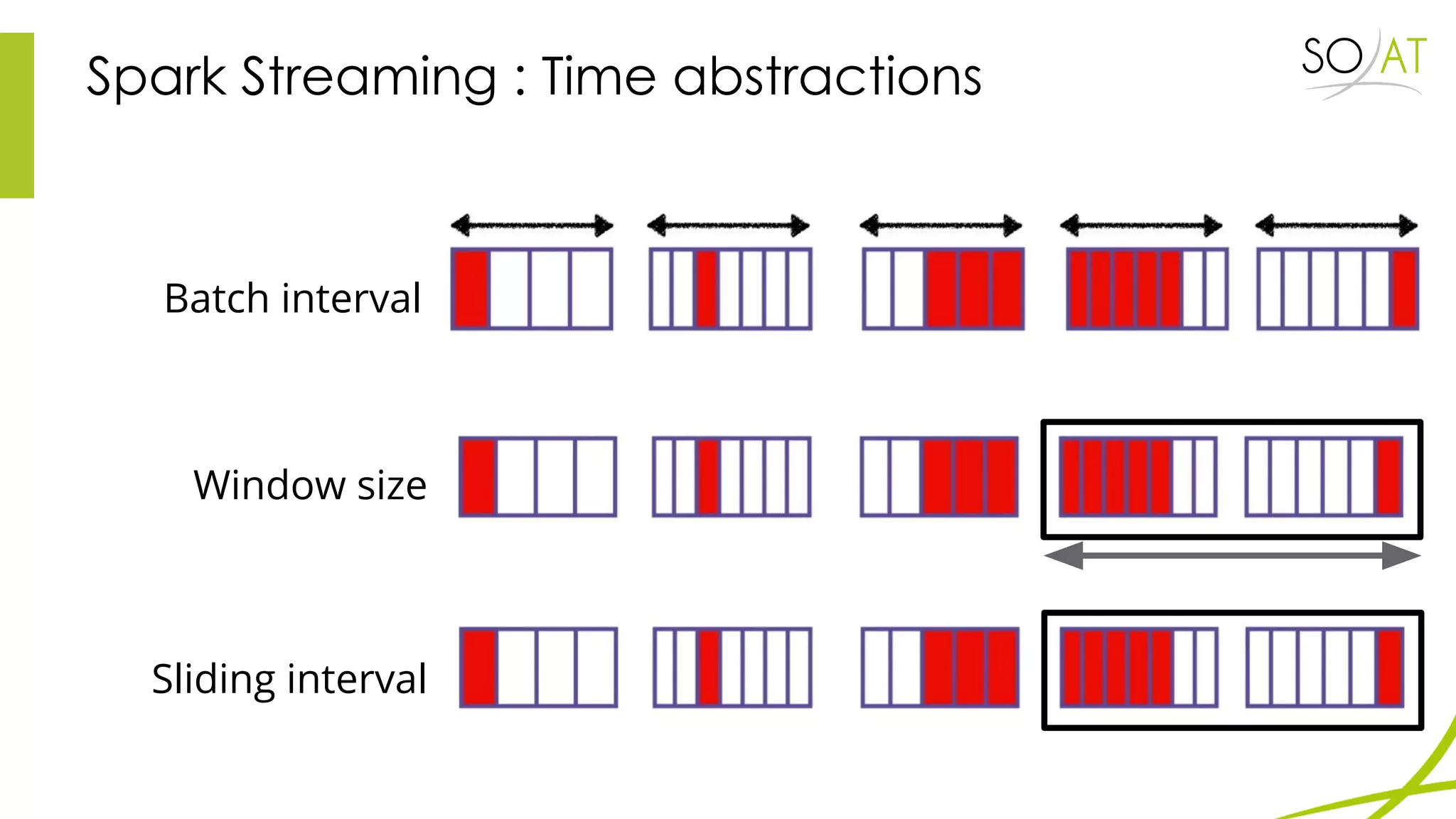

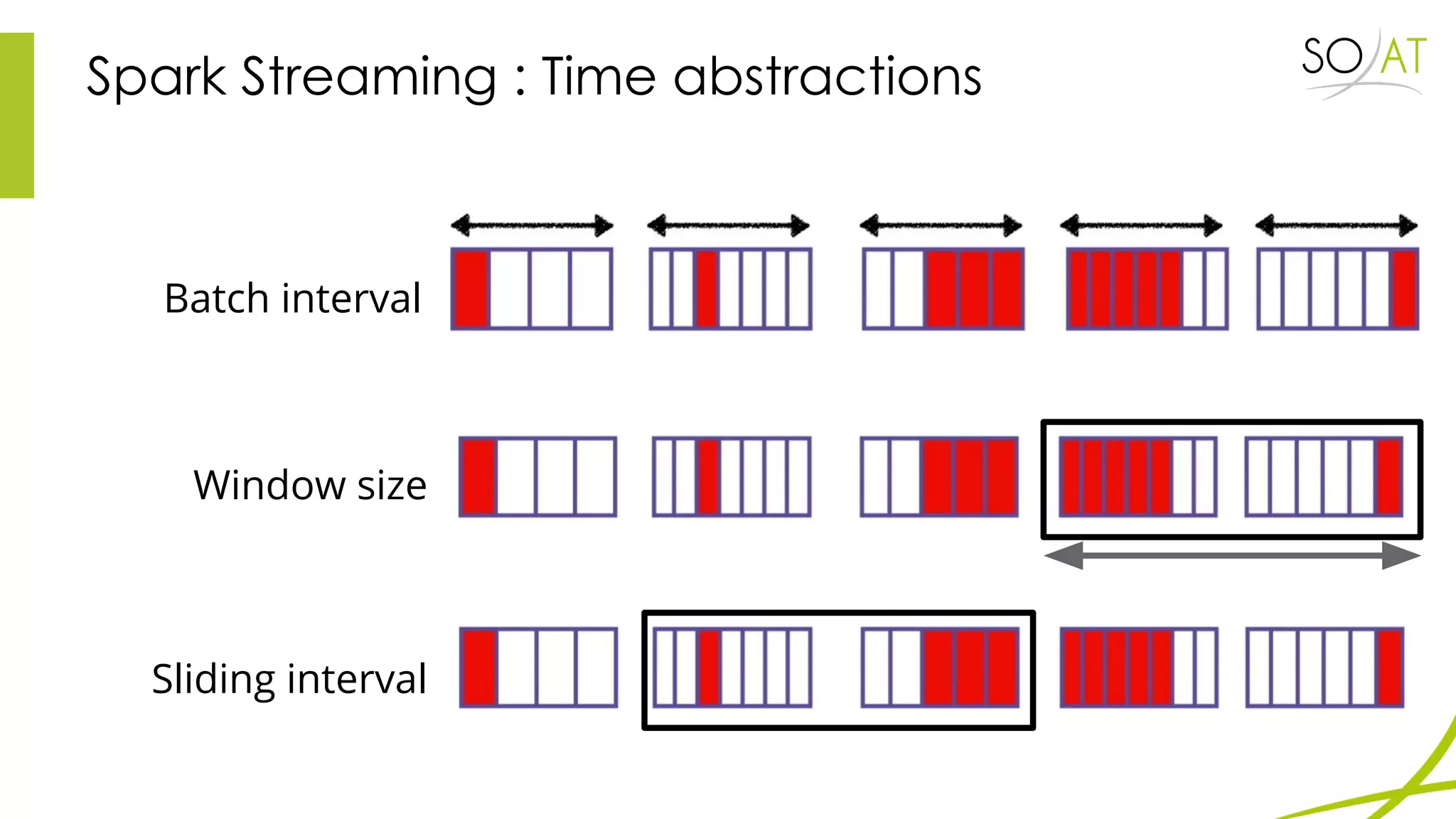

This document provides an overview of Spark, including how it differs from MapReduce: Spark keeps more of the working data in memory and caches it for reuse across operations. It discusses Spark's ecosystem, core abstractions such as resilient distributed datasets (RDDs), and how Spark can run on Hadoop clusters. It also summarizes Spark Streaming, describing how it processes incoming data in micro-batches, provides windowing and transformation operations on discretized streams (DStreams), and unifies batch, streaming, and interactive analytics in one engine.
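
To make the micro-batch and windowing ideas concrete, here is a minimal sketch in plain Python. It does not use Spark or its API. The function names `micro_batches` and `windowed_counts`, the sample events, and the choice to measure window length and slide interval in batches are all assumptions made for illustration.

```python
from collections import Counter


def micro_batches(events, batch_interval):
    """Group (timestamp, word) events into consecutive fixed-length batches.

    Hypothetical helper: mimics the idea of cutting a stream into
    micro-batches of `batch_interval` seconds. Not a Spark function.
    """
    if not events:
        return []
    last_ts = max(ts for ts, _ in events)
    n_batches = int(last_ts // batch_interval) + 1
    batches = [[] for _ in range(n_batches)]
    for ts, word in events:
        batches[int(ts // batch_interval)].append(word)
    return batches


def windowed_counts(batches, window_length, slide_interval):
    """Count words over a sliding window of batches.

    Both `window_length` and `slide_interval` are measured in batches
    (an assumption of this sketch). One Counter is produced per slide.
    """
    results = []
    for end in range(slide_interval, len(batches) + 1, slide_interval):
        start = max(0, end - window_length)
        window = [word for batch in batches[start:end] for word in batch]
        results.append(Counter(window))
    return results


# Illustrative input: (timestamp in seconds, word) pairs.
events = [(0.2, "a"), (0.7, "b"), (1.1, "a"), (2.5, "c"), (3.0, "a"), (3.9, "b")]

batches = micro_batches(events, batch_interval=1.0)
counts = windowed_counts(batches, window_length=2, slide_interval=1)

for i, window_count in enumerate(counts):
    print(i, dict(window_count))
```

Each entry in `counts` covers the two most recent batches, so a word that arrives in one batch contributes to two consecutive windows. That overlap is the behavior a sliding window is meant to capture; a real streaming engine would compute it incrementally rather than rescanning the batches as this sketch does.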