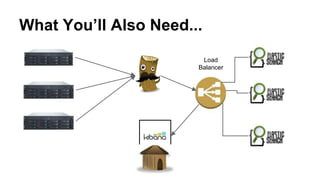

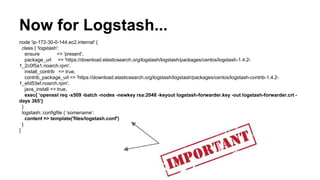

This document walks through deploying an ELK (Elasticsearch, Logstash, Kibana) stack with Puppet. It covers setting up Elasticsearch on EC2 instances with Puppet modules, configuring Logstash to accept logs and send them to Elasticsearch, and installing Kibana for visualization. The key steps are preparing a base EC2 image, configuring Elasticsearch clustering and plugins, defining the Logstash input, filters, and Elasticsearch output, and installing Kibana with a Puppet module that points it at Elasticsearch.

![1st: Prep a Base Image

Save yourself some headache and just prep an empty image that sets the puppet master in /etc/hosts

[ec2-user@ip-172-30-0-118 ~]$ cat /etc/hosts

127.0.0.1 localhost localhost.localdomain

172.30.0.41 puppet](https://image.slidesharecdn.com/deployingelkw-puppet-150414195034-conversion-gate01/85/Deploying-E-L-K-stack-w-Puppet-7-320.jpg)
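The same `/etc/hosts` entry could be baked into the image with Puppet's `host` resource instead of editing the file by hand. A minimal sketch, assuming it is applied locally with `puppet apply` while building the image (the manifest filename is hypothetical) and that the `host` type is available (it is built in to older Puppet releases and ships as a separate core module in newer ones):

```puppet
# base_image.pp (hypothetical filename), applied with: puppet apply base_image.pp
# Pins the name "puppet" to the master's private IP shown on the slide,
# so a fresh agent can find the master without DNS.
host { 'puppet':
  ensure => present,
  ip     => '172.30.0.41',
}
```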

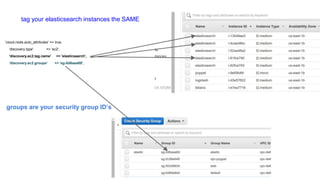

![package_url => 'https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-1.4.3.noarch.rpm',

java_install => true,

config => {

'cluster.name' => 'Frederick-Von-Clusterberg',

'cloud.aws.access_key' => 'SDFDSGGSDSDGFSRSGsgfse',

'cloud.aws.secret_key' => 'WhaTEVerUrKEYHaPp3n5t0B3ItWoodG0h3R3',

'cloud.aws.region' => 'us-east',

'cloud.node.auto_attributes' => true,

'discovery.type' => 'ec2',

'discovery.ec2.tag.name' => 'elasticsearch',

'discovery.ec2.groups' => 'sg-0d6aaa69',

'http.port' => '9200',

'http.enabled' => true,

'http.cors.enabled' => true,

'http.cors.allow-origin' => 'http://54.152.82.147',

'path.data' => '/opt/elasticsearch/data',

'discovery.zen.ping.multicast.enabled' => false,

'discovery.zen.ping.unicast.hosts' => ["172.30.0.189", "172.30.0.190","172.30.0.159","172.30.0.160","172.30.0.4"],

}

}

exec{'export ES_HEAP_SIZE=2g':}

The Elasticsearch package you want to use

Give your cluster a name](https://image.slidesharecdn.com/deployingelkw-puppet-150414195034-conversion-gate01/85/Deploying-E-L-K-stack-w-Puppet-12-320.jpg)
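An `exec` that runs `export` is unlikely to set the heap size for the Elasticsearch service: `export` is a shell builtin, and even when run through a shell the variable only lives in that short-lived process. One hedged alternative, assuming the elasticsearch Puppet module in use supports the `init_defaults` parameter (the 0.x releases of the elasticsearch-elasticsearch module do), is to add that parameter to the existing class declaration so the module writes the variable into the service's defaults file:

```puppet
# Sketch only: the other parameters from the slide (package_url, config, ...)
# are omitted here and would stay in the same class declaration.
class { 'elasticsearch':
  java_install  => true,
  init_defaults => {
    # Written to the service defaults file, so the daemon sees it at start-up.
    'ES_HEAP_SIZE' => '2g',
  },
}
```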

![Except it doesn't work.

'discovery.type' => 'ec2',

'http.port' => '9200',

'http.enabled' => true,

'http.cors.enabled' => true,

'http.cors.allow-origin' => 'http://54.152.82.147',

'path.data' => '/opt/elasticsearch/data',

'discovery.zen.ping.multicast.enabled' => false,

'discovery.zen.ping.unicast.hosts' => ["172.30.0.189", "172.30.0.190","172.30.0.159","172.30.0.160","172.30.0.4"],

}

}](https://image.slidesharecdn.com/deployingelkw-puppet-150414195034-conversion-gate01/85/Deploying-E-L-K-stack-w-Puppet-15-320.jpg)

![Now add some Plugins!!

elasticsearch::plugin { 'elasticsearch/elasticsearch-cloud-aws/2.4.1':

module_dir => 'cloud-aws',

instances => ['es1'],

}

elasticsearch::plugin { 'mobz/elasticsearch-head':

module_dir => 'head',

instances => ['es1'],

}

elasticsearch::plugin { 'lmenezes/elasticsearch-kopf':

module_dir => 'kopf',

instances => ['es1'],

}

elasticsearch::plugin { 'lukas-vlcek/bigdesk':

module_dir => 'bigdesk',

instances => ['es1'],

}

}

And make sure to add your instance name](https://image.slidesharecdn.com/deployingelkw-puppet-150414195034-conversion-gate01/85/Deploying-E-L-K-stack-w-Puppet-19-320.jpg)
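The `instances => ['es1']` parameter refers to a named Elasticsearch instance that has to be declared somewhere in the catalog. A minimal sketch of that declaration, assuming a module version that provides the `elasticsearch::instance` defined type (the 0.x series does):

```puppet
# Declares the instance that the plugin resources attach to.
# The title must match the name listed in each plugin's `instances` array.
elasticsearch::instance { 'es1': }
```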

![Filters….

filter {

grok {

type => "apache-access"

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

date {

match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]

}

geoip {

source => "clientip"

}

}](https://image.slidesharecdn.com/deployingelkw-puppet-150414195034-conversion-gate01/85/Deploying-E-L-K-stack-w-Puppet-26-320.jpg)
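To ship this filter with Puppet, one option is the `logstash::configfile` defined type from the elasticsearch-logstash module. This is a sketch under assumptions: that module is installed and the `logstash` class is declared elsewhere, and the resource title and `order` value are illustrative. It also writes the type check as a conditional rather than the per-filter `type` option, which differs from the slide.

```puppet
# Assumes the elasticsearch-logstash module and a declared `logstash` class.
# `order` controls where this fragment lands in the assembled config;
# 20 is an arbitrary value meant to sit between input and output fragments.
logstash::configfile { 'filter_apache':
  order   => 20,
  content => '
filter {
  if [type] == "apache-access" {
    grok {
      match => { "message" => "%{COMBINEDAPACHELOG}" }
    }
    date {
      match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
    }
    geoip {
      source => "clientip"
    }
  }
}
',
}
```

The single-quoted Puppet string keeps the Logstash double quotes and the `%{COMBINEDAPACHELOG}` pattern literal, since Puppet only interpolates `${...}` inside double-quoted strings.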

![like go, the keys you made earlier, logstash forwarder...

{

"network": {

"servers": [ "ip-172-30-0-144:1234" ],

"ssl key":"/root/.logstash/logstash-forwarder.key",

"ssl ca": "/root/.logstash/logstash-forwarder.crt",

"timeout": 120

},

"files": [

{

"paths": [

"/home/logdir/access*[^.][^g][^z]"

],

"start_position": "beginning",

"fields": { "type": "apache-access" }

}

]

}](https://image.slidesharecdn.com/deployingelkw-puppet-150414195034-conversion-gate01/85/Deploying-E-L-K-stack-w-Puppet-35-320.jpg)