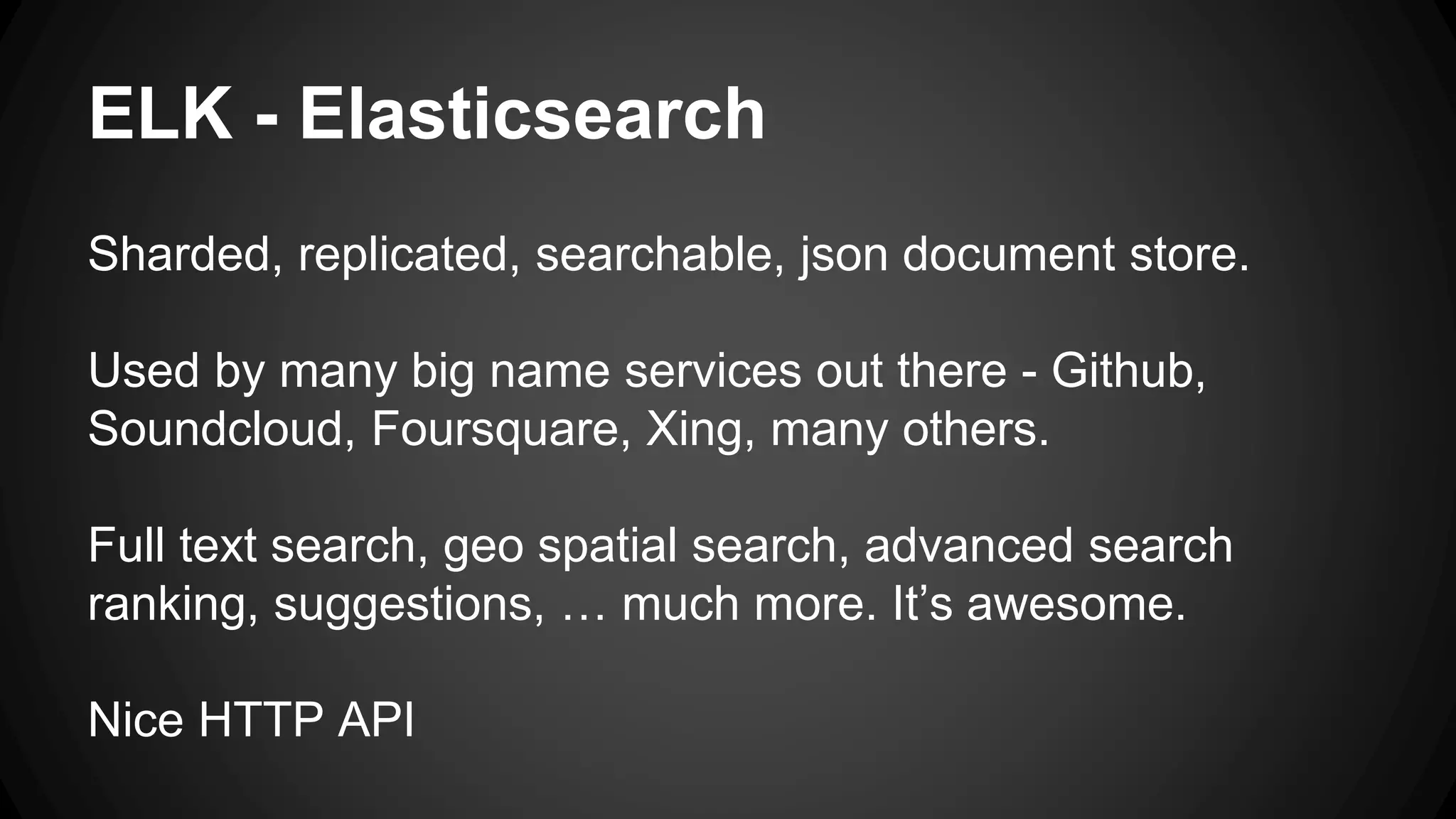

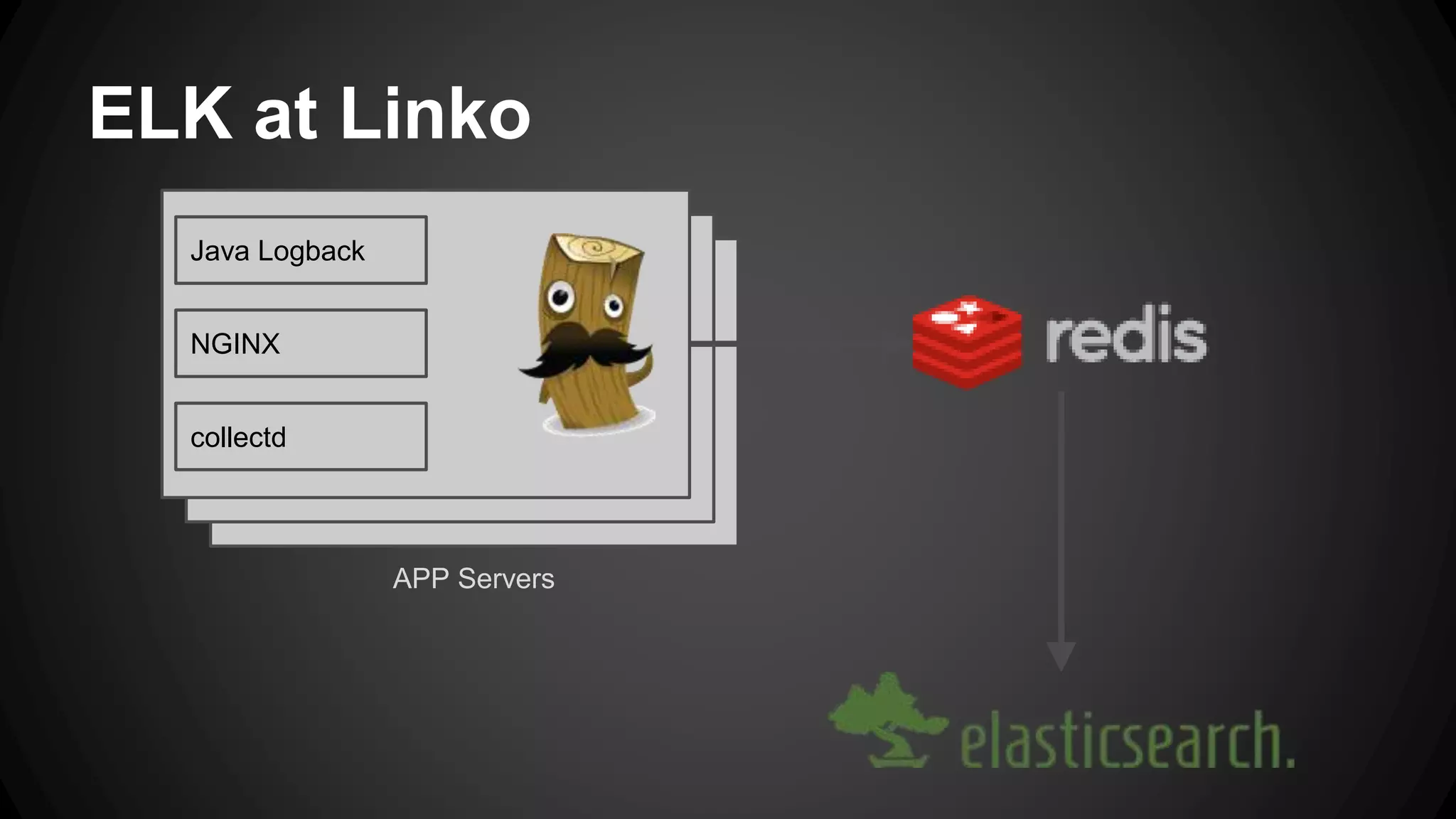

Jilles van Gurp presents the ELK stack and how Linko uses it to analyze logs from application servers, Nginx, and Collectd. The ELK stack consists of Elasticsearch for storage and search, Logstash for processing and transporting logs, and Kibana for visualization. At Linko, Logstash collects the logs, filters and parses them with grok patterns, and sends the resulting structured events to Elasticsearch. Kibana dashboards then let users explore and analyze those logs in Elasticsearch in near real time. The ELK stack is powerful, but there are some operational gotchas to watch out for, such as node restarts affecting cluster availability and field data caching.
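The hand-off from Logstash to Elasticsearch can be pictured as building a request body for the Elasticsearch bulk API, with one index per day of events. The sketch below makes several assumptions. It follows the traditional default index name of Logstash's elasticsearch output, `logstash-%{+YYYY.MM.dd}`. It leaves out document types, batching, and retries. `to_bulk_body` is a hypothetical helper and is not part of any Logstash API.

```python
import json
from datetime import datetime, timezone


def to_bulk_body(events):
    """Build an NDJSON body for Elasticsearch's _bulk endpoint (sketch only)."""
    lines = []
    for event in events:
        ts = event["@timestamp"]  # assumed: a timezone-aware UTC datetime
        # One index per UTC day, mirroring the logstash-%{+YYYY.MM.dd} naming scheme.
        action = {"index": {"_index": "logstash-" + ts.strftime("%Y.%m.%d")}}
        # The document itself, with the timestamp serialized as ISO 8601.
        source = {**event, "@timestamp": ts.isoformat()}
        lines.append(json.dumps(action))
        lines.append(json.dumps(source))
    if not lines:
        return ""
    # The bulk API requires every line, including the last, to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    event = {
        "@timestamp": datetime(2014, 5, 16, 17, 23, 14, tzinfo=timezone.utc),
        "type": "nginx_access",
        "status": "200",
    }
    print(to_bulk_body([event]), end="")
```

Daily indices are convenient for log data because old days can be dropped by deleting whole indices rather than individual documents.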

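![Linko Logstash - App Server (1)](https://image.slidesharecdn.com/w1adukd4r5i0gd1oo5bj-140516192314-phpapp01/75/Elk-stack-18-2048.jpg)

```
input {
  file {
    type => "nginx_access"
    path => ["/var/log/nginx/*.log"]
    exclude => ["*.gz", "error.*"]
    discover_interval => 10
    # Records how far each file has been read, so restarts resume where they left off.
    sincedb_path => "/opt/logstash/sincedb-access-nginx"
  }
}

filter {
  # Only events from the nginx file input go through these filters.
  if [type] == "nginx_access" {
    grok {
      patterns_dir => ["/opt/logstash/patterns"]
      # Try the pattern with upstream fields first, then fall back to the plain one.
      match => { "message" => ["%{NGINXACCESSWITHUPSTR}", "%{NGINXACCESS}"] }
    }
    date {
      locale => "en"
      # Use nginx's time_local as the event's @timestamp.
      match => ["time_local", "dd/MMM/yyyy:HH:mm:ss Z"]
    }
  }
}
```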
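What the input and date sections do to a file name and a timestamp can be approximated in a few lines of Python. This is a minimal sketch, not Logstash itself. `select_files` and `parse_time_local` are hypothetical helpers. The strptime format is assumed to be equivalent to the Joda-Time pattern used by the date filter. The directory part of `path` is treated as fixed, so only file names are matched.

```python
import os
from datetime import datetime, timezone
from fnmatch import fnmatch


# Hypothetical helper mimicking the file input's path/exclude settings.
# Logstash matches `exclude` globs against the file name, not the full path.
def select_files(paths, include="*.log", excludes=("*.gz", "error.*")):
    selected = []
    for path in paths:
        name = os.path.basename(path)
        if fnmatch(name, include) and not any(fnmatch(name, e) for e in excludes):
            selected.append(path)
    return selected


# Assumed strptime equivalent of the Joda pattern "dd/MMM/yyyy:HH:mm:ss Z".
# %b expects English month abbreviations in Python's default C locale,
# which is what locale => "en" ensures on the Logstash side.
NGINX_TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


# Hypothetical helper: parse nginx's $time_local and normalize it to UTC,
# the time zone Logstash uses for @timestamp.
def parse_time_local(value):
    return datetime.strptime(value, NGINX_TIME_FORMAT).astimezone(timezone.utc)


if __name__ == "__main__":
    print(select_files([
        "/var/log/nginx/access.log",
        "/var/log/nginx/error.log",
        "/var/log/nginx/access.log.1.gz",
    ]))  # ['/var/log/nginx/access.log']
    print(parse_time_local("16/May/2014:19:23:14 +0200").isoformat())
    # 2014-05-16T17:23:14+00:00
```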

![Grok pattern for NGINX](https://image.slidesharecdn.com/w1adukd4r5i0gd1oo5bj-140516192314-phpapp01/75/Elk-stack-19-2048.jpg)

```
# Pattern file in patterns_dir (/opt/logstash/patterns); each definition must stay on a single line.
NGINXACCESSWITHUPSTR %{IPORHOST:remote_addr} - %{USERNAME:remote_user} \[%{HTTPDATE:time_local}\] "%{WORD:method} %{URIPATHPARAM:request} %{GREEDYDATA:protocol}" %{INT:status} %{INT:body_bytes_sent} %{QS:http_referer} %{QS:http_user_agent} %{QS:backend} %{BASE16FLOAT:duration}
NGINXACCESS %{IPORHOST:remote_addr} - %{USERNAME:remote_user} \[%{HTTPDATE:time_local}\] %{QS:request} %{INT:status} %{INT:body_bytes_sent} %{QS:http_referer} %{QS:http_user_agent}
```
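The following rough Python translation shows how grok applies these two definitions. It is a sketch built on several assumptions. The base patterns (`IPORHOST`, `QS`, `BASE16FLOAT`, and so on) are simplified stand-ins for the real definitions that ship with Logstash. `compile_grok` and `parse_line` are hypothetical helpers. Patterns are tried in the listed order and the first match wins, as the grok filter does by default.

```python
import re

# Simplified stand-ins (assumption) for grok's base patterns; the real
# definitions shipped with Logstash are stricter and cover more cases.
BASE_PATTERNS = {
    "IPORHOST": r"[\w.:-]+",
    "USERNAME": r"[a-zA-Z0-9._-]+",
    "HTTPDATE": r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}",
    "WORD": r"\w+",
    "URIPATHPARAM": r"\S+",
    "GREEDYDATA": r".*",
    "INT": r"[+-]?\d+",
    "QS": r'"(?:[^"\\]|\\.)*"',  # like grok's QS, the match keeps the quotes
    "BASE16FLOAT": r"[+-]?(?:0x)?(?:[0-9A-Fa-f]+(?:\.[0-9A-Fa-f]*)?|\.[0-9A-Fa-f]+)",
}

# The two custom definitions from the NGINX grok slide.
CUSTOM_PATTERNS = {
    "NGINXACCESSWITHUPSTR": (
        r'%{IPORHOST:remote_addr} - %{USERNAME:remote_user} \[%{HTTPDATE:time_local}\] '
        r'"%{WORD:method} %{URIPATHPARAM:request} %{GREEDYDATA:protocol}" '
        r"%{INT:status} %{INT:body_bytes_sent} %{QS:http_referer} %{QS:http_user_agent} "
        r"%{QS:backend} %{BASE16FLOAT:duration}"
    ),
    "NGINXACCESS": (
        r"%{IPORHOST:remote_addr} - %{USERNAME:remote_user} \[%{HTTPDATE:time_local}\] "
        r"%{QS:request} %{INT:status} %{INT:body_bytes_sent} %{QS:http_referer} %{QS:http_user_agent}"
    ),
}

GROK_REFERENCE = re.compile(r"%\{(\w+):(\w+)\}")


def compile_grok(pattern):
    """Expand each %{NAME:field} reference into a named regex group."""
    return re.compile(GROK_REFERENCE.sub(
        lambda m: "(?P<%s>%s)" % (m.group(2), BASE_PATTERNS[m.group(1)]), pattern))


# Order matters: the more specific pattern (with upstream fields) is tried first.
COMPILED = [(name, compile_grok(CUSTOM_PATTERNS[name]))
            for name in ("NGINXACCESSWITHUPSTR", "NGINXACCESS")]


def parse_line(line):
    """Return (pattern_name, fields) for the first matching pattern, or (None, {}).

    Where this returns (None, {}), Logstash would instead tag the event
    with _grokparsefailure.
    """
    for name, regex in COMPILED:
        match = regex.search(line)  # grok matching is unanchored by default
        if match:
            return name, match.groupdict()
    return None, {}


if __name__ == "__main__":
    line = ('10.0.0.1 - - [16/May/2014:19:23:14 +0200] "GET /api/items?id=42 HTTP/1.1" '
            '200 612 "-" "curl/7.35.0" "127.0.0.1:8080" 0.012')
    name, fields = parse_line(line)
    print(name, fields["protocol"], fields["duration"])
    # NGINXACCESSWITHUPSTR HTTP/1.1 0.012
```

Lines written without the upstream address and duration fail the first pattern and fall through to `NGINXACCESS`. In that case the whole request line stays in a single quoted `request` field.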