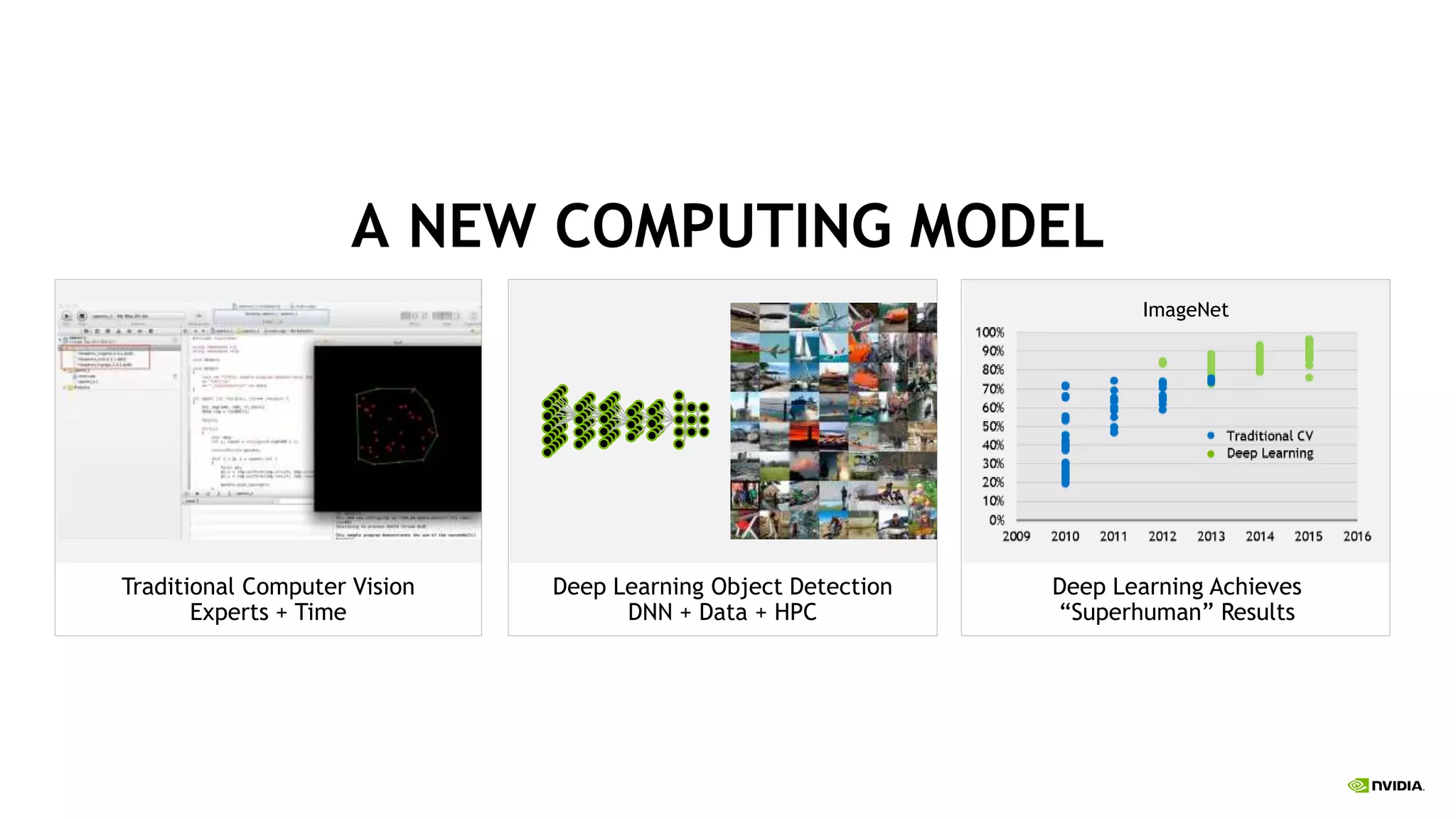

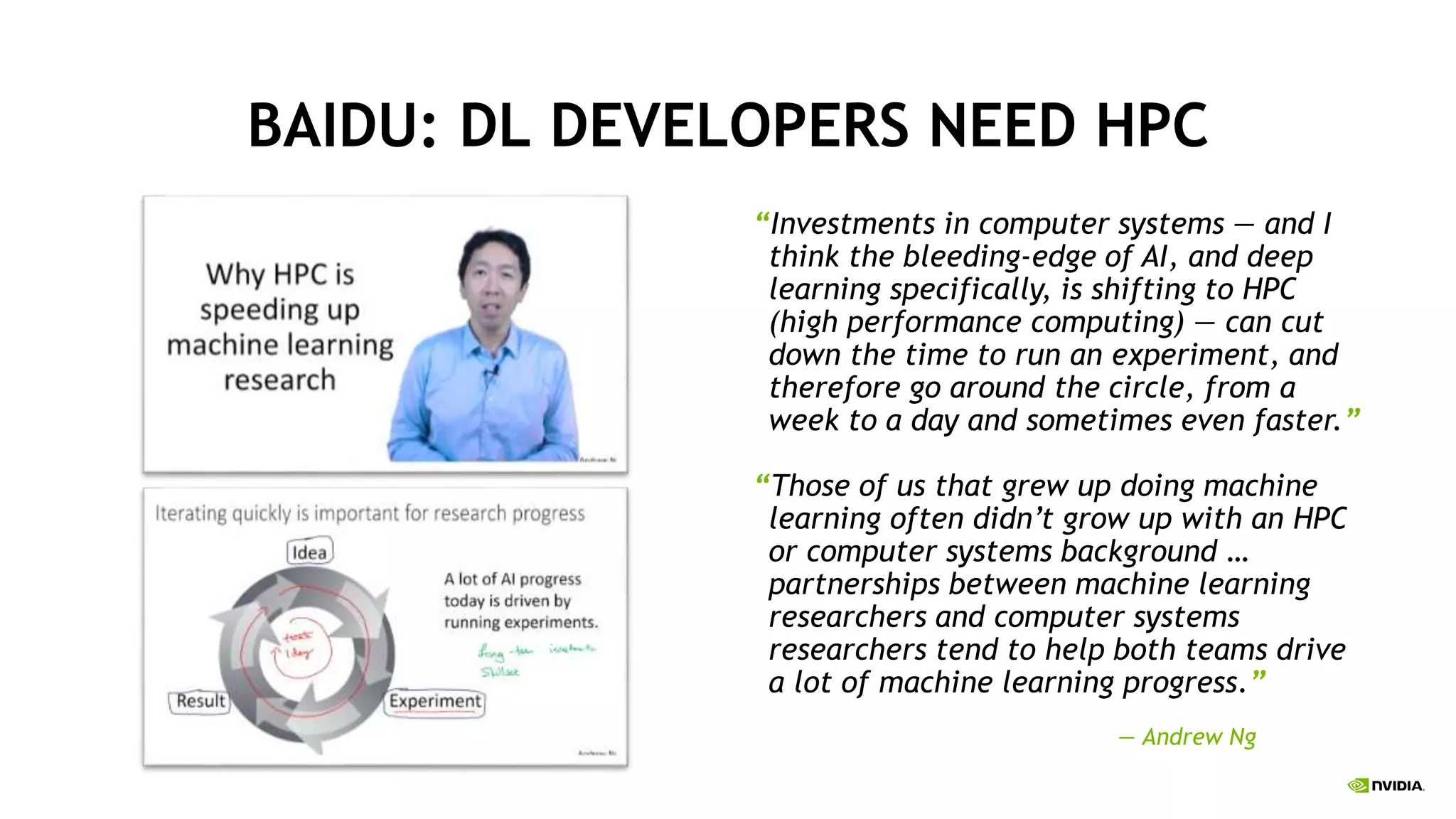

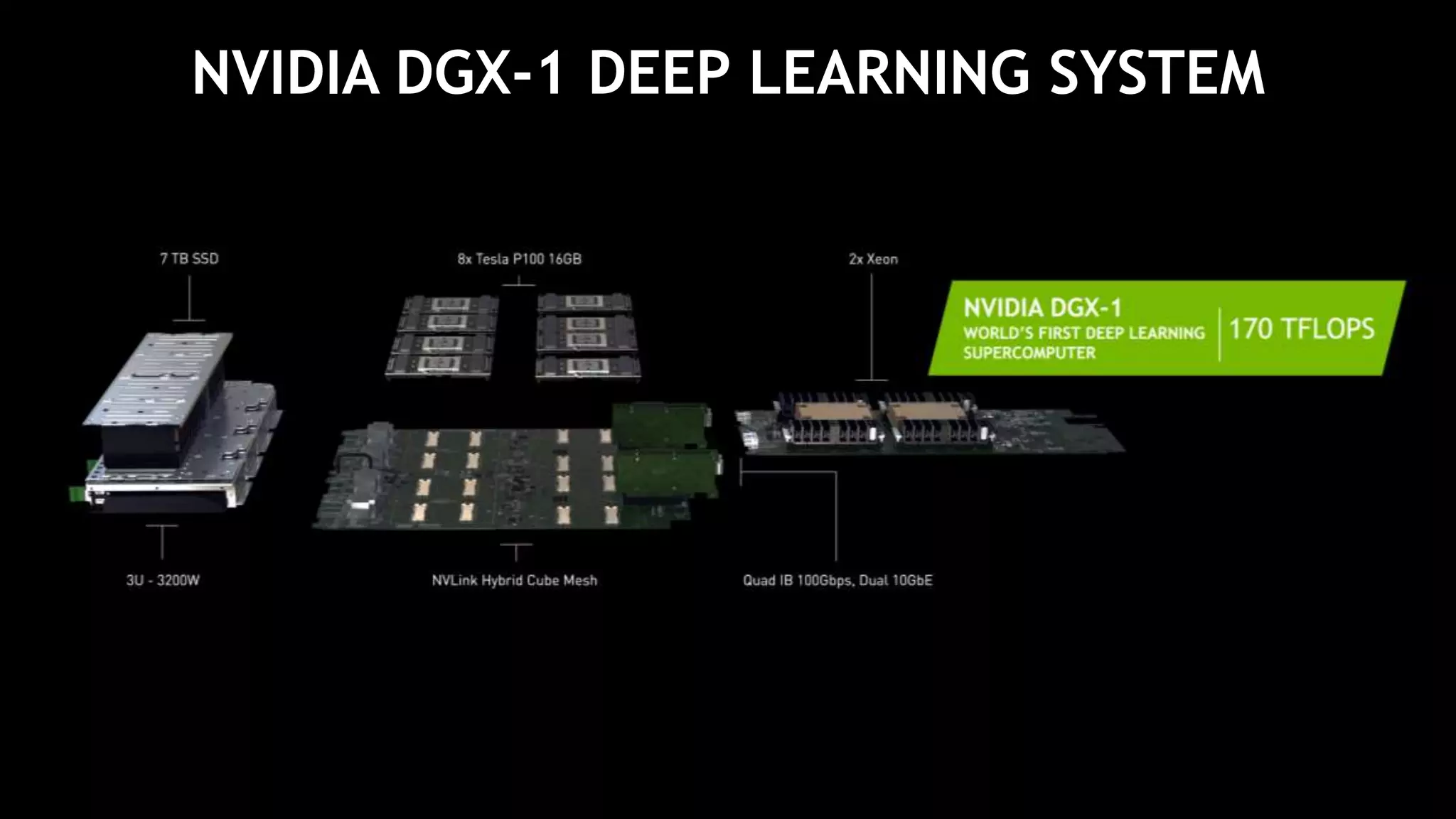

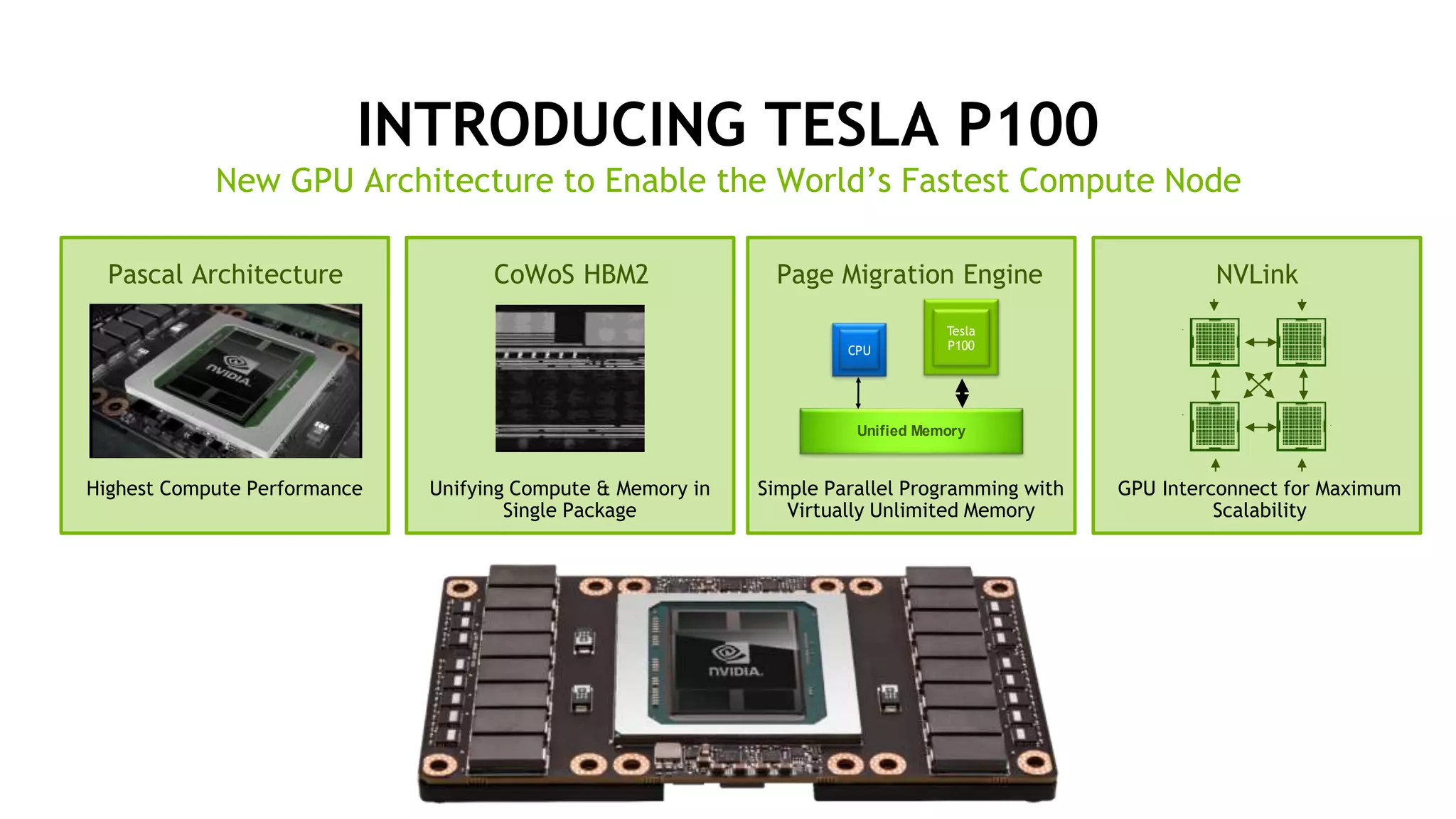

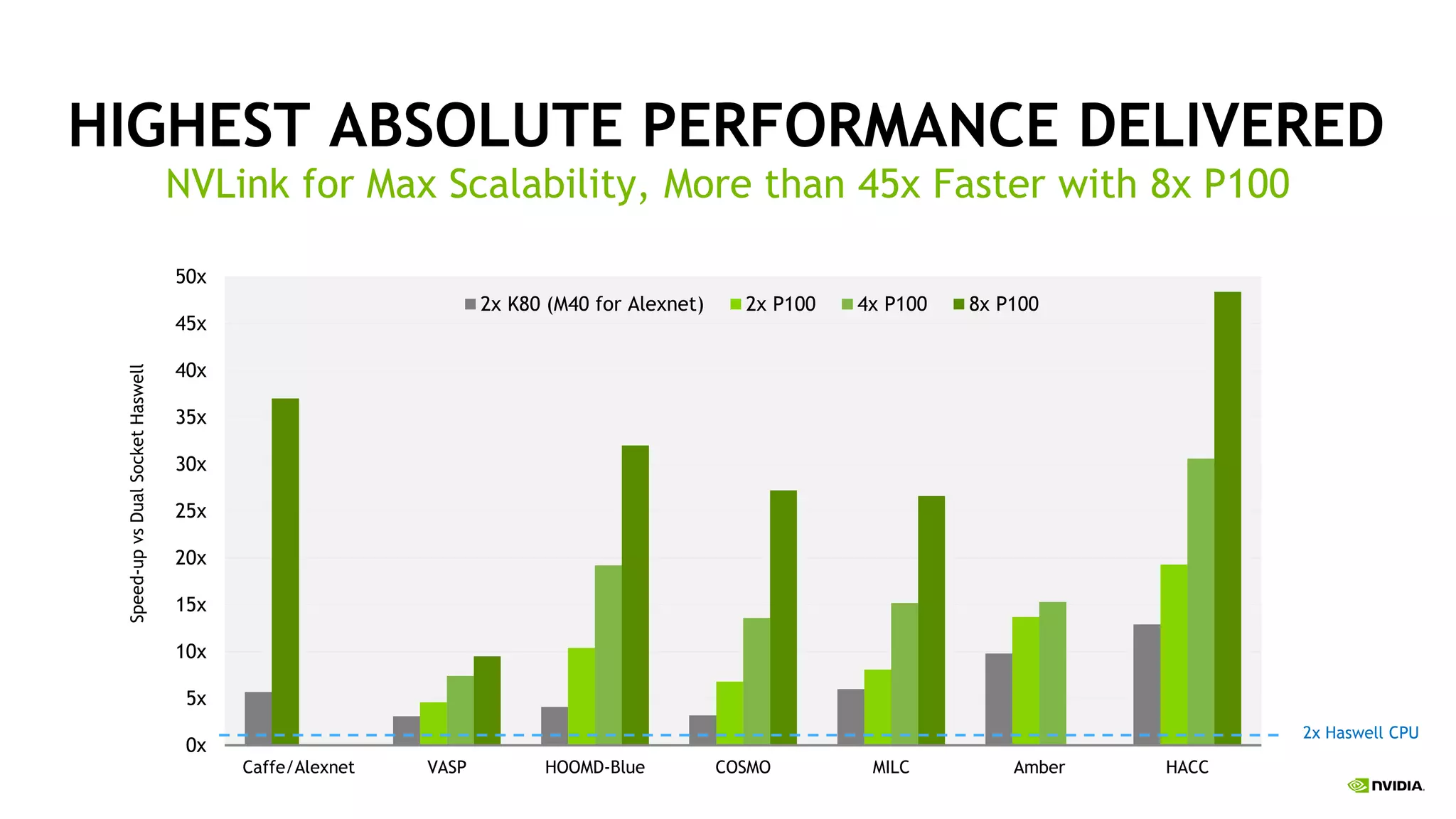

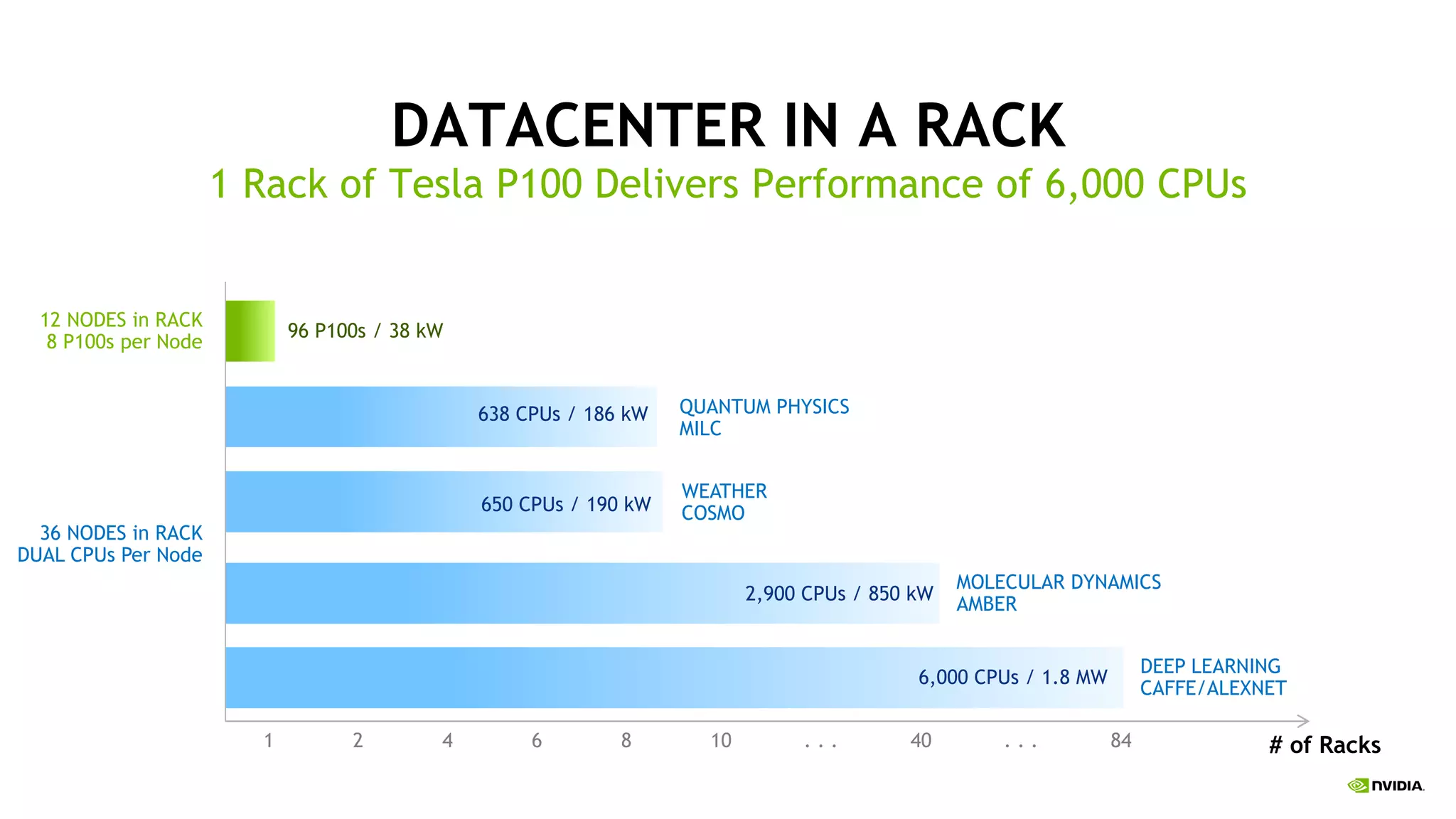

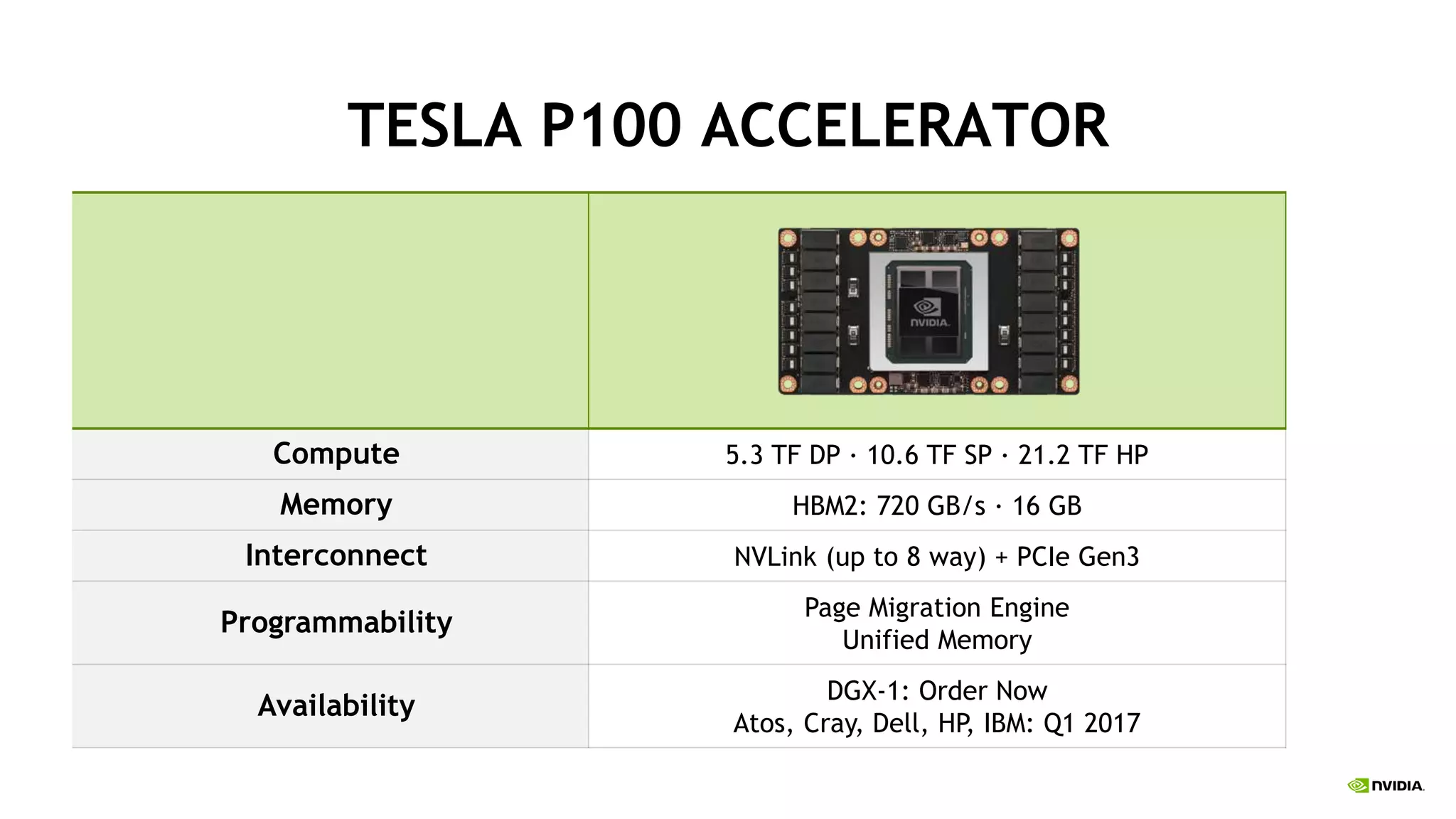

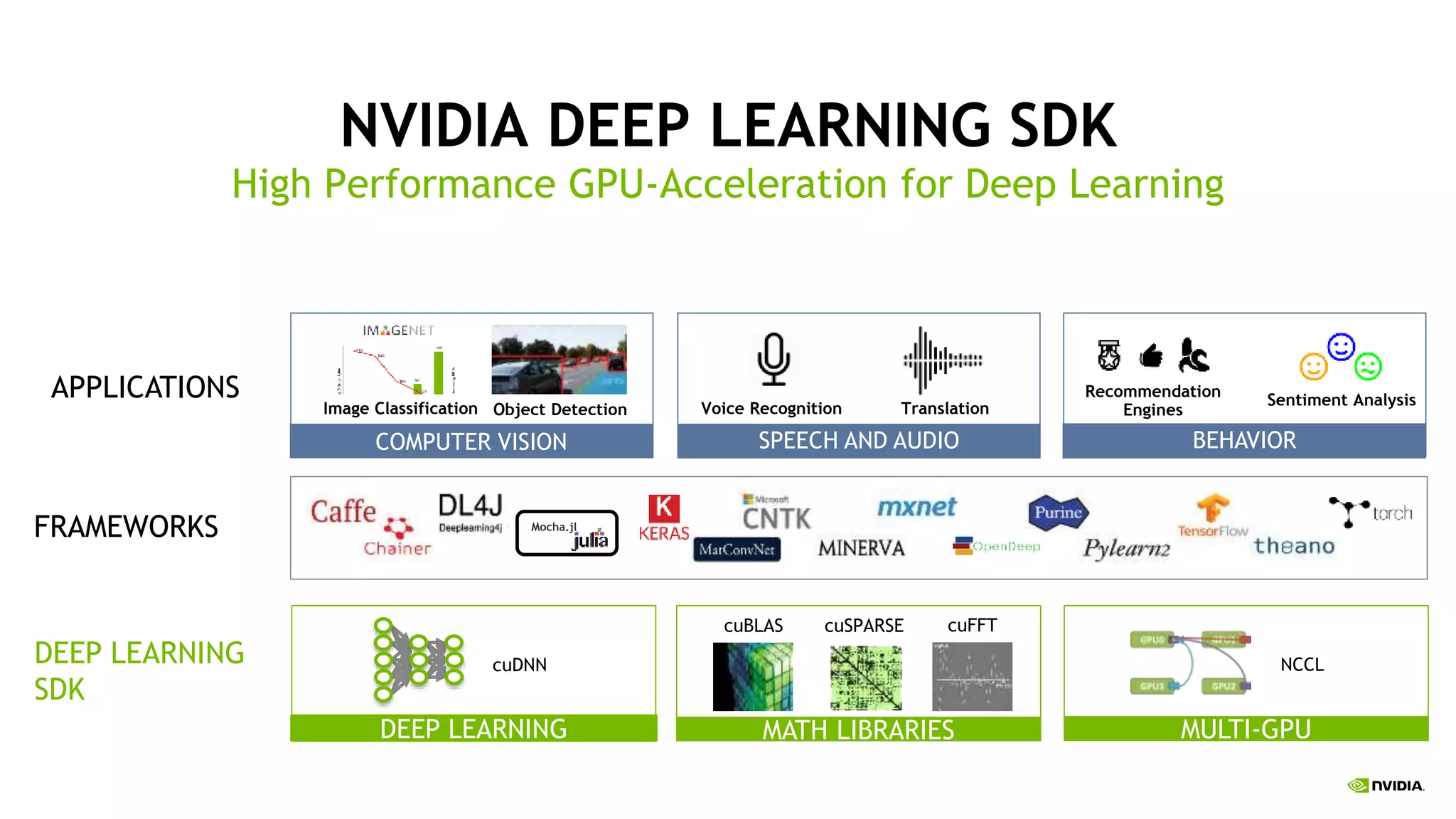

The document provides an update on deep learning and on the announcements from NVIDIA's GPU Technology Conference 2016 (GTC16). It highlights recent achievements in deep learning, such as object detection surpassing human-level performance. It also summarizes NVIDIA's latest products, including the DGX-1 deep learning supercomputer and the Tesla P100 GPU, along with improvements to software tools such as cuDNN that accelerate deep learning. Throughout, it emphasizes how these announcements and products are expected to advance deep learning research and applications.