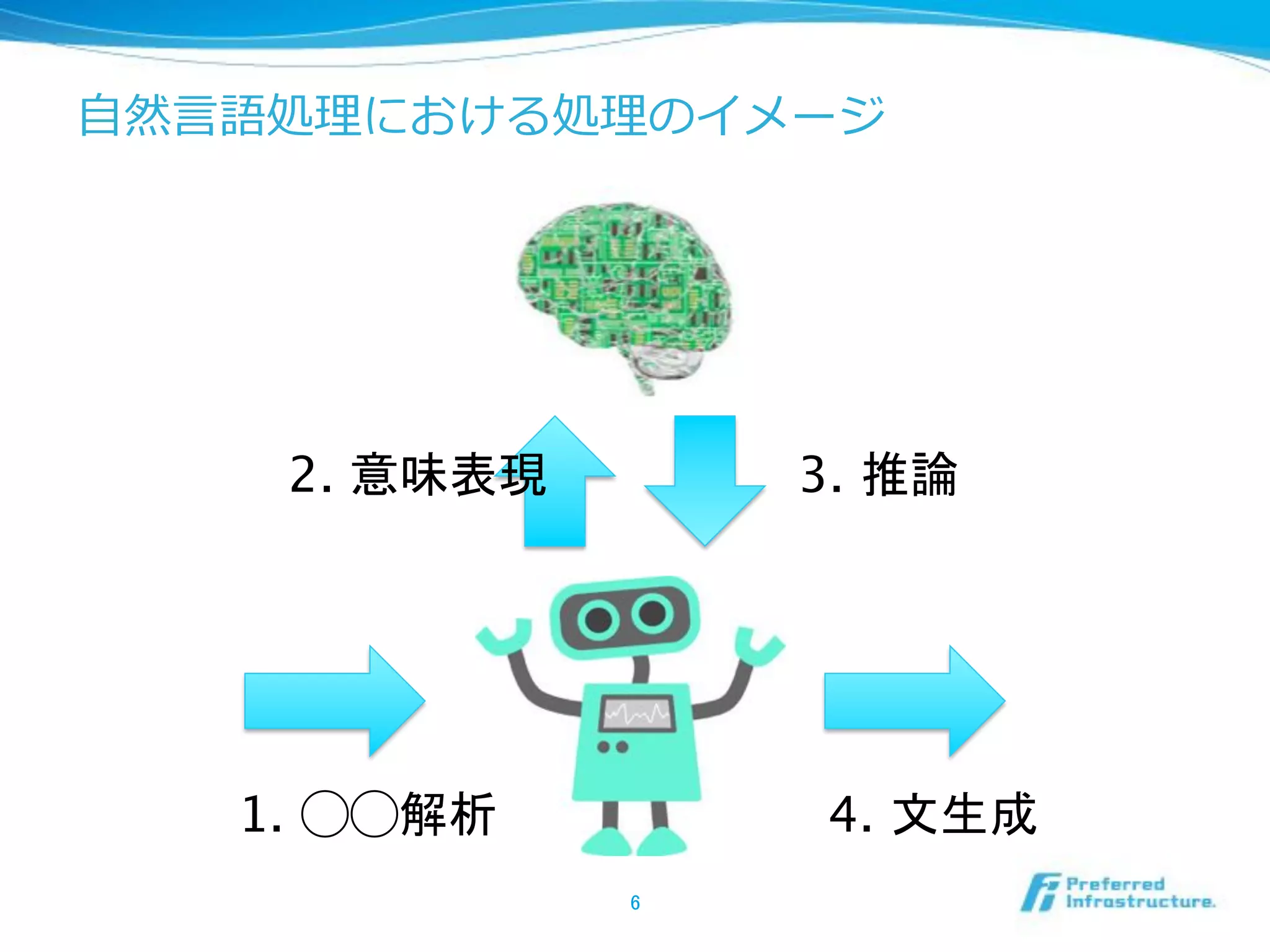

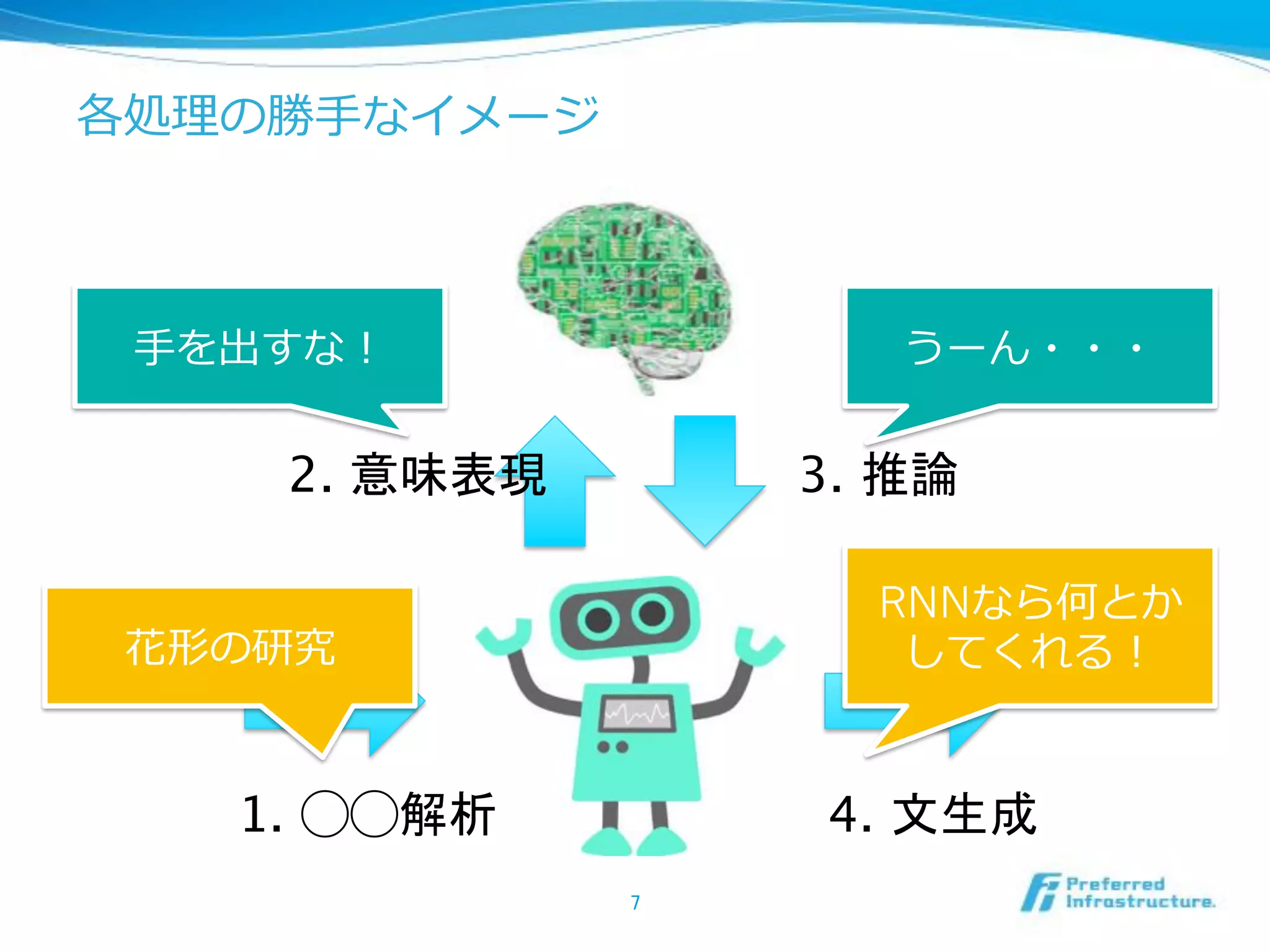

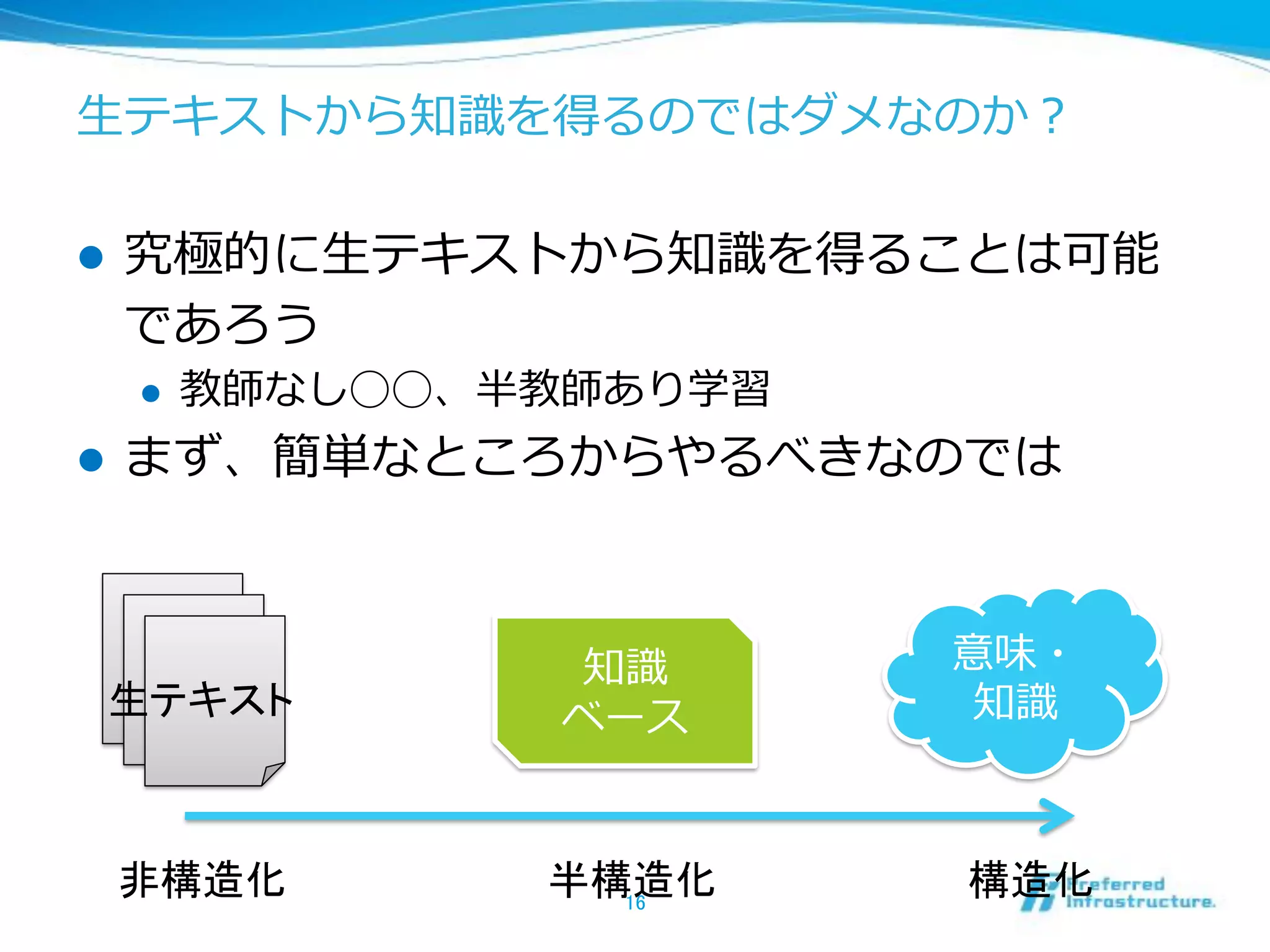

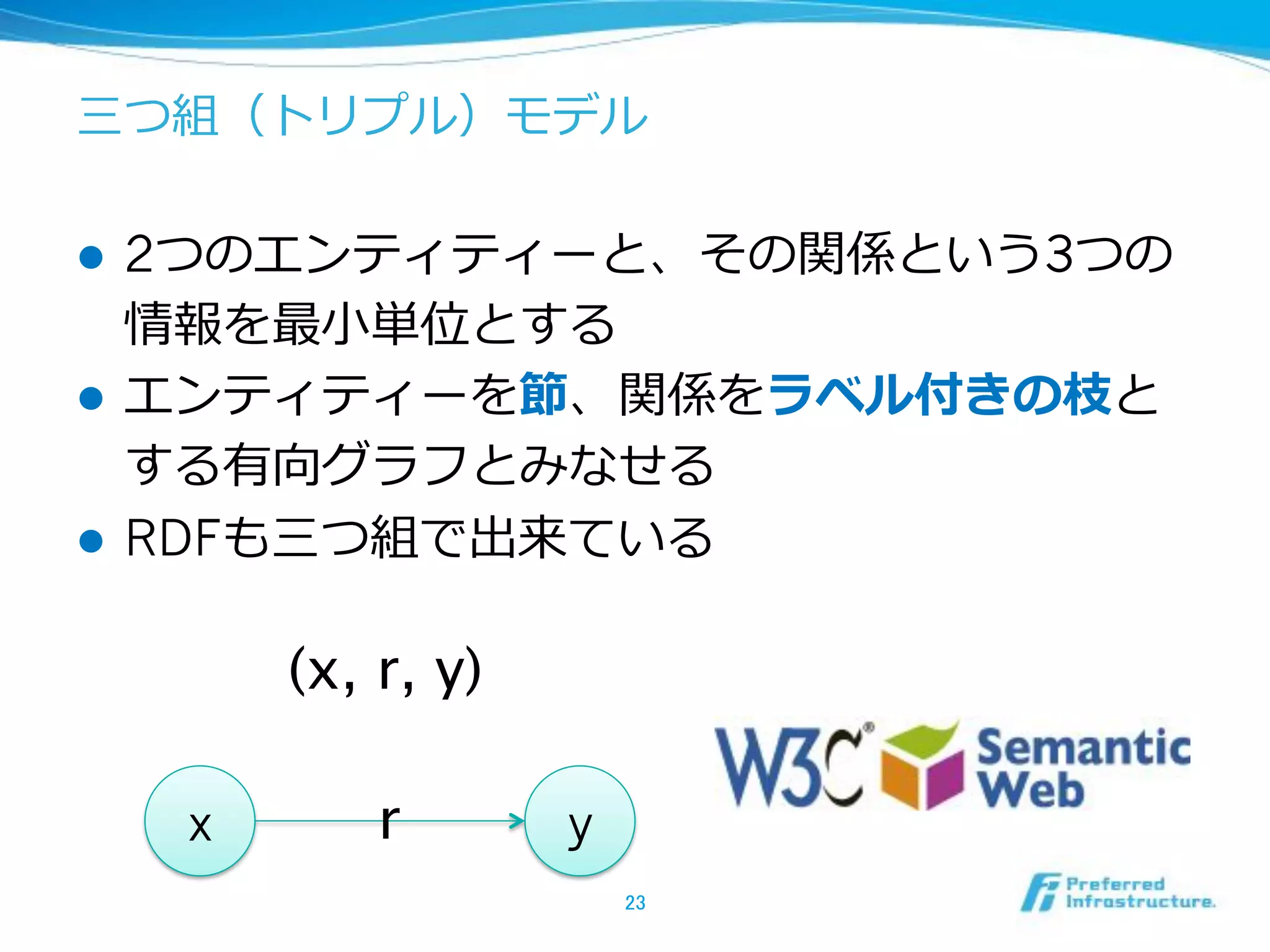

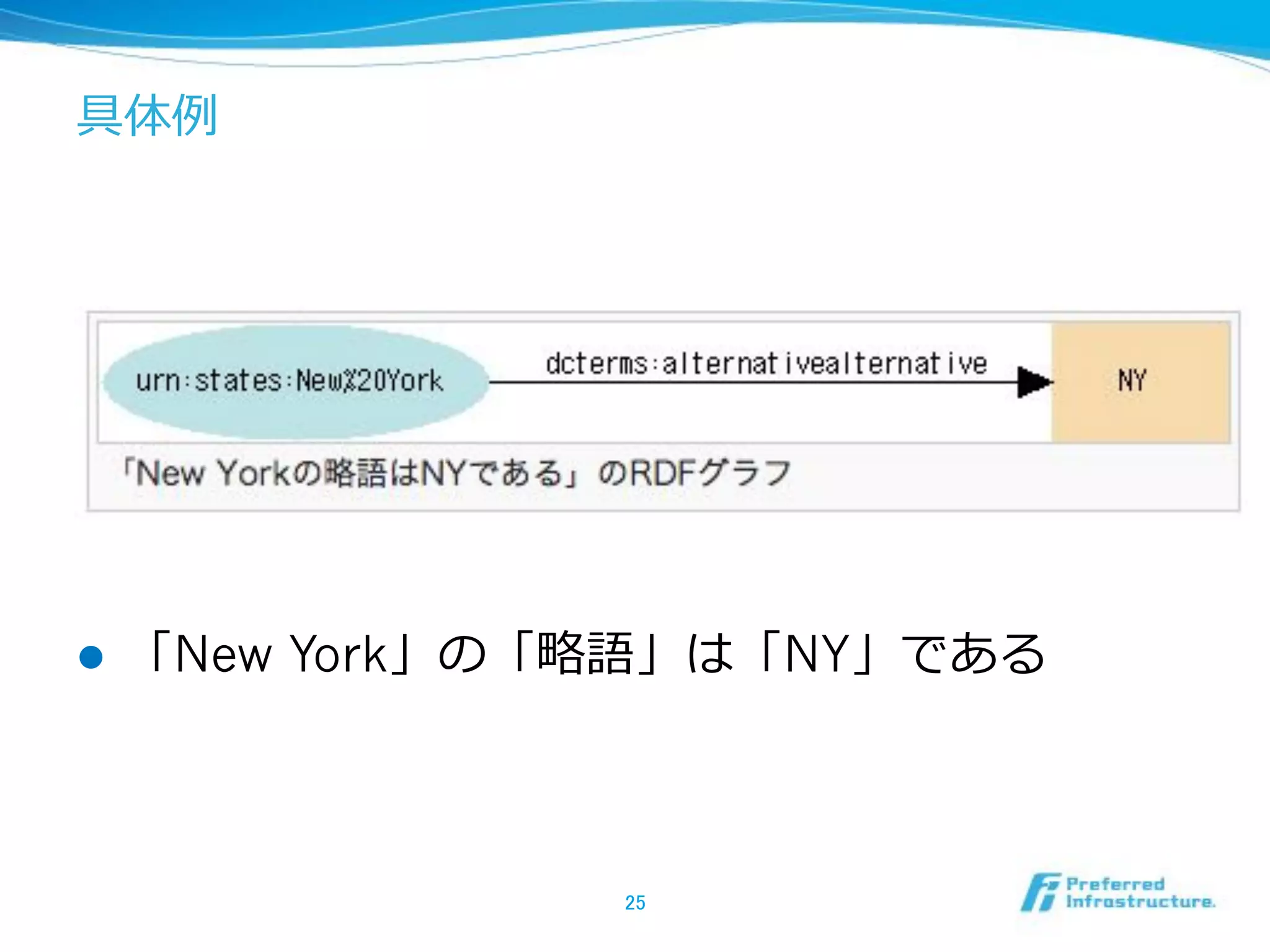

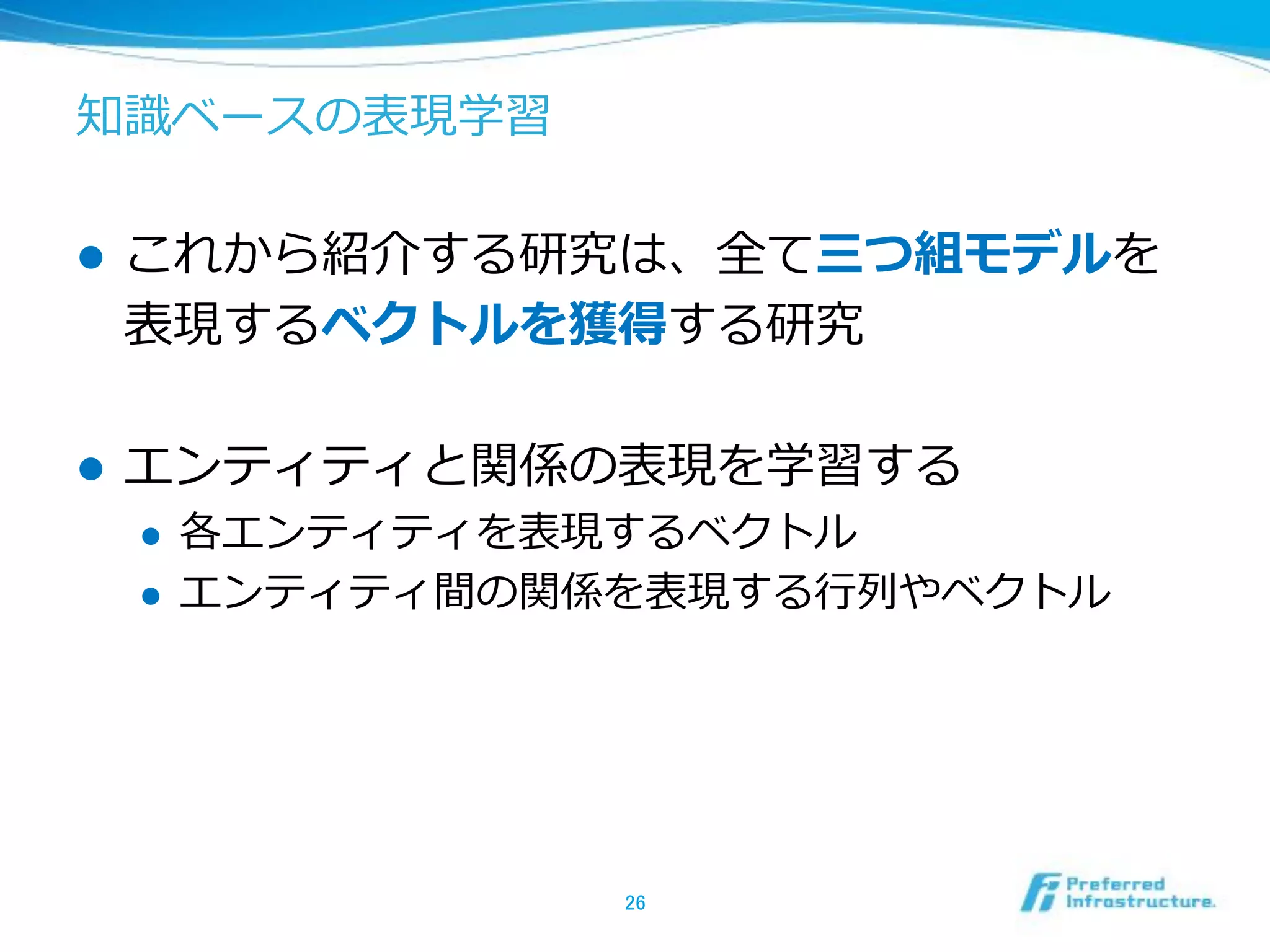

1. The document discusses knowledge representation and deep learning techniques for knowledge graphs. These include translation-based embedding models such as TransE and TransH, and neural models such as the Neural Tensor Network.

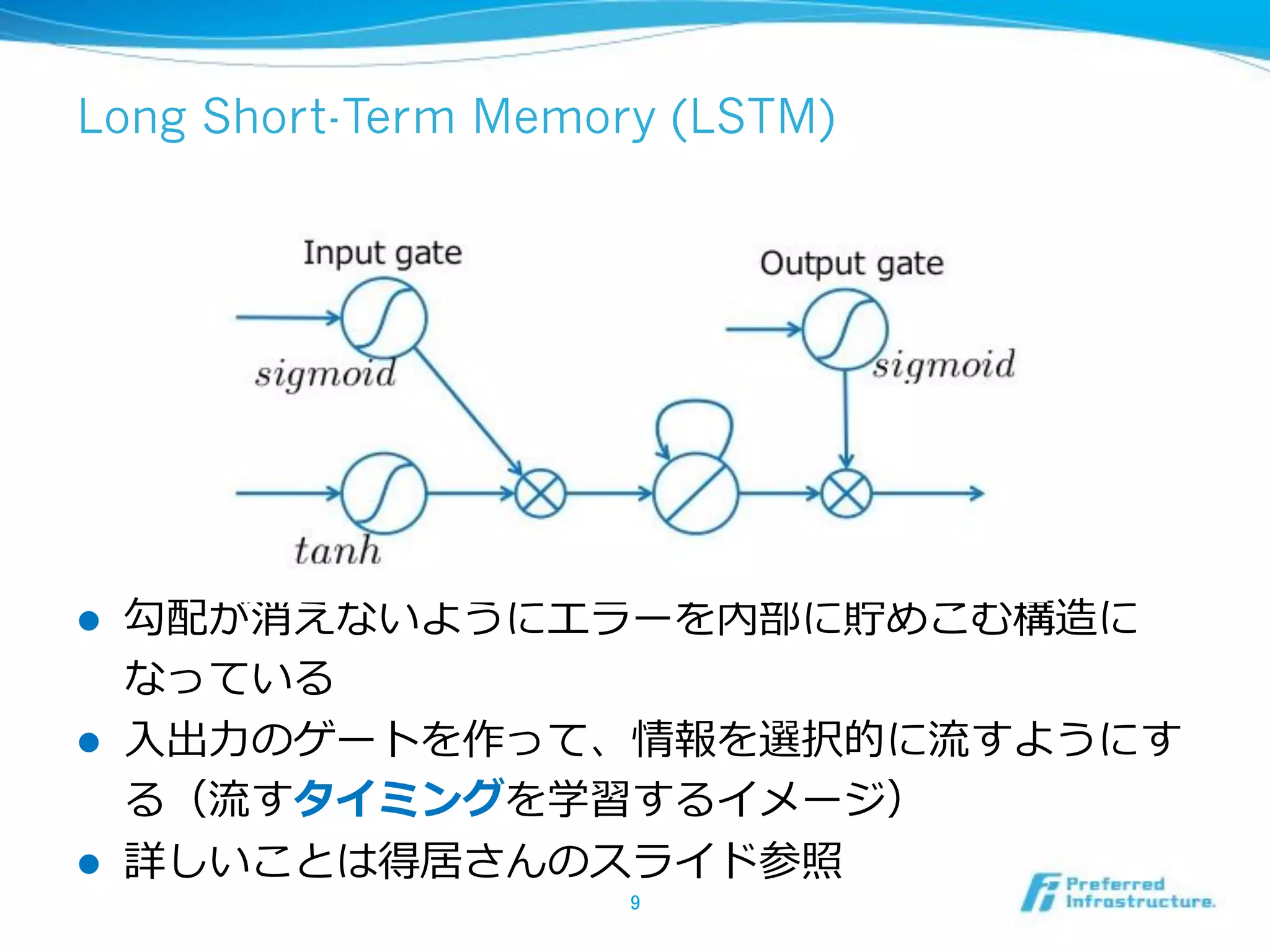

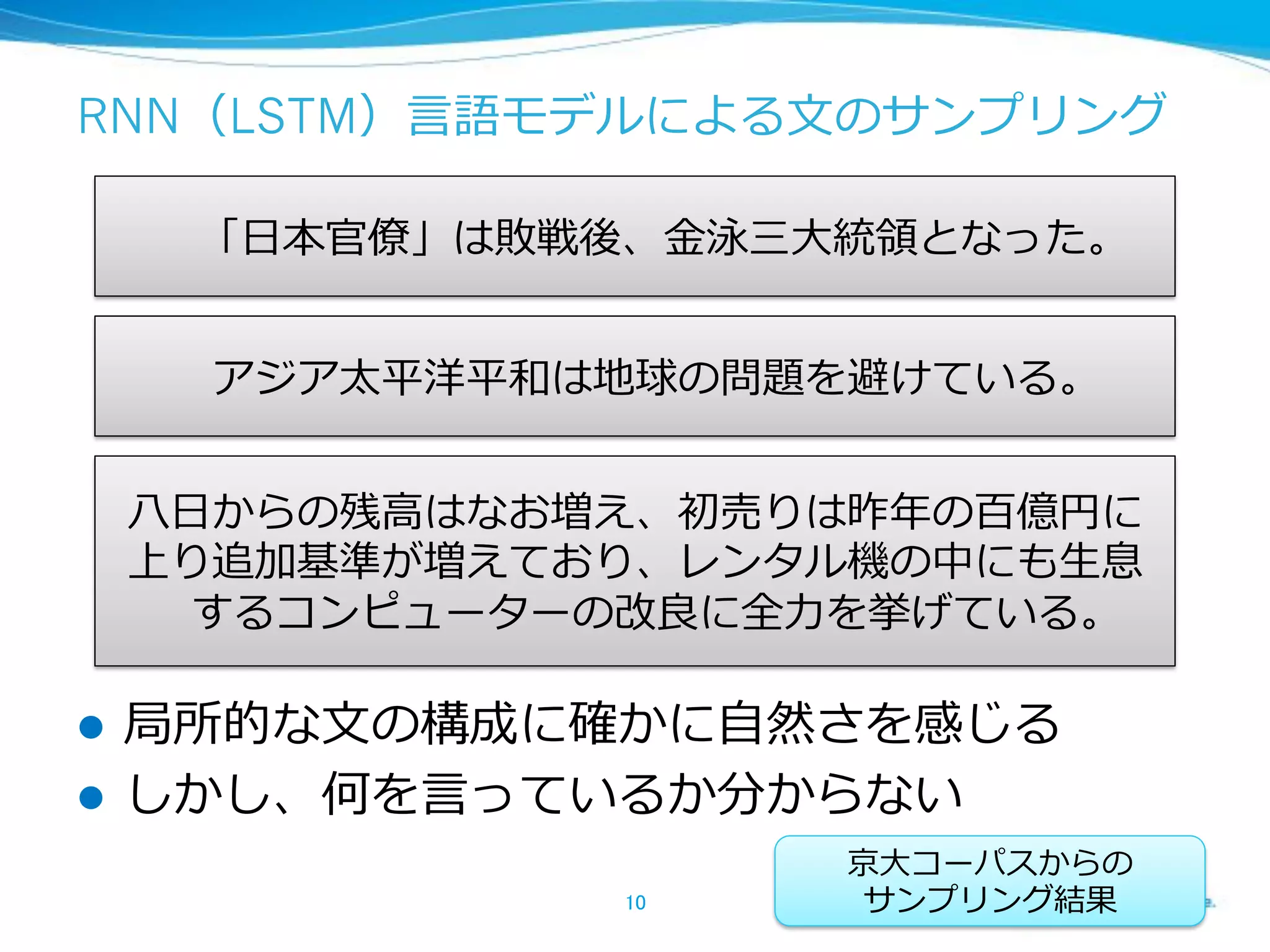

2. It surveys methods for link prediction, question answering, and language modeling, including recurrent neural networks and memory networks.

3. The document references several papers on knowledge graph embedding models and their applications to natural language processing tasks.

![Recurrent Neural Network Language Model (RNNLM) [Mikolov+10]: RNN, LSTM](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-8-2048.jpg)
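The sketch below shows the Elman-style recurrence behind the RNNLM of [Mikolov+10]. It is a minimal sketch, not the authors' code, and it rests on these assumptions:

- the input word is one-hot;
- the hidden layer uses a sigmoid and the output layer a softmax;
- the weight names `U`, `W`, `V` and the toy sizes are illustrative;
- training (backpropagation through time) is omitted.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(zs):
    # Subtract the max for numerical stability.
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(M, v):
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def rnnlm_step(word_id, s_prev, U, W, V):
    """One time step: s(t) = sigmoid(U w(t) + W s(t-1)), y(t) = softmax(V s(t))."""
    # With a one-hot w(t), U w(t) is simply column `word_id` of U.
    u_col = [row[word_id] for row in U]
    rec = matvec(W, s_prev)
    s = [sigmoid(a + b) for a, b in zip(u_col, rec)]
    y = softmax(matvec(V, s))  # distribution over the next word
    return s, y

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
vocab_size, hidden = 5, 3  # toy sizes (assumption)
U = random_matrix(hidden, vocab_size, rng)
W = random_matrix(hidden, hidden, rng)
V = random_matrix(vocab_size, hidden, rng)

s = [0.0] * hidden
for w in [0, 3, 1]:  # a toy sentence of word ids
    s, y = rnnlm_step(w, s, U, W, V)
print([round(p, 3) for p in y])
```

The slide also lists LSTM, which replaces the plain sigmoid update with gated memory cells. That variant is not shown here.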

![[ 15]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-13-2048.jpg)

![[ 15] PyData Tokyo](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-14-2048.jpg)

![EMNLP 2014 tutorial by Bordes and Weston, part 2 [Bordes&Weston14]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-18-2048.jpg)

![[ 15]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-19-2048.jpg)

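![Distance model (Structured Embedding) [Bordes+11]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-30-2048.jpg)

Entities are embedded as vectors $e$, and each relation $r$ has two projection matrices $R_{\mathrm{left}}(r)$ and $R_{\mathrm{right}}(r)$. A triple is scored by

$$f(x, r, y) = \lVert R_{\mathrm{left}}(r)\, e(x) - R_{\mathrm{right}}(r)\, e(y) \rVert_1$$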
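The Structured Embedding score, f(x, r, y) = ||R_left(r) e(x) − R_right(r) e(y)||_1, can be sketched in plain Python. This minimal sketch assumes:

- entities are short lists of floats;
- each relation owns a pair of square matrices;
- the entity names, the relation `capital_of` and all values are hypothetical toy data.

A lower score means a more plausible triple.

```python
def matvec(M, v):
    return [sum(a * b for a, b in zip(row, v)) for row in M]

def l1_norm(v):
    return sum(abs(x) for x in v)

def se_score(e_x, e_y, R_left, R_right):
    """f(x, r, y) = || R_left(r) e(x) - R_right(r) e(y) ||_1 (lower = more plausible)."""
    left = matvec(R_left, e_x)
    right = matvec(R_right, e_y)
    return l1_norm([a - b for a, b in zip(left, right)])

# Hypothetical 2-d embeddings and a relation "capital_of"
# modeled by the matrix pair (identity, swap).
identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]
e = {"tokyo": [1.0, 0.0], "japan": [0.0, 1.0], "paris": [0.5, 0.5]}

good = se_score(e["tokyo"], e["japan"], identity, swap)
bad = se_score(e["paris"], e["japan"], identity, swap)
print(good, bad)  # 0.0 1.0
```

Because each side of the relation gets its own matrix, SE can map heads and tails into different subspaces. The cost is 2d² parameters per relation.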

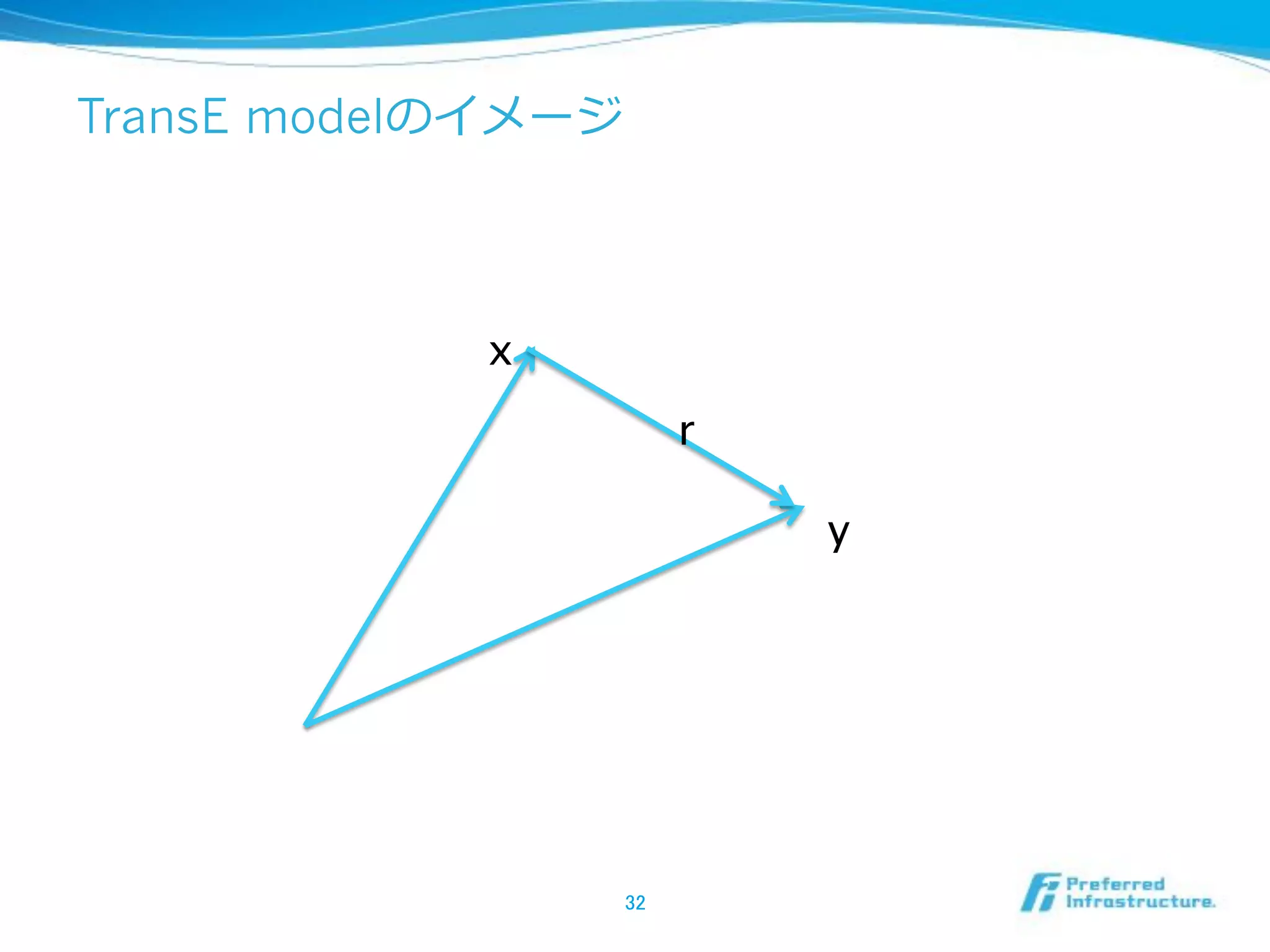

![TransE model [Bordes+13]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-31-2048.jpg)

Each relation $r$ is represented as a translation vector $r$ in the entity embedding space:

$$f(x, r, y) = \lVert e(x) + r - e(y) \rVert_2^2$$
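Here is a minimal sketch of the TransE score with the squared L2 distance shown on the slide. It also includes the margin-based ranking loss that [Bordes+13] minimize over true and corrupted triples. Assumptions:

- the margin value, the corrupted triple and the toy vectors are illustrative;
- the embedding normalization and SGD loop used in the paper are omitted.

```python
def transe_score(h, r, t):
    """f(x, r, y) = || e(x) + r - e(y) ||_2^2 (lower = more plausible)."""
    return sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t))

def margin_loss(pos, neg, margin=1.0):
    """Hinge loss: push a true triple's score below a corrupted one's by `margin`."""
    return max(0.0, margin + pos - neg)

# Hypothetical embeddings: "capital_of" translates tokyo onto japan.
e = {"tokyo": [1.0, 0.0], "japan": [1.0, 1.0], "paris": [2.0, 0.0]}
capital_of = [0.0, 1.0]

pos = transe_score(e["tokyo"], capital_of, e["japan"])  # true triple
neg = transe_score(e["paris"], capital_of, e["japan"])  # corrupted head
print(pos, neg, margin_loss(pos, neg))  # 0.0 1.0 0.0
```

The loss is already zero here because the corrupted triple scores exactly one margin worse than the true one.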

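![TransM model [Fan+14]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-34-2048.jpg)

TransM adds to TransE a relation-specific weight $w_r$, which depends on how many entities $x$ and $y$ the relation $r$ connects:

$$f(x, r, y) = w_r \lVert e(x) + r - e(y) \rVert_2^2$$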
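The TransM score rescales the TransE distance by a per-relation weight w_r. This sketch assumes w_r is derived from the relation's mapping properties as 1 / log(hpt + tph), where:

- hpt is the average number of heads (x) per tail (y);
- tph is the average number of tails per head.

Treat this exact formula as an assumption to check against [Fan+14]. The function name `mapping_weight` and the toy triples are hypothetical.

```python
import math
from collections import defaultdict

def mapping_weight(triples, relation):
    """Hypothetical w_r = 1 / log(hpt + tph) computed from training triples (x, r, y)."""
    heads_per_tail = defaultdict(set)
    tails_per_head = defaultdict(set)
    for x, r, y in triples:
        if r == relation:
            heads_per_tail[y].add(x)
            tails_per_head[x].add(y)
    hpt = sum(len(s) for s in heads_per_tail.values()) / len(heads_per_tail)
    tph = sum(len(s) for s in tails_per_head.values()) / len(tails_per_head)
    return 1.0 / math.log(hpt + tph)  # hpt + tph >= 2, so log is positive

def transm_score(h, r, t, w_r):
    """f(x, r, y) = w_r * || e(x) + r - e(y) ||_2^2 (lower = more plausible)."""
    return w_r * sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t))

triples = [
    ("tokyo", "capital_of", "japan"),
    ("paris", "capital_of", "france"),
    ("alice", "lives_in", "japan"),
    ("bob", "lives_in", "japan"),
    ("carol", "lives_in", "france"),
]
w_capital = mapping_weight(triples, "capital_of")  # one-to-one relation
w_lives = mapping_weight(triples, "lives_in")      # many-to-one relation
print(round(w_capital, 3), round(w_lives, 3))
```

Under this assumed weighting, a many-to-one relation such as `lives_in` receives a smaller weight. Its distance errors are therefore penalized less than those of a one-to-one relation.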

![TransH model [Wang+14]: an extension of TransE](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-35-2048.jpg)
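TransH [Wang+14] extends TransE in two steps. First, it projects entities onto a relation-specific hyperplane with unit normal vector w_r. Then it translates by a vector d_r. The score becomes ||(e(x) − (w_r·e(x)) w_r) + d_r − (e(y) − (w_r·e(y)) w_r)||²₂.

This minimal sketch uses toy vectors chosen for illustration only.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project(v, w):
    """Project v onto the hyperplane with unit normal w: v - (w . v) w."""
    s = dot(w, v)
    return [vi - s * wi for vi, wi in zip(v, w)]

def transh_score(h, t, w_r, d_r):
    """|| h_perp + d_r - t_perp ||_2^2, with w_r normalized to unit length."""
    norm = math.sqrt(dot(w_r, w_r))
    w = [wi / norm for wi in w_r]
    h_p, t_p = project(h, w), project(t, w)
    return sum((a + b - c) ** 2 for a, b, c in zip(h_p, d_r, t_p))

# Two heads that differ only along the normal w_r project to the same
# point, so both can score 0 against the same tail.
w_r = [0.0, 0.0, 1.0]
d_r = [1.0, 0.0, 0.0]
tail = [1.0, 1.0, 5.0]
print(transh_score([0.0, 1.0, 0.0], tail, w_r, d_r),
      transh_score([0.0, 1.0, 3.0], tail, w_r, d_r))  # 0.0 0.0
```

This is the property Wang+14 use to handle one-to-many and many-to-one relations. Distinct entities can coincide after projection while keeping different full embeddings, which plain TransE cannot do without collapsing them.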

![Neural Tensor Network (NTN) [Socher+13]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-37-2048.jpg)

$$f(x, r, y) = u_r^\top \tanh\left(e(x)^\top W_r\, e(y) + V^1_r\, e(x) + V^2_r\, e(y) + b_r\right)$$
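This is a minimal sketch of the NTN score as written on the slide, with tanh applied element-wise. It assumes k tensor slices with these shapes:

- W_r is a k×d×d tensor;
- V¹_r and V²_r are k×d matrices;
- b_r and u_r are length-k vectors.

Sizes and random values are toy assumptions, and the contrastive training objective is omitted.

```python
import math
import random

def bilinear(a, M, b):
    """a^T M b for vectors a, b and a square matrix M."""
    return sum(a[i] * M[i][j] * b[j] for i in range(len(a)) for j in range(len(b)))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def ntn_score(e_x, e_y, W, V1, V2, b, u):
    """f = u_r^T tanh(e(x)^T W_r^[1:k] e(y) + V1_r e(x) + V2_r e(y) + b_r)."""
    hidden = [
        math.tanh(bilinear(e_x, W[s], e_y) + dot(V1[s], e_x) + dot(V2[s], e_y) + b[s])
        for s in range(len(u))
    ]
    return dot(u, hidden)  # higher = more plausible

rng = random.Random(0)
d, k = 4, 2  # toy sizes (assumption)

def rand_vec(n):
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]

W = [[rand_vec(d) for _ in range(d)] for _ in range(k)]
V1 = [rand_vec(d) for _ in range(k)]
V2 = [rand_vec(d) for _ in range(k)]
b = rand_vec(k)
u = rand_vec(k)
e_x, e_y = rand_vec(d), rand_vec(d)
score = ntn_score(e_x, e_y, W, V1, V2, b, u)
print(score)
```

The bilinear tensor term lets each slice model a different multiplicative interaction between e(x) and e(y). The V terms play the role of a standard linear layer over the two entities.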

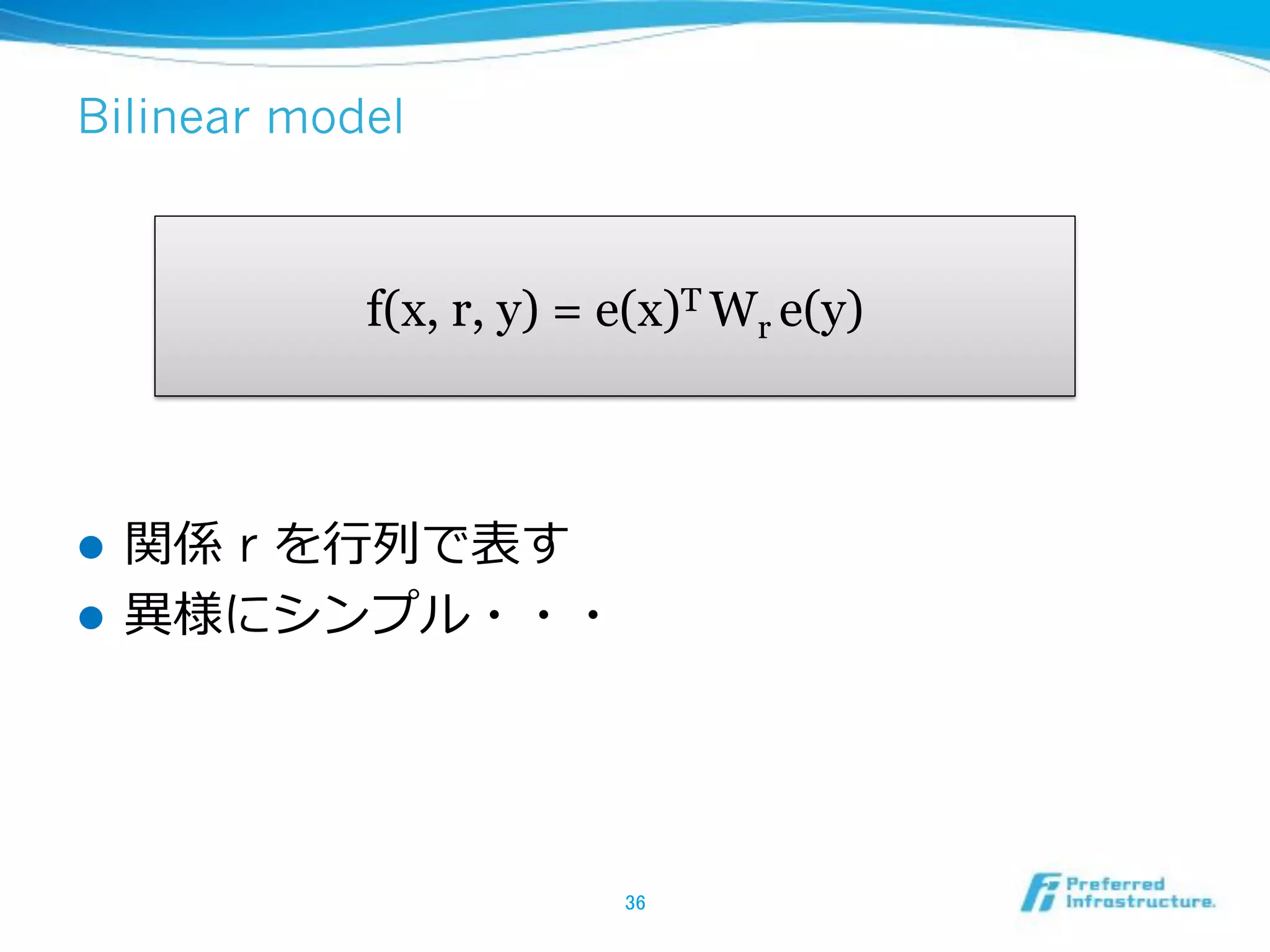

![Bilinear model [Yang+15]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-38-2048.jpg)
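In the bilinear formulation discussed in [Yang+15], a triple is scored as e(x)ᵀ M_r e(y). Restricting M_r to a diagonal matrix gives the cheaper variant usually called DistMult.

This minimal sketch compares the two forms; the vectors are toy assumptions.

```python
def bilinear_score(e_x, M_r, e_y):
    """e(x)^T M_r e(y) with a full d x d relation matrix (higher = more plausible)."""
    return sum(e_x[i] * M_r[i][j] * e_y[j]
               for i in range(len(e_x)) for j in range(len(e_y)))

def diagonal_score(e_x, r_diag, e_y):
    """Diagonal M_r = diag(r): sum_i e(x)_i * r_i * e(y)_i."""
    return sum(a * r * b for a, r, b in zip(e_x, r_diag, e_y))

e_x, e_y = [1.0, 2.0], [3.0, -1.0]
r_diag = [0.5, 2.0]
M_r = [[0.5, 0.0], [0.0, 2.0]]
print(bilinear_score(e_x, M_r, e_y), diagonal_score(e_x, r_diag, e_y))  # -2.5 -2.5
# The diagonal score is symmetric: swapping x and y gives the same value.
print(diagonal_score(e_y, r_diag, e_x))  # -2.5
```

The diagonal form needs only d parameters per relation instead of d². Because it is symmetric in x and y, however, it cannot distinguish (x, r, y) from (y, r, x). A full, non-symmetric M_r can.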

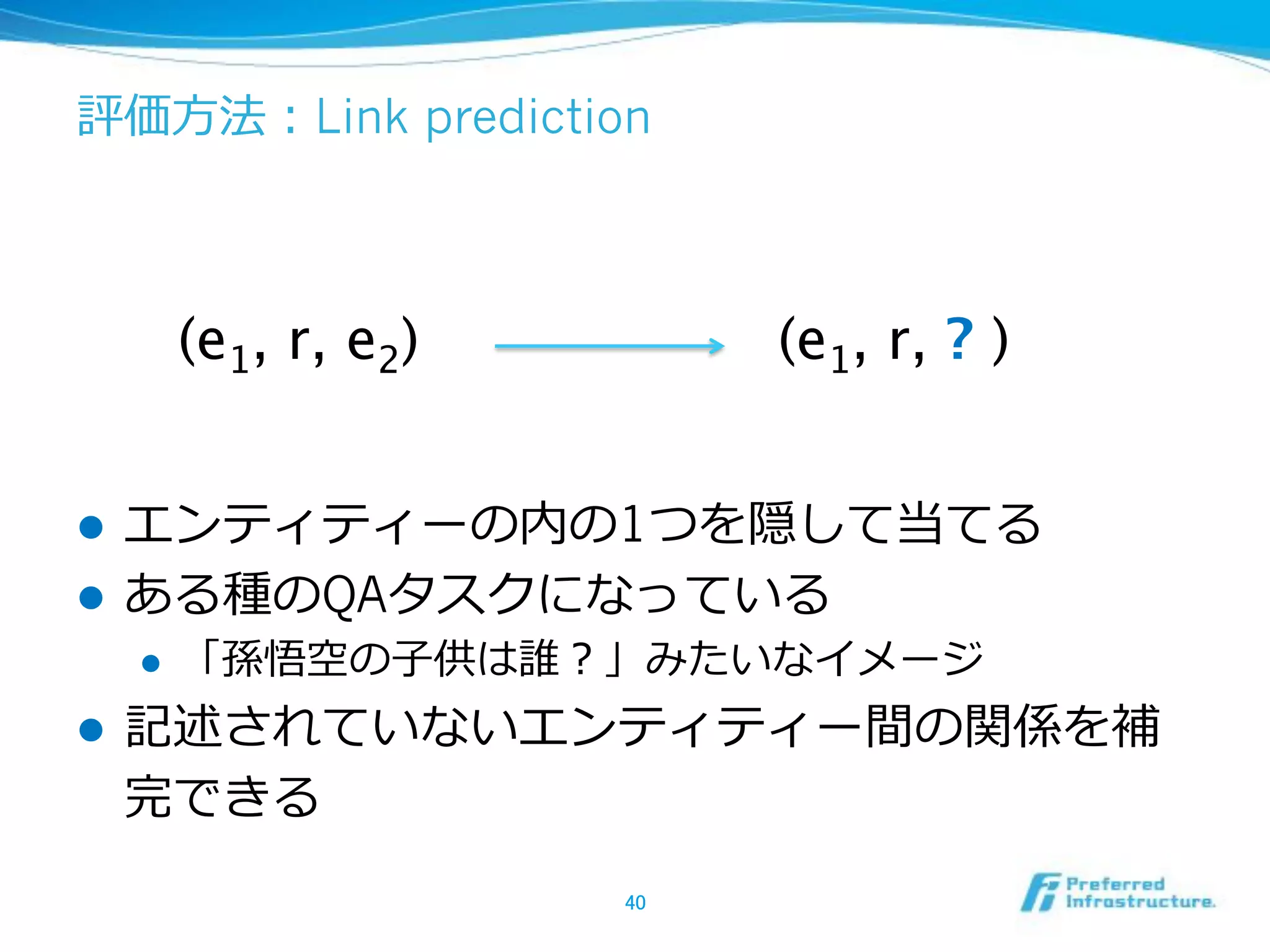

![Q: … A: [Nickel+11]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-39-2048.jpg)

![TransH and TransE [Bordes&Weston14]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-41-2048.jpg)

![[Weston+13]: x, y, r [Bordes&Weston14]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-43-2048.jpg)

![[Weston+13] [Bordes&Weston14]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-44-2048.jpg)

![[Bordes&Weston14]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-45-2048.jpg)

![QA [Bordes+14]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-47-2048.jpg)

A question $q$ and a candidate answer $t$ are embedded as $f(q)$ and $g(t)$, and the pair is scored by

$$f(q)^\top g(t)$$
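The scoring idea can be sketched as follows. As a simplification, this sketch assumes:

- f(q) is the sum of word vectors of the question;
- g(t) is the sum of vectors of the candidate triple's entities and relation;
- the answer is the candidate that maximizes the dot product f(q)ᵀ g(t).

The vocabularies, vectors and candidate triples are hypothetical and hand-set here. In [Bordes+14] the embeddings are learned from weakly supervised data.

```python
DIM = 3

def add(vectors, dim=DIM):
    out = [0.0] * dim
    for v in vectors:
        out = [a + b for a, b in zip(out, v)]
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical, hand-set embeddings (learned jointly in practice).
word_vec = {"capital": [1.0, 0.0, 0.0], "japan": [0.0, 1.0, 0.0]}
kb_vec = {
    "capital_of": [1.0, 0.0, 0.0], "japan": [0.0, 1.0, 0.0],
    "france": [0.0, 0.0, 1.0], "tokyo": [0.0, 0.0, 0.0], "paris": [0.0, 0.0, 0.0],
}

def f(question):
    """Bag-of-words question embedding; unknown words contribute zero."""
    return add([word_vec.get(w, [0.0] * DIM) for w in question.lower().split()])

def g(triple):
    """Bag-of-symbols embedding of a knowledge-base triple."""
    return add([kb_vec[item] for item in triple])

candidates = [("tokyo", "capital_of", "japan"), ("paris", "capital_of", "france")]
q = "What is the capital of Japan"
best = max(candidates, key=lambda t: dot(f(q), g(t)))
print(best[0])  # tokyo
```

Scoring by a single dot product lets all candidate triples be embedded once and ranked cheaply for each new question.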

![Memory networks [Weston+15]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-48-2048.jpg)

- I (input feature map): $I(x)$
- G (generalization): $m_i = G(m_i, I(x), m)$
- O (output feature map): $o = O(I(x), m)$
- R (response): $r = R(o)$
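This is a toy instance of the four components with hand-written rather than learned parts. All of these concrete choices are simplifying assumptions:

- I produces bag-of-words counts;
- G writes each input into the next free memory slot, the simplest form of G;
- O returns the memory with the largest word overlap with the query;
- R returns the last distinct word of that memory.

[Weston+15] learn the matching functions and can chain several supporting memories. The class name `ToyMemoryNetwork` is hypothetical.

```python
from collections import Counter

class ToyMemoryNetwork:
    """Hypothetical I, G, O, R components with hand-written (not learned) choices."""

    def __init__(self):
        self.memory = []  # m: stored feature representations, one per slot

    def I(self, x):
        """Input feature map: bag-of-words counts of the sentence."""
        return Counter(x.lower().split())

    def G(self, features):
        """Generalization: store the new input in the next memory slot."""
        self.memory.append(features)

    def O(self, query_features):
        """Output feature map: the stored memory with the largest word overlap."""
        def score(m):
            return sum(query_features[w] * m[w] for w in query_features)
        return max(self.memory, key=score)

    def R(self, o):
        """Response: the last distinct word of the supporting memory (toy rule)."""
        return list(o)[-1]  # Counter keeps first-insertion order

net = ToyMemoryNetwork()
for sentence in ["John went to the kitchen", "Mary went to the garden"]:
    net.G(net.I(sentence))
answer = net.R(net.O(net.I("Where is John")))
print(answer)  # kitchen
```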

![RNN with external memory [Peng&Yao15]](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-53-2048.jpg)

![References (1/4)](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-56-2048.jpg)

- [Mikolov+10] T. Mikolov, M. Karafiat, L. Burget, J. H. Cernocky, S. Khudanpur. Recurrent neural network based language model. Interspeech 2010.
- [ 15] 2015.
- [ 15] NLP Introduction based on Project Next NLP. PyData.Tokyo Meetup #5, 2015.
- [Bordes&Weston14] A. Bordes, J. Weston. Embedding Methods for Natural Language Processing. EMNLP 2014 tutorial.
- [ 15] JSAI 2015.

![References (2/4)](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-57-2048.jpg)

- [Bordes+11] A. Bordes, J. Weston, R. Collobert, Y. Bengio. Learning structured embeddings of knowledge bases. AAAI 2011.
- [Bordes+13] A. Bordes, N. Usunier, A. Garcia-Duran, J. Weston, O. Yakhnenko. Translating Embeddings for Modeling Multi-relational Data. NIPS 2013.
- [Fan+14] M. Fan, Q. Zhou, E. Chang, T. F. Zheng. Transition-based Knowledge Graph Embedding with Relational Mapping Properties. PACLIC 2014.
- [Wang+14] Z. Wang, J. Zhang, J. Feng, Z. Chen. Knowledge Graph Embedding by Translating on Hyperplanes. AAAI 2014.

![References (3/4)](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-58-2048.jpg)

- [Socher+13] R. Socher, D. Chen, C. D. Manning, A. Y. Ng. Reasoning With Neural Tensor Networks for Knowledge Base Completion. NIPS 2013.
- [Yang+15] B. Yang, W. Yih, X. He, J. Gao, L. Deng. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. ICLR 2015.
- [Nickel+11] M. Nickel, V. Tresp, H. P. Kriegel. A Three-Way Model for Collective Learning on Multi-Relational Data. ICML 2011.

![References (4/4)](https://image.slidesharecdn.com/20150604dlforkb-150604063255-lva1-app6892/75/Deep-Learning-59-2048.jpg)

- [Bordes+14] A. Bordes, J. Weston, N. Usunier. Open Question Answering with Weakly Supervised Embedding Models. ECML/PKDD 2014.
- [Weston+15] J. Weston, S. Chopra, A. Bordes. Memory Networks. ICLR 2015.
- [Peng&Yao15] B. Peng, K. Yao. Recurrent Neural Networks with External Memory for Language Understanding. arXiv:1506.00195, 2015.