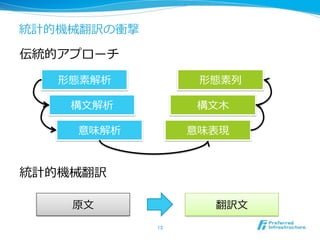

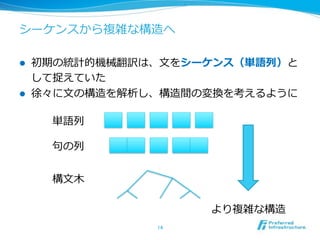

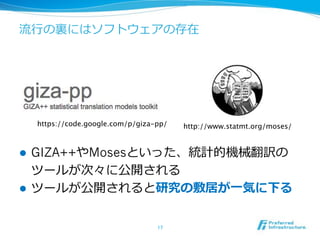

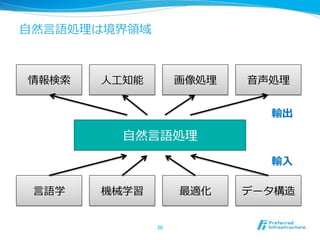

This document provides an overview of preferred natural language processing infrastructure and techniques. It discusses recurrent neural networks, statistical machine translation tools such as GIZA++ and Moses, speech recognition systems from NICT and NTT, topic modeling with latent Dirichlet allocation, dependency parsing with maximum spanning trees, multi-document summarization, and recursive neural networks for natural language tasks. References are provided for the papers behind these methods.

![Slide 9 [ 12]](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-9-320.jpg)

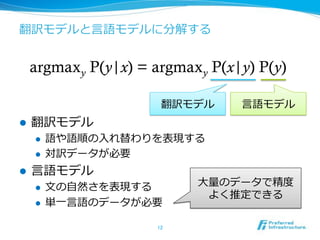

![[Brown

+93]

! x

y

! x y

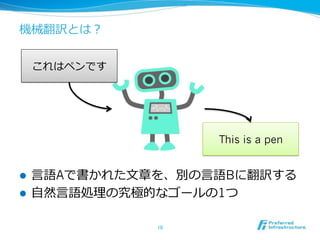

y=This is a penx=

argmaxy P(y|x)](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-11-320.jpg)
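The decision rule $\hat{y} = \arg\max_y P(y \mid x)$ from [Brown+93] is usually rewritten with Bayes' rule as $\hat{y} = \arg\max_y P(x \mid y)\,P(y)$. This works because $P(x)$ is the same for every candidate $y$. The result splits the problem into a translation model $P(x \mid y)$ and a language model $P(y)$. The sketch below is a minimal illustration under loud assumptions. The probability tables and candidate sentences are hypothetical toy values, and the candidates are enumerated explicitly instead of being produced by a real decoder's search.

```python
import math

# Hypothetical toy models (not from [Brown+93]): in a real system P(y) comes
# from a language model and P(x | y) from a translation model trained on
# parallel text. Here x is one fixed source sentence.
LANGUAGE_MODEL = {        # P(y): how fluent the English candidate is
    "This is a pen": 0.6,
    "This is pen": 0.1,
    "A pen this is": 0.05,
}
TRANSLATION_MODEL = {     # P(x | y): how well y explains the source x
    "This is a pen": 0.3,
    "This is pen": 0.4,
    "A pen this is": 0.3,
}


def decode(candidates):
    """Return argmax_y P(x | y) * P(y), computed in log space."""
    def score(y):
        return math.log(TRANSLATION_MODEL[y]) + math.log(LANGUAGE_MODEL[y])
    return max(candidates, key=score)


best = decode(list(LANGUAGE_MODEL))
print(best)  # This is a pen
```

The translation model alone would prefer "This is pen" (0.4). The language model outweighs it here: 0.3 × 0.6 = 0.18 beats 0.4 × 0.1 = 0.04.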

![Slide 15: sequence to sequence learning with recurrent neural networks (Long Short-Term Memory) [Sutskever+14]](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-15-320.jpg)
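In sequence to sequence learning [Sutskever+14], one Long Short-Term Memory (LSTM) network reads the source sentence into a fixed-size state, and a second LSTM generates the target sentence from that state. The sketch below shows only the encoder half: one LSTM cell step and a loop that folds a sequence into a final $(h, c)$ state. It uses the common gate formulation (input, forget and output gates plus a candidate) without peephole connections; the exact variant in [Sutskever+14] may differ. The weights are random and untrained, and the one-hot inputs are hypothetical stand-ins for word embeddings.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class LSTMCell:
    """Toy LSTM cell with random (untrained) weights."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.H = hidden_size
        n_in = input_size + hidden_size  # every gate sees [x_t; h_{t-1}]
        # One weight matrix and bias per gate: i (input), f (forget),
        # o (output), g (candidate cell update).
        self.W = {k: [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                      for _ in range(hidden_size)] for k in "ifog"}
        self.b = {k: [0.0] * hidden_size for k in "ifog"}

    def step(self, x, h, c):
        z = list(x) + list(h)
        pre = {k: [a + b for a, b in zip(matvec(self.W[k], z), self.b[k])]
               for k in "ifog"}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        # New cell state keeps part of the old state and adds gated candidates.
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new


def encode(cell, inputs):
    """Run the cell over a sequence; the final (h, c) summarizes the input."""
    h = [0.0] * cell.H
    c = [0.0] * cell.H
    for x in inputs:
        h, c = cell.step(x, h, c)
    return h, c


cell = LSTMCell(input_size=3, hidden_size=4)
# Hypothetical one-hot "word" vectors for a three-token source sentence.
sentence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
h, c = encode(cell, sentence)
```

A full model would initialize a decoder LSTM from this state and add a softmax over the target vocabulary. [Sutskever+14] also report that feeding the source sentence in reverse order helped.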

![Slide 22: Latent Dirichlet Allocation [Blei+03]](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-22-320.jpg)
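Latent Dirichlet Allocation [Blei+03] is defined as a generative process. Each document draws a topic mixture $\theta_d \sim \mathrm{Dir}(\alpha)$. Each word first picks a topic $z \sim \mathrm{Mult}(\theta_d)$ and is then drawn from that topic's word distribution. The sketch below simulates only this generative story. Inference, which [Blei+03] perform with variational methods, is not shown. As an assumption, the topic-word distributions are also drawn from a symmetric Dirichlet prior $\beta$, as in the smoothed variant of the model. The vocabulary and hyperparameter values are hypothetical.

```python
import random


def sample_dirichlet(rng, alpha, k):
    """Draw from a symmetric Dirichlet(alpha) over k outcomes via normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    if total == 0.0:  # guard against underflow for very small alpha
        return [1.0 / k] * k
    return [d / total for d in draws]


def generate_corpus(n_docs, doc_len, vocab, n_topics, alpha=0.1, beta=0.1, seed=0):
    """Return (corpus, phi); each document is a list of (word, topic) pairs."""
    rng = random.Random(seed)
    # Topic-word distributions phi_k ~ Dir(beta), one per topic.
    phi = [sample_dirichlet(rng, beta, len(vocab)) for _ in range(n_topics)]
    corpus = []
    for _ in range(n_docs):
        theta = sample_dirichlet(rng, alpha, n_topics)  # per-document topic mixture
        doc = []
        for _ in range(doc_len):
            z = rng.choices(range(n_topics), weights=theta)[0]  # topic for this token
            w = rng.choices(vocab, weights=phi[z])[0]           # word from topic z
            doc.append((w, z))
        corpus.append(doc)
    return corpus, phi


vocab = ["pen", "book", "cat", "dog", "run", "sit"]  # hypothetical vocabulary
corpus, phi = generate_corpus(n_docs=3, doc_len=8, vocab=vocab, n_topics=2)
```

A small $\alpha$ concentrates each document on a few topics, which is what lets the fitted model recover interpretable topics.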

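![Slide 23: MST Parser [McDonald+05]](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-23-320.jpg)

Dependency parsing as a maximum spanning tree problem [McDonald+05]: the Chu-Liu-Edmonds algorithm finds the best tree in $O(n^3)$ time with a naive implementation, or in $O(n^2)$ with a more efficient one.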
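In the edge-factored model of [McDonald+05], a dependency tree's score is the sum of its arc scores. The best parse is therefore a maximum spanning tree of the directed graph over the words plus an artificial root. The sketch below is a straightforward recursive Chu-Liu-Edmonds under that assumption. It picks the best head for every word, and if those choices form a cycle, it contracts the cycle into a single node, rescores arcs entering it, solves the smaller problem, and expands the result. This naive version runs in $O(n^3)$ time; the faster $O(n^2)$ implementation is not shown. The score matrix in the usage lines is hypothetical.

```python
NEG_INF = float("-inf")


def find_cycle(heads):
    """Return the nodes of one cycle in the head assignment, or None."""
    n = len(heads)
    finished = [False] * n
    for start in range(1, n):
        if finished[start]:
            continue
        path, pos = [], {}
        node = start
        # Follow head links until reaching the root, a finished node, or a repeat.
        while node != -1 and not finished[node] and node not in pos:
            pos[node] = len(path)
            path.append(node)
            node = heads[node]
        if node != -1 and node in pos:
            return path[pos[node]:]
        for v in path:
            finished[v] = True
    return None


def chu_liu_edmonds(scores):
    """scores[h][d] scores arc h -> d; node 0 is the root.
    Returns heads with heads[d] = head of d and heads[0] = -1."""
    n = len(scores)
    heads = [-1] * n
    for d in range(1, n):  # greedy: best incoming arc for each word
        heads[d] = max((h for h in range(n) if h != d), key=lambda h: scores[h][d])
    cycle = find_cycle(heads)
    if cycle is None:
        return heads
    in_cycle = set(cycle)
    outside = [v for v in range(n) if v not in in_cycle]  # root 0 stays at index 0
    idx = {v: i for i, v in enumerate(outside)}
    c = len(outside)  # index of the contracted cycle node
    sub = [[NEG_INF] * (c + 1) for _ in range(c + 1)]
    enter, leave = {}, {}
    for u in outside:
        for w in outside:
            if u != w:
                sub[idx[u]][idx[w]] = scores[u][w]
        # Arc u -> cycle: gain over the cycle arc it would replace.
        v = max(cycle, key=lambda v: scores[u][v] - scores[heads[v]][v])
        sub[idx[u]][c] = scores[u][v] - scores[heads[v]][v]
        enter[u] = v
        # Arc cycle -> u: best cycle node to leave from.
        v = max(cycle, key=lambda v: scores[v][u])
        sub[c][idx[u]] = scores[v][u]
        leave[u] = v
    sub_heads = chu_liu_edmonds(sub)
    result = list(heads)  # cycle nodes keep their cycle arcs by default
    for w in outside[1:]:
        h = sub_heads[idx[w]]
        result[w] = leave[w] if h == c else outside[h]
    u = outside[sub_heads[c]]
    result[enter[u]] = u  # break the cycle where the chosen arc enters
    return result


# Hypothetical arc scores: root 0 plus three words.
scores = [
    [0, 5, 1, 2],
    [0, 0, 11, 4],
    [0, 10, 0, 5],
    [0, 9, 8, 0],
]
print(chu_liu_edmonds(scores))  # [-1, 3, 1, 0]
```

Here the greedy step picks 2 -> 1 and 1 -> 2, which form a cycle. Contracting that cycle yields the tree 0 -> 3 -> 1 -> 2, whose score of 22 is the maximum.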

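![Slide 24: multi-document summarization [McDonald07]](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-24-320.jpg)

Summarization as global inference [McDonald07]: select sentences to maximize relevancy while penalizing redundancy, with a binary {0, 1} variable for each selection decision.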
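A formulation in the spirit of [McDonald07] chooses binary variables $x_i \in \{0, 1\}$ for each sentence. It maximizes $\sum_i x_i \,\mathrm{Rel}(i) - \sum_{i<j} x_i x_j \,\mathrm{Red}(i, j)$ subject to a length budget. [McDonald07] solves such objectives exactly with integer linear programming, where the product $x_i x_j$ is linearized with an extra binary variable. The sketch below swaps in exhaustive search over all $2^n$ selections instead, which is only feasible for a handful of sentences. The relevance, redundancy and length values are hypothetical.

```python
from itertools import combinations, product


def summarize(relevance, redundancy, lengths, budget):
    """Exhaustively pick x in {0,1}^n maximizing
    sum_i x_i*Rel(i) - sum_{i<j} x_i*x_j*Red(i, j), s.t. sum_i x_i*len(i) <= budget."""
    n = len(relevance)
    best_x, best_val = None, float("-inf")
    for x in product((0, 1), repeat=n):
        if sum(xi * li for xi, li in zip(x, lengths)) > budget:
            continue  # violates the length budget
        val = sum(xi * r for xi, r in zip(x, relevance))
        # Redundancy penalty only when both sentences of a pair are selected.
        val -= sum(redundancy[i][j] for i, j in combinations(range(n), 2) if x[i] and x[j])
        if val > best_val:
            best_x, best_val = x, val
    return best_x, best_val


# Hypothetical data: sentences 0 and 1 are near-duplicates.
relevance = [3.0, 2.9, 1.0]
redundancy = [[0.0, 2.5, 0.1],
              [2.5, 0.0, 0.1],
              [0.1, 0.1, 0.0]]
lengths = [10, 10, 10]
x, val = summarize(relevance, redundancy, lengths, budget=20)
print(x)  # (1, 0, 1)
```

Picking the two most relevant sentences (0 and 1) would score only 5.9 - 2.5 = 3.4. The joint objective instead prefers the less redundant pair (0, 2), which scores 3.9.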

![Slide 29: Recursive Neural Network [Socher+11]](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-29-320.jpg)
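A recursive neural network [Socher+11] builds a tree bottom-up. Two child vectors $c_1, c_2$ are combined into a parent $p = \tanh(W [c_1; c_2] + b)$, and a score $s = w_{\mathrm{score}}^\top p$ rates how good the merge is. A greedy parse repeatedly merges the highest-scoring adjacent pair. The sketch below assumes that greedy procedure with random, untrained weights and hypothetical word vectors, so the tree it produces is arbitrary. It only illustrates the mechanics, not a trained parser.

```python
import math
import random


def compose(W, b, c1, c2):
    """Parent vector p = tanh(W [c1; c2] + b)."""
    x = c1 + c2  # list concatenation plays the role of [c1; c2]
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W, b)]


def score(w_score, p):
    return sum(w * pi for w, pi in zip(w_score, p))


def greedy_parse(leaves, W, b, w_score):
    """Merge the best-scoring adjacent pair until one node remains.
    Each node is (vector, tree); a tree is a word or a nested pair."""
    nodes = list(leaves)
    while len(nodes) > 1:
        candidates = []
        for k in range(len(nodes) - 1):
            p = compose(W, b, nodes[k][0], nodes[k + 1][0])
            candidates.append((score(w_score, p), k, p))
        _, k, p = max(candidates, key=lambda t: t[0])
        nodes[k:k + 2] = [(p, (nodes[k][1], nodes[k + 1][1]))]
    return nodes[0]


rng = random.Random(0)
d = 3  # hypothetical vector size
W = [[rng.uniform(-0.5, 0.5) for _ in range(2 * d)] for _ in range(d)]
b = [0.0] * d
w_score = [rng.uniform(-0.5, 0.5) for _ in range(d)]
words = ["the", "cat", "sat"]
leaves = [([rng.uniform(-1, 1) for _ in range(d)], w) for w in words]
vec, tree = greedy_parse(leaves, W, b, w_score)
print(tree)
```

The same $W$ is shared at every node. This weight sharing is what lets one set of parameters compose phrases of any length.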

![Slide 31 [ +13]](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-31-320.jpg)

![References 1/2](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-34-320.jpg)

- [ 12] NLP2012.
- [Brown+93] P. Brown, S. Della Pietra, V. Della Pietra, R. Mercer. The Mathematics of Statistical Machine Translation: Parameter Estimation. Computational Linguistics, 19(2), 1993.
- [Sutskever+14] I. Sutskever, O. Vinyals, Q. V. Le. Sequence to Sequence Learning with Neural Networks. NIPS 2014.

![References 2/2](https://image.slidesharecdn.com/20150316ipsjnlp-150319223235-conversion-gate01/85/slide-35-320.jpg)

- [Blei+03] D. M. Blei, A. Y. Ng, M. I. Jordan. Latent Dirichlet Allocation. JMLR 2003.
- [McDonald+05] R. McDonald, F. Pereira, K. Ribarov, J. Hajič. Non-projective Dependency Parsing using Spanning Tree Algorithms. HLT-EMNLP 2005.
- [McDonald07] R. McDonald. A Study of Global Inference Algorithms in Multi-Document Summarization. Advances in Information Retrieval, 2007.
- [Socher+11] R. Socher, C. Lin, A. Y. Ng, C. D. Manning. Parsing Natural Scenes and Natural Language with Recursive Neural Networks. ICML 2011.
- [ +13] JSAI 2013.