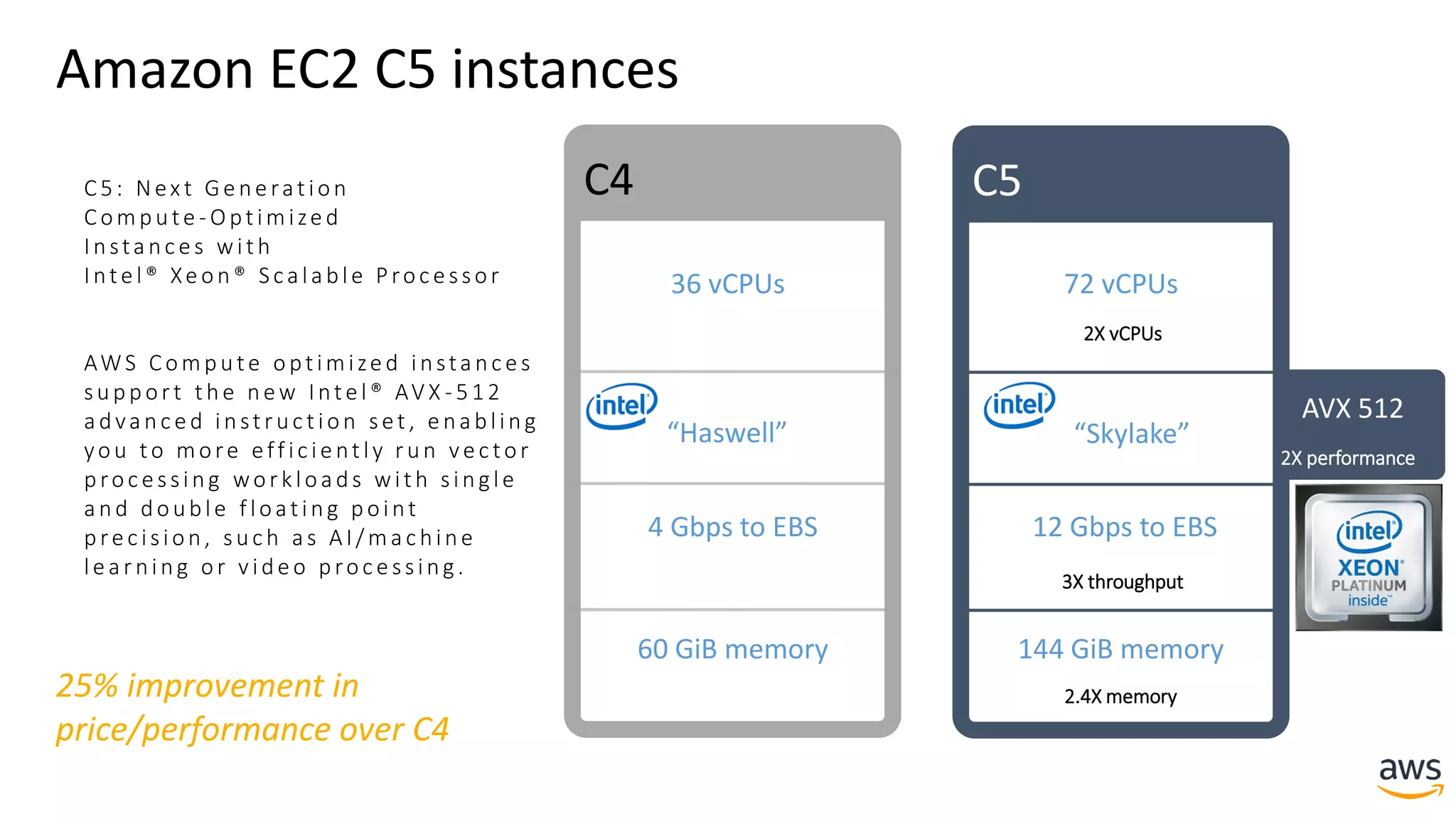

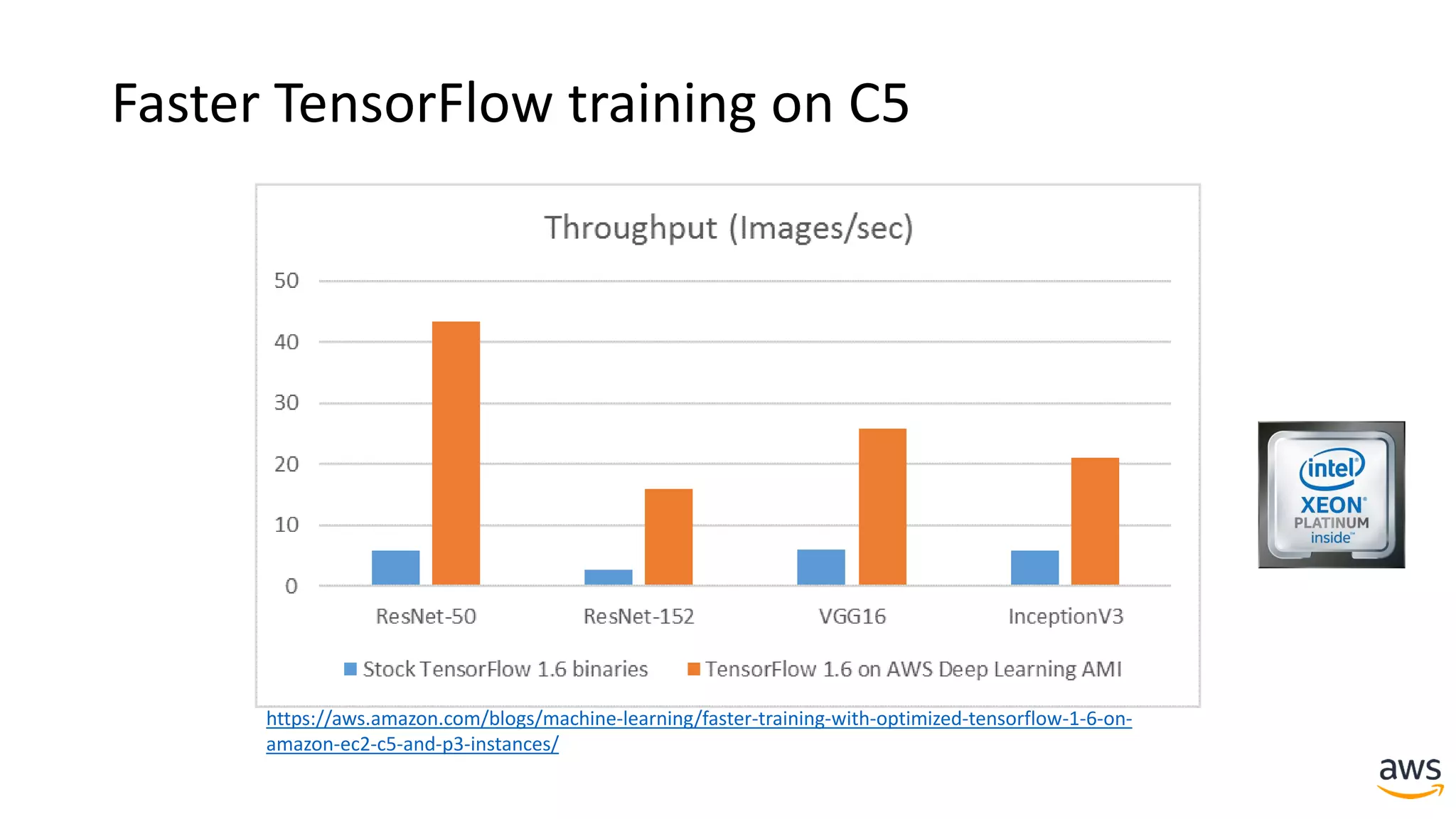

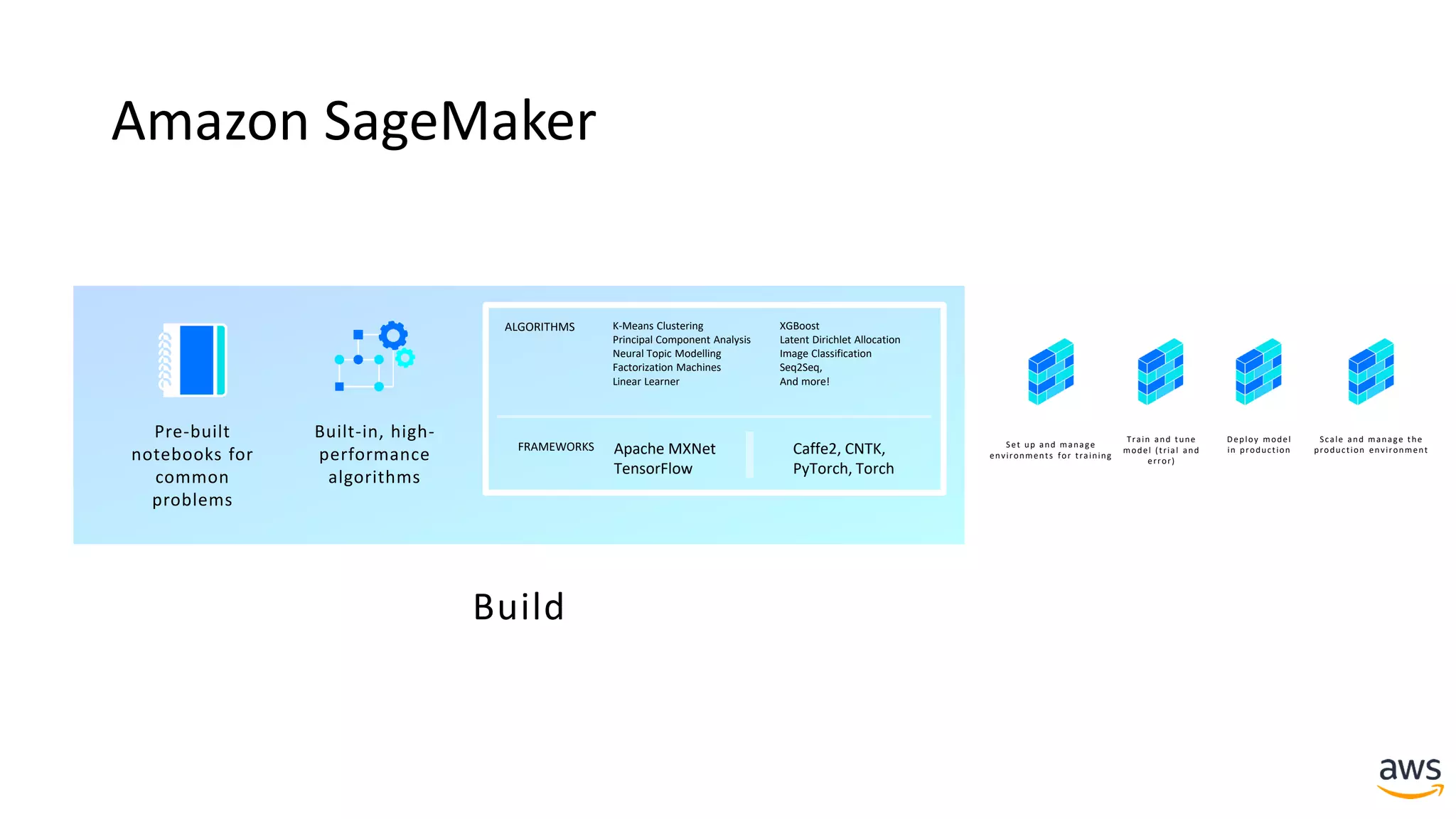

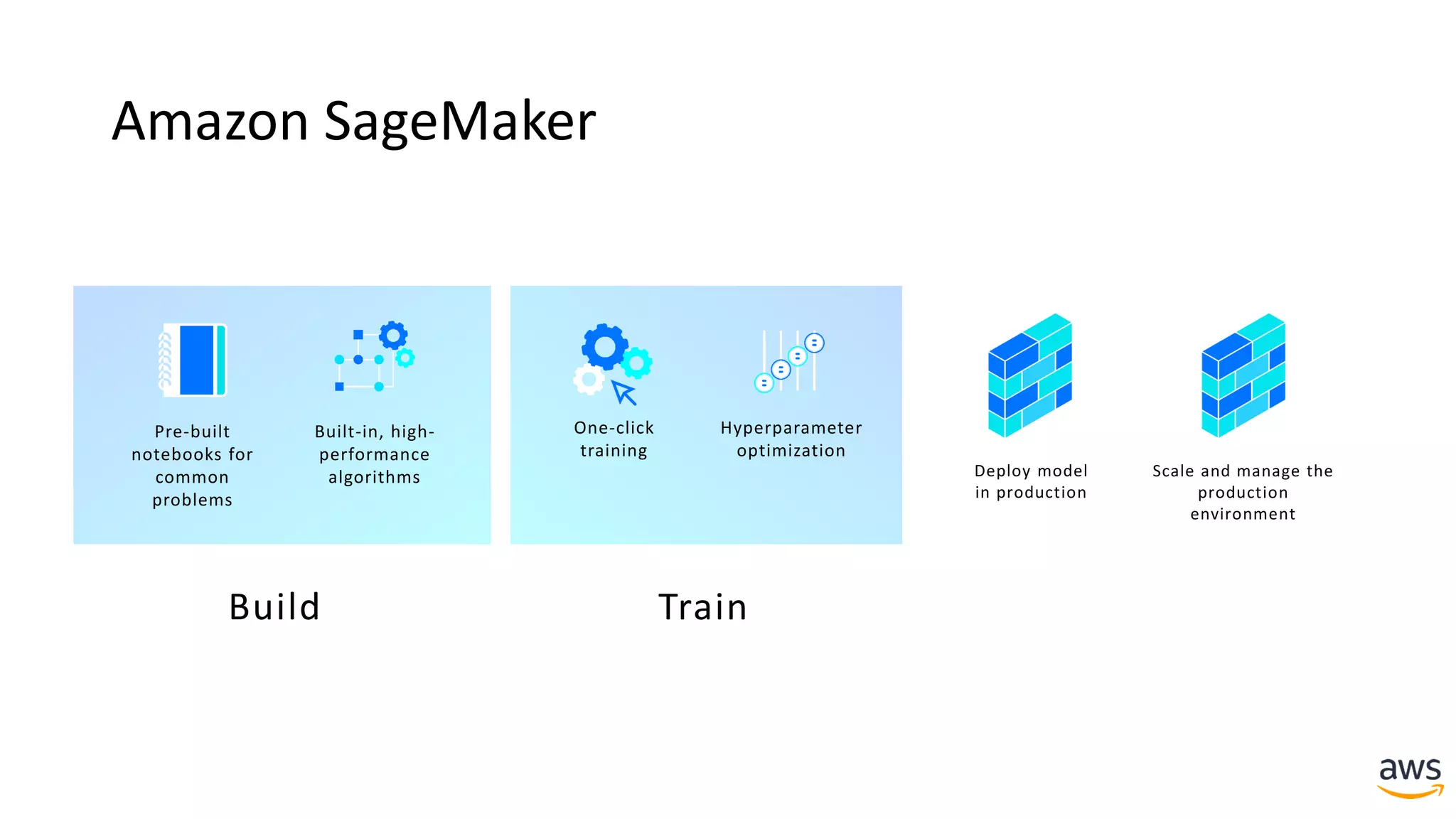

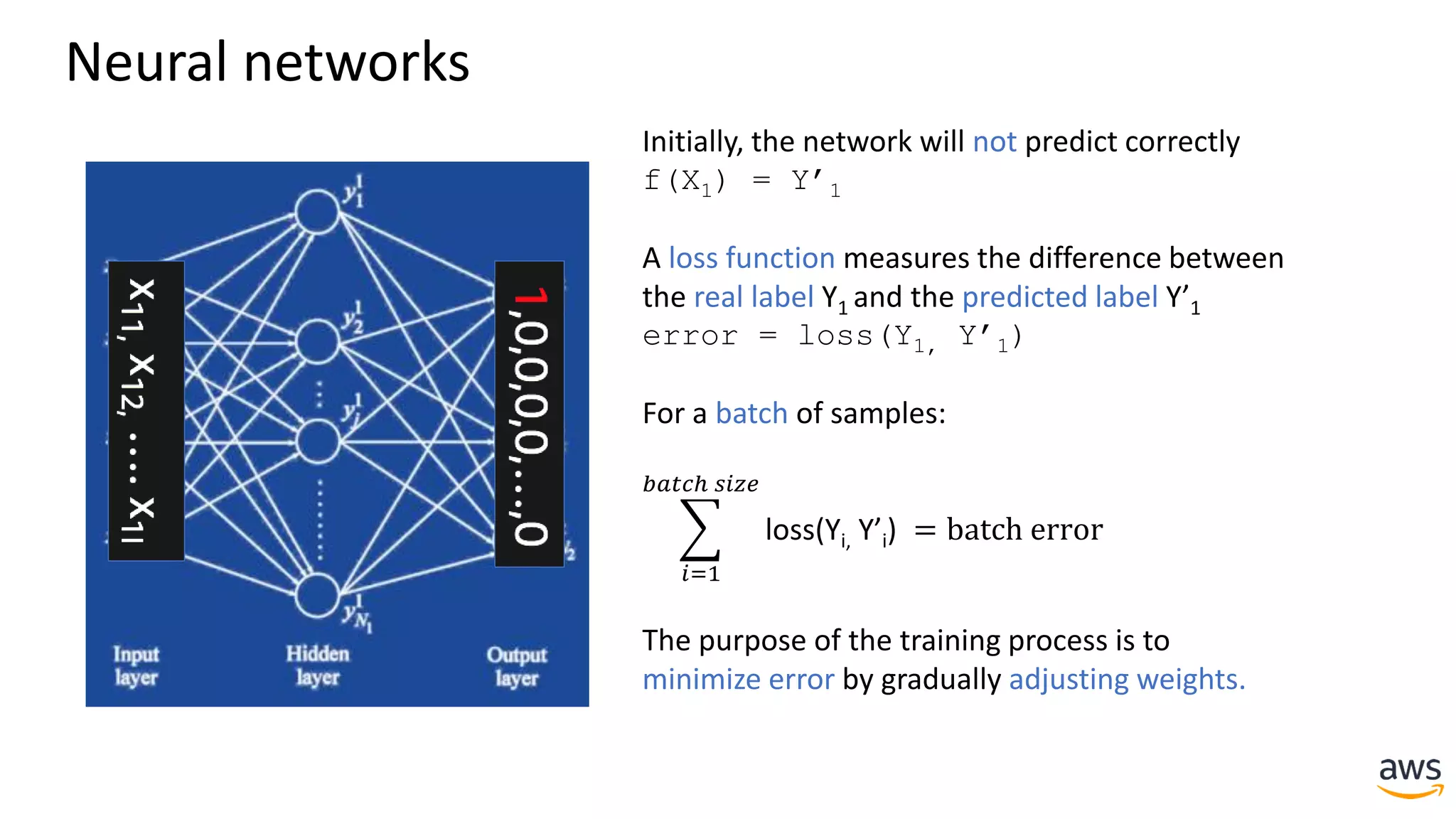

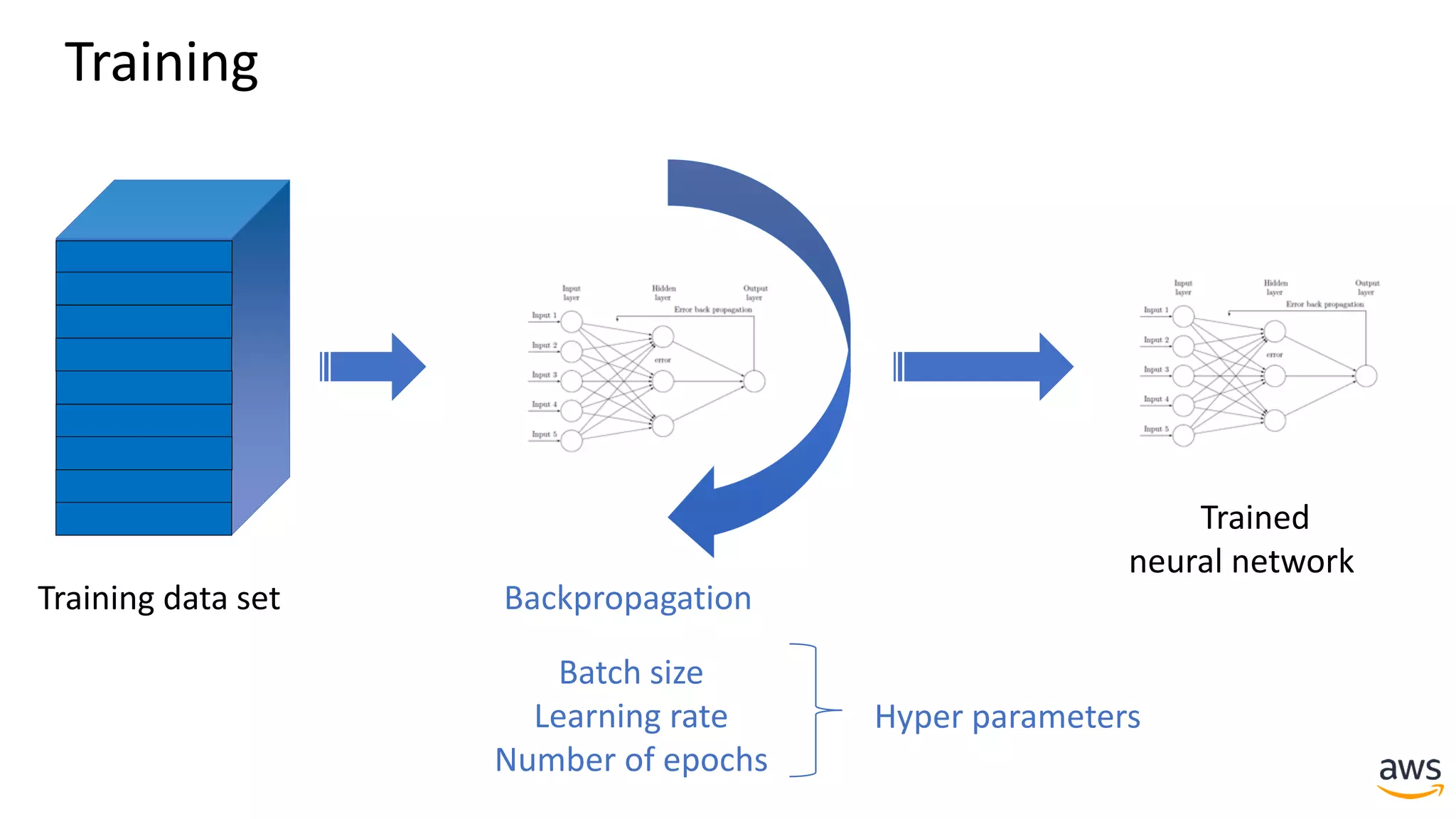

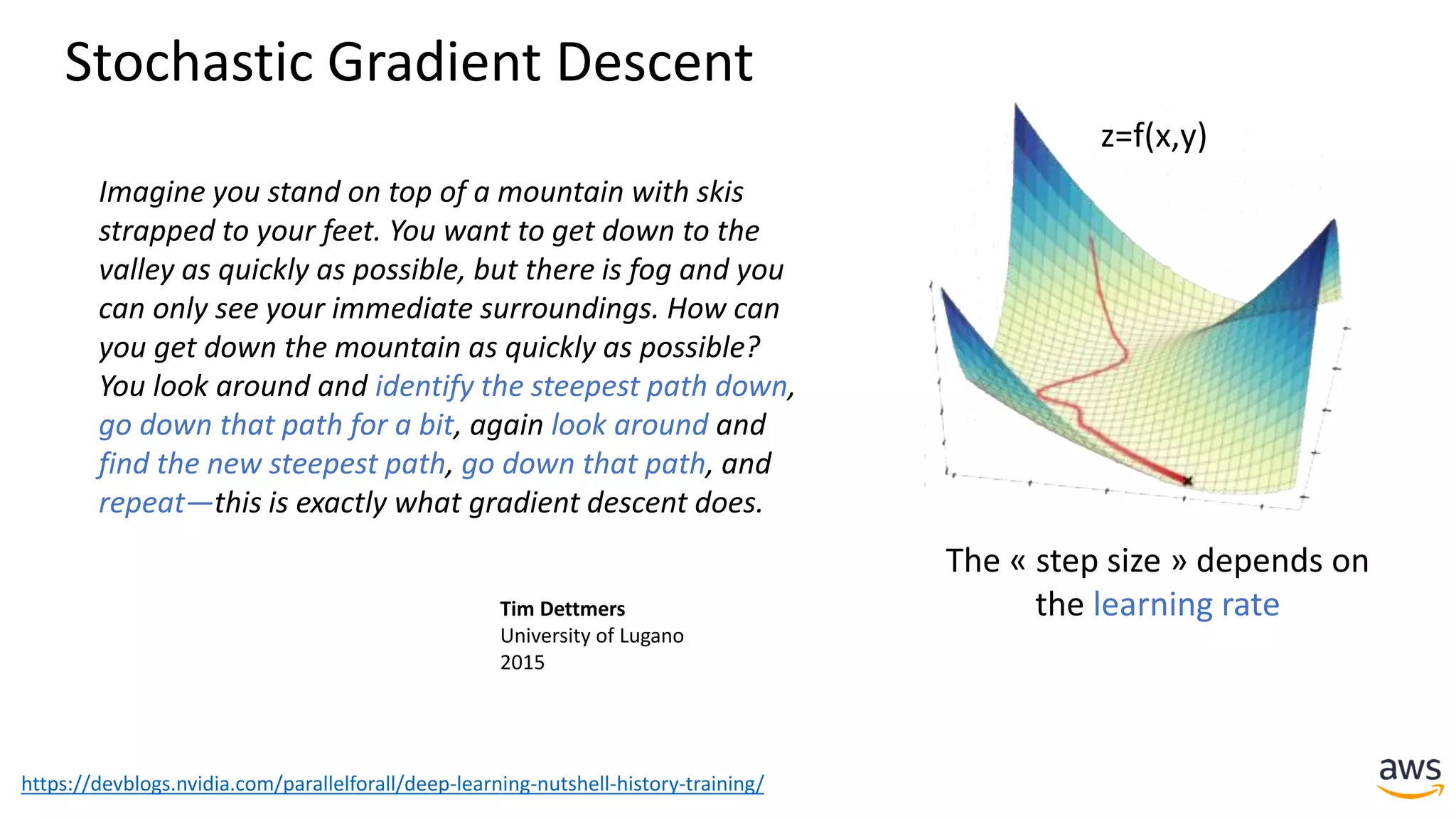

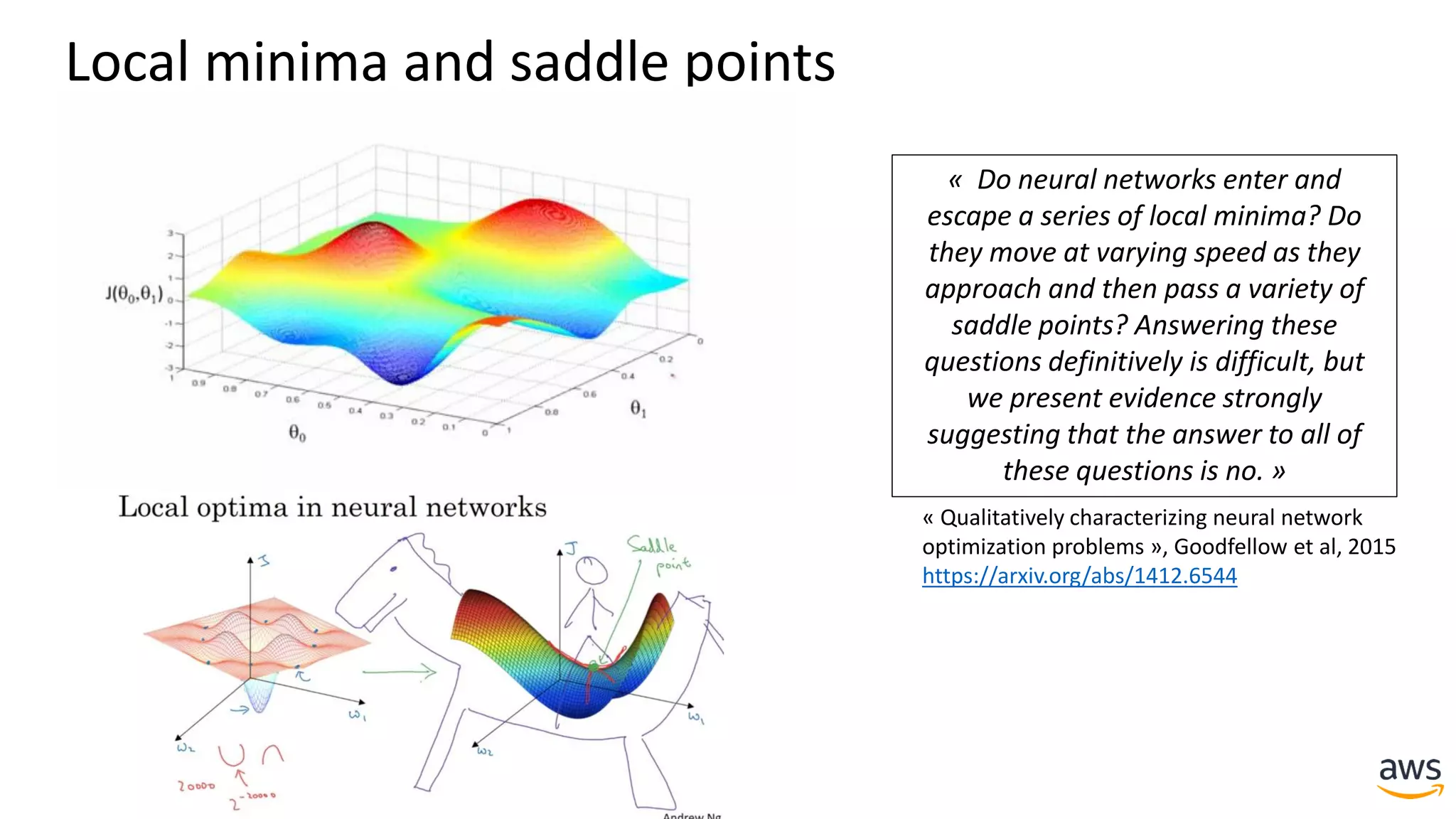

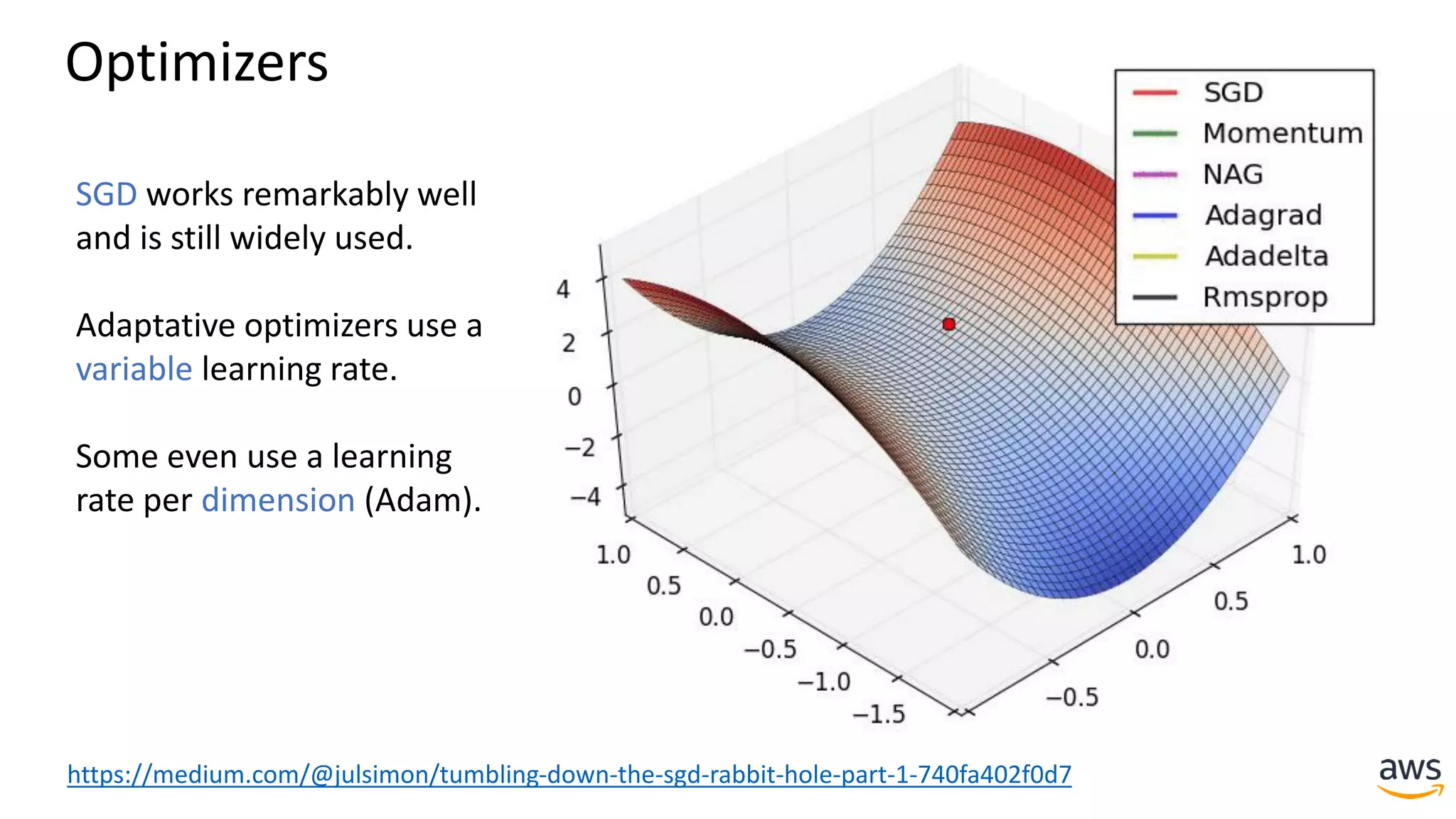

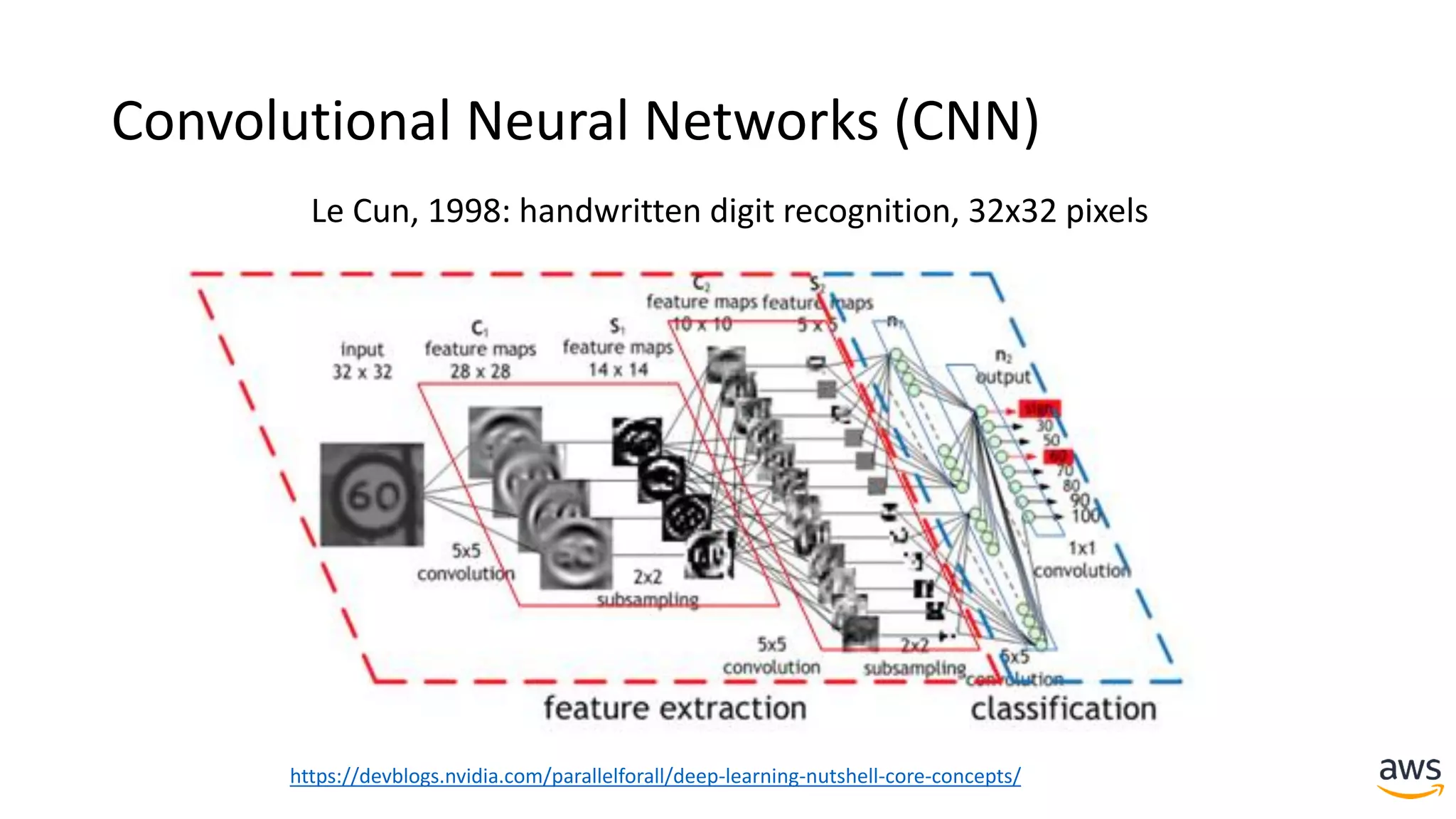

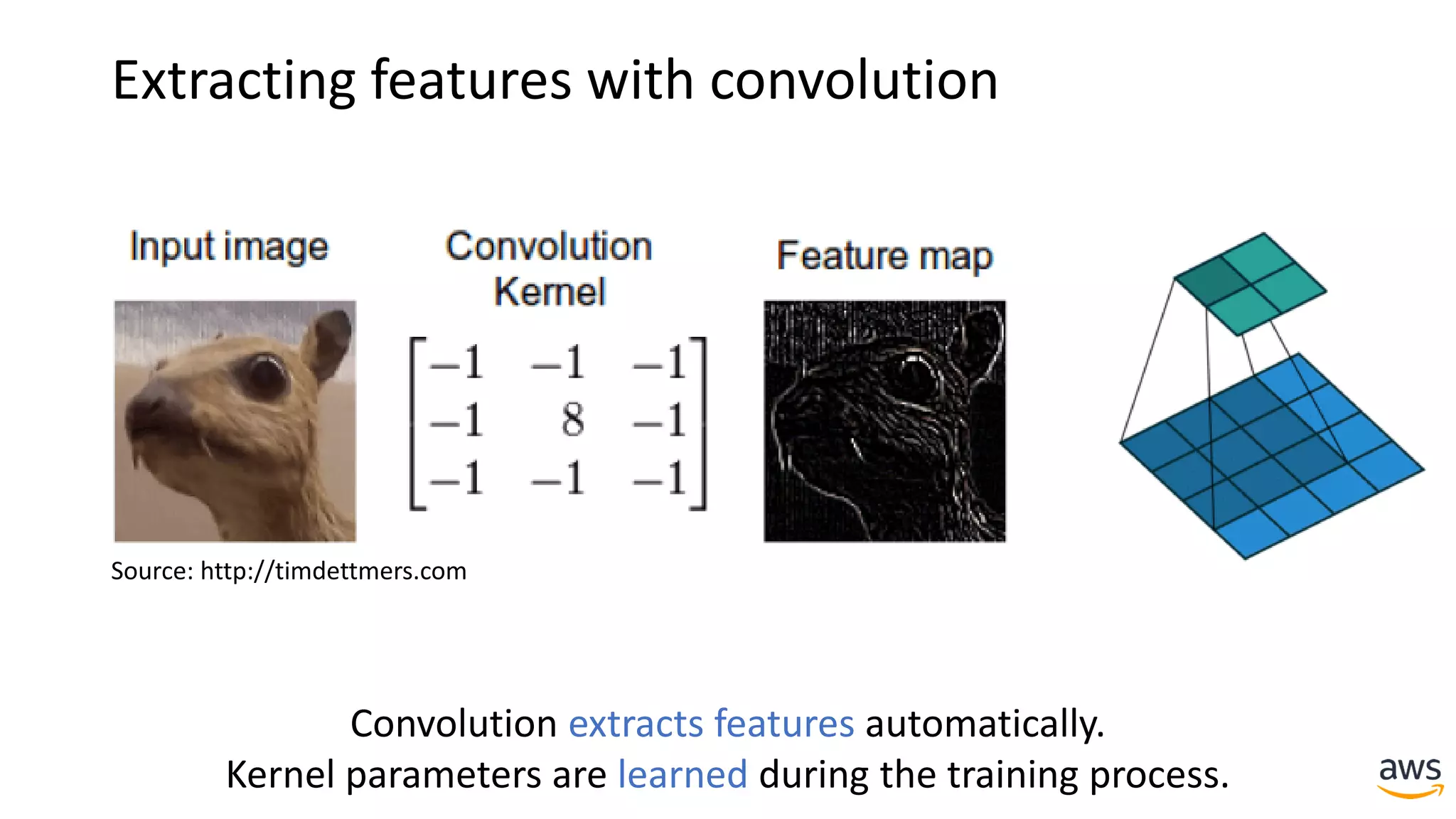

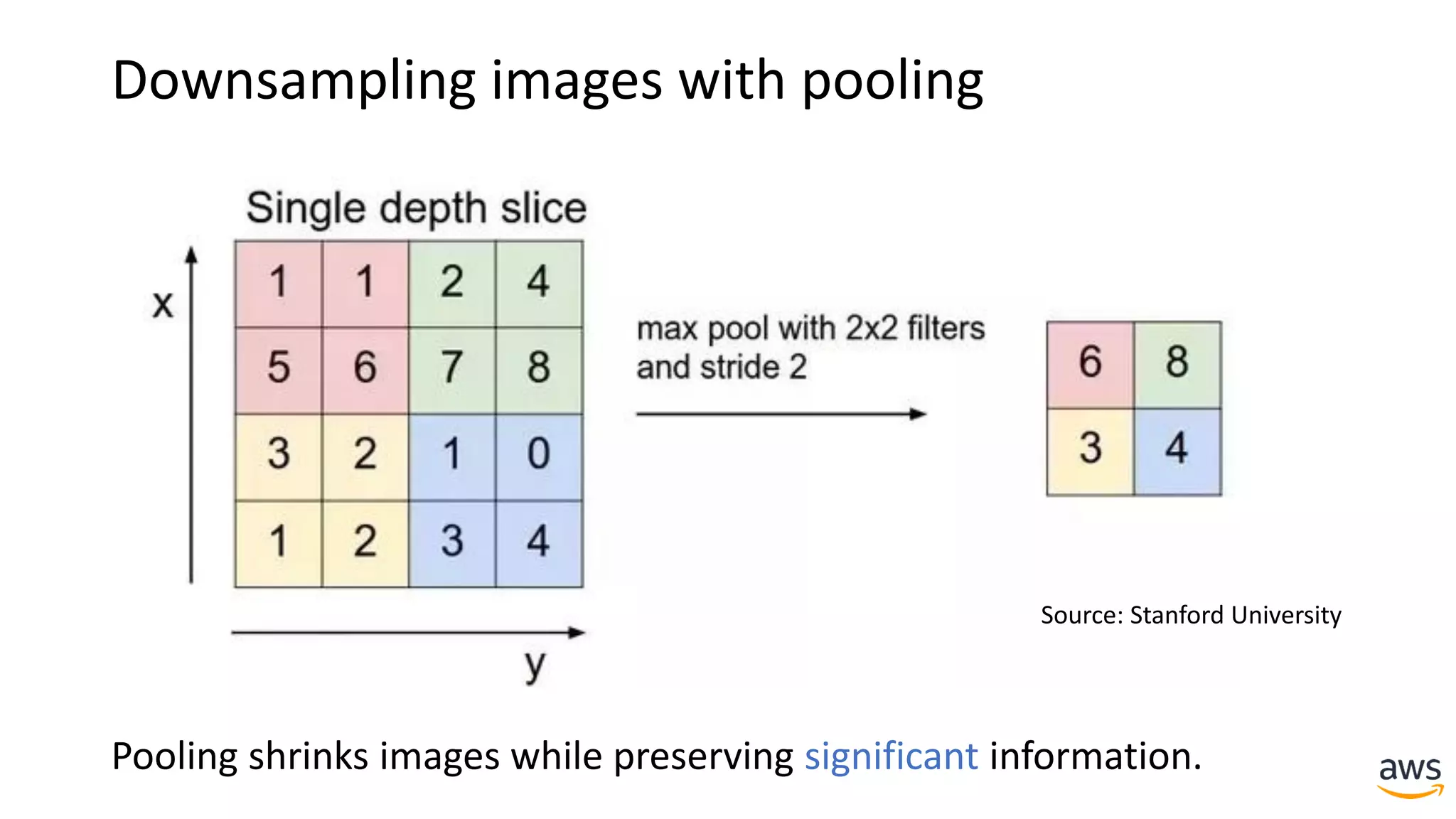

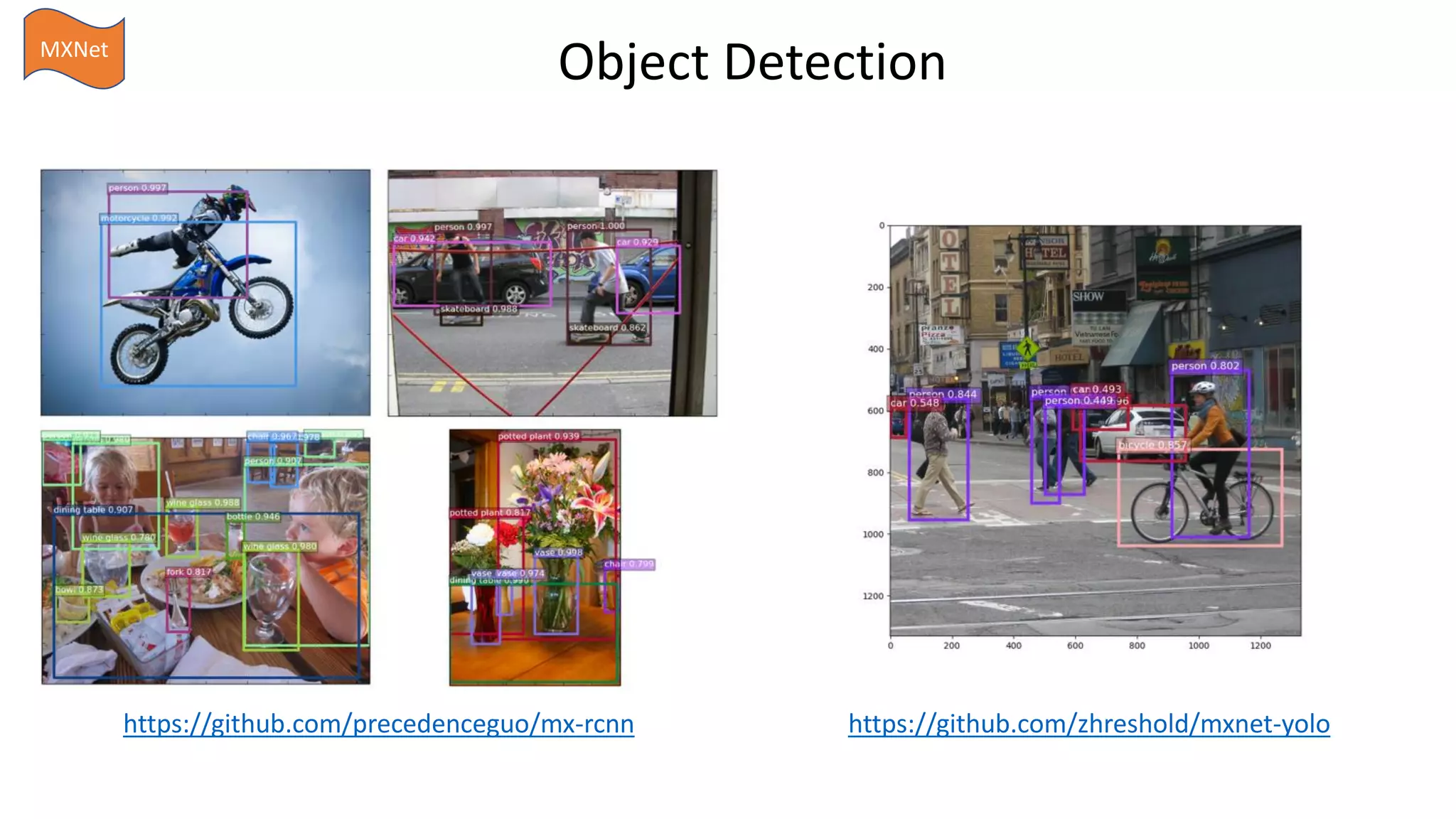

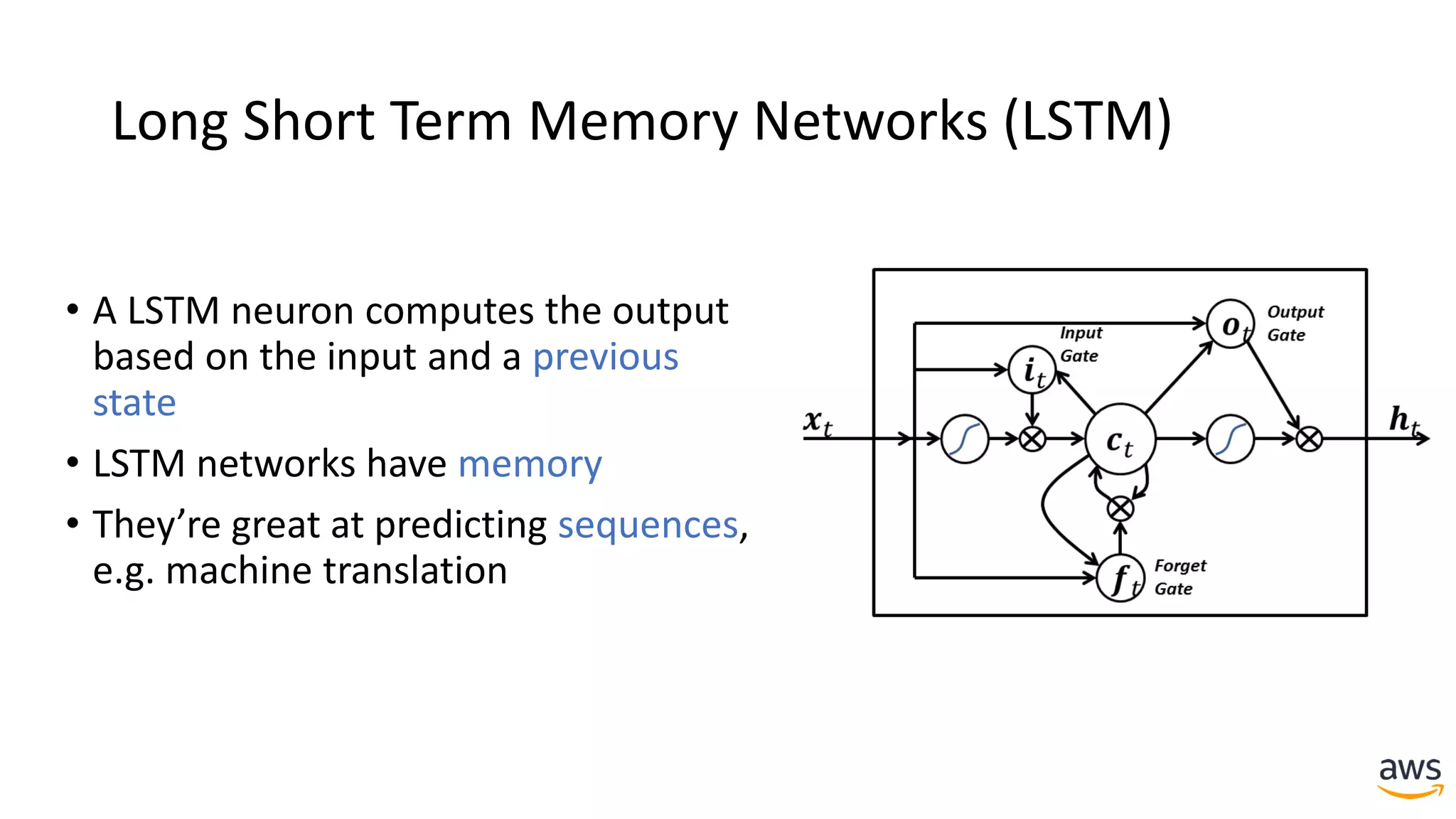

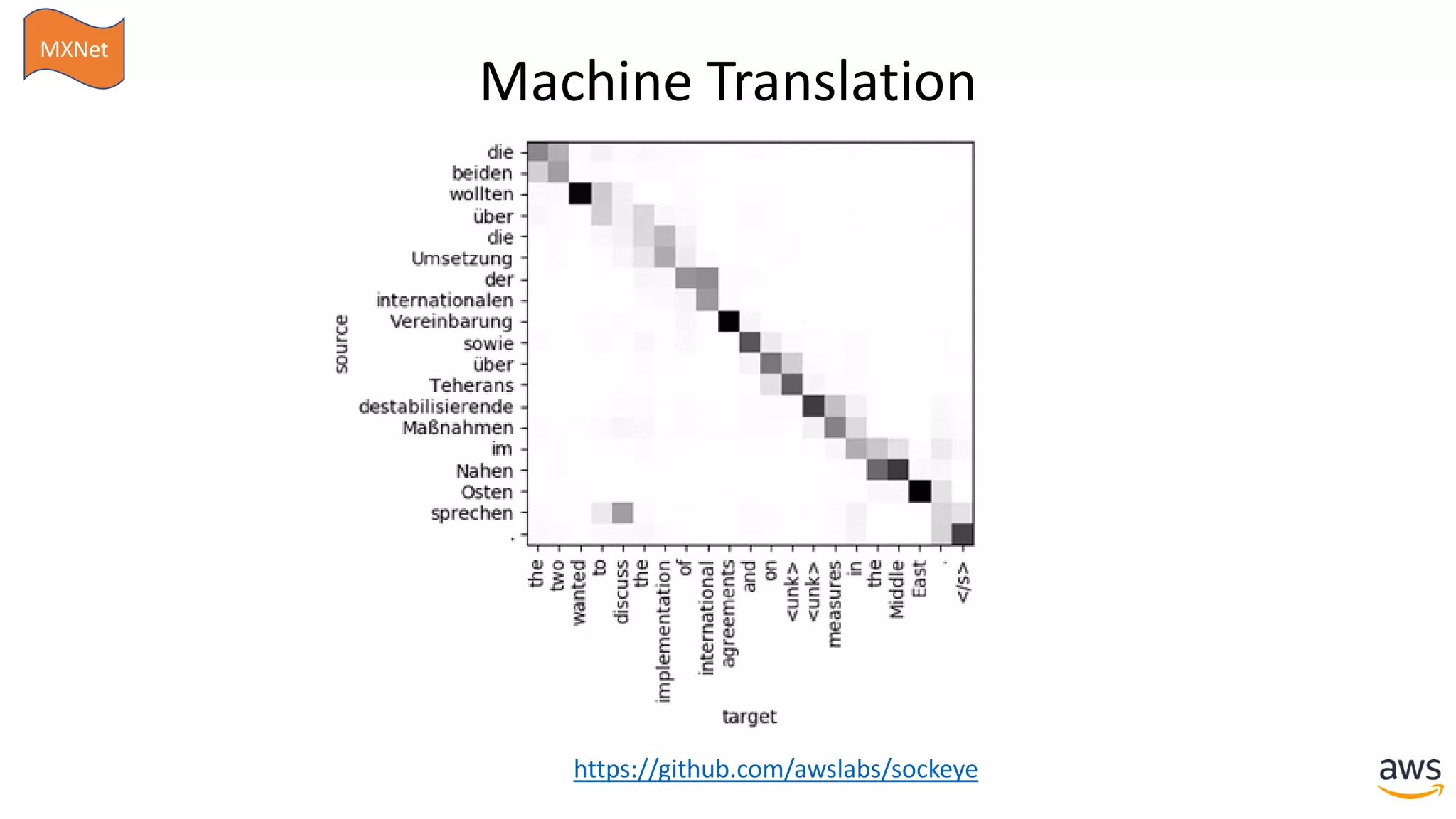

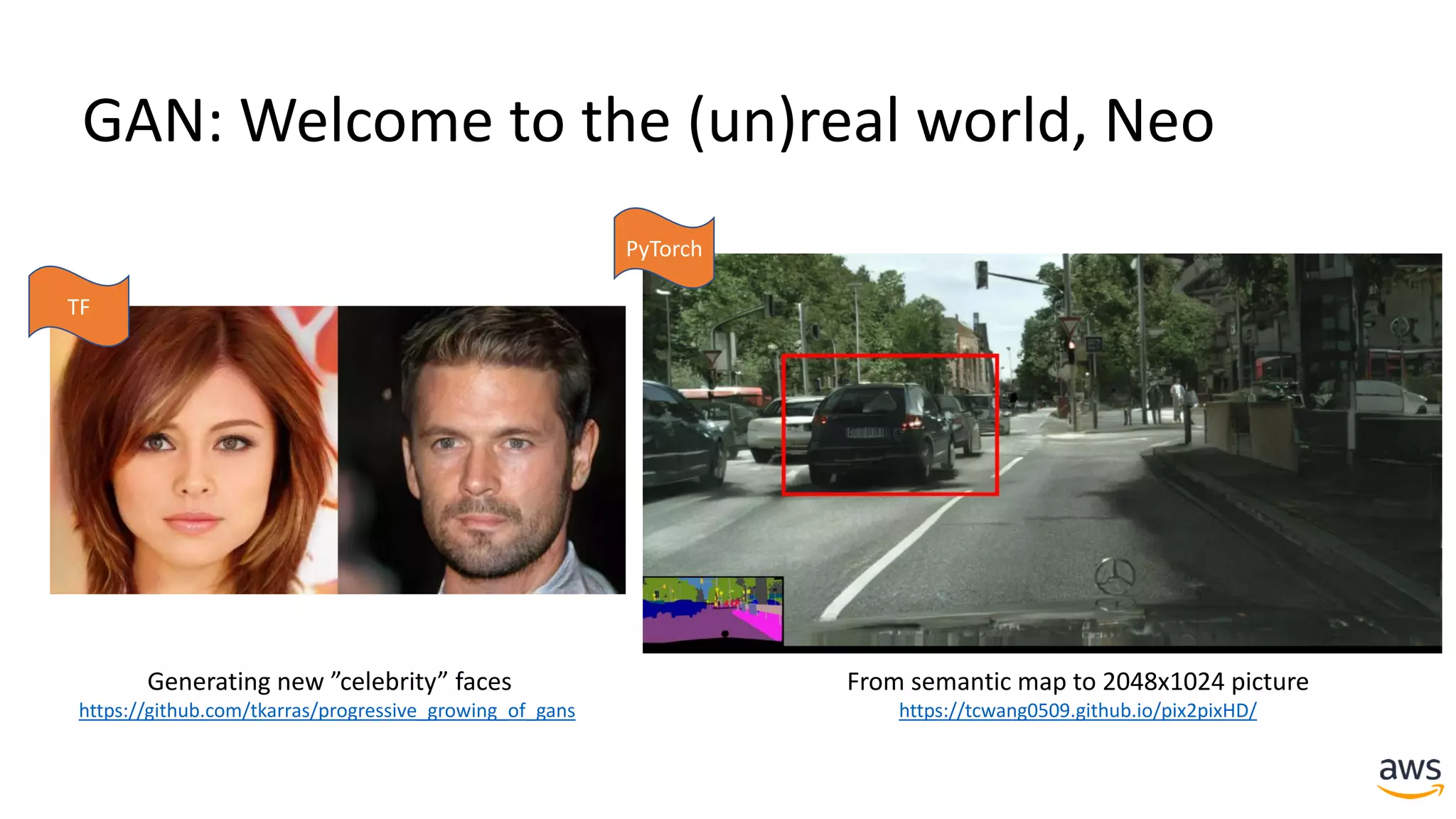

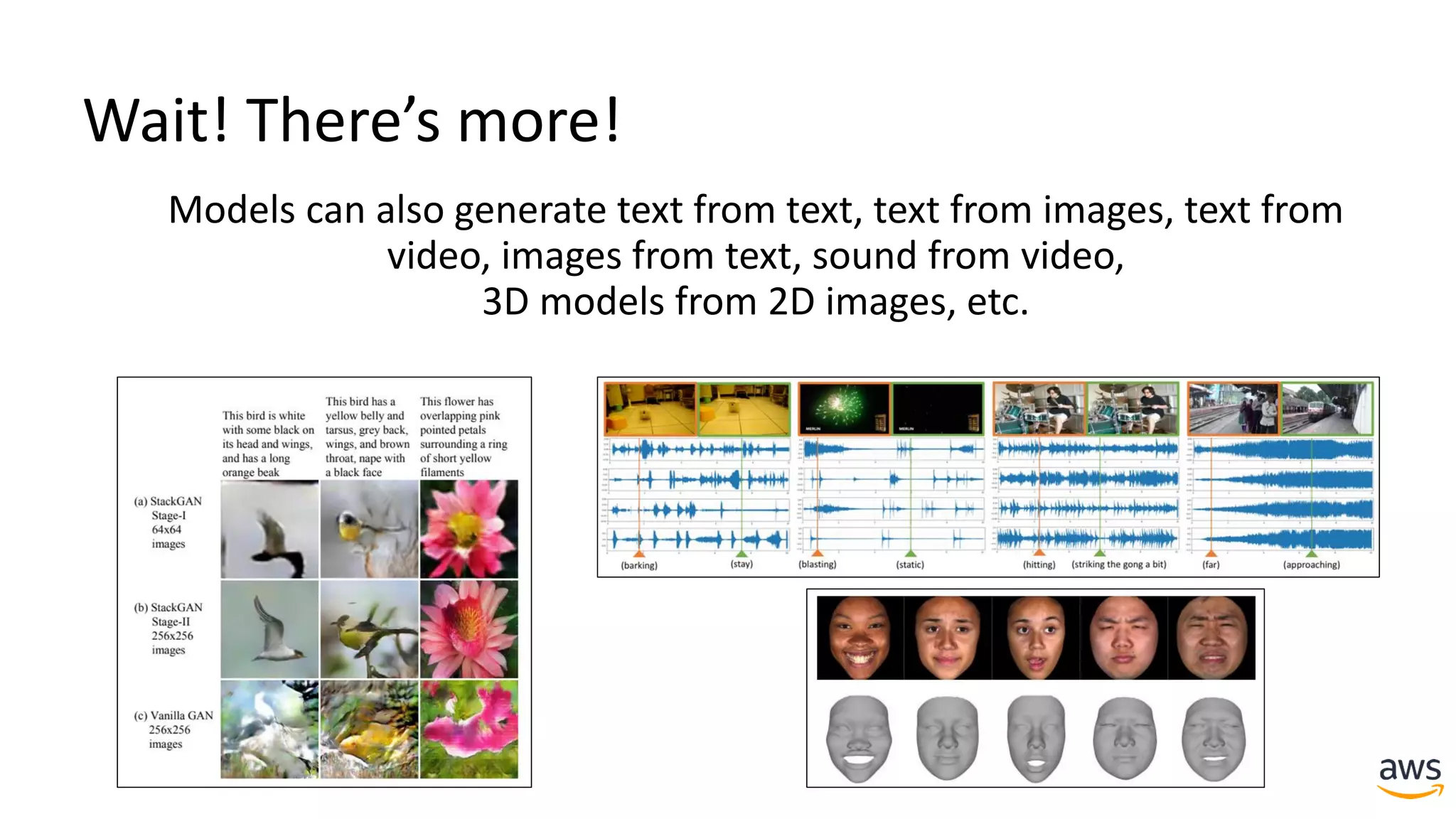

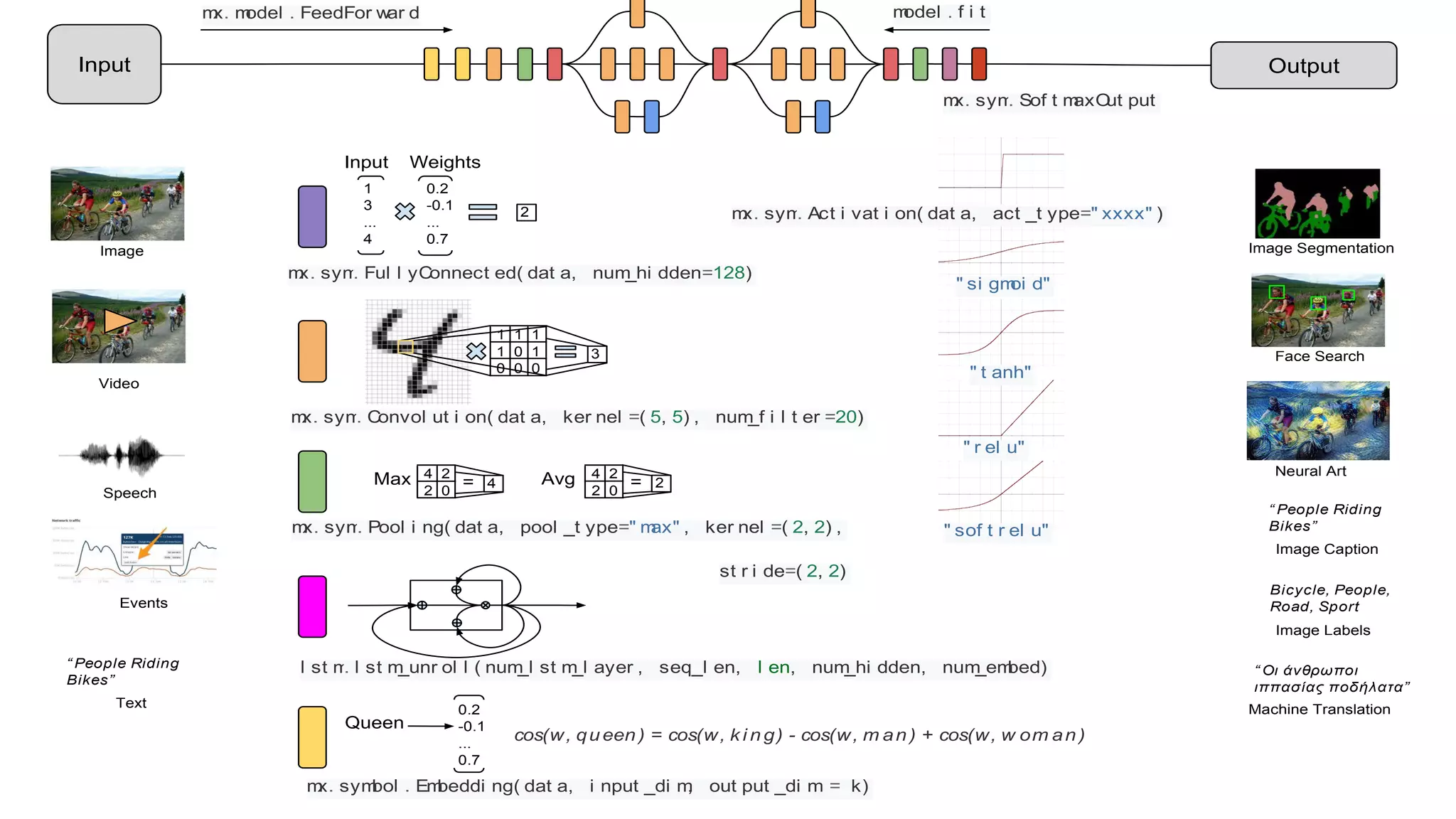

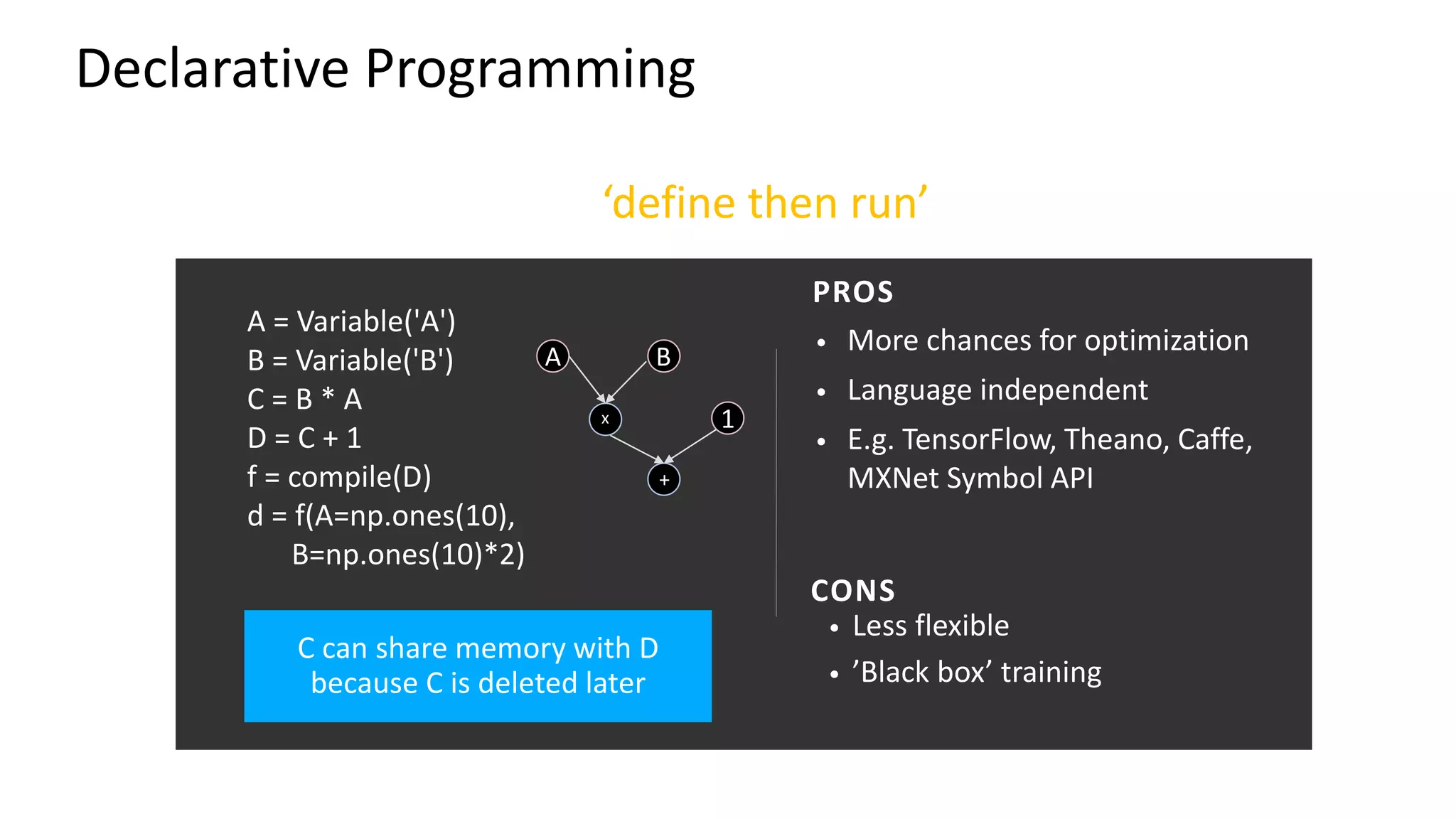

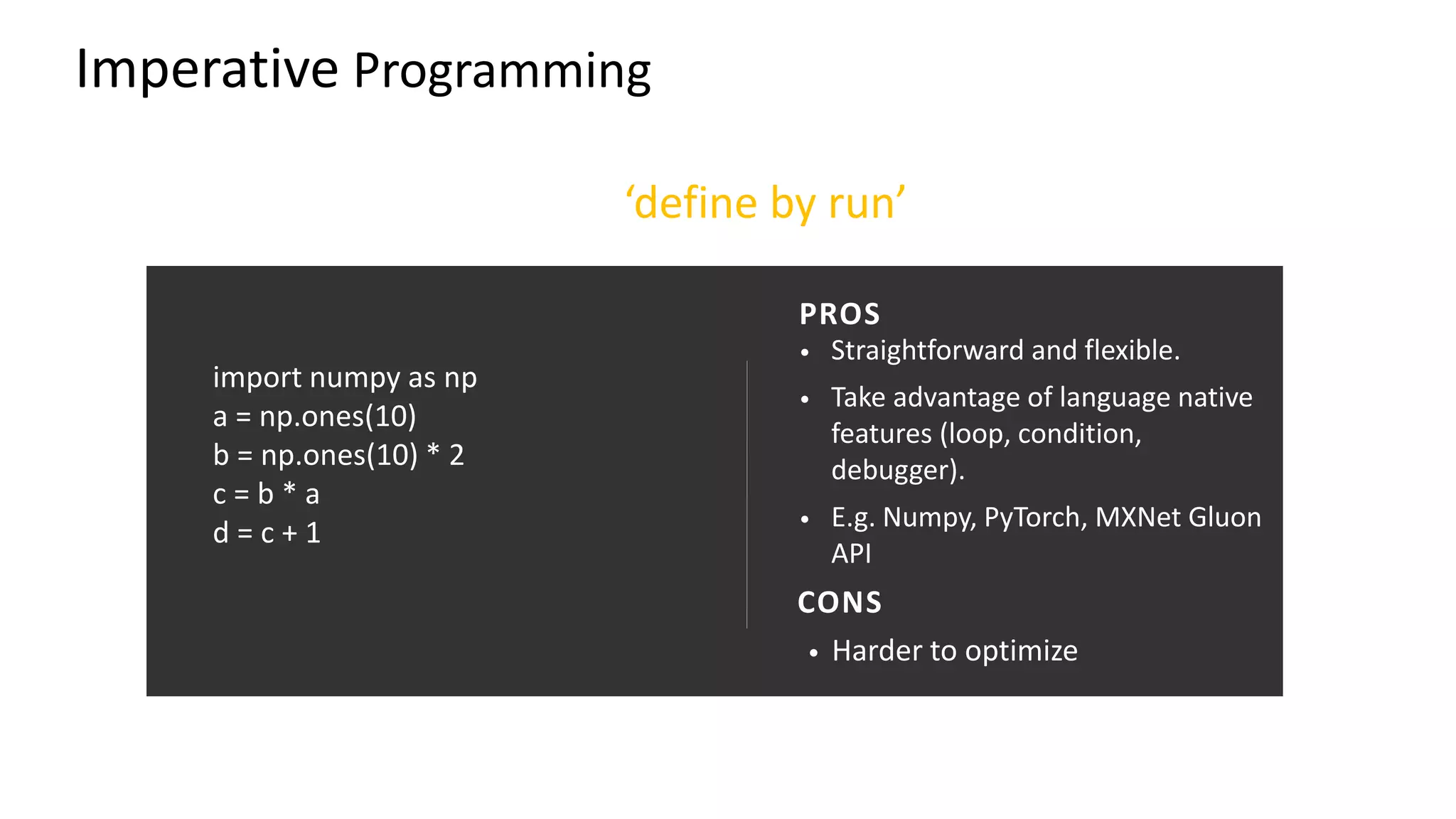

This document summarizes a presentation on deep learning. The agenda covers four topics: deep learning concepts (neural networks and training), common architectures (convolutional neural networks and LSTMs), a demonstration of Apache MXNet's symbolic and imperative APIs, and infrastructure for deep learning on AWS (optimized EC2 instances and Amazon SageMaker).

![Gluon CV: classification, detection, segmentation](https://image.slidesharecdn.com/20180606berlinsummit-180606151740/75/Deep-Dive-on-Deep-Learning-June-2018-35-2048.jpg)

Gluon CV: classification, detection, segmentation (https://github.com/dmlc/gluon-cv). Example prediction: [electric_guitar], with probability 0.671.
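
The Gluon CV slide reports a predicted label together with a probability. As a minimal sketch of how such a result is commonly derived, the snippet below applies a softmax to raw class scores and picks the top class. It assumes the classifier emits one raw score per class, which the slide does not state. The labels and scores are made up for illustration, and the helper names `softmax` and `top1` are hypothetical, not part of Gluon CV.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max score for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top1(logits, labels):
    """Return the most likely label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


# Hypothetical labels and scores, for illustration only
# (not taken from the presentation or from a real model).
labels = ["electric_guitar", "acoustic_guitar", "banjo"]
logits = [2.0, 1.0, 0.1]

label, prob = top1(logits, labels)
print(f"[{label}], with probability {prob:.3f}")
```

With a real pretrained network, the scores would come from a forward pass over a preprocessed image rather than from a hand-written list.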