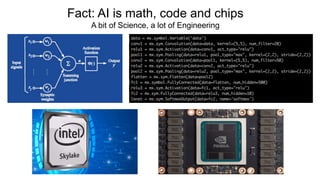

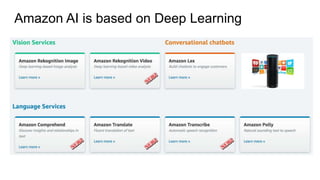

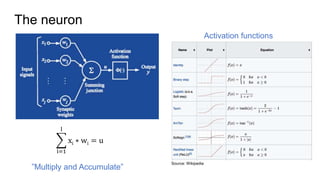

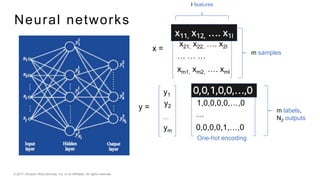

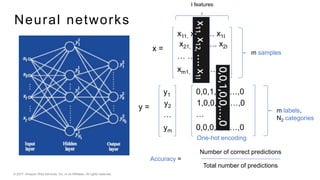

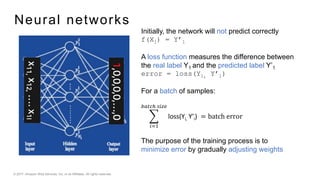

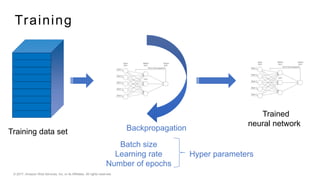

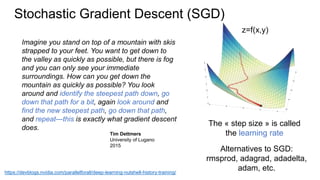

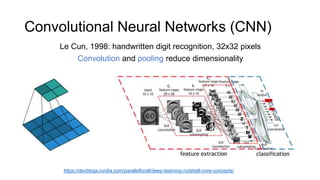

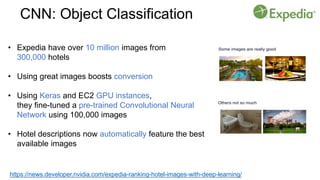

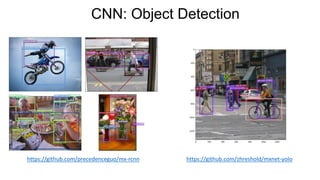

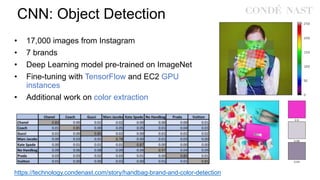

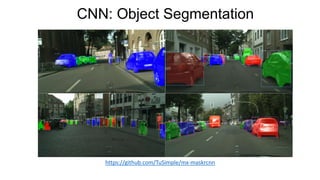

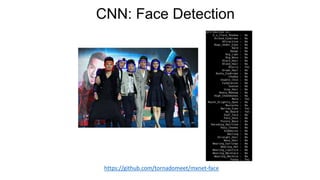

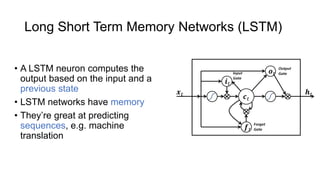

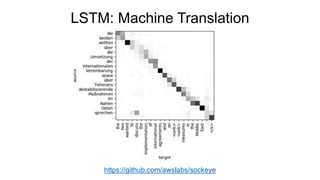

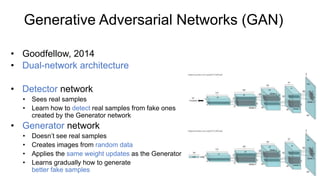

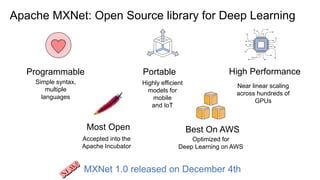

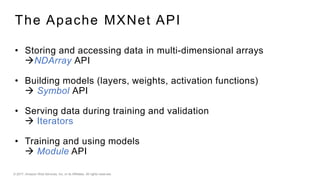

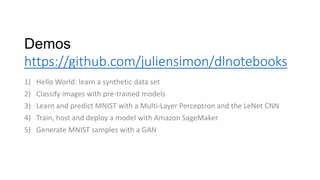

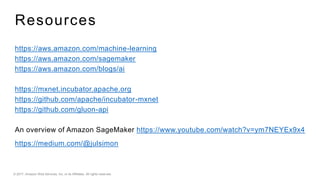

This document introduces deep learning and neural networks. It covers key concepts such as artificial intelligence, machine learning, and deep learning, along with common network architectures. It also introduces Apache MXNet and runs its demos in Jupyter notebooks on Amazon SageMaker. Deep learning uses neural networks to learn from complex data rather than following explicitly programmed rules. The network types discussed are convolutional neural networks, long short-term memory networks, and generative adversarial networks. MXNet is an open-source deep learning library for defining models and scaling them across GPUs. The demos classify images and generate digits with neural networks.
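To make "learning from data rather than explicitly programmed rules" concrete, here is a minimal sketch in plain Python. It is not taken from the slides and does not use MXNet. It trains a single sigmoid neuron, the simplest possible network, to reproduce the logical AND function from four labelled examples. The names `train` and `predict`, the learning rate, and the epoch count are all illustrative assumptions.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, labels, lr=0.5, epochs=5000):
    """Fit one neuron (two weights plus a bias) with batch gradient descent.

    Hypothetical helper for illustration; lr and epochs are arbitrary choices.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in zip(samples, labels):
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = p - y  # gradient of the cross-entropy loss w.r.t. the pre-activation
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        # Step against the gradient accumulated over the whole batch.
        w[0] -= lr * grad_w[0]
        w[1] -= lr * grad_w[1]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Return the class (0 or 1) the trained neuron assigns to input x."""
    return 1 if sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5 else 0

# The AND truth table is the only "knowledge" the model receives.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

w, b = train(samples, labels)
predictions = [predict(w, b, x) for x in samples]
print(predictions)
```

No rule for AND appears anywhere in the code. The weights are adjusted until the outputs match the examples. The architectures named in the summary stack many such units into layers, and libraries like MXNet compute the gradients automatically and run the arithmetic on GPUs.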