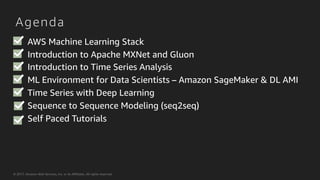

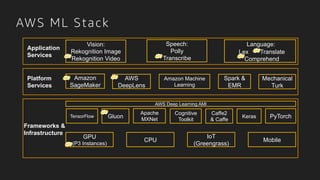

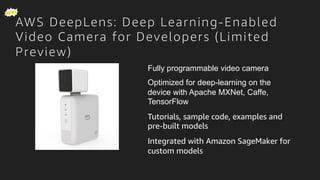

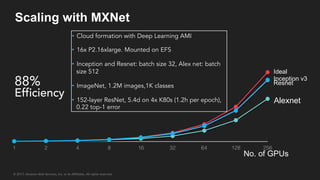

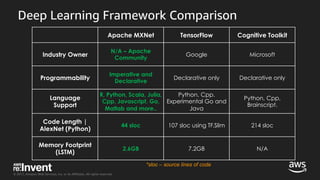

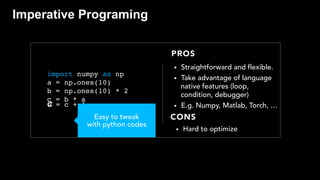

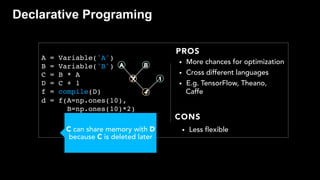

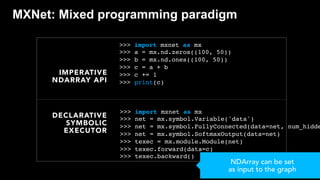

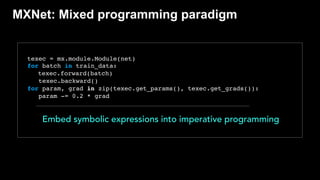

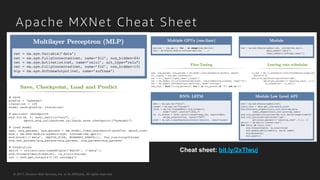

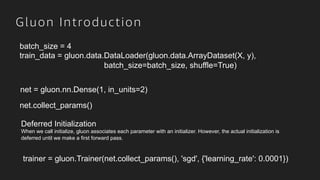

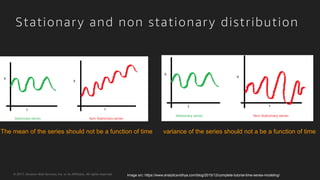

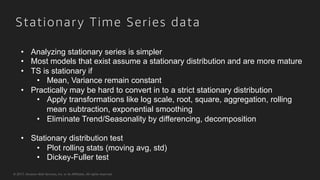

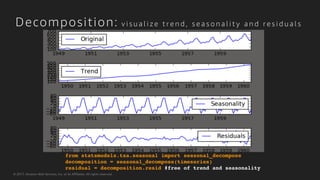

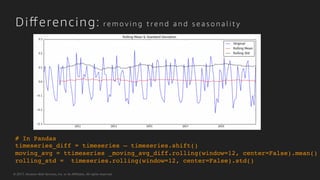

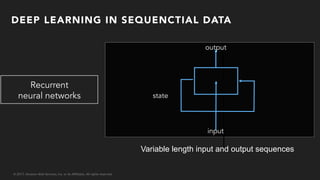

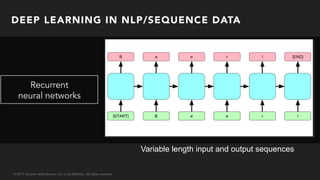

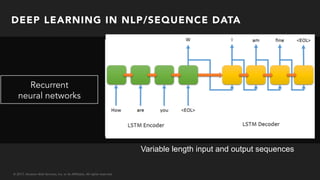

This document provides an overview and agenda for a workshop on time series and sequence modeling with Amazon Web Services (AWS) machine learning tools. It introduces the AWS machine learning stack, including Amazon SageMaker, Apache MXNet, and Gluon. It then covers time series analysis techniques such as differencing and decomposition, which separate out or remove trend and seasonality so that the remaining series is closer to stationary. It also introduces deep learning approaches to time series, such as recurrent neural networks. The goal is to give machine learning practitioners an introduction to building time series and sequence models on AWS.
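Differencing and decomposition can be illustrated without any AWS tooling. The sketch below is a minimal, dependency-free example under stated assumptions: the helper names `difference`, `invert_difference`, and `centered_moving_average` are hypothetical and are not part of SageMaker, MXNet, or Gluon. First-order differencing (lag 1) removes a linear trend, seasonal differencing (lag equal to the season length) removes a fixed repeating pattern, and a centered moving average gives a simple trend estimate of the kind used in classical additive decomposition.

```python
from typing import List


def difference(series: List[float], lag: int = 1) -> List[float]:
    """Return y[t] - y[t - lag] for t = lag .. len(series) - 1.

    lag=1 removes a linear trend; lag=season_length removes a fixed seasonal pattern.
    """
    if lag < 1 or lag >= len(series):
        raise ValueError("lag must be in [1, len(series) - 1]")
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


def invert_difference(first_values: List[float], diffs: List[float]) -> List[float]:
    """Rebuild the original series from its first `lag` values and its lag-differenced series.

    The lag is implied by len(first_values): y[t] = y[t - lag] + diff[t - lag].
    """
    out = list(first_values)
    for i, d in enumerate(diffs):
        out.append(out[i] + d)
    return out


def centered_moving_average(series: List[float], window: int) -> List[float]:
    """Trend estimate for classical additive decomposition.

    Assumes an odd window so the average is centered on a single time step;
    the first and last window // 2 points have no estimate and are dropped.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    return [
        sum(series[t - half : t + half + 1]) / window
        for t in range(half, len(series) - half)
    ]


if __name__ == "__main__":
    trend = [5, 7, 9, 11, 13]  # y = 2t + 5
    print(difference(trend))  # constant differences: trend removed

    seasonal = [0, 4, 1, 5, 4, 8, 5, 9]  # y = t + s[t % 4], s = [0, 3, -1, 2]
    print(difference(seasonal, lag=4))  # constant: seasonality and trend removed

    print(centered_moving_average(trend, 3))
```

Differencing is cheap and invertible (keep the first `lag` values to undo it), which is why it is a common preprocessing step before fitting a model and then transforming forecasts back to the original scale.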