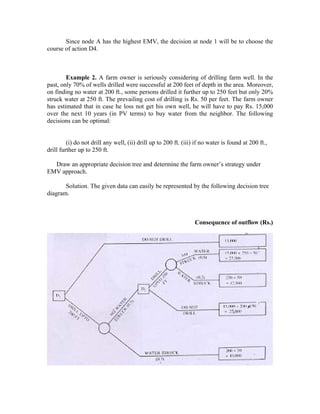

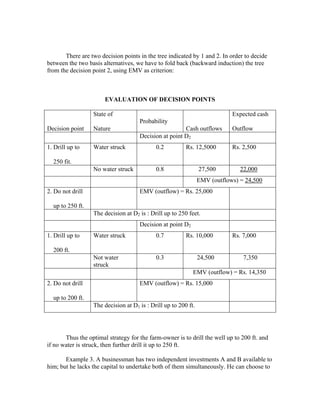

A decision tree is a diagram that represents the decisions, uncertainties, and outcomes of a complex decision-making process, breaking the problem down into a sequence of steps. The document gives examples of how to construct a decision tree by drawing decision points and their possible outcomes as branches. It also explains how to evaluate a tree: assign values and probabilities to the outcomes, then work backwards from the outcomes, calculating the expected value at each decision point, to determine the optimal decision.
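
The backward evaluation described above can be sketched in a few lines of Python. This is a minimal illustration, not the document's own method or notation: the class names (`Outcome`, `Chance`, `Decision`), the helper functions, and all payoffs and probabilities in the example tree are hypothetical. It assumes the common convention that a chance node takes the probability-weighted average of its branches and a decision node takes the best of its options.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class Outcome:
    """Leaf node: a final payoff (hypothetical units)."""
    value: float


@dataclass
class Chance:
    """Uncertain event: branches are (probability, child) pairs."""
    branches: List[Tuple[float, "Node"]]


@dataclass
class Decision:
    """Choice point: options are (label, child) pairs."""
    options: List[Tuple[str, "Node"]]


Node = Union[Outcome, Chance, Decision]


def expected_value(node: Node) -> float:
    """Work backwards from the leaves to the given node."""
    if isinstance(node, Outcome):
        return node.value
    if isinstance(node, Chance):
        # Probability-weighted average of the child values.
        return sum(p * expected_value(child) for p, child in node.branches)
    # Decision node: assume the decision maker picks the highest expected value.
    return max(expected_value(child) for _, child in node.options)


def best_option(decision: Decision) -> str:
    """Return the label of the option with the highest expected value."""
    return max(decision.options, key=lambda opt: expected_value(opt[1]))[0]


# Hypothetical tree: launch a product (60% gain of 100, 40% loss of 50)
# or do nothing (payoff 0). All numbers are made up for illustration.
tree = Decision([
    ("launch", Chance([(0.6, Outcome(100.0)), (0.4, Outcome(-50.0))])),
    ("do nothing", Outcome(0.0)),
])

print(best_option(tree), expected_value(tree))
```

In this made-up tree, the "launch" branch has an expected value of 0.6 × 100 + 0.4 × (−50) = 40, which beats the 0 from doing nothing, so working backwards selects "launch". Maximizing expected value is only one possible decision rule; a risk-averse decision maker might weigh the outcomes differently.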