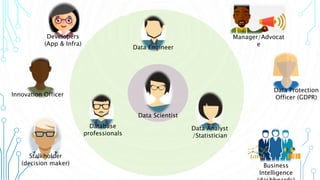

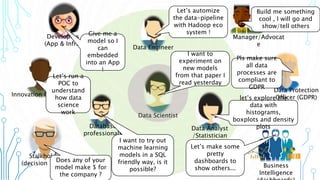

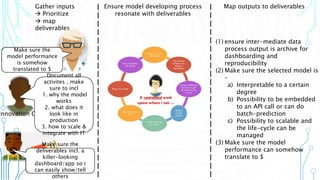

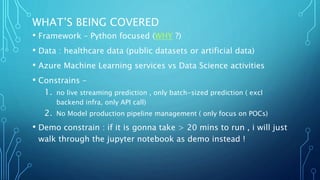

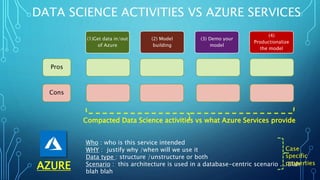

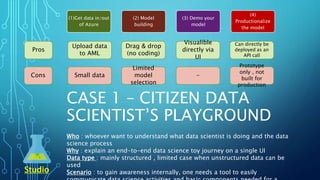

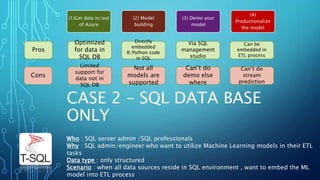

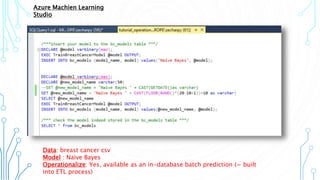

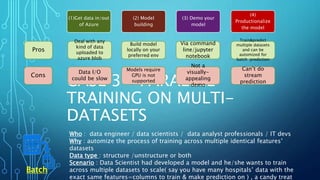

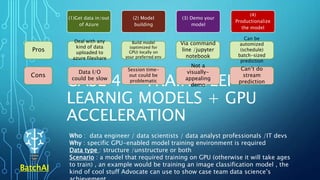

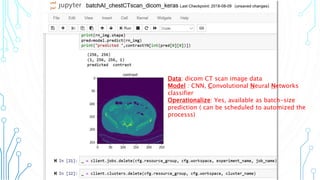

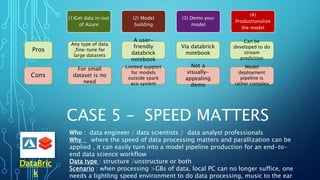

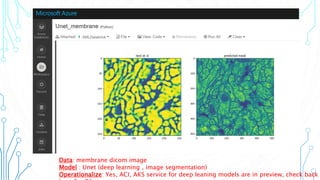

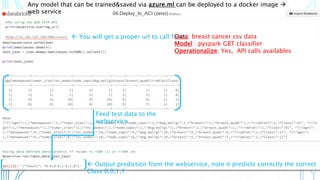

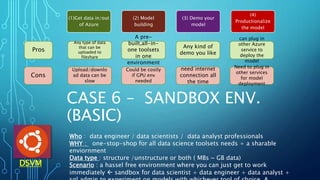

The document outlines an agenda for exploring data science practices in Azure, aimed at professionals such as data scientists and managers. It emphasizes the need to stay GDPR-compliant while experimenting with models and running proofs of concept (POCs). It also presents case studies that demonstrate data processing capabilities and the use of Azure services for machine learning.