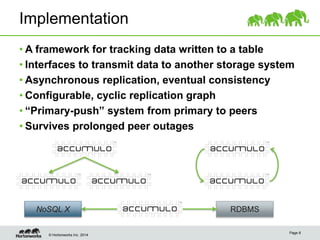

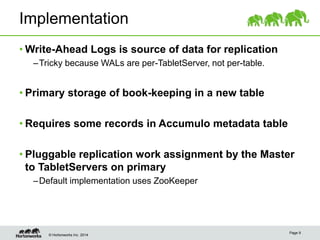

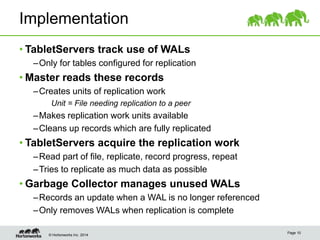

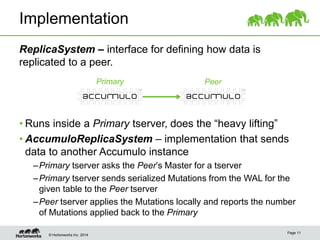

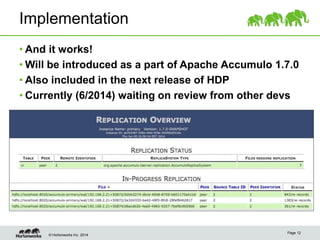

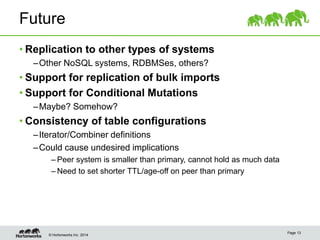

This document describes the implementation of data replication in Apache Accumulo. It explains why replication is needed to handle failures, describes how replication is implemented using write-ahead logs, and outlines future work, including replicating to other systems and improving consistency.