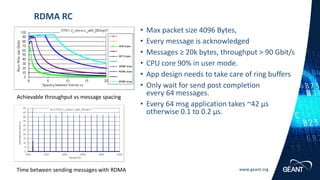

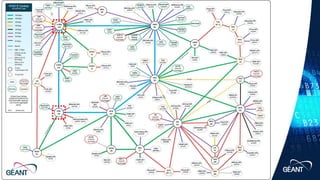

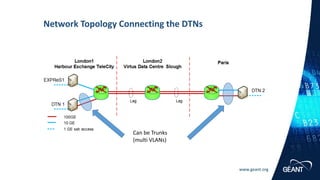

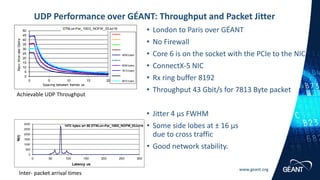

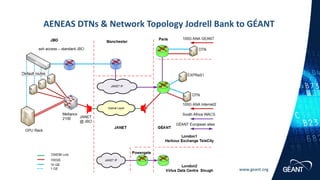

The document summarizes experiments measuring data transfer throughput over 100 Gbit/s network paths using data transfer nodes (DTNs). Key results include:

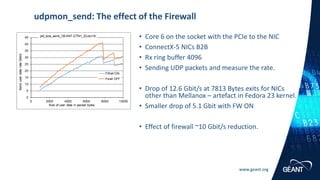

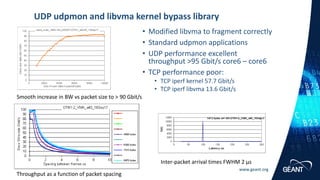

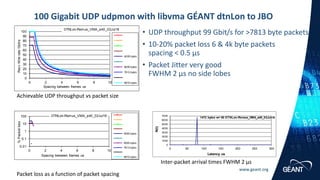

- UDP transfers achieved more than 95 Gbit/s, both between nodes in the GÉANT lab and across GÉANT's core network.
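The UDP figure can be put in context by the framing overhead on a 100 Gbit/s Ethernet link. The sketch below computes the payload ceiling for full-size UDP datagrams at a given MTU. It assumes IPv4 without options and untagged Ethernet frames; the source does not state which MTU was used, so both the standard and jumbo values are shown, and the function name `udp_goodput_gbps` is illustrative.

```python
# Per-frame overhead on an Ethernet link, in bytes (IEEE 802.3 framing).
PREAMBLE_SFD = 8   # preamble + start-of-frame delimiter
ETH_HEADER = 14    # destination MAC, source MAC, EtherType (assumes no VLAN tag)
FCS = 4            # frame check sequence
IFG = 12           # minimum interframe gap
IPV4_HEADER = 20   # assumes no IP options
UDP_HEADER = 8


def udp_goodput_gbps(line_rate_gbps: float, mtu: int) -> float:
    """Upper bound on UDP payload throughput when every datagram fills the MTU."""
    payload = mtu - IPV4_HEADER - UDP_HEADER                  # application bytes per frame
    on_wire = mtu + ETH_HEADER + FCS + PREAMBLE_SFD + IFG     # bytes the link actually carries
    return line_rate_gbps * payload / on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {udp_goodput_gbps(100.0, mtu):.2f} Gbit/s")
```

Under these assumptions a 1500-byte MTU caps UDP payload at about 95.7 Gbit/s and a 9000-byte jumbo MTU at about 99.3 Gbit/s, so the reported result is consistent with either setting.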

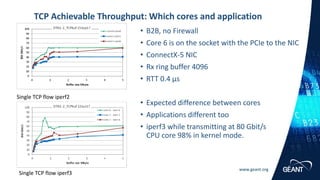

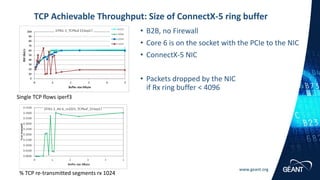

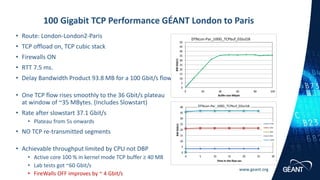

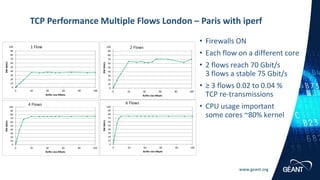

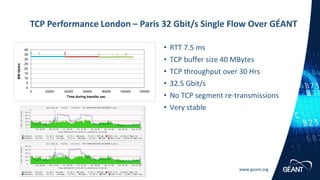

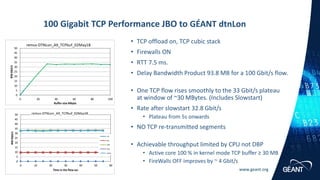

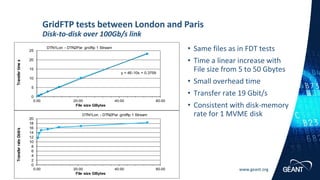

- TCP transfers between London and Paris over the GÉANT network plateaued at 37.1 Gbit/s for a single flow and 75 Gbit/s for multiple flows.
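To sustain a given rate, a TCP sender must keep at least the bandwidth-delay product (rate × RTT) of unacknowledged data in flight, which is why buffer sizing matters at these speeds. The source does not give the London-Paris round-trip time, so the sketch below reports the requirement per millisecond of RTT; the function name `bdp_bytes` is illustrative.

```python
def bdp_bytes(rate_gbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to sustain rate_gbps over rtt_ms."""
    bits_in_flight = rate_gbps * 1e9 * rtt_ms * 1e-3
    return bits_in_flight / 8


if __name__ == "__main__":
    # Reported London-Paris plateaus. The RTT is normalised to 1 ms because
    # the source does not state the actual path RTT.
    for label, rate in (("single flow", 37.1), ("multiple flows (aggregate)", 75.0)):
        print(f"{label}: {bdp_bytes(rate, 1.0) / 1e6:.1f} MB in flight per ms of RTT")
```

Multiply by the real RTT in milliseconds: a hypothetical 10 ms RTT, for example, would call for roughly 46 MB of window for the single 37.1 Gbit/s flow. When the load is spread over several flows, each flow's window only has to cover its own share of the aggregate rate.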

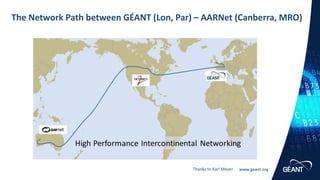

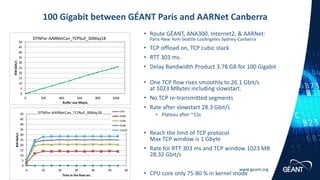

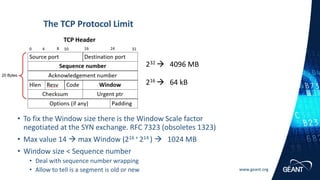

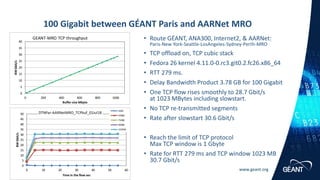

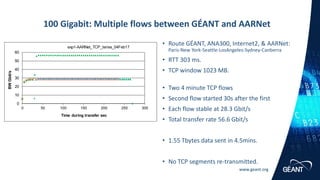

- Transfers between GÉANT in Paris and AARNet in Canberra achieved 28.3 Gbit/s, the theoretical limit of TCP throughput over the 303 ms round-trip path.
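A single TCP connection can have at most one window of unacknowledged data in flight per round trip, so its throughput is bounded by window / RTT. The source does not say which limit it refers to; one reading consistent with the 28.3 Gbit/s figure is the largest window TCP window scaling allows (the 65,535-byte window field scaled by 2^14, per RFC 7323). The sketch below computes that ceiling under this assumption; `window_limited_gbps` is an illustrative name.

```python
# Largest receive window TCP can advertise: the 16-bit window field shifted
# by the maximum window-scale factor of 14 (RFC 7323).
MAX_WINDOW_BYTES = 65535 << 14


def window_limited_gbps(window_bytes: int, rtt_ms: float) -> float:
    """Throughput ceiling when at most window_bytes can be unacknowledged per RTT."""
    return window_bytes * 8 / (rtt_ms * 1e-3) / 1e9


if __name__ == "__main__":
    ceiling = window_limited_gbps(MAX_WINDOW_BYTES, 303.0)
    print(f"Window-limited ceiling at 303 ms RTT: {ceiling:.2f} Gbit/s")
```

This gives about 28.35 Gbit/s, in line with the reported 28.3 Gbit/s. In practice the usable window is also capped by the hosts' socket buffer settings, so the figure is an upper bound rather than a guaranteed rate.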