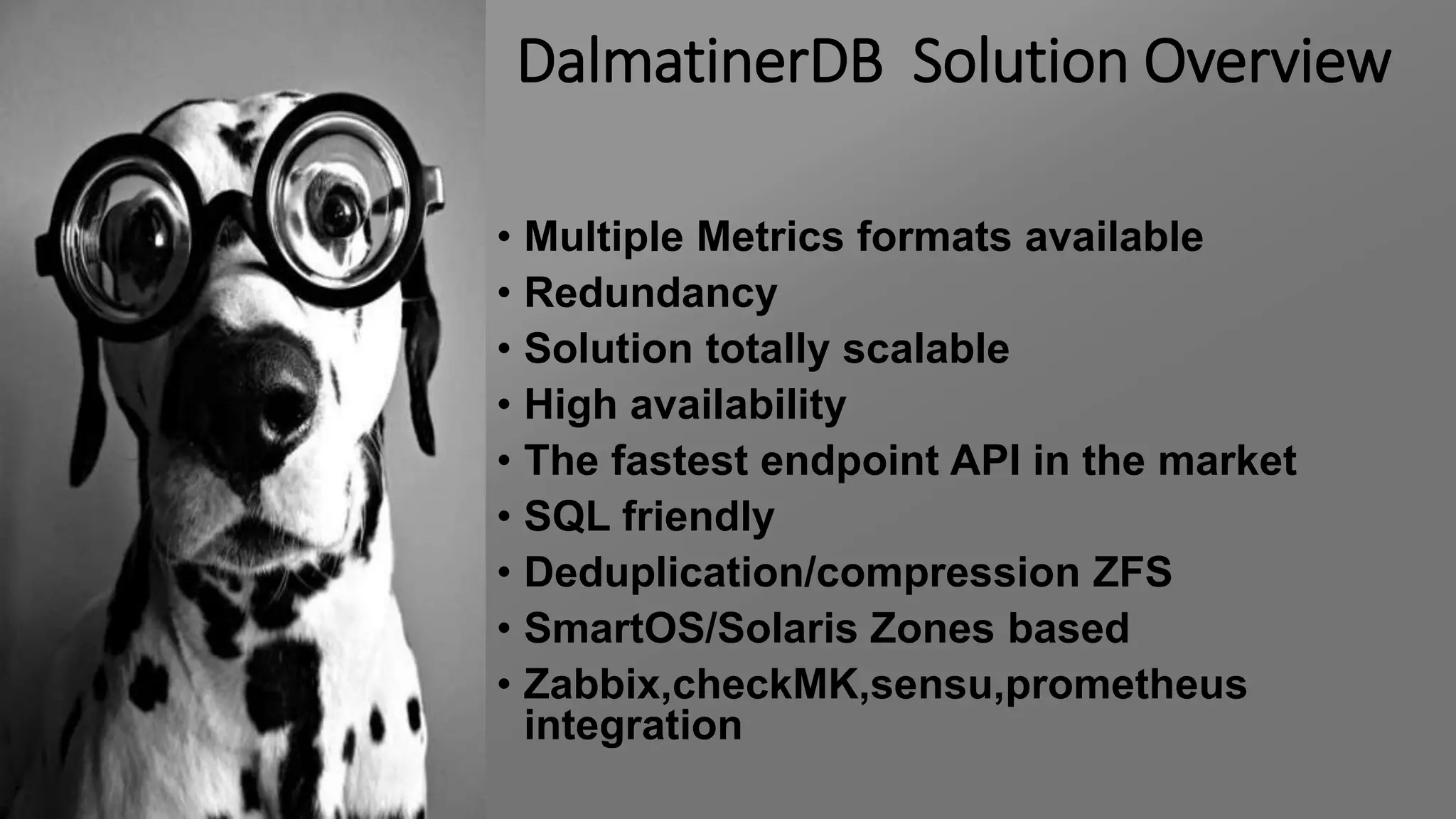

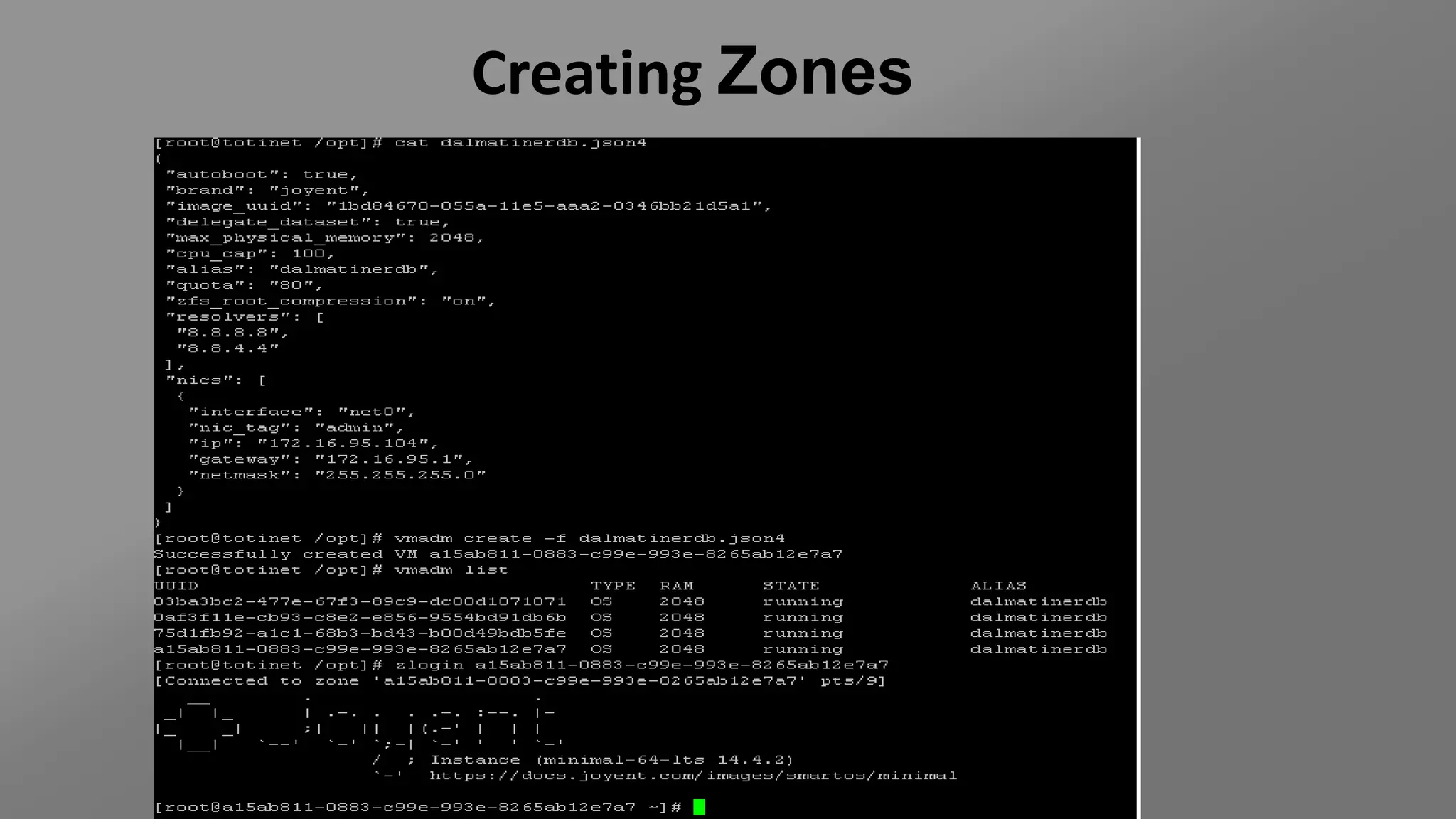

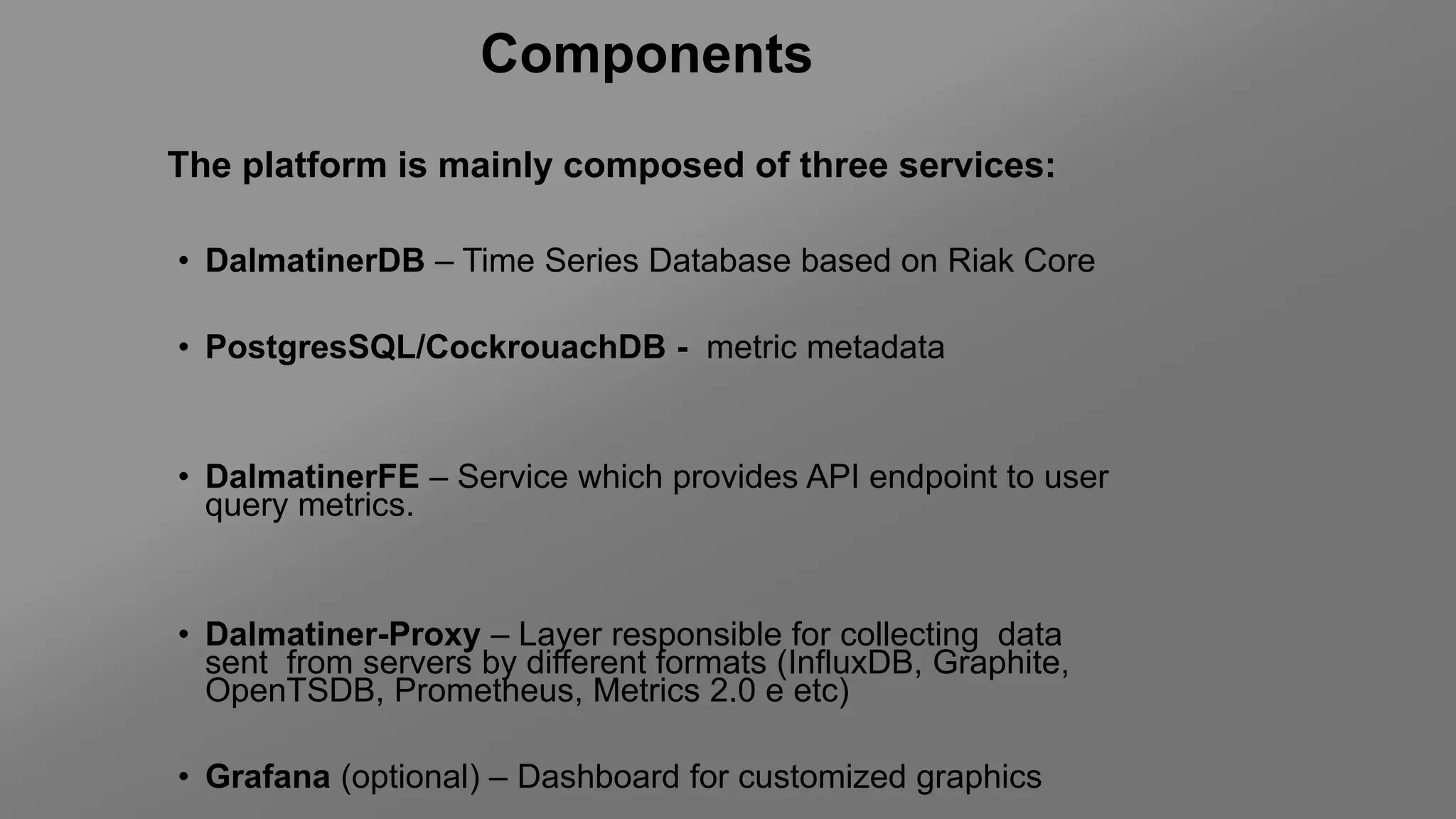

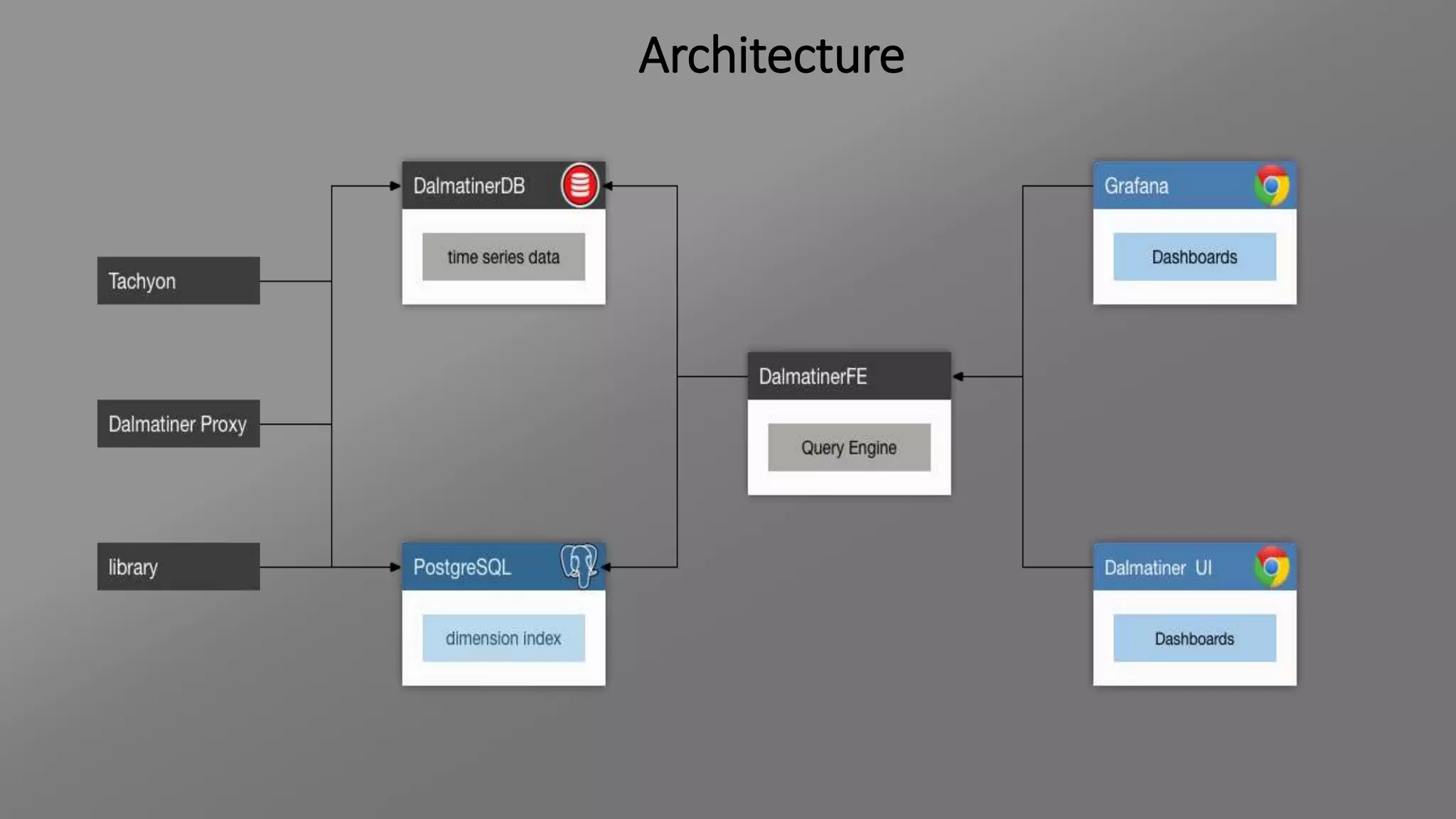

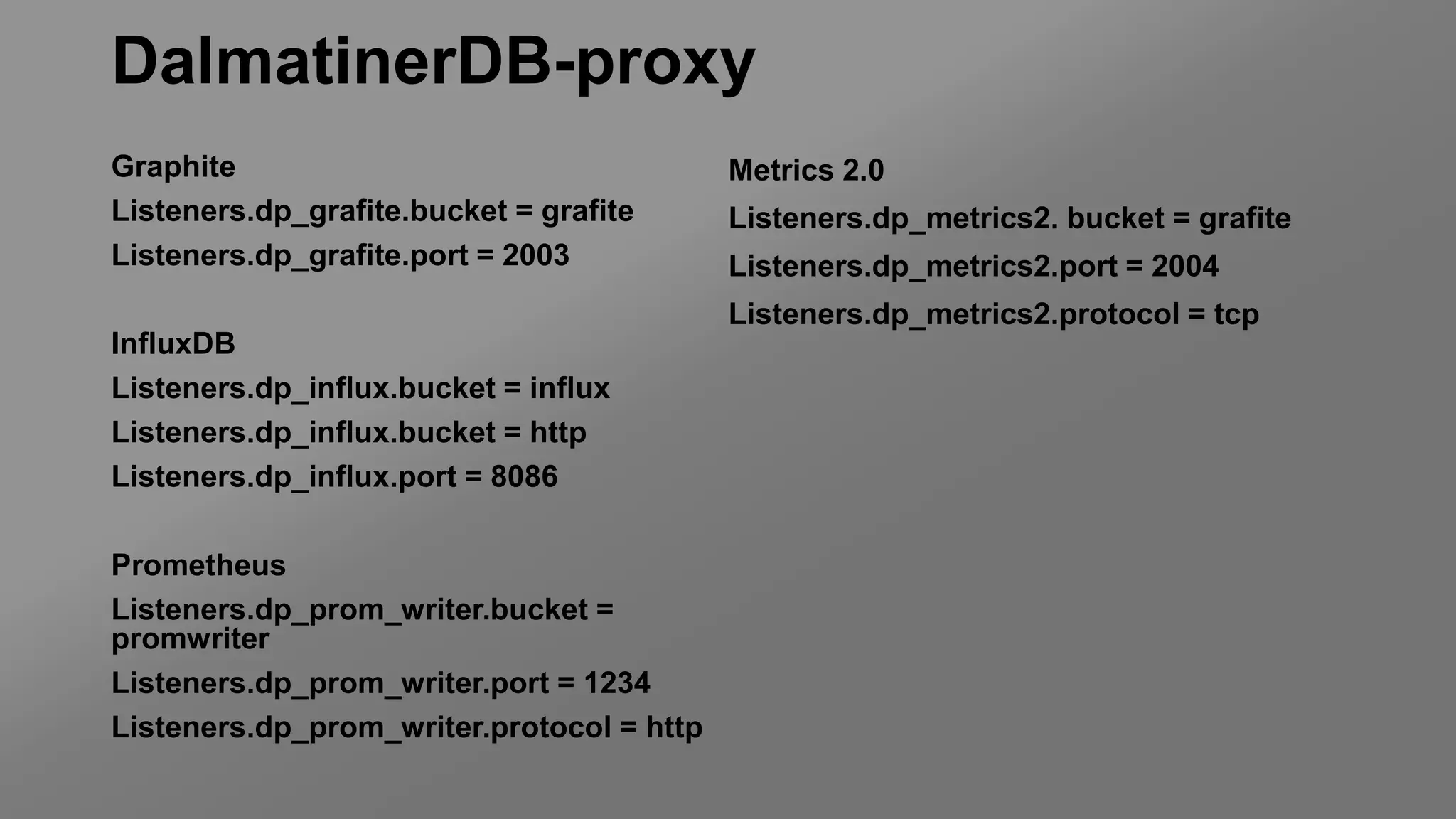

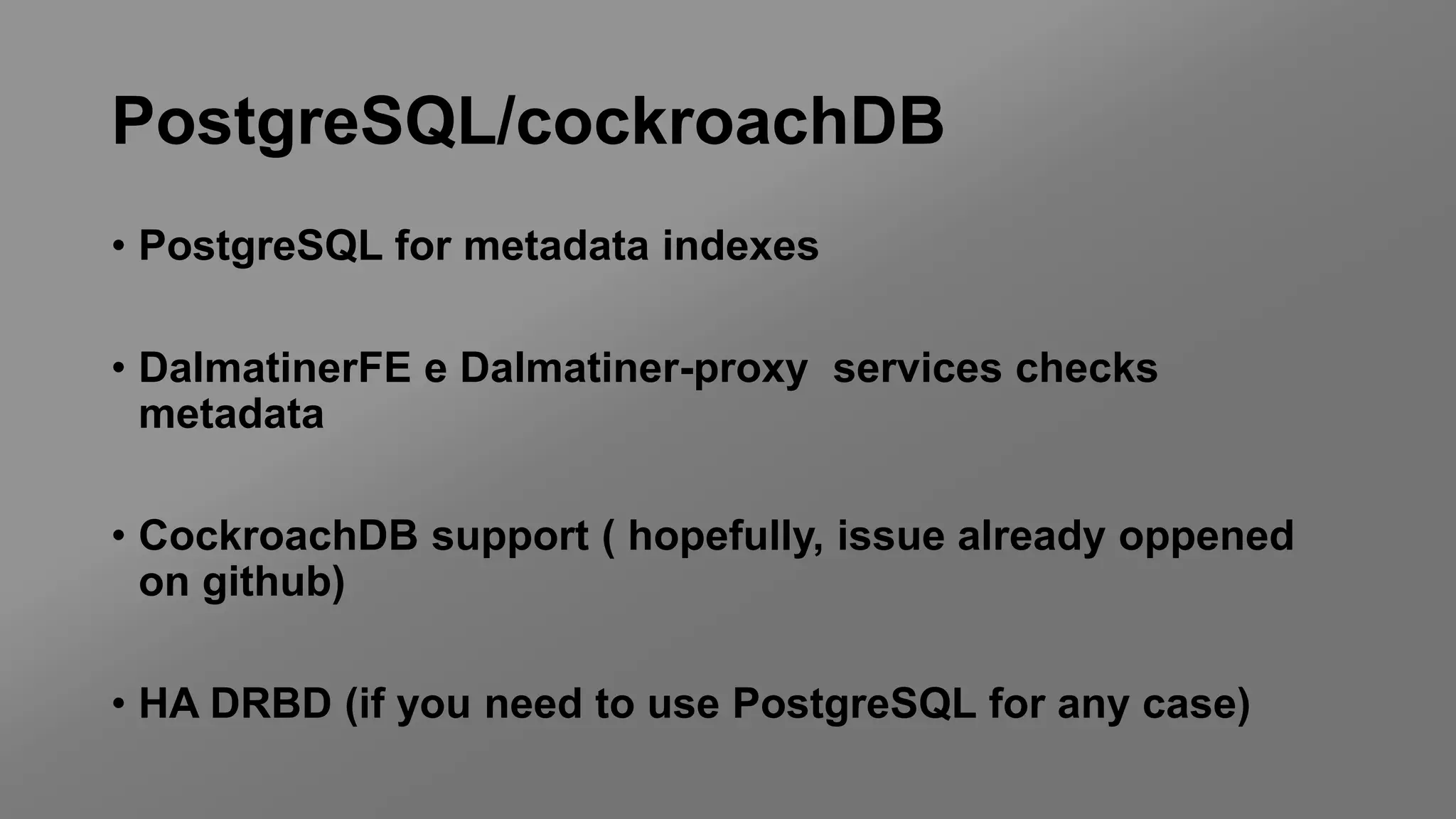

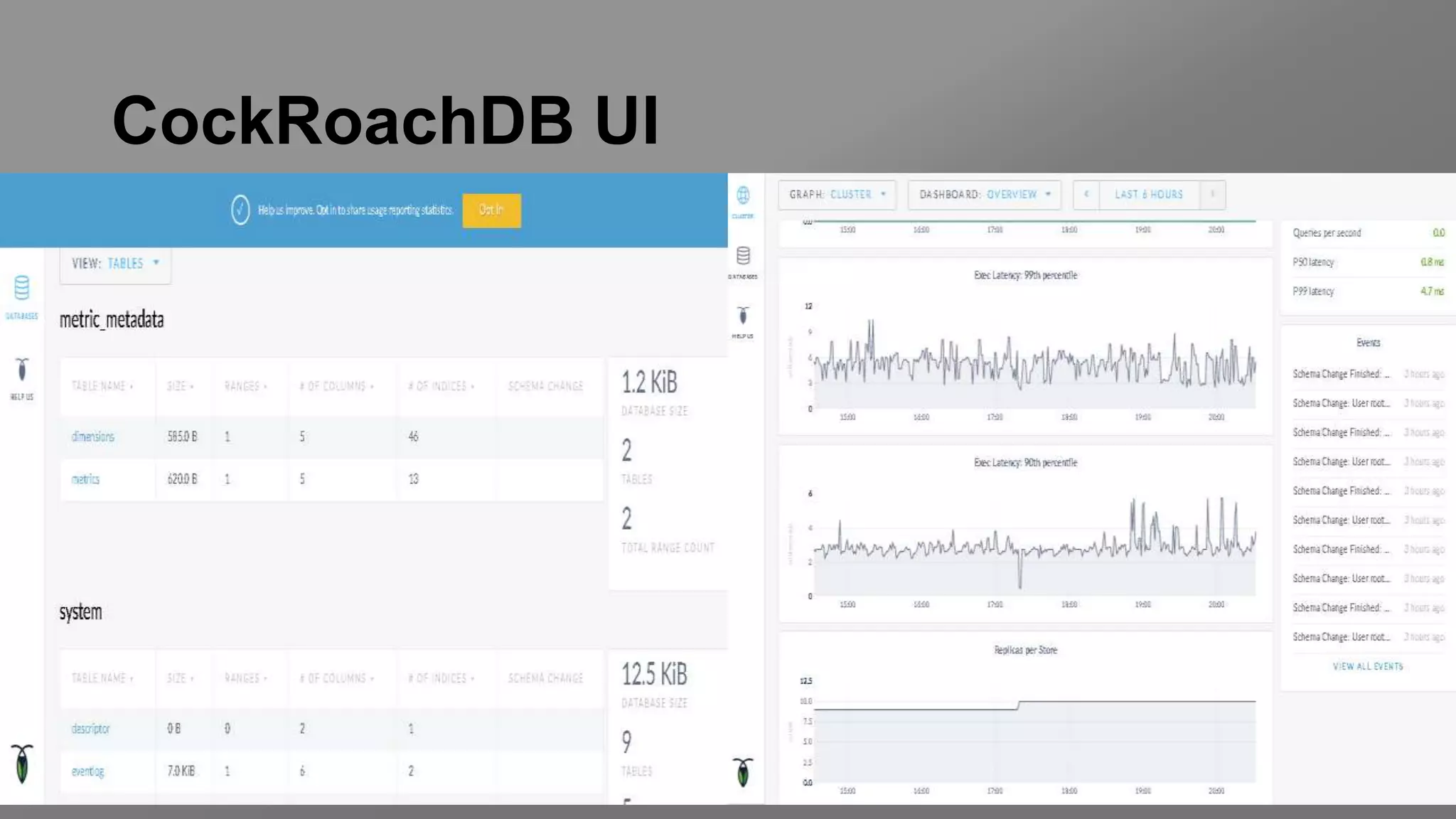

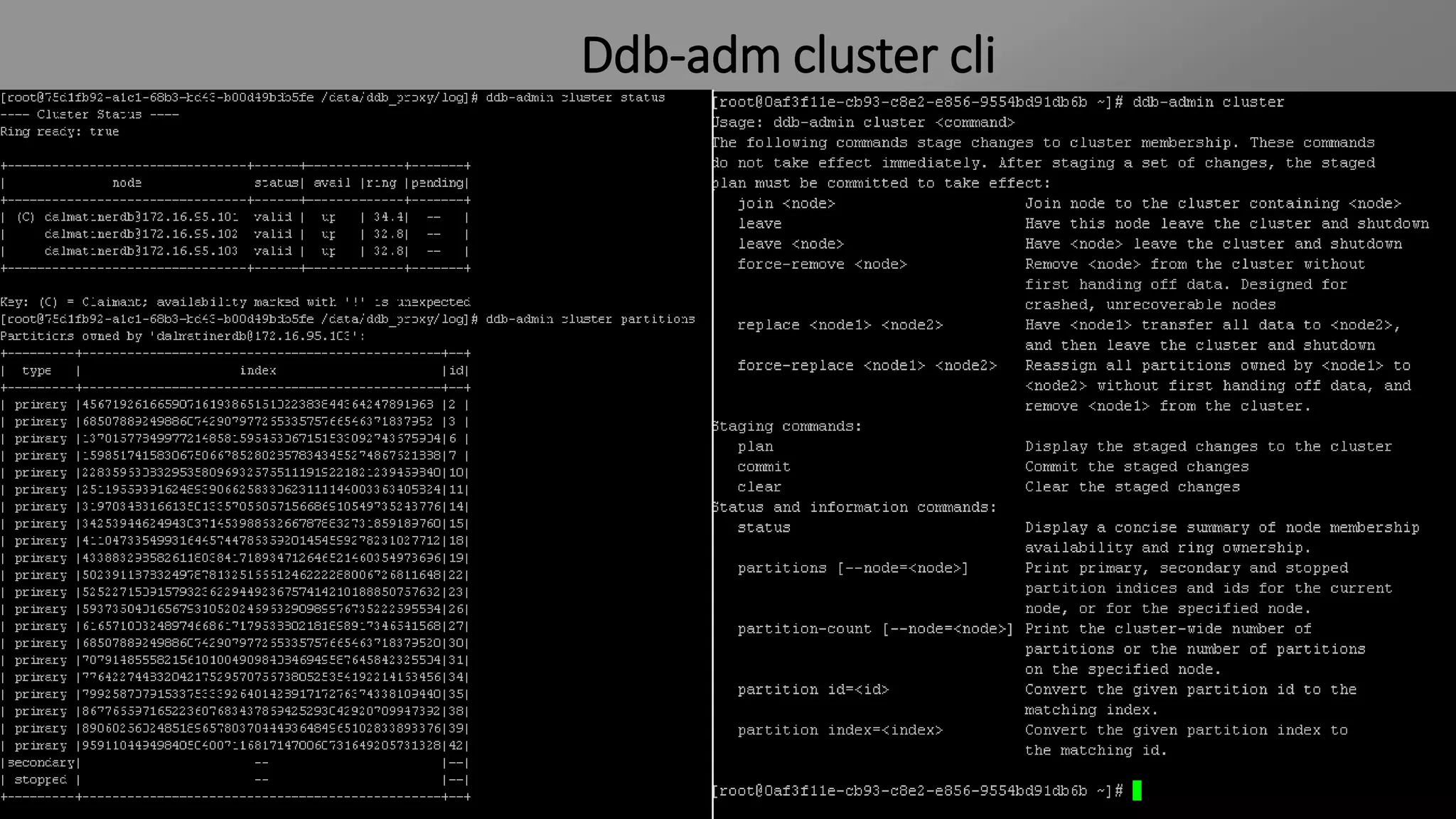

The document outlines a monitoring solution built on DalmatinerDB and CockroachDB, emphasizing scalability, high availability, and integration with various monitoring tools. The architecture comprises several services, including DalmatinerDB, PostgreSQL or CockroachDB for metadata, and DalmatinerFe for user queries. The document also covers the system's operation and configuration, highlighting the benefits of ZFS for data management and the advantages of CockroachDB as a distributed SQL database.
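
PostgreSQL and CockroachDB can both serve as the metadata store largely because CockroachDB speaks the PostgreSQL wire protocol, so a client can often switch between them by changing only the connection URL. The following is a minimal sketch of that idea, not the deck's actual configuration: the function name `metadata_dsn`, the host `db1`, the database `metadata`, and the user `dalmatiner` are hypothetical, and only the default ports (5432 for PostgreSQL, 26257 for CockroachDB) are standard.

```python
from urllib.parse import urlsplit

# Default SQL ports: PostgreSQL listens on 5432, CockroachDB on 26257.
DEFAULT_PORTS = {"postgresql": 5432, "cockroachdb": 26257}


def metadata_dsn(backend: str, host: str, database: str, user: str) -> str:
    """Build a postgresql:// URL for a hypothetical metadata store.

    Both backends use the same URL scheme because CockroachDB is
    wire-compatible with PostgreSQL; only the default port differs.
    """
    if backend not in DEFAULT_PORTS:
        raise ValueError(f"unsupported metadata backend: {backend!r}")
    port = DEFAULT_PORTS[backend]
    return f"postgresql://{user}@{host}:{port}/{database}"


if __name__ == "__main__":
    # Hypothetical host, database, and user names, for illustration only.
    for backend in ("postgresql", "cockroachdb"):
        dsn = metadata_dsn(backend, "db1", "metadata", "dalmatiner")
        print(backend, "->", dsn, "(port", urlsplit(dsn).port, ")")
```

Keeping the backend choice in a single helper like this means the rest of a client would not need to know which of the two databases holds the metadata.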