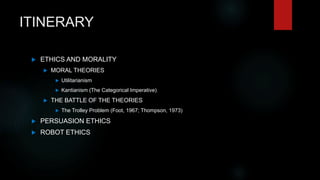

This document discusses the psychology of technology and the ethics of computers and robots. It covers several topics:

- Moral theories such as utilitarianism and Kantianism, and how they apply to dilemmas like the trolley problem.

- Robot ethics and Asimov's Three Laws of Robotics.

- Persuasive technologies and how to ensure they are used ethically.

- Issues raised by autonomous systems, including the point at which robots require their own independent ethics.

- Open questions about when a system becomes an entity with rights, and how to balance human and computer agency.