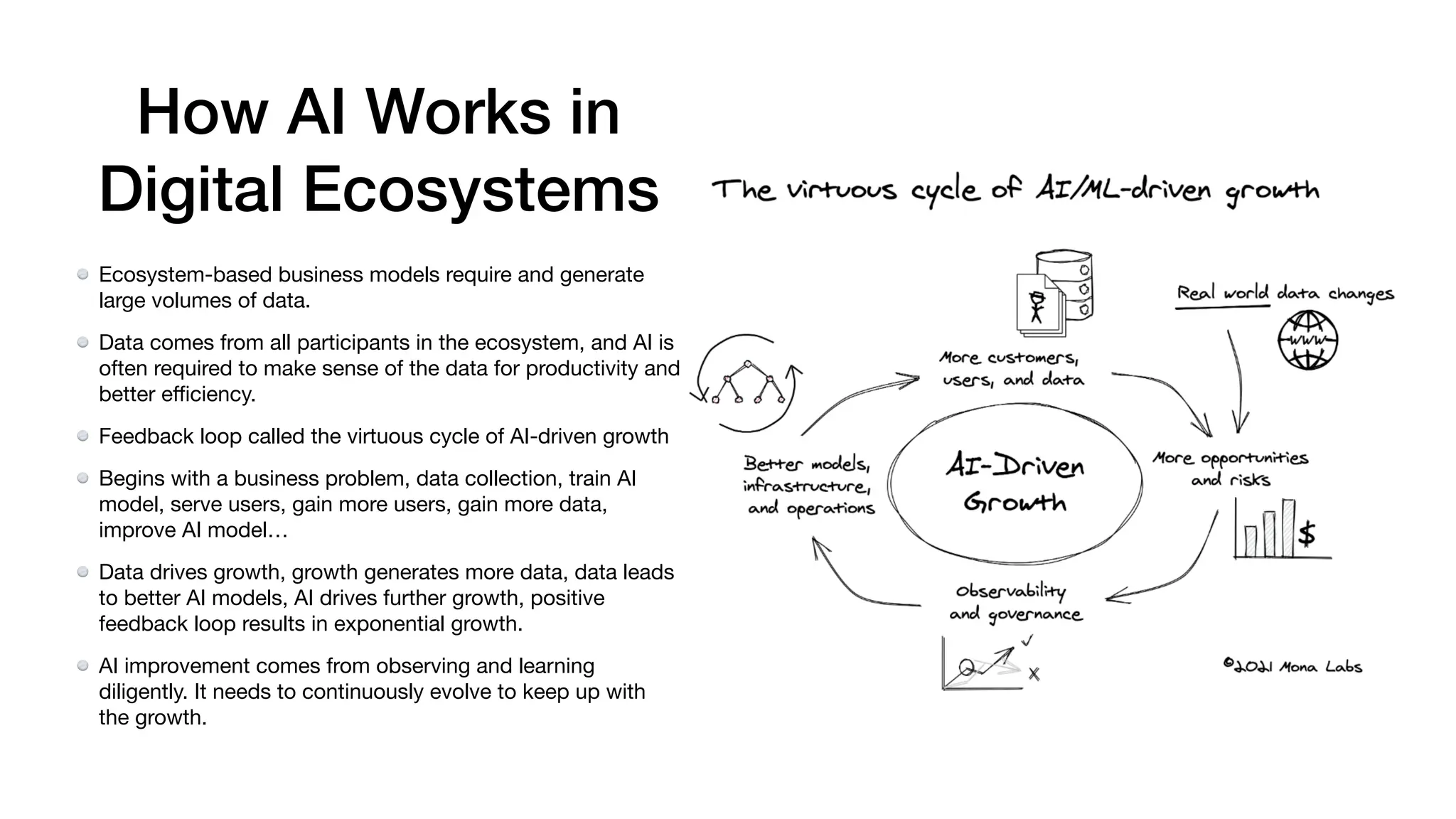

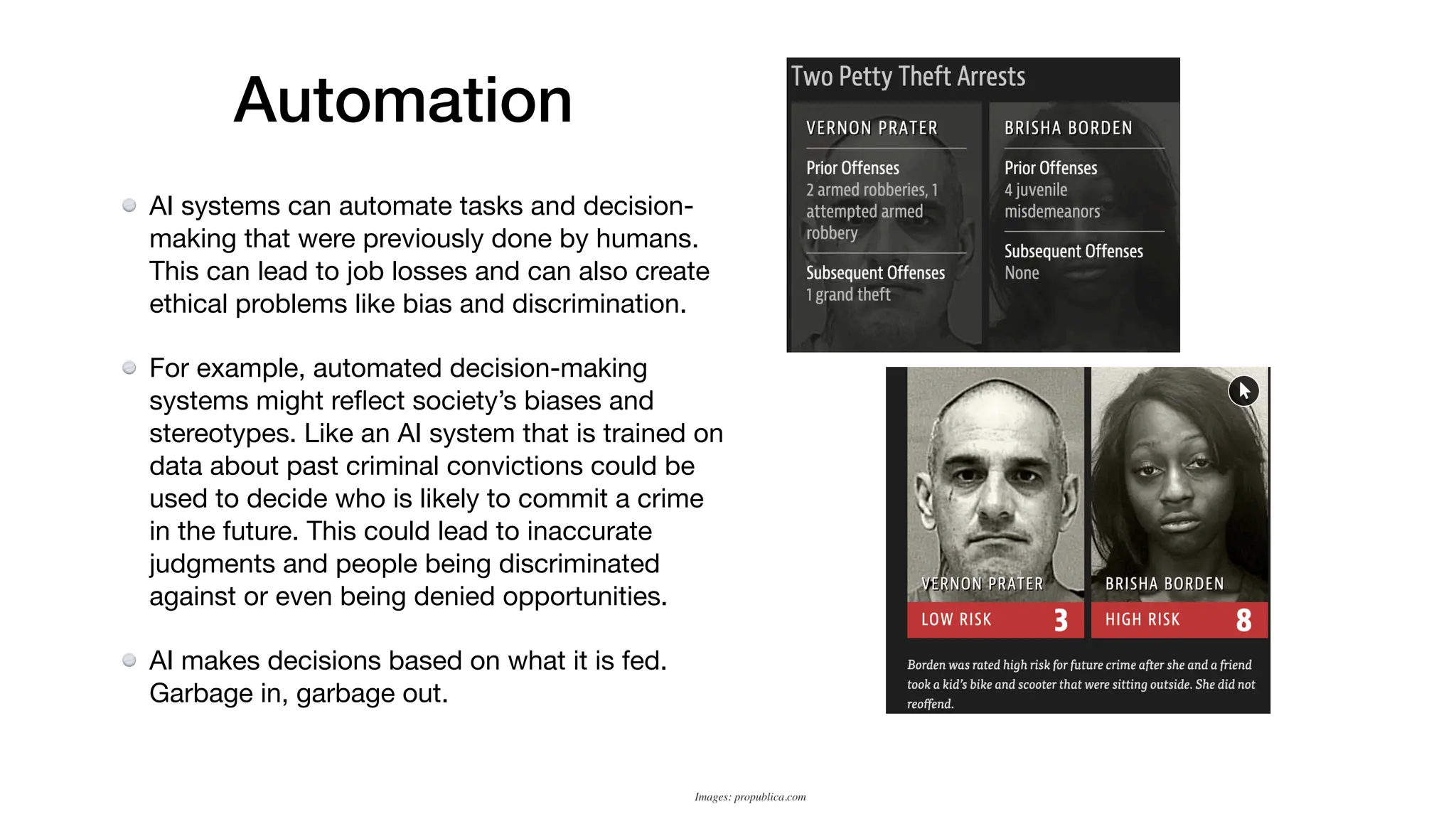

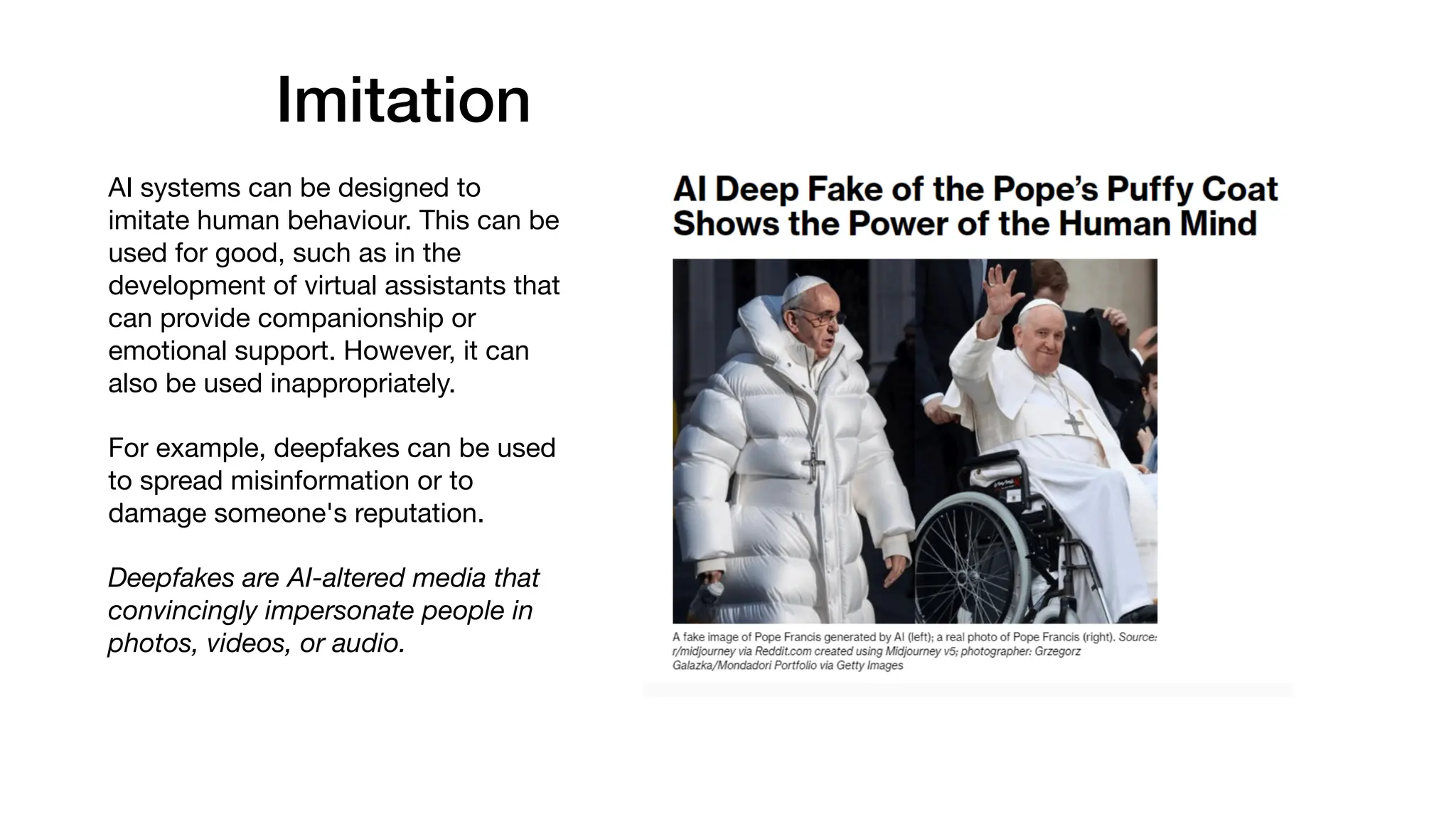

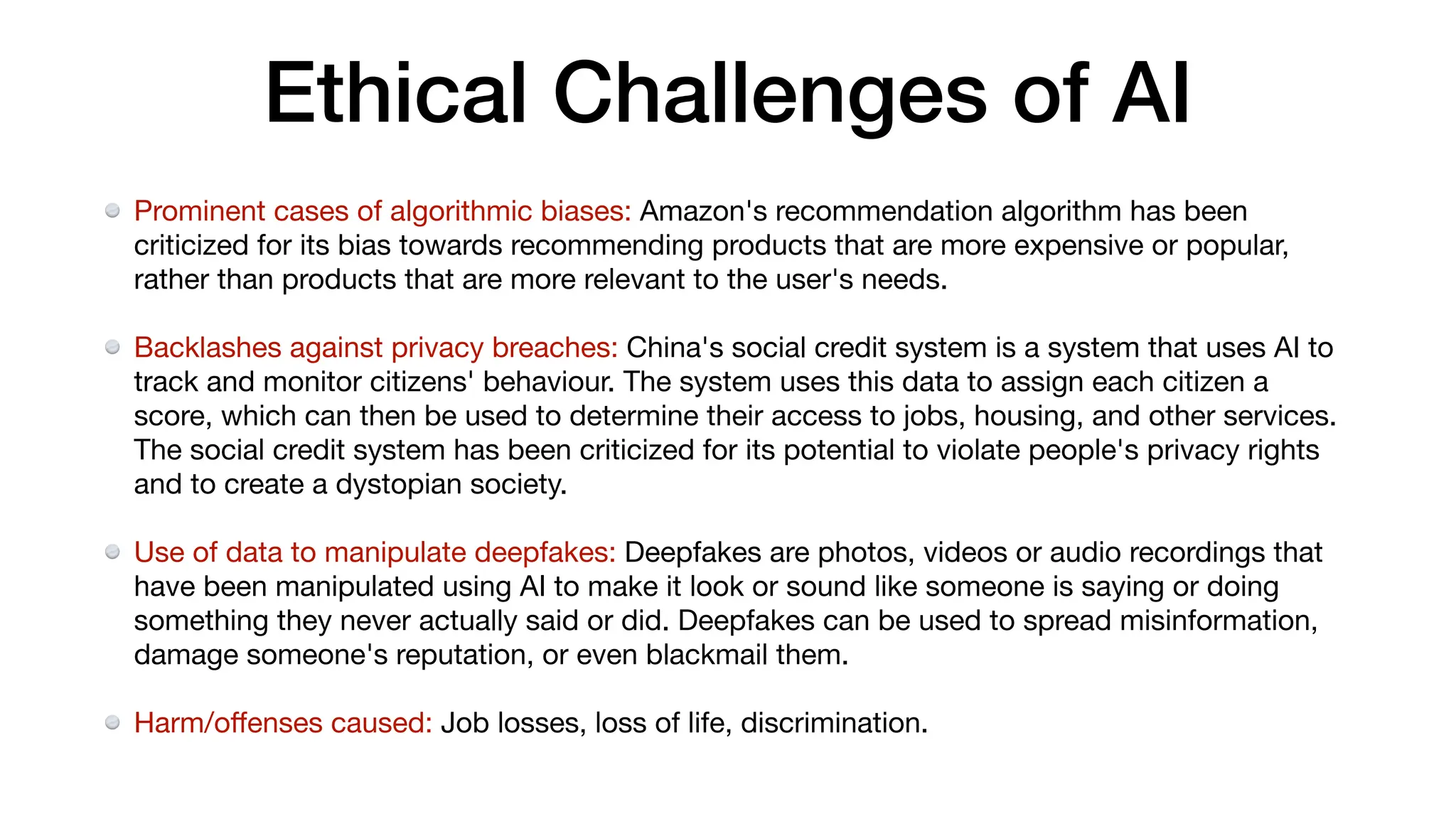

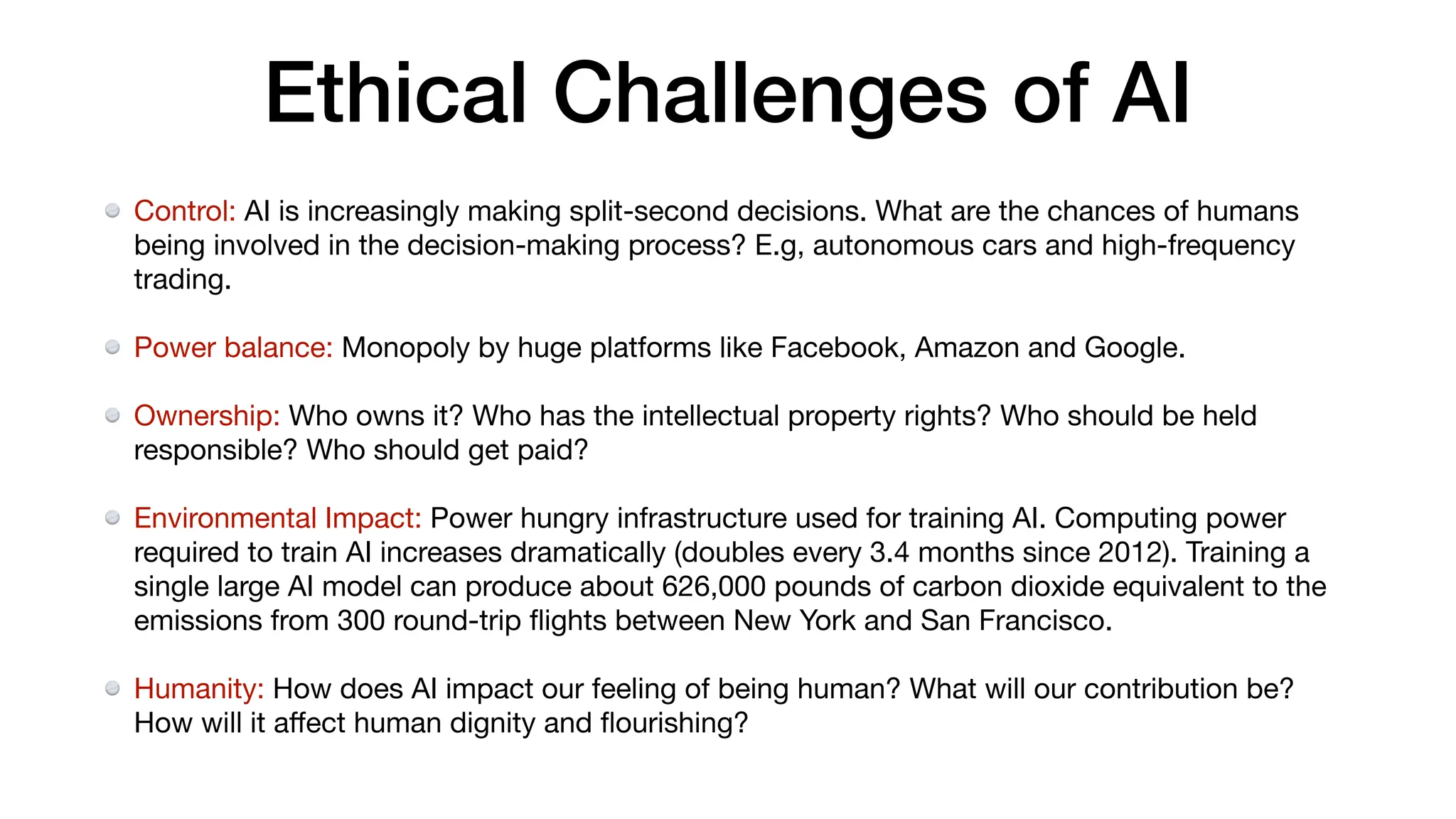

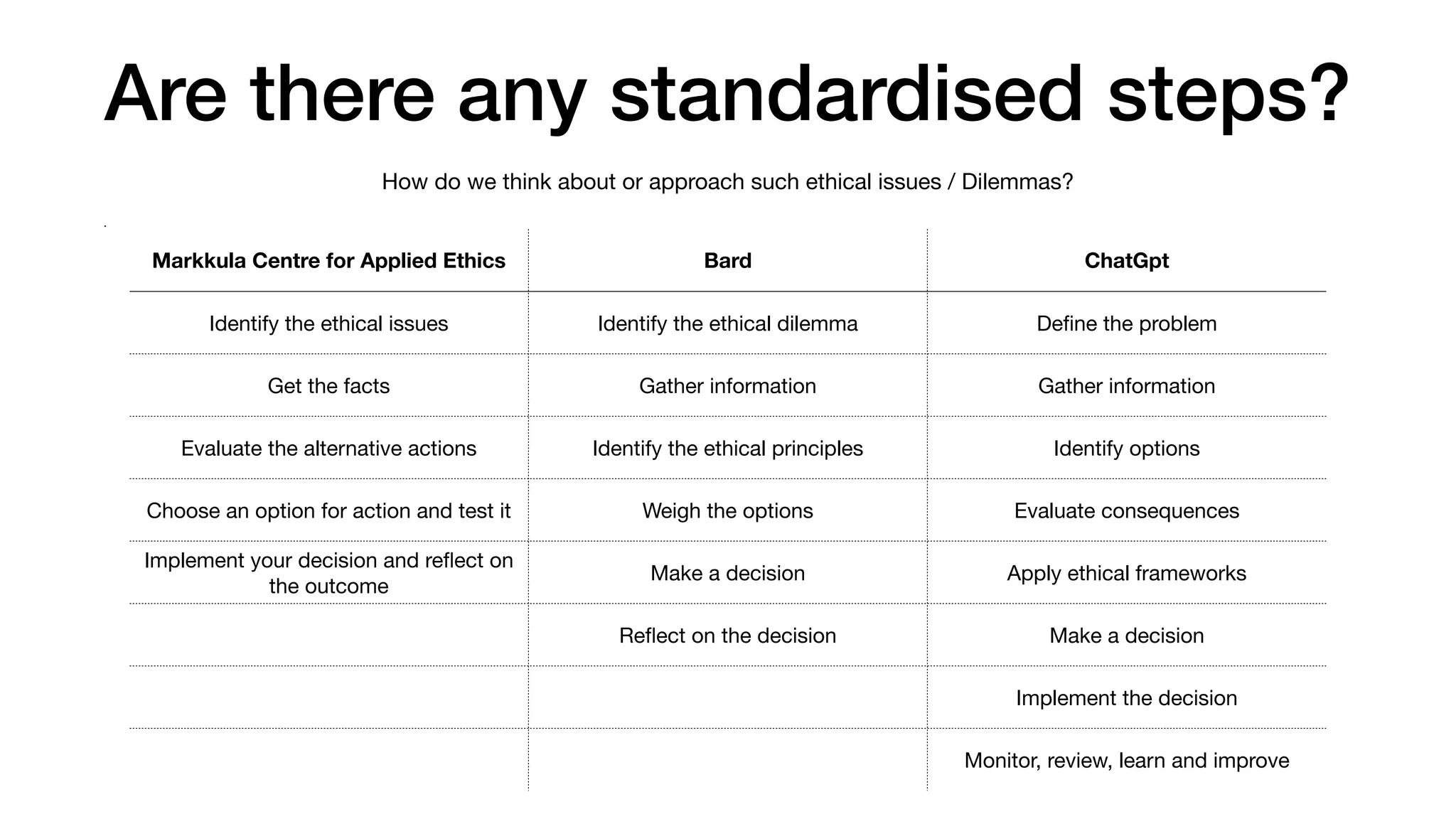

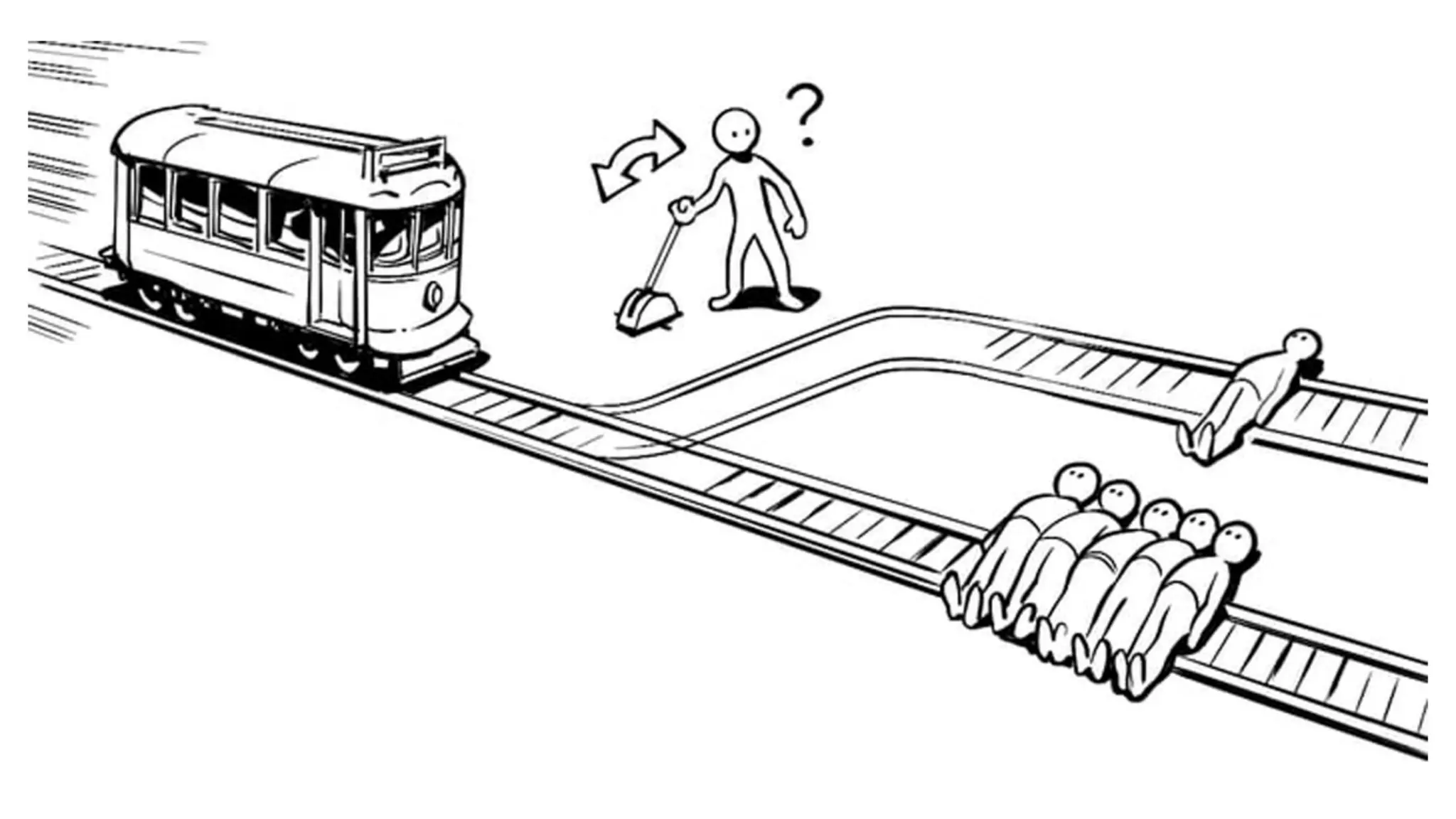

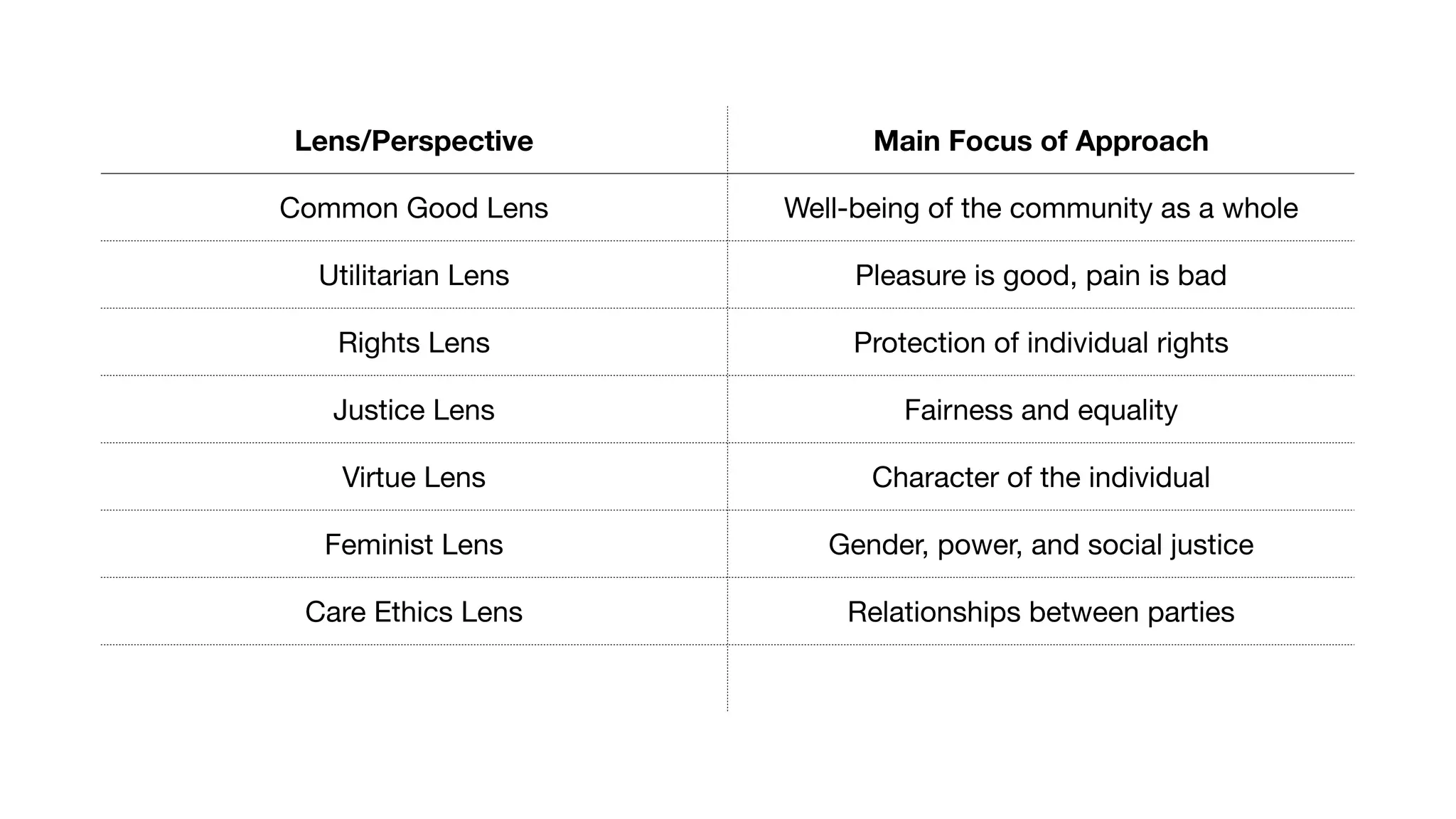

The document discusses the ethics of artificial intelligence (AI) within digital ecosystems, emphasizing AI's role in data-driven growth and in enhancing user experience. It addresses the ethical challenges associated with AI, including privacy, bias, discrimination, and the responsibility of developers to align AI technologies with societal values. It proposes several ethical frameworks to guide decision-making in AI applications, which together highlight the importance of considering consequences, rights, fairness, and the well-being of individuals and communities.