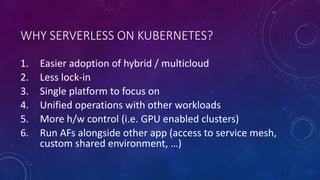

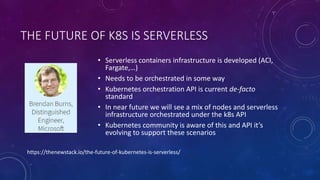

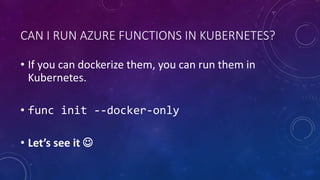

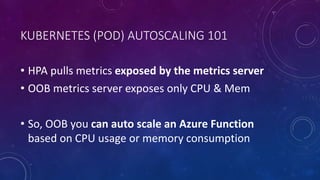

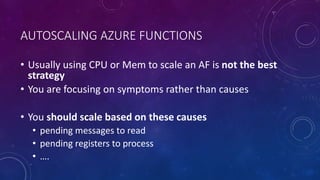

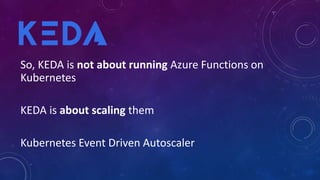

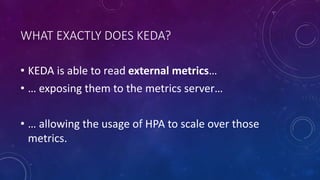

The document discusses running Azure Functions on Kubernetes with KEDA (Kubernetes Event-driven Autoscaling) to host serverless applications. This approach supports hybrid and multicloud environments and reduces vendor lock-in. The main challenge it addresses is auto-scaling the functions correctly. Kubernetes' built-in Horizontal Pod Autoscaler scales on CPU or memory usage, but an event-driven function's load comes from the events that trigger it, such as messages waiting in a queue. KEDA closes this gap by scaling deployments on external metrics from those event sources. Each Azure Function therefore scales according to the actual cause of its load, which makes Kubernetes a better orchestrator for serverless architectures.
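As a rough illustration of scaling on an external metric instead of CPU or memory, the sketch below models how a replica count could be derived from a queue's length. It is a simplification under stated assumptions: the metric is the number of pending queue messages, each replica is assumed to handle about `target_per_replica` of them, and the function name and parameters are hypothetical. It is not KEDA's implementation; in practice KEDA activates a deployment from zero and hands the 1-to-N range to the Horizontal Pod Autoscaler.

```python
import math


def desired_replicas(queue_length: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Hypothetical, simplified model of event-driven scaling.

    Assumes an "average value" style target: each replica should process
    roughly `target_per_replica` pending messages.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if queue_length <= 0:
        # No pending events: fall back to the minimum, which may be zero
        # (the "scale to zero" behaviour that serverless workloads rely on).
        return min_replicas
    # One replica per `target_per_replica` messages, rounded up.
    replicas = math.ceil(queue_length / target_per_replica)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, replicas))


if __name__ == "__main__":
    for pending in (0, 1, 12, 1000):
        print(pending, "messages ->", desired_replicas(pending, 5), "replicas")
```

With a target of 5 messages per replica, an empty queue yields 0 replicas, 12 messages yield 3, and a backlog of 1000 is capped at the maximum of 100. The point of the design is that the scaling input is the event backlog itself, so a function that is waiting on I/O with low CPU usage still scales out when work piles up.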