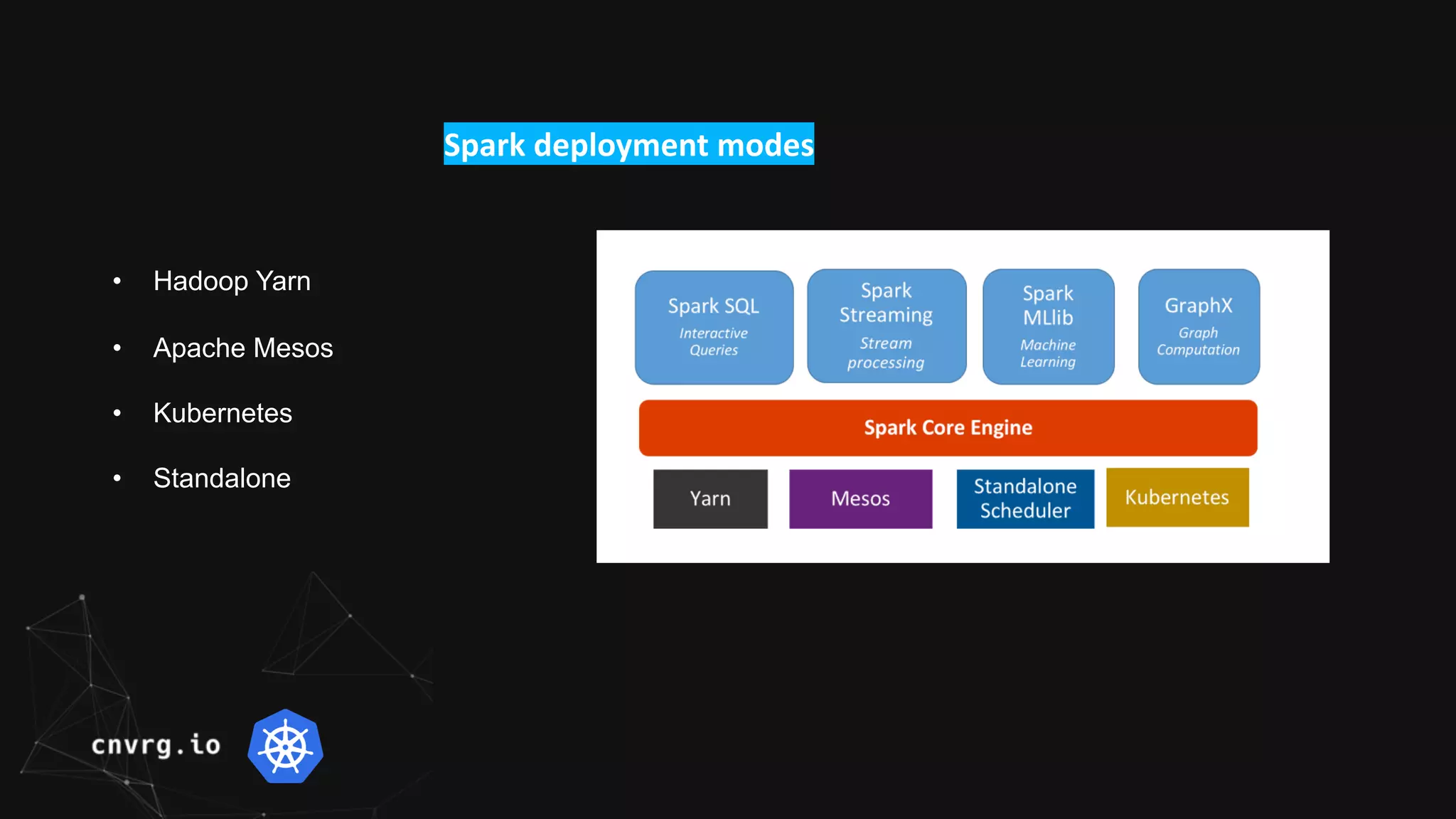

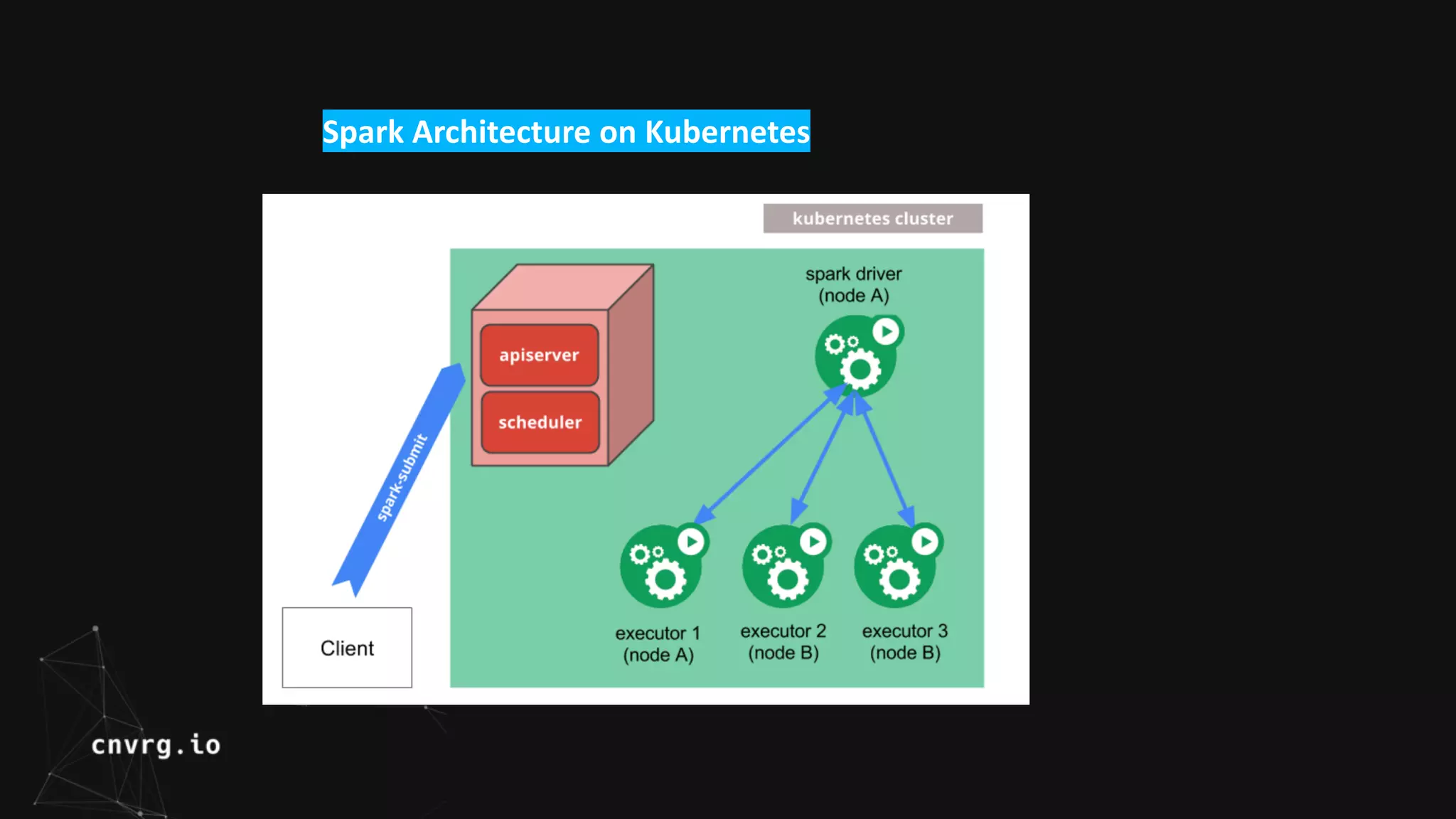

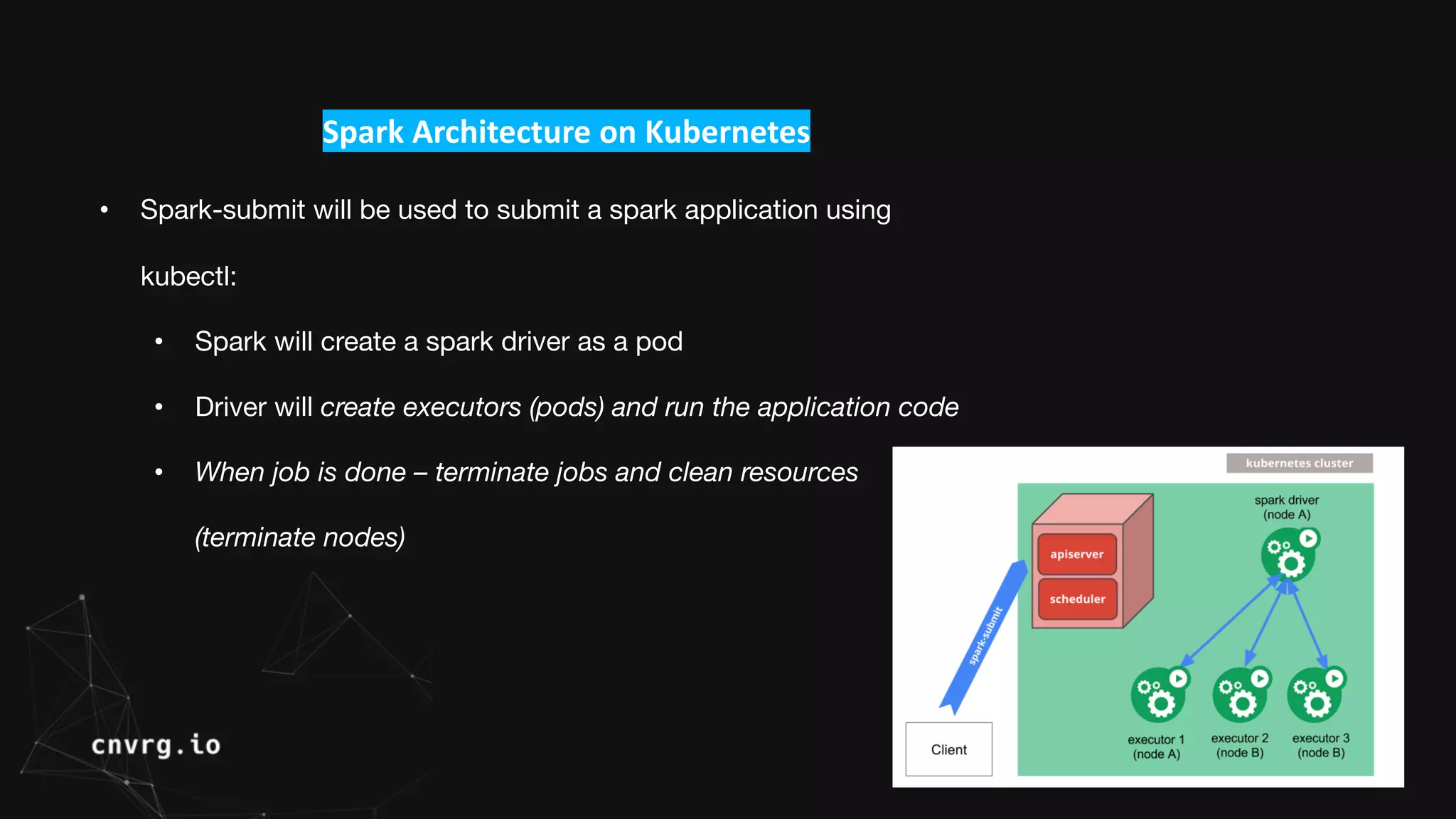

Spark is a unified analytics engine for large-scale data processing that can run on several cluster managers, including Kubernetes. On Kubernetes, the Spark driver runs inside a pod and launches its executors as pods too, so Kubernetes manages their lifecycle and Spark applications can take advantage of Kubernetes features such as auto-scaling. The workshop demonstrated submitting a Spark Pi job directly to a Kubernetes cluster. It then discussed how CNVRG can help manage, monitor, and automate Spark workloads on Kubernetes through features such as reproducible jobs and a unified dashboard.
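As a rough illustration of the submission step, here is a Python sketch that assembles the `spark-submit` arguments for running the bundled SparkPi example in cluster mode, using the flags described in Spark's "Running on Kubernetes" guide. The API server URL, container image tag, examples-jar path, and `spark` service account are assumptions to replace with your own values; this is not the exact command used in the workshop. Passing `dynamic_allocation=True` swaps the fixed executor count for dynamic allocation with shuffle tracking, which is one way Spark can scale its executor pods up and down on Kubernetes.

```python
import shlex
from typing import Dict, List, Optional


def build_spark_pi_submit(
    api_server: str,
    image: str,
    examples_jar: str,
    executors: int = 2,
    slices: int = 100,
    service_account: str = "spark",  # assumption: this account exists with pod-create rights
    dynamic_allocation: bool = False,
    extra_conf: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Build a spark-submit argv that runs SparkPi in cluster mode on Kubernetes."""
    conf: Dict[str, str] = {
        # Image used for both the driver pod and the executor pods.
        "spark.kubernetes.container.image": image,
        # Service account the driver uses to create executor pods.
        "spark.kubernetes.authenticate.driver.serviceAccountName": service_account,
    }
    if dynamic_allocation:
        # Kubernetes has no external shuffle service, so shuffle tracking
        # (Spark 3.0+) lets Spark release idle executors safely.
        conf["spark.dynamicAllocation.enabled"] = "true"
        conf["spark.dynamicAllocation.shuffleTracking.enabled"] = "true"
        conf["spark.dynamicAllocation.maxExecutors"] = str(executors)
    else:
        # Fixed number of executor pods.
        conf["spark.executor.instances"] = str(executors)
    conf.update(extra_conf or {})

    argv = [
        "spark-submit",
        "--master", f"k8s://{api_server}",
        "--deploy-mode", "cluster",  # the driver itself runs in a pod
        "--name", "spark-pi",
        "--class", "org.apache.spark.examples.SparkPi",
    ]
    for key, value in conf.items():
        argv += ["--conf", f"{key}={value}"]
    # A local:// URI tells Spark the jar is already inside the container image.
    argv.append(examples_jar)
    # SparkPi's optional argument: the number of slices (partitions) to sample.
    argv.append(str(slices))
    return argv


if __name__ == "__main__":
    # Hypothetical values; check the jar path against the image you use.
    cmd = build_spark_pi_submit(
        api_server="https://k8s.example.com:6443",
        image="apache/spark:3.5.0",
        examples_jar="local:///opt/spark/examples/jars/spark-examples_2.12-3.5.0.jar",
        executors=3,
    )
    print(shlex.join(cmd))
```

To actually submit the job, pass the list to `subprocess.run(cmd, check=True)` on a machine that has `spark-submit` on its PATH and credentials for the cluster. Building an argument list rather than a single shell string avoids quoting problems with `--conf` values.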