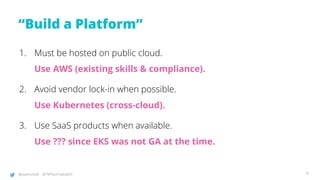

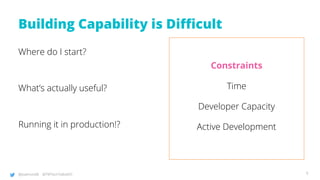

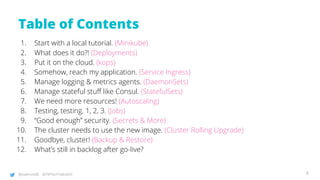

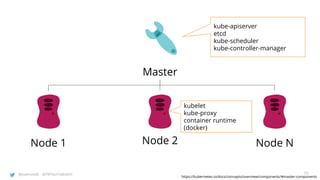

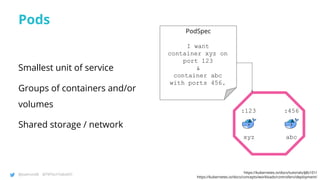

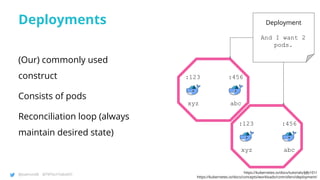

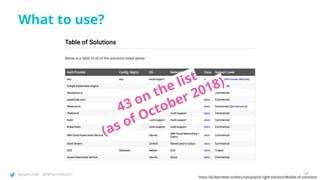

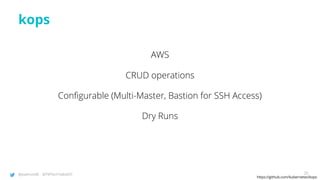

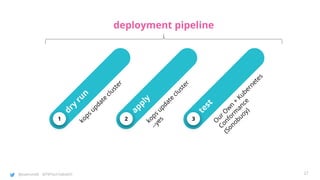

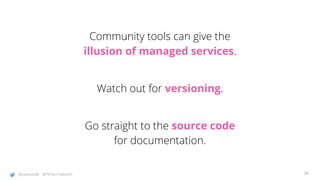

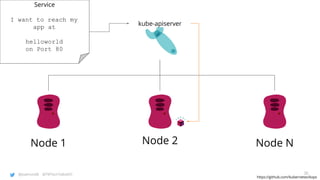

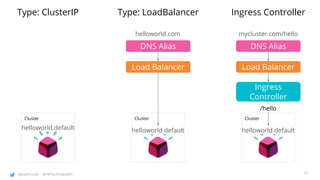

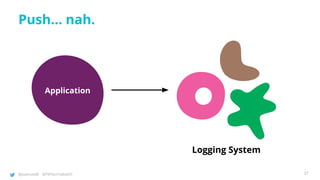

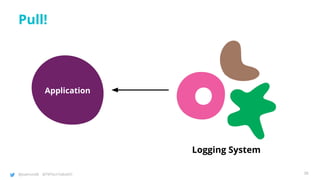

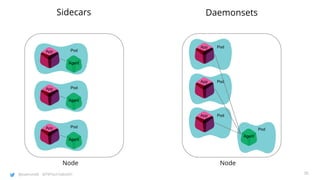

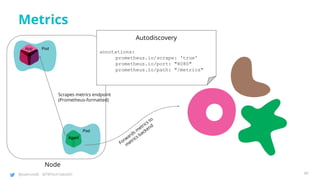

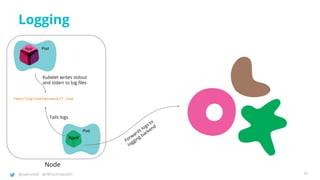

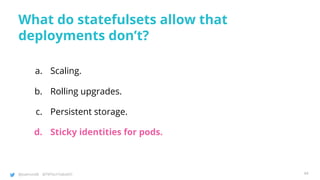

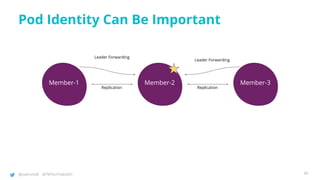

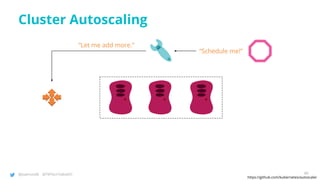

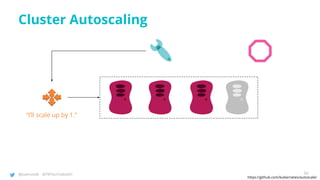

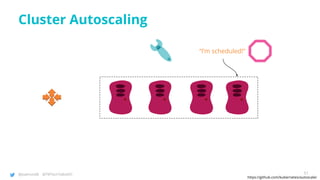

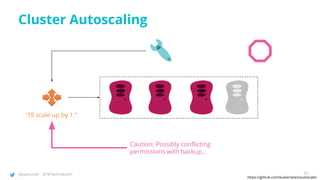

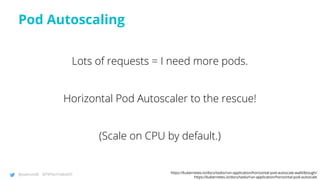

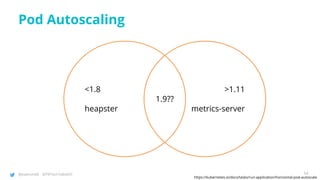

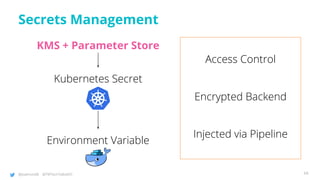

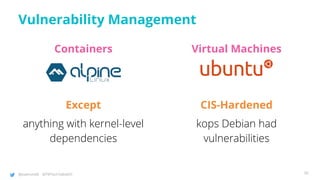

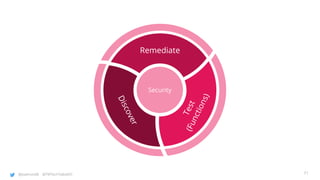

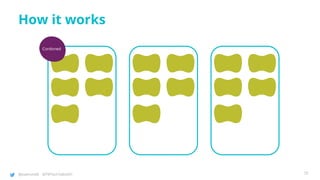

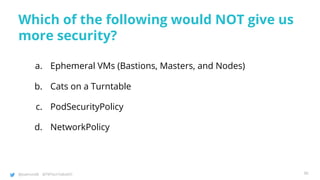

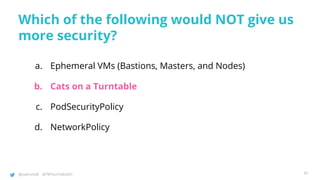

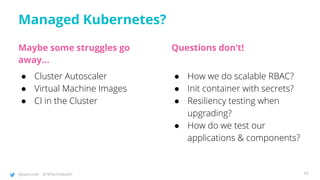

This document walks through building a Kubernetes platform from scratch, with a focus on production-grade capabilities, cloud hosting, and avoiding vendor lock-in. It covers setting up a local cluster, deploying applications, managing logging and metrics, and handling scaling and security. Key tools and concepts include Minikube for local development, Kops for cloud deployment, and the Kubernetes features used to manage containerized applications.
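
To make the "deploying applications" step concrete, here is a minimal sketch of a Kubernetes Deployment manifest built in Python. It is an illustration and is not taken from the slides: the helper `make_deployment`, the application name `hello-web`, and the image `nginx:1.25` are all assumptions chosen for the example.

```python
import json


def make_deployment(name, image, replicas=2, port=80):
    """Build a minimal apps/v1 Deployment manifest as a plain dict.

    Hypothetical helper for illustration; the name, image, replica count,
    and port are placeholders, not values from the original document.
    """
    # The selector must match the pod template labels, so both use this dict.
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Print the manifest as JSON, which kubectl accepts alongside YAML.
    print(json.dumps(make_deployment("hello-web", "nginx:1.25"), indent=2))
```

Assuming the output is saved to a file such as `deployment.json`, it could be applied to a local Minikube cluster or a Kops-managed cloud cluster with `kubectl apply -f deployment.json`. Because the manifest does not depend on the hosting environment, the same file works in both.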