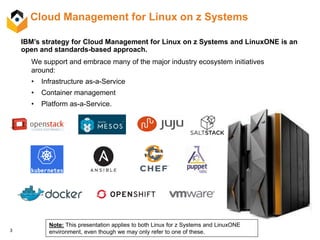

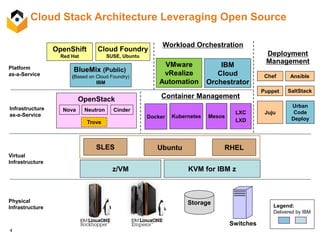

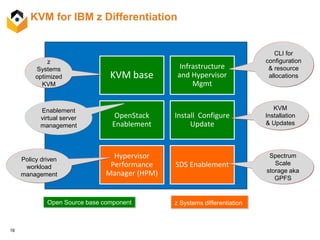

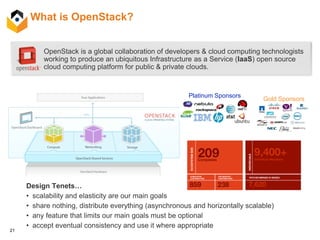

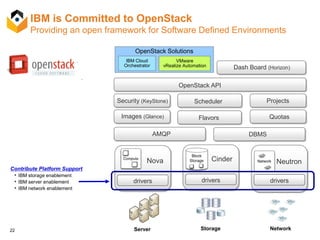

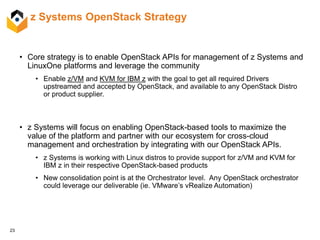

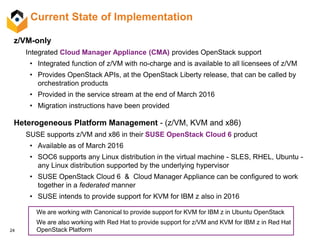

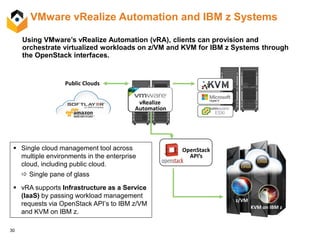

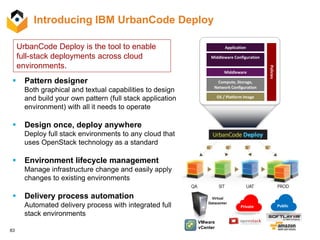

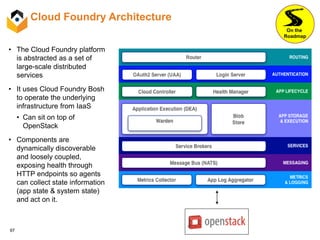

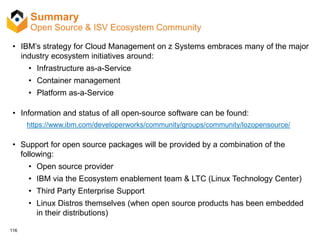

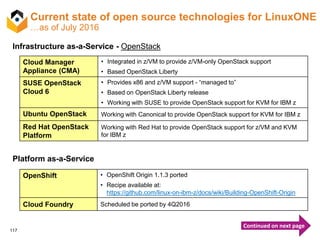

IBM provides an open, standards-based approach to cloud management for Linux on IBM zSystems and IBM LinuxONE. This includes support for Infrastructure as a Service (IaaS) through the open-source OpenStack platform. IBM is committed to OpenStack and contributes drivers and platform support to the upstream OpenStack projects. IBM currently offers an OpenStack-enabled appliance for zSystems that provides the OpenStack APIs at no additional charge. IBM's strategy is to enable the OpenStack APIs on zSystems and LinuxONE platforms so that these systems can take part in cross-cloud management and orchestration.