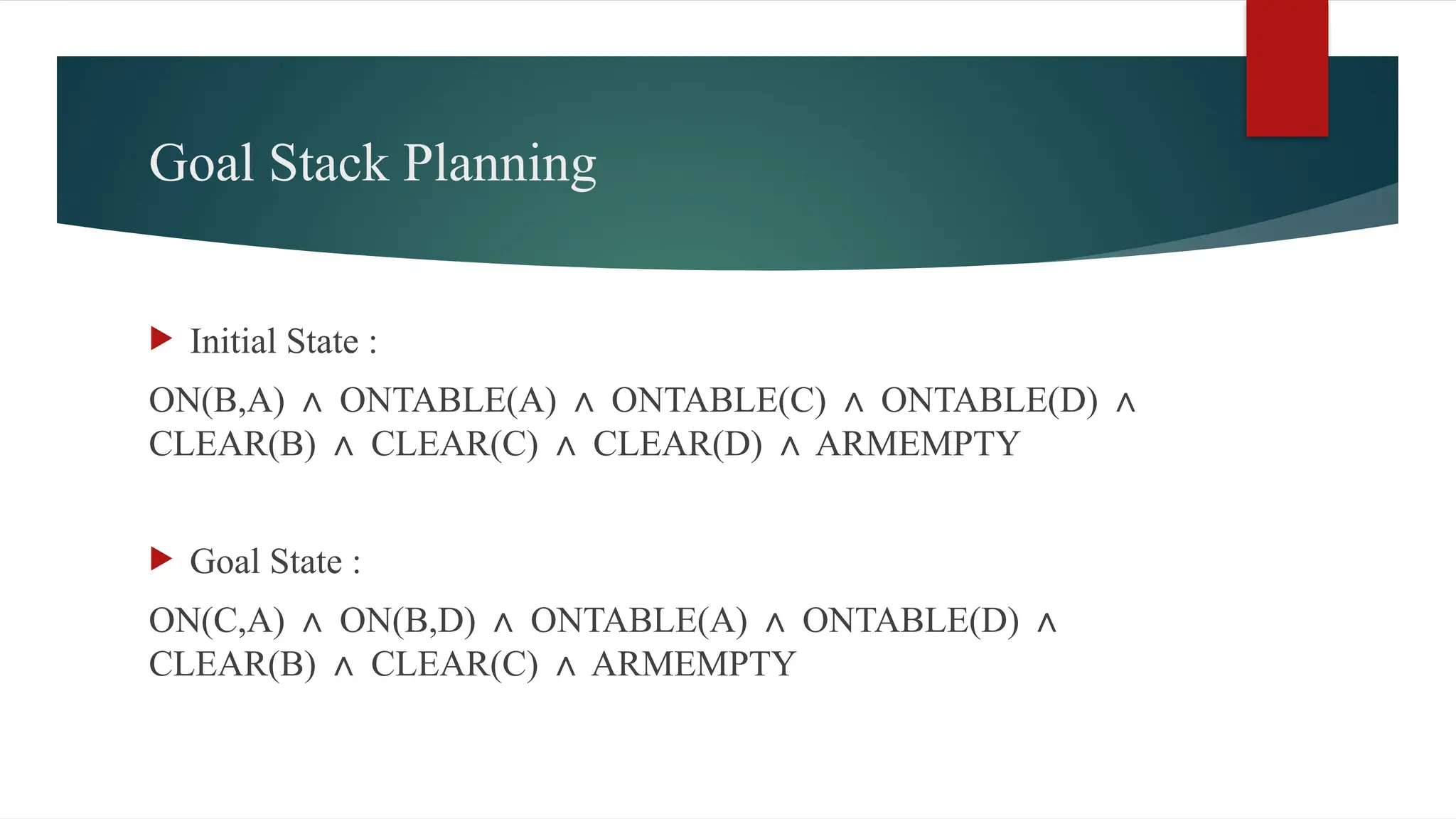

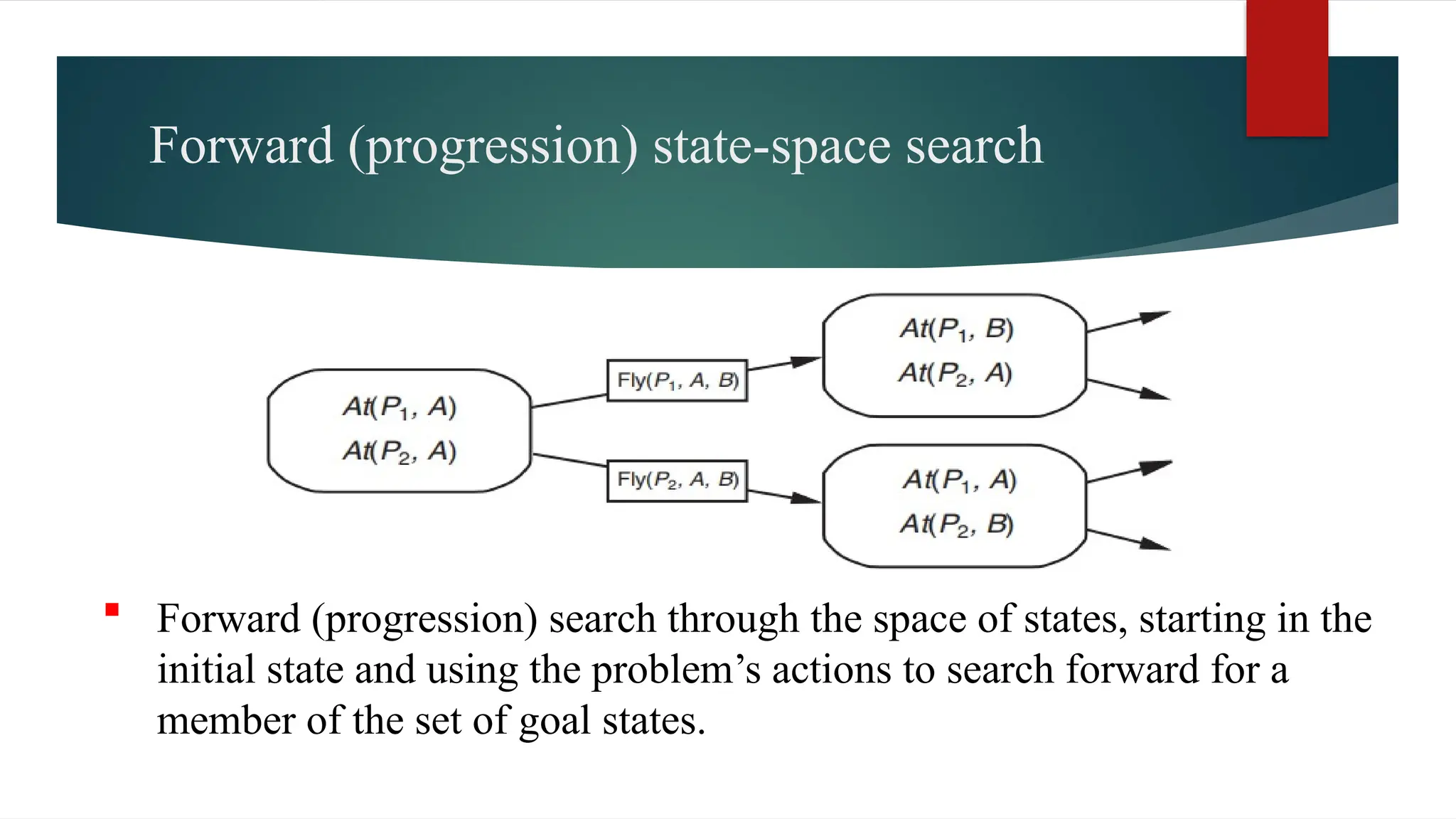

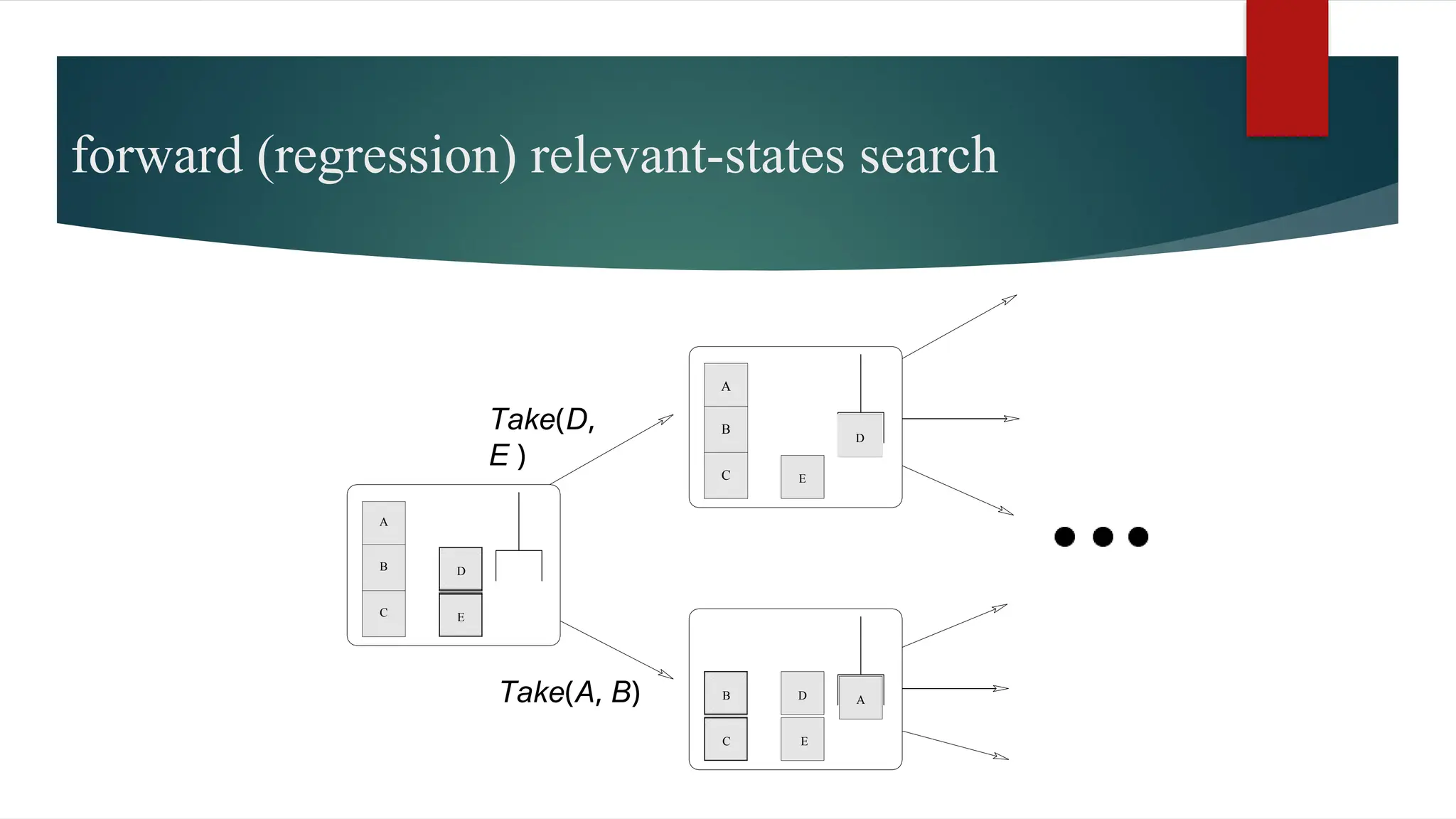

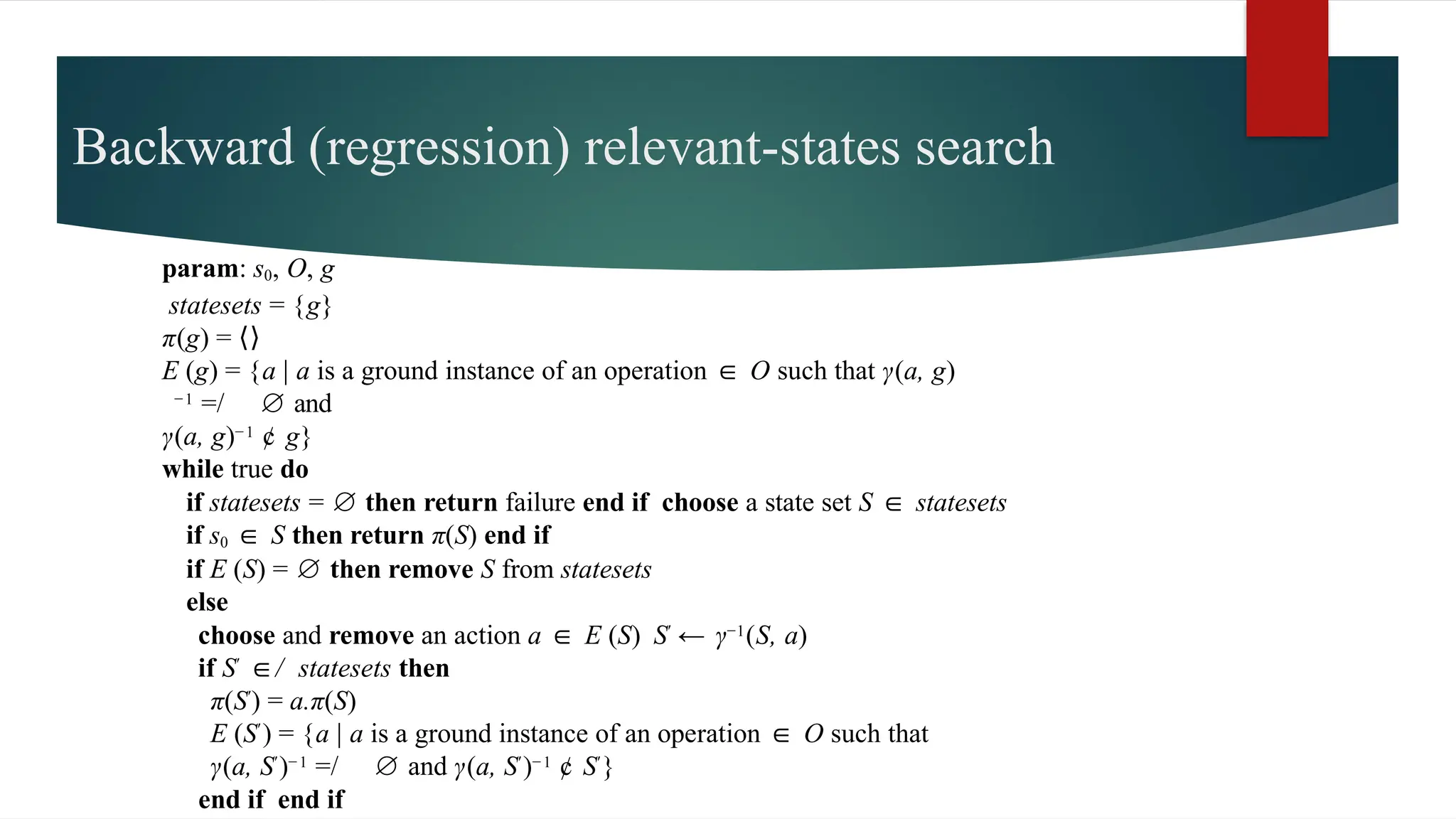

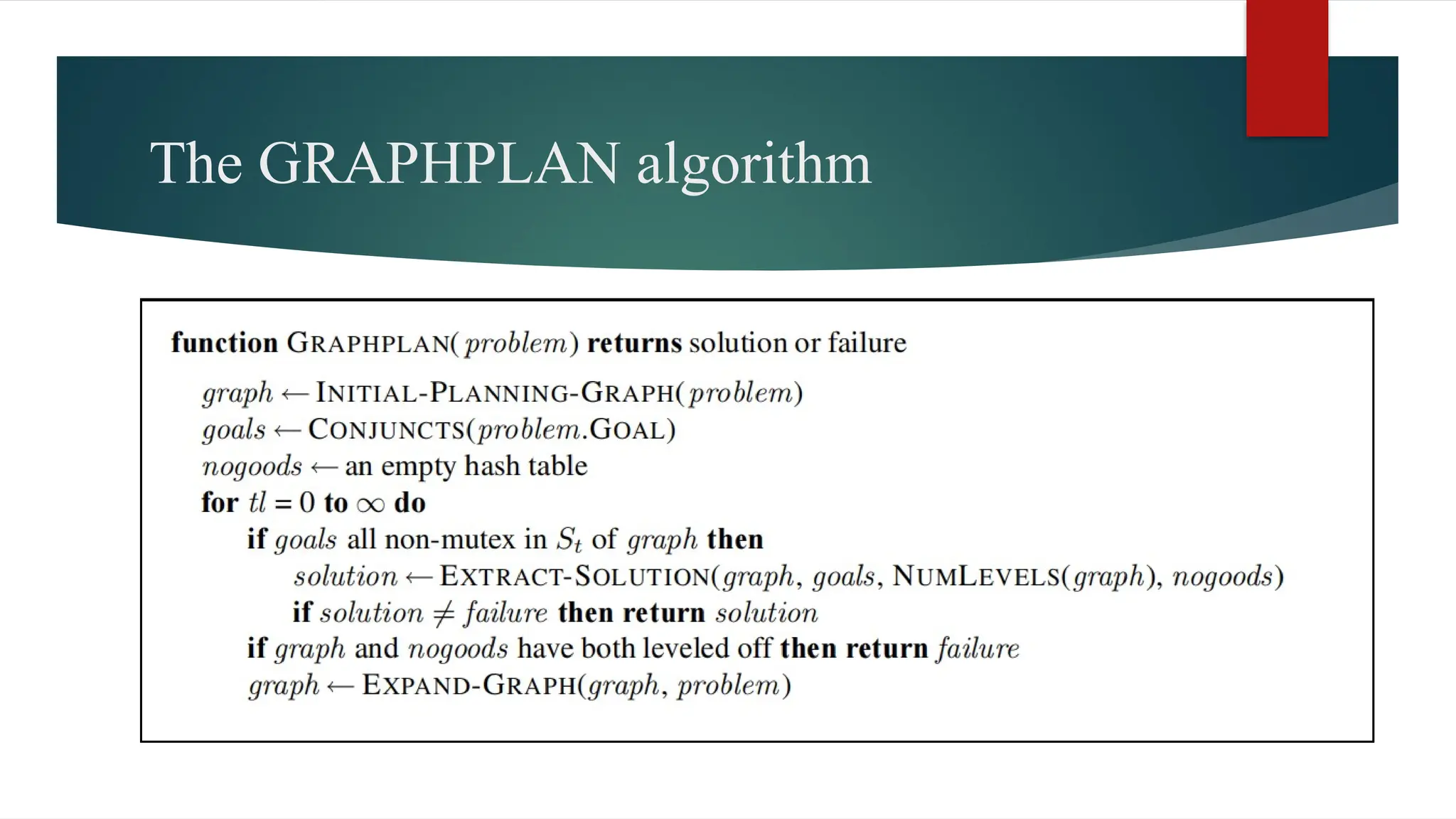

The document covers classical planning in artificial intelligence: choosing a sequence of actions that takes an agent from an initial state to a specified goal. It introduces the blocks world problem and goal stack planning, then presents planning algorithms framed as state-space search. It also discusses heuristics for efficient planning, planning graphs, and the Graphplan algorithm, which is used to determine whether goals are reachable and mutually achievable.
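To make the state-space search view concrete, here is a minimal sketch of forward (progression) search on a small blocks world instance. It assumes a STRIPS-style encoding in which a state is a set of facts and each action has preconditions, add effects, and delete effects. The names `Action`, `blocks_world_actions`, and `forward_search`, and the fact encoding `("on", x, y)` / `("clear", x)`, are illustrative choices and are not taken from the document. Breadth-first search stands in for whatever search strategy the document itself uses.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional, Tuple

Fact = Tuple[str, ...]
State = FrozenSet[Fact]


class Action(NamedTuple):
    """A ground STRIPS-style action (hypothetical encoding)."""
    name: str
    preconditions: FrozenSet[Fact]
    add_effects: FrozenSet[Fact]
    delete_effects: FrozenSet[Fact]


def blocks_world_actions(blocks: List[str]) -> List[Action]:
    """Enumerate every ground move for the given blocks."""
    actions = []
    for b in blocks:
        for x in blocks:
            if x == b:
                continue
            # Take b off block x and put it on the table.
            actions.append(Action(
                f"move_to_table({b},{x})",
                frozenset({("on", b, x), ("clear", b)}),
                frozenset({("on", b, "table"), ("clear", x)}),
                frozenset({("on", b, x)}),
            ))
            # Pick b up from the table and stack it on block x.
            actions.append(Action(
                f"stack({b},{x})",
                frozenset({("on", b, "table"), ("clear", b), ("clear", x)}),
                frozenset({("on", b, x)}),
                frozenset({("on", b, "table"), ("clear", x)}),
            ))
            for y in blocks:
                if y in (b, x):
                    continue
                # Move b directly from block x onto block y.
                actions.append(Action(
                    f"move({b},{x},{y})",
                    frozenset({("on", b, x), ("clear", b), ("clear", y)}),
                    frozenset({("on", b, y), ("clear", x)}),
                    frozenset({("on", b, x), ("clear", y)}),
                ))
    return actions


def forward_search(initial: State, goal: FrozenSet[Fact],
                   actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first search from the initial state; returns a shortest plan."""
    frontier = deque([(initial, [])])
    visited = {initial}
    while frontier:
        state, plan = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return plan
        for action in actions:
            if action.preconditions <= state:  # action is applicable
                successor = (state - action.delete_effects) | action.add_effects
                if successor not in visited:
                    visited.add(successor)
                    frontier.append((successor, plan + [action.name]))
    return None  # goal is unreachable


# Example instance: C sits on A; A and B are on the table.
initial_state = frozenset({
    ("on", "C", "A"), ("on", "A", "table"), ("on", "B", "table"),
    ("clear", "C"), ("clear", "B"),
})
goal_facts = frozenset({("on", "A", "B"), ("on", "B", "C")})

if __name__ == "__main__":
    print(forward_search(initial_state, goal_facts,
                         blocks_world_actions(["A", "B", "C"])))
```

Because the search is breadth-first, the returned plan has the fewest possible actions, but the number of visited states grows quickly with the number of blocks. That growth is one reason heuristics and planning graphs matter for larger problems.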