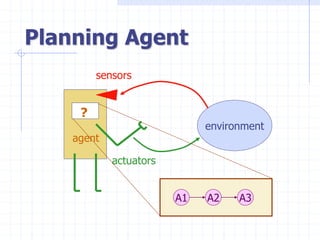

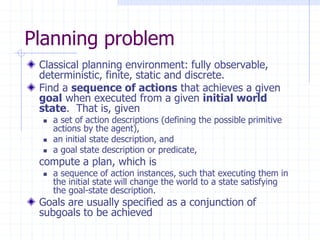

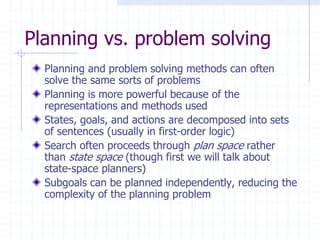

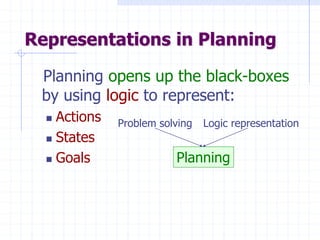

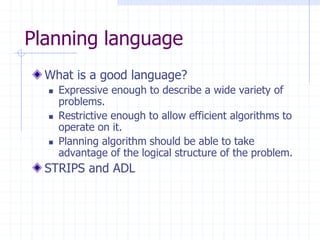

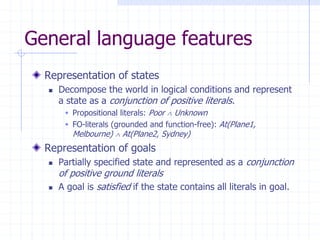

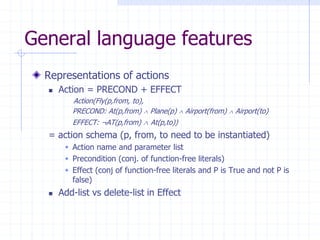

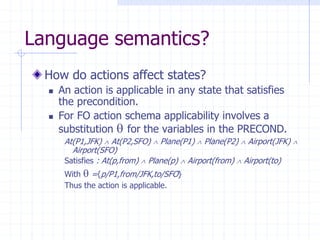

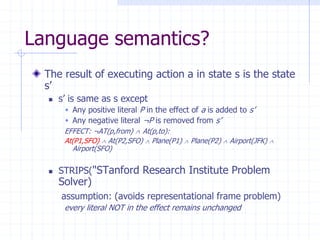

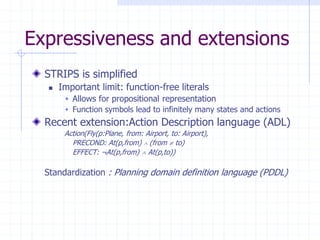

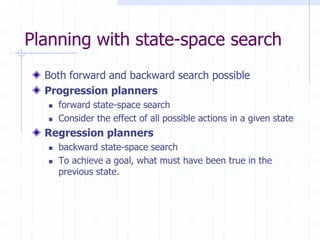

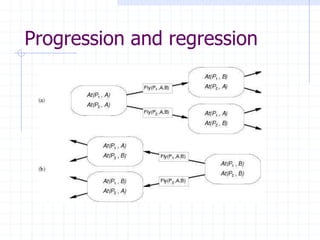

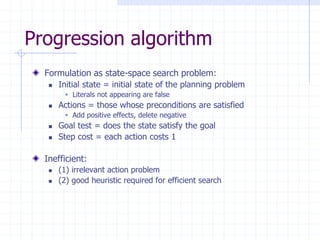

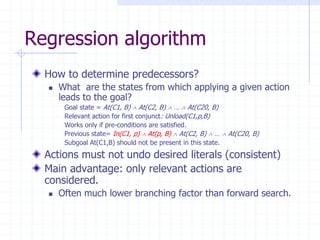

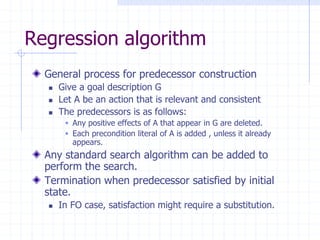

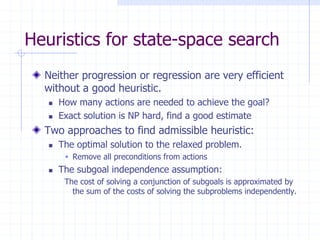

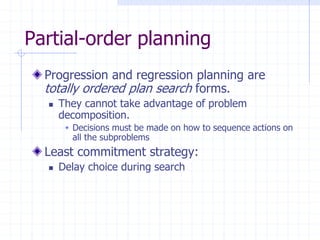

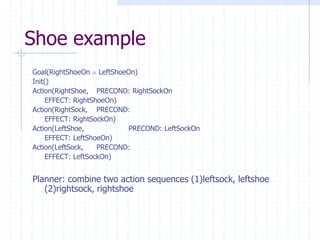

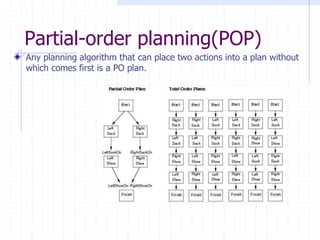

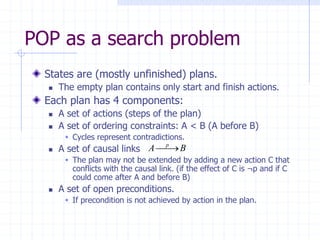

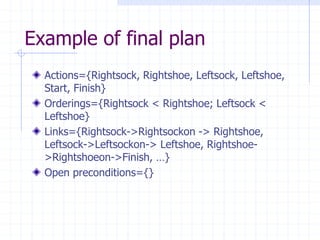

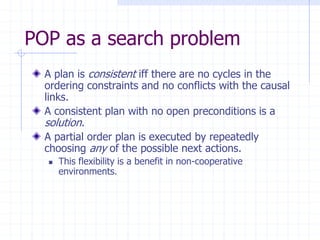

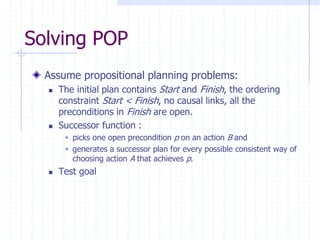

The document discusses planning and problem-solving techniques in artificial intelligence. It covers classical planning problems, planning languages such as STRIPS and PDDL, and two families of planning approaches: state-space planning, using either progression (forward search from the initial state) or regression (backward search from the goal), and partial-order planning. State-space planners search the space of world states by applying actions, whereas partial-order planners search the space of partially ordered plans, delaying commitments about action ordering until they are needed. Representing a problem in a logical planning language exposes the structure of its actions (their preconditions and effects), which allows more powerful techniques than general-purpose problem solving.
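To make the idea of progression concrete, here is a minimal sketch of a STRIPS-style forward state-space planner. It is not taken from the slides: the `Action` type, the function names, and the tiny two-block domain are hypothetical and chosen only for illustration. States are sets of ground facts, an action is applicable when its preconditions hold in the state, and breadth-first search stands in for whatever search strategy a real planner would use.

```python
from collections import deque
from typing import FrozenSet, Iterable, List, NamedTuple, Optional


class Action(NamedTuple):
    """A ground STRIPS-style action (hypothetical representation)."""
    name: str
    preconditions: FrozenSet[str]
    add_effects: FrozenSet[str]
    delete_effects: FrozenSet[str]


def applicable(state: FrozenSet[str], action: Action) -> bool:
    # An action can be applied when all of its preconditions hold in the state.
    return action.preconditions <= state


def apply_action(state: FrozenSet[str], action: Action) -> FrozenSet[str]:
    # Progression: remove the delete effects, then add the add effects.
    return (state - action.delete_effects) | action.add_effects


def progression_plan(initial: Iterable[str],
                     goal: Iterable[str],
                     actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first forward search; returns action names or None."""
    start = frozenset(initial)
    goal_facts = frozenset(goal)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, plan = frontier.popleft()
        if goal_facts <= state:  # every goal fact holds in this state
            return plan
        for action in actions:
            if applicable(state, action):
                successor = apply_action(state, action)
                if successor not in visited:
                    visited.add(successor)
                    frontier.append((successor, plan + [action.name]))
    return None  # the reachable state space contains no goal state


# Assumed toy domain: block A starts on block B; the goal is B on A.
ACTIONS = [
    Action("move_A_from_B_to_table",
           frozenset({"on(A,B)", "clear(A)"}),
           frozenset({"on(A,table)", "clear(B)"}),
           frozenset({"on(A,B)"})),
    Action("move_B_from_table_to_A",
           frozenset({"on(B,table)", "clear(B)", "clear(A)"}),
           frozenset({"on(B,A)"}),
           frozenset({"on(B,table)", "clear(A)"})),
    Action("move_A_from_table_to_B",
           frozenset({"on(A,table)", "clear(A)", "clear(B)"}),
           frozenset({"on(A,B)"}),
           frozenset({"on(A,table)", "clear(B)"})),
]

INITIAL = {"on(A,B)", "on(B,table)", "clear(A)"}
plan = progression_plan(INITIAL, {"on(B,A)"}, ACTIONS)
```

In this toy domain `plan` comes out as the two-step sequence that first moves A to the table and then stacks B on A. A regression planner would instead start from the goal facts and work backward through actions whose effects achieve them, and a partial-order planner would leave independent steps unordered rather than committing to one sequence.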